Comments (3)

It took me a moment to get my hands on that book to make sure I understand what you are referring to. This is quite a different situation, since the equations in that chapter do not refer to spiking networks, but to continuous interactions between non-spiking neurons (the same methods could also be applied to population dynamics). Such interactions can be simulated in Brian, but you cannot have delays for continuous interactions (see #1098 and #467). However, you can easily implement what he calls a "multistage delay", i.e. an approximation of a delayed connection by one or more additional variables.
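To make the idea concrete outside of Brian, here is a small plain-Python sketch (not taken from the book). It assumes each delay stage is a first-order variable of the form dx/dt = (-x + upstream)/tau, chains `n_stages` of them with tau = total_delay / n_stages, and measures when the last stage reaches half of a step input. The function name `half_rise_time` and all parameter values are made up for illustration.

```python
def half_rise_time(n_stages, total_delay=10.0, dt=0.01, t_max=50.0):
    """Return the time (ms) at which the last stage of a chain of first-order
    stages reaches 0.5 in response to a unit step switched on at t = 0.

    Each stage follows dx/dt = (-x + upstream) / tau with
    tau = total_delay / n_stages, integrated with forward Euler.
    """
    tau = total_delay / n_stages
    stages = [0.0] * n_stages
    n_steps = int(round(t_max / dt))
    for step in range(1, n_steps + 1):
        upstream = 1.0  # the step input drives the first stage
        updated = []
        for x in stages:
            updated.append(x + dt / tau * (-x + upstream))
            upstream = x  # forward Euler: the next stage sees this stage's old value
        stages = updated
        if stages[-1] >= 0.5:
            return step * dt
    return None  # threshold not reached within t_max


for n in (1, 4, 16):
    print(n, half_rise_time(n))
```

With the total lag held fixed, adding stages moves the half-rise time closer to the nominal delay, so the chain behaves more and more like a pure delay, at the cost of one extra variable per stage.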

E.g., the equations 4.15 from the book

Can be written as:

eqs = '''

dE/dt = 1/(10*ms) * (-E - 2*Delta) : 1

dI/dt = 1/(50*ms) * (-I + 8*E) : 1

dDelta/dt = 1/delta * (-Delta + I) : 1

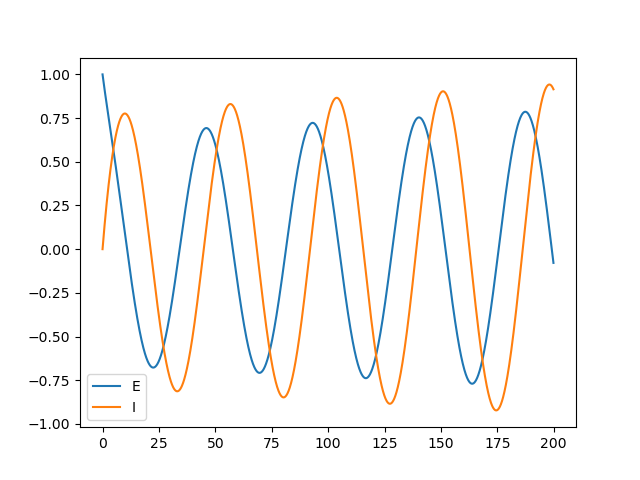

'''You can run this directly like this, to show that there is oscillation for some values of

    from brian2 import *

    # eqs is the equations string defined above
    delta = 7.61*ms  # time constant of the delay stage

    group = NeuronGroup(1, eqs, method='euler')
    group.E = 1  # initial activity
    mon = StateMonitor(group, ['E', 'I'], record=True)
    run(200*ms)

    plt.plot(mon.t/ms, mon.E[0], label='E')
    plt.plot(mon.t/ms, mon.I[0], label='I')
    plt.legend()
    plt.show()

This is not a good way of doing things in general, though, since the connection between the E and I neurons is hardcoded into the equations, i.e. you cannot have several E and I neurons that are connected this way. A more general approach is to split the E and I populations into two separate NeuronGroups and then connect them either via a "linked variable" (if you only have 1-to-1 connections) or via a "summed variable" (for the general case). We don't have many examples of this kind, but see Tetzlaff et al. (2015) for a (quite complex) example of non-spiking interactions via summed variables.

If you have more questions about this, I'd suggest asking over at https://brian.discourse.group.


Indeed, here is the original issue: #355. Nothing has fundamentally changed since then; unfortunately, we still don't have a mechanism for it.

I can see possible workarounds:

- Instead of changing the delays, have multiple synapses between each source/target pair with different delays and switch between them (i.e. adapt the weights instead of the delays). Approaches like this have been used to model plasticity in the auditory system: https://direct.mit.edu/neco/article-abstract/24/9/2251/7800/Frequency-Selectivity-Emerging-from-Spike-Timing

- Instead of changing the delays during the run, change another variable. At regular intervals, stop the simulation and apply the changes accumulated in the other variable to the actual delays. If you did this at every time step, this would be perfectly equivalent to changing the delays dynamically, but it would also be terribly slow and not useful in practice. If it is ok to only apply the changes in delays every 100ms or so, then it could be feasible. I don't know what kind of plasticity you have in mind, but I'd expect changes in dynamic delays to be rather slow.


Thanks for your quick reply! If you need me to continue this discussion in the other post, let me know! However, I have another question before that, if you don't mind. Was it considered to use multistage delays in the neuron's dynamic equations to simulate delays? I am referring to the method described in the book https://www.amazon.com/Spikes-Decisions-Actions-Foundations-Neuroscience/dp/0198524307 by Wilson, page 56. If it was considered, what were the difficulties in implementation? I am asking because I was thinking of using this method to learn delays.
