
@henry-hz Thanks for the feedback Henry. Let me read through & digest before responding.

from commanded.

-

Regarding registration, I would create a wrapper (a new lib, maybe "commanded-registry"), for backwards compatibility and drop-in replacements. For example, I am very interested in Swarm, because it takes partitioning into account, and that's important in Event Sourcing with or without containers, but you might want to use gproc or whatever. I still think that PMs don't have to be FSMs. We must provide an API that makes it easy to use a third-party FSM library without hassle, but not force it. Another option would be to have different kinds of PMs: simple (as simple as possible, like the current iteration of aggregates), stateful and FSM.

-

It seems to me that you are modeling your aggregates from a technical point of view, which is strongly discouraged. In fact, :target and :source aren't used in the aggregate itself; you are just enriching events, which (in my opinion) is not a good approach. And I insist: target and source make sense in a money transfer context, but not in others. A Deposit or a Withdraw can be tied to a context other than a money transfer. They have meaning by themselves; one could deposit or withdraw without transferring money. That's why I don't like the extra target and source fields.

-

As I pointed out in point 5 before, testing is extremely clean and easy now. You can even chain aggregate calls with the pipe operator. It's just a matter of checking that a given call raises an exception. Another approach would be to return the typical {:error, reason} tuple, but it feels wrong not to do the same in the successful cases (which would be {:ok, events}), and that would require using "with" instead of just the pipe operator.

from commanded.

@henry-hz I understand you're trying to facilitate testing the entire system as if it were pure.

CQRS instills the idea of the aggregate as the consistency boundary. Outside of that you have eventual consistency. You can't model inter-aggregate communication as a pure system.

With black box testing you're concerned with the input commands and output events. You shouldn't care about which aggregates or process managers are involved. Otherwise you are testing the specific implementation and not the behaviour. If your tests only deal with commands and events then you can refactor the implementation.

When the event store integration is extracted into a pluggable behaviour we could implement an in-memory storage for testing-only. Would that help with your black box tests?

from commanded.

In my book, process managers listen to events; they don't receive commands. I would just send the PlaceOrder to the Order aggregate.

@henry-hz if you really want to test your system, you can only do that by including the event store, and I recommend doing so.

For the record, I have an unfinished Ruby EventSourcing library which in fact has a default in-memory store (for testing and development purposes). In its /features dir there are integration tests and a sample application.

from commanded.

Wow... that is quite a batch! @slashdotdash please don't get scared by the number of proposed features... it would be great if you join forces and keep the best ideas... Not all of them might land in that repository, and that is fine. It would be great if we keep small, tight packages with optional dependencies... really interested to see how the discussion will evolve!

from commanded.

Hey @henry-hz, the code simplification was my proposal. @slashdotdash and I talked about it on https://gitter.im/commanded/Lobby, please feel free to join.

-

Regarding FSMs, we also chatted a bit. I think that the code and patterns in commanded should allow the usage of FSMs wherever they are needed, but I wouldn't force them nor implement anything. Just use any third party library that pleases you.

-

Actually, I don't like that your WithdrawMoney command has a target field. What if there is no such thing? What if the target is cash? Source and target make sense in a Transfer context. Otherwise there is no such thing as a target. I mean, the domain is badly modelled IMO. Of course if your domain never ever has the concept of cash, it's ok, but I think that stateful process managers actually make things easier to reason about because of SRP.

-

I guess that I should read the code, but I don't get what you mean by this.

-

There is already an issue for that. commanded/eventstore#22

-

The reason behind the code simplification was precisely easier testing, so I don't understand what's the problem. Aggregate command functions don't have side effects, they just return 0, 1 or more events, and they are contained in plain Elixir modules. Just call the functions and check the result:

```elixir
assert Account.execute(%Account{balance: 100}, %Deposit{amount: 100}) == %MoneyDeposited{amount: 100}
```

I would raise exceptions in the aggregates for business errors. Commands should be validated elsewhere so it's easy to tell them apart.

from commanded.

Hi @Papipo,

-

Here is the example: https://github.com/work-capital/engine/blob/develop/test/engine/process_manager/process_manager_test.exs. In fact, instead of using 'respond', 'next_state' would be better, and handle(%Command) could be used instead of the command name. We also think that the process router is difficult to maintain, and gproc/syn could manage process registration better, also for registry.ex.

-

I used 'target' and 'source' because when you transfer money using a bank, you tell the bank where the money should be transferred, and the receiver also knows the "source". If you use "source" and "target/destination", an FSM without data can manage and reroute money back based only on events that generate commands. Having a "data state" opens the door to also putting business logic there, and in the end the process manager becomes an aggregate. Think of process managers as "Cisco" switches, instead of "Check Point" firewalls.

-

the example will help

-

Cool, see how we implemented it there in "storage.ex": simple. As Extreme must use the Protobuf messages, it would be easier for @slashdotdash to adapt them in the Eventstore-Postgres driver instead of writing one more layer.

-

very good, the simplification brought us back to this proposal

-

Raising exceptions is far from the functional coding style, especially for us, since we have a super complex business model and need to test and model it outside the "effects" world. If we encapsulate the whole system in the Writer monad, we can "log" all the pipes and get what we want from the models before implementing them under gen_servers.

from commanded.

- FSM support for process managers

I'm moving towards being less prescriptive with aggregate roots and process managers, to allow consumers of Commanded to implement these concepts exactly how they prefer. If they wish to use an FSM then they can.

The requirements would be for consumers of Commanded to implement the following behaviours in their own modules to allow Commanded to host them.

Aggregate root

```elixir
defmodule Commanded.Aggregates.AggregateRoot do
  @type domain_event :: struct
  @type command :: struct
  @type aggregate_root_state :: struct

  @callback execute(aggregate_root_state, command) :: list(domain_event) | nil | {:error, term}
  @callback apply(aggregate_root_state, domain_event) :: aggregate_root_state
end
```

This also supports making a command handler optional. By default the command router could be configured to delegate the command directly to the aggregate root's execute function (issue #30).
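To make the proposed contract concrete, here is a hypothetical bank account aggregate written against it. The behaviour is copied locally as `AggregateRoot` because it is only a proposal at this point; the structs, the module names and the `:invalid_command` error are made up for the sketch.

```elixir
# Local copy of the behaviour proposed above, so the sketch runs without Commanded.
defmodule AggregateRoot do
  @callback execute(state :: struct(), command :: struct()) ::
              list(struct()) | nil | {:error, term()}
  @callback apply(state :: struct(), event :: struct()) :: struct()
end

defmodule OpenAccount, do: defstruct([:account_number, :initial_balance])
defmodule AccountOpened, do: defstruct([:account_number, :balance])
defmodule DepositMoney, do: defstruct([:account_number, :amount])
defmodule MoneyDeposited, do: defstruct([:account_number, :amount, :balance])

defmodule BankAccount do
  @behaviour AggregateRoot

  defstruct account_number: nil, balance: 0, state: nil

  # Command functions are pure: current state + command in, events (or an error) out.
  @impl true
  def execute(%__MODULE__{state: nil}, %OpenAccount{account_number: number, initial_balance: balance})
      when is_number(balance) and balance >= 0 do
    [%AccountOpened{account_number: number, balance: balance}]
  end

  def execute(%__MODULE__{state: :active, balance: balance}, %DepositMoney{account_number: number, amount: amount})
      when is_number(amount) and amount > 0 do
    [%MoneyDeposited{account_number: number, amount: amount, balance: balance + amount}]
  end

  def execute(%__MODULE__{}, _command), do: {:error, :invalid_command}

  # State mutators only apply events that have already happened; no validation here.
  @impl true
  def apply(%__MODULE__{} = account, %AccountOpened{account_number: number, balance: balance}),
    do: %__MODULE__{account | account_number: number, balance: balance, state: :active}

  def apply(%__MODULE__{} = account, %MoneyDeposited{balance: balance}),
    do: %__MODULE__{account | balance: balance}
end
```

Because both callbacks are plain functions, a unit test only needs to call `execute/2` and `apply/2` directly; no process or event store is involved.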

Process manager

```elixir
defmodule Commanded.ProcessManagers.ProcessManager do
  @type domain_event :: struct
  @type command :: struct
  @type process_manager_state :: struct
  @type process_uuid :: String.t

  @callback interested?(domain_event) :: {:start, process_uuid} | {:continue, process_uuid} | false
  @callback handle(process_manager_state, domain_event) :: list(command) | nil | {:error, term}
  @callback apply(process_manager_state, domain_event) :: process_manager_state
end
```

Your FSM macro example could then be implemented outside Commanded. It just needs to implement the above behaviour.
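As an illustration of an FSM living entirely in consumer code, here is a hypothetical money transfer process manager that implements the proposed callbacks with a hand-rolled `status` field as its state machine. The behaviour is copied locally as `ProcessManager`; all event and command structs are assumptions for the sketch, and `handle/2` is assumed to see the state from before `apply/2` runs.

```elixir
# Local copy of the proposed behaviour, so the sketch runs without Commanded.
defmodule ProcessManager do
  @type process_uuid :: String.t()
  @callback interested?(event :: struct()) :: {:start, process_uuid} | {:continue, process_uuid} | false
  @callback handle(state :: struct(), event :: struct()) :: list(struct()) | nil | {:error, term()}
  @callback apply(state :: struct(), event :: struct()) :: struct()
end

defmodule MoneyTransferRequested, do: defstruct([:transfer_uuid, :debit_account, :credit_account, :amount])
defmodule MoneyWithdrawn, do: defstruct([:transfer_uuid, :account_number, :amount])
defmodule MoneyDeposited, do: defstruct([:transfer_uuid, :account_number, :amount])
defmodule WithdrawMoney, do: defstruct([:transfer_uuid, :account_number, :amount])
defmodule DepositMoney, do: defstruct([:transfer_uuid, :account_number, :amount])

defmodule TransferMoneyProcess do
  @behaviour ProcessManager

  # `status` is the FSM: nil -> :withdrawing -> :depositing -> :completed
  defstruct transfer_uuid: nil, credit_account: nil, amount: nil, status: nil

  @impl true
  def interested?(%MoneyTransferRequested{transfer_uuid: uuid}), do: {:start, uuid}
  def interested?(%MoneyWithdrawn{transfer_uuid: uuid}) when is_binary(uuid), do: {:continue, uuid}
  def interested?(%MoneyDeposited{transfer_uuid: uuid}) when is_binary(uuid), do: {:continue, uuid}
  def interested?(_event), do: false

  # Each transition decides which command (if any) to dispatch next.
  @impl true
  def handle(%__MODULE__{status: nil}, %MoneyTransferRequested{} = event),
    do: [%WithdrawMoney{transfer_uuid: event.transfer_uuid, account_number: event.debit_account, amount: event.amount}]

  def handle(%__MODULE__{status: :withdrawing} = pm, %MoneyWithdrawn{}),
    do: [%DepositMoney{transfer_uuid: pm.transfer_uuid, account_number: pm.credit_account, amount: pm.amount}]

  def handle(%__MODULE__{}, _event), do: []

  @impl true
  def apply(%__MODULE__{status: nil} = pm, %MoneyTransferRequested{} = event) do
    %__MODULE__{pm | transfer_uuid: event.transfer_uuid, credit_account: event.credit_account,
                     amount: event.amount, status: :withdrawing}
  end

  def apply(%__MODULE__{status: :withdrawing} = pm, %MoneyWithdrawn{}), do: %__MODULE__{pm | status: :depositing}
  def apply(%__MODULE__{status: :depositing} = pm, %MoneyDeposited{}), do: %__MODULE__{pm | status: :completed}
  def apply(%__MODULE__{} = pm, _event), do: pm
end
```

A macro-based FSM library could generate the same three callbacks; Commanded would only ever see the behaviour.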

- Keep state out of Process Manager

If process managers don't have their own state you are then forced to enrich your domain events with potentially unnecessary data. This is problematic when your process manager is orchestrating between many aggregate roots. In the simple case with only two aggregates it's not an issue to include the data in any relevant events. When you have one-to-many relationships it is more problematic. The state is useful for fan-out type events where one incoming event causes multiple commands to be dispatched to different aggregate roots.
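The fan-out case can be sketched with a hypothetical club-closure process manager: its own state remembers every member who joined, so a single `ClubClosed` event turns into one command per member without `ClubClosed` carrying the member list. All names here are illustrative only.

```elixir
defmodule MemberJoined, do: defstruct([:club_uuid, :member_uuid])
defmodule ClubClosed, do: defstruct([:club_uuid])
defmodule RemoveMembership, do: defstruct([:club_uuid, :member_uuid])

defmodule ClubClosureProcess do
  # Process manager state: every member seen so far.
  defstruct member_uuids: []

  # One incoming event fans out into one command per remembered member.
  def handle(%__MODULE__{member_uuids: member_uuids}, %ClubClosed{club_uuid: club_uuid}) do
    for member_uuid <- member_uuids,
        do: %RemoveMembership{club_uuid: club_uuid, member_uuid: member_uuid}
  end

  def handle(%__MODULE__{}, _event), do: []

  def apply(%__MODULE__{member_uuids: member_uuids} = pm, %MemberJoined{member_uuid: member_uuid}),
    do: %__MODULE__{pm | member_uuids: member_uuids ++ [member_uuid]}

  def apply(%__MODULE__{} = pm, _event), do: pm
end
```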

- Snapshotting and Separate the Data Persistence Layer

Aggregate state snapshots #27. Similar concept to the code you linked to. This would be a nice performance gain for long lived aggregates or those with many events.
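A minimal sketch of how a snapshot shortens rehydration, assuming a hypothetical `Snapshot` record holding the state as of a stream version and events supplied as ascending `{version, event}` pairs. It is not the design from #27; a real store would read only the events after the snapshot version instead of skipping them in memory.

```elixir
defmodule Snapshot do
  # Hypothetical snapshot: aggregate state as of `version`.
  defstruct [:version, :state]
end

defmodule Rehydrate do
  # No snapshot: start from the aggregate's empty struct at version 0.
  def rebuild(module, nil, events),
    do: rebuild(module, %Snapshot{version: 0, state: struct(module)}, events)

  # Skip events already folded into the snapshot, then apply the rest in order.
  def rebuild(module, %Snapshot{version: snapshot_version, state: state}, events) do
    events
    |> Enum.drop_while(fn {version, _event} -> version <= snapshot_version end)
    |> Enum.reduce({snapshot_version, state}, fn {version, event}, {_version, acc} ->
      {version, module.apply(acc, event)}
    end)
  end
end

# Toy aggregate used to exercise the sketch.
defmodule Counter do
  defstruct total: 0
  def apply(%__MODULE__{total: total} = counter, {:added, n}), do: %__MODULE__{counter | total: total + n}
end
```

With a snapshot at version 2, only event 3 is replayed, which is where the gain for long-lived aggregates comes from.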

- Support Eventstore database

Yes, that's a useful feature. From a quick search in the source of Commanded there are only three main usages of the eventstore library:

- EventStore.read_stream_forward/3
- EventStore.append_to_stream/3
- EventStore.subscribe_to_all_streams/2

These three could become a protocol/behaviour that we implement for each supported event store to plug in.
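One possible shape for that behaviour, plus a test-only in-memory adapter backed by an `Agent`. The callback names and arities mirror the three functions listed above, but the argument meanings and return values (zero-based `start_version`, `:wrong_expected_version`, the `{:events, events}` message) are assumptions for this sketch, not the eventstore library's contract.

```elixir
defmodule EventStoreAdapter do
  # Hypothetical behaviour; names and arities follow the three calls listed above.
  @callback append_to_stream(stream_uuid :: String.t(), expected_version :: non_neg_integer(), events :: [term()]) ::
              :ok | {:error, term()}
  @callback read_stream_forward(stream_uuid :: String.t(), start_version :: non_neg_integer(), count :: pos_integer()) ::
              {:ok, [term()]} | {:error, term()}
  @callback subscribe_to_all_streams(subscription_name :: String.t(), subscriber :: pid()) ::
              {:ok, term()} | {:error, term()}
end

defmodule InMemoryEventStore do
  # Test-only adapter: all streams and subscribers live in one Agent.
  @behaviour EventStoreAdapter
  use Agent

  def start_link(_opts \\ []) do
    Agent.start_link(fn -> %{streams: %{}, subscribers: []} end, name: __MODULE__)
  end

  @impl true
  def append_to_stream(stream_uuid, expected_version, events) do
    result =
      Agent.get_and_update(__MODULE__, fn state ->
        stream = Map.get(state.streams, stream_uuid, [])

        # Optimistic concurrency: the caller must know the current stream length.
        if length(stream) == expected_version do
          {{:ok, state.subscribers}, put_in(state.streams[stream_uuid], stream ++ events)}
        else
          {{:error, :wrong_expected_version}, state}
        end
      end)

    case result do
      {:ok, subscribers} ->
        # Publish to subscribers once the append has been stored.
        Enum.each(subscribers, &send(&1, {:events, events}))
        :ok

      error ->
        error
    end
  end

  @impl true
  def read_stream_forward(stream_uuid, start_version, count) do
    Agent.get(__MODULE__, fn state ->
      case Map.fetch(state.streams, stream_uuid) do
        {:ok, stream} -> {:ok, Enum.slice(stream, start_version, count)}
        :error -> {:error, :stream_not_found}
      end
    end)
  end

  @impl true
  def subscribe_to_all_streams(subscription_name, subscriber) do
    Agent.update(__MODULE__, fn state -> %{state | subscribers: [subscriber | state.subscribers]} end)
    {:ok, subscription_name}
  end
end
```

Swapping this adapter in for tests and a real one for production is the point of the behaviour: Commanded would only call the three callbacks.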

- Monads support to test Aggregates and Process Managers without side-effects

Aggregate roots and process managers should be implemented as pure functions. That should allow simple unit testing scenarios: given the same aggregate root state and command, an aggregate must always return the same domain events.

The side effects occur within the hosting processes that Commanded provides (e.g. persist domain events to storage, dispatch commands). These should be fully unit tested in Commanded.

I use the following evolve function in aggregate root unit tests. It applies domain events to the aggregate state, and can be used in a with block.

```elixir
defmodule Aggregate do
  def evolve(aggregate, events) do
    Enum.reduce(List.wrap(events), aggregate, &aggregate.__struct__.apply(&2, &1))
  end
end

@tag :unit
test "import club members should remove members who have left the club" do
  club_uuid = UUID.uuid4

  events =
    with club <- evolve(%Club{}, Club.import_club(%Club{}, club_uuid, build(:club))),
         club <- evolve(club, Club.import_members(club, [build(:athlete)])),
      do: Club.import_members(club, [])

  assert events == [
    %MemberLeft{club_uuid: club_uuid, member_uuid: .... }
  ]
end
```

I also create integration tests that dispatch commands and then use the wait_for_event and assert_receive_event helpers from event_assertions.ex.

- Differentiate between Domain error and Command Validation.

Command validation can be implemented as router middleware. Here's an example using the Vex library.

```elixir
defmodule Validation.Middleware do
  @behaviour Commanded.Middleware

  require Logger

  alias Commanded.Middleware.Pipeline
  import Pipeline

  def before_dispatch(%Pipeline{command: command} = pipeline) do
    case Vex.valid?(command) do
      true -> pipeline
      false -> failed_validation(pipeline)
    end
  end

  def after_dispatch(pipeline), do: pipeline
  def after_failure(pipeline), do: pipeline

  defp failed_validation(%Pipeline{command: command} = pipeline) do
    errors = Vex.errors(command)

    pipeline
    |> respond({:error, :validation_failure, errors})
    |> halt()
  end
end
```

This prevents invalid commands from reaching your aggregate root.
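The same split can be shown without Commanded or Vex, as a minimal sketch: hand-written checks stand in for Vex, and a hypothetical `Dispatcher.dispatch/2` stands in for the router plus middleware. Validation failures and domain errors come back with different shapes, so callers can tell them apart.

```elixir
defmodule WithdrawMoney, do: defstruct([:account_number, :amount])
defmodule MoneyWithdrawn, do: defstruct([:account_number, :amount, :balance])

defmodule Account do
  defstruct balance: 0, state: :active

  # Business rule, owned by the aggregate: a closed account cannot be debited.
  def execute(%__MODULE__{state: :closed}, %WithdrawMoney{}), do: {:error, :account_closed}

  def execute(%__MODULE__{balance: balance}, %WithdrawMoney{account_number: number, amount: amount}),
    do: [%MoneyWithdrawn{account_number: number, amount: amount, balance: balance - amount}]
end

defmodule Dispatcher do
  # Structural validation, run before the aggregate ever sees the command.
  def validate(%WithdrawMoney{account_number: number, amount: amount}) do
    errors =
      for {valid?, error} <- [
            {is_binary(number) and number != "", {:account_number, "must be present"}},
            {is_number(amount) and amount > 0, {:amount, "must be a positive number"}}
          ],
          not valid?,
          do: error

    if errors == [], do: :ok, else: {:error, :validation_failure, errors}
  end

  # Only valid commands reach the aggregate; its {:error, reason} passes through unchanged.
  def dispatch(%Account{} = account, command) do
    with :ok <- validate(command), do: Account.execute(account, command)
  end
end
```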

For business rule violations you can return an {:error, reason} tagged tuple from an aggregate root command function (and/or command handler), or raise an exception. In some cases you want to record the fact that a business rule was broken, in which case you raise a domain event. There's an example of this in the BankAccount in Commanded's unit tests. The account is allowed to go overdrawn when the amount to withdraw exceeds the balance. This is a business decision.

```elixir
def withdraw(%BankAccount{state: :active, balance: balance}, %WithdrawMoney{account_number: account_number, transfer_uuid: transfer_uuid, amount: amount})
    when is_number(amount) and amount > 0 do
  case balance - amount do
    balance when balance < 0 ->
      [
        %MoneyWithdrawn{account_number: account_number, transfer_uuid: transfer_uuid, amount: amount, balance: balance},
        %AccountOverdrawn{account_number: account_number, balance: balance},
      ]

    balance ->
      %MoneyWithdrawn{account_number: account_number, transfer_uuid: transfer_uuid, amount: amount, balance: balance}
  end
end
```

- Solve the pending bugs as soon as possible

Agreed for the high priority bugs.

Feel free to chat in the Gitter room too.

from commanded.

For tests, we could provide some assertions or utils in a module (like that evolve function).

from commanded.

@henry-hz Not sure if I answered your questions/concerns. In brief, it would be great to collaborate together to build a robust platform for hosting CQRS/ES applications.

We have a high priority in supporting the Eventstore database, implement a good FSM support for process managers, and Monads for free testing environment ...

- Extract event store integration into a behaviour to support multiple storage back-ends. Provide implementations for Greg Young's Event Store and the existing PostgreSQL eventstore dependency.

- FSM support is the consumer's concern, outside Commanded. Just need to adhere to the aggregate and process manager behaviours.

- Integrate and use the `evolve` test helper function. Consider how to reduce `with` boilerplate.
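One way the `with` boilerplate might be reduced, as a sketch: a hypothetical `execute_all/2` test helper that runs each command, evolves the state with the resulting events, collects them, and stops at the first `{:error, reason}`. The `Tally` aggregate is a toy stand-in.

```elixir
# Toy aggregate with tuple commands and events.
defmodule Tally do
  defstruct total: 0
  def execute(%__MODULE__{}, {:add, n}) when n > 0, do: [{:added, n}]
  def execute(%__MODULE__{}, {:add, _n}), do: {:error, :must_be_positive}
  def apply(%__MODULE__{total: total} = tally, {:added, n}), do: %__MODULE__{tally | total: total + n}
end

defmodule AggregateTest do
  # Returns {final_state, all_events}, or the first {:error, reason}.
  def execute_all(%module{} = aggregate, commands) do
    Enum.reduce_while(commands, {aggregate, []}, fn command, {state, events} ->
      case module.execute(state, command) do
        {:error, _reason} = error ->
          {:halt, error}

        new_events ->
          # nil, a single event and a list of events are all accepted.
          new_events = List.wrap(new_events)
          state = Enum.reduce(new_events, state, &module.apply(&2, &1))
          {:cont, {state, events ++ new_events}}
      end
    end)
  end
end
```

A test then reads as one call plus one assertion instead of a chain of `with` clauses.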

from commanded.

Closed by mistake.

from commanded.

@slashdotdash wow! I will carefully study your feedback, thanks. Meanwhile, I started an example application, based on a known Microsoft case https://github.com/work-capital/ev_sim . Yes, yes, we can purify Microsoft... :)

from commanded.

@Papipo Good feedback, I also have to study it, but it seems that we can converge our views using one or more real-life examples, and they will also be very illustrative, or serve as boilerplate for newcomers.

from commanded.

@slashdotdash , thanks for the feedback, and we are happy that you are leading this project and welcomed our desire to collaborate together.

You answered most of our concerns. The major one is still open, and it's crucial, especially to keep our team moving ahead.

- A flexible way to implement Process Managers seems fine for us, but we need to build a POC to see what it looks like in real life. The https://github.com/work-capital/ev_sim repo can illustrate what we mean. The starting point is to have a complete and common environment for specifying, testing and simulating (also answering @Papipo's comment no. 3) the whole system (instead of unit testing). Unit testing aggregates is okay, but the "I/O protocol" should be uniform so it can be piped into the whole system, and the whole system should behave as a pure function. For example, in a system with Trade, Inventory and Payment aggregates, and a Process Manager handling the Purchase Process, we need to input one command to buy an item, which will trigger the Inventory, which will report back to the Process Manager, which will then wait for the Payment command that finishes the process cycle.

Test Case Business Simulation Cycle:

```
commands = [%BuyBook{}, %PayBook{}]
aggregates = [%Trade{}, %Inventory{}, %Payment{}]
process_m = PurchaseProcessManager
system = aggregates . process_m
events = [%BookBought{}, %PaymentAccepted{}]

assert commands -> system -> events
```

As you can see, two commands were sequentially injected into the whole system, and we asserted that, given these commands, the system as a whole reacted and produced these two events. Imagine that with 5 or more aggregates and 2 process managers: how easy it will be for our team to receive the business requirements and transform them into a complete pure functional system, even before writing anything to the database. In a few words, we write the pure aggregates and process managers (with or without FSMs), package them together, and test the I/O behavior in several scenarios.
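What `commands -> system -> events` could mean in plain Elixir, as a minimal single-process sketch: a hypothetical `PureSystem.run/3` routes each command to an aggregate (keyed by module and `order_id`), collects the events, and feeds them to a process manager whose commands are dispatched in turn. It ignores persistence, concurrency and eventual consistency entirely, and the process manager is stateless for brevity, unlike the behaviour proposed earlier. Every module name here is illustrative.

```elixir
defmodule BuyBook, do: defstruct([:order_id, :isbn])
defmodule ReserveBook, do: defstruct([:order_id, :isbn])
defmodule PayBook, do: defstruct([:order_id, :amount])
defmodule BookBought, do: defstruct([:order_id, :isbn])
defmodule BookReserved, do: defstruct([:order_id, :isbn])
defmodule PaymentAccepted, do: defstruct([:order_id, :amount])

defmodule Trade do
  defstruct status: :new
  def execute(%__MODULE__{status: :new}, %BuyBook{} = c), do: [%BookBought{order_id: c.order_id, isbn: c.isbn}]
  def apply(%__MODULE__{} = trade, %BookBought{}), do: %__MODULE__{trade | status: :bought}
end

defmodule Inventory do
  defstruct reserved: []
  def execute(%__MODULE__{}, %ReserveBook{} = c), do: [%BookReserved{order_id: c.order_id, isbn: c.isbn}]
  def apply(%__MODULE__{} = inv, %BookReserved{isbn: isbn}), do: %__MODULE__{inv | reserved: [isbn | inv.reserved]}
end

defmodule Payment do
  defstruct paid: 0
  def execute(%__MODULE__{}, %PayBook{} = c), do: [%PaymentAccepted{order_id: c.order_id, amount: c.amount}]
  def apply(%__MODULE__{} = p, %PaymentAccepted{amount: a}), do: %__MODULE__{p | paid: p.paid + a}
end

defmodule PurchaseProcessManager do
  # Stateless for brevity: a bought book must be reserved.
  def handle(%BookBought{order_id: id, isbn: isbn}), do: [%ReserveBook{order_id: id, isbn: isbn}]
  def handle(_event), do: []
end

defmodule PureSystem do
  # routes: %{CommandModule => AggregateModule}; every command carries :order_id.
  def run(commands, routes, pm) do
    {_aggregates, events} = Enum.reduce(commands, {%{}, []}, &dispatch(&1, &2, routes, pm))
    events
  end

  defp dispatch(%cmd_module{order_id: id} = command, {aggregates, events}, routes, pm) do
    aggregate = Map.fetch!(routes, cmd_module)
    state = Map.get(aggregates, {aggregate, id}, struct(aggregate))

    new_events =
      case aggregate.execute(state, command) do
        {:error, reason} -> raise ArgumentError, "#{inspect(command)} rejected: #{inspect(reason)}"
        result -> List.wrap(result)
      end

    state = Enum.reduce(new_events, state, &aggregate.apply(&2, &1))
    acc = {Map.put(aggregates, {aggregate, id}, state), events ++ new_events}

    # Hand the new events to the process manager and dispatch whatever it emits.
    new_events
    |> Enum.flat_map(&List.wrap(pm.handle(&1)))
    |> Enum.reduce(acc, &dispatch(&1, &2, routes, pm))
  end
end
```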

Let me list some libraries that could help us to finish this task. Maybe we should get some ideas from them, and build a pure test/simulator framework to encapsulate CQRS/ES components.

- effects - Very interesting
- monadex - Operators could be more elegant, but very complete
- plumber_girl - Railway oriented
- towel - Pragmatic, Elixir-style {:ok, _}

For that it's crucial that we agree on how inputs and outputs will be piped and handled in a uniform way, avoiding ambiguous responses (sometimes the event, sometimes {:error, :reason}). Let me suggest using the Writer monad, so we keep processing, changing the state, and logging the output. The final output, as in the assertion above, should be the logged events accumulated in the Writer monad. Using execution(state, command) will also help to make the aggregate easier to test, and should be part of the protocol. Please let me know your thoughts.
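A minimal Writer monad sketch in plain Elixir, to show the idea: each step pairs the evolved aggregate state with a log, and `bind/2` concatenates the logs, so the final log is exactly the list of emitted events. The `Writer` module and the `Tally` aggregate are hypothetical; the sketch assumes every command succeeds, since handling `{:error, reason}` would need an extra error layer on top.

```elixir
# Toy aggregate with tuple commands and events.
defmodule Tally do
  defstruct total: 0
  def execute(%__MODULE__{}, {:add, n}), do: [{:added, n}]
  def apply(%__MODULE__{total: total} = tally, {:added, n}), do: %__MODULE__{tally | total: total + n}
end

defmodule Writer do
  # A Writer value is {value, log}; the log here is the list of domain events.
  def pure(value), do: {value, []}

  # Feed the current value to `fun` and append the log it produces.
  def bind({value, log}, fun) do
    {new_value, new_log} = fun.(value)
    {new_value, log ++ new_log}
  end

  # Lift a command into a Writer step: evolve the aggregate and log its events.
  # Assumes execute/2 succeeds; {:error, reason} results are not handled.
  def execute(command) do
    fn %module{} = state ->
      events = List.wrap(module.execute(state, command))
      {Enum.reduce(events, state, &module.apply(&2, &1)), events}
    end
  end
end
```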

Some links on Process Managers:

http://blog.devarchive.net/2015/11/saga-vs-process-manager.html

http://codebetter.com/gregyoung/2012/04/24/state-machines-and-business-users-2/

http://www.enterpriseintegrationpatterns.com/patterns/messaging/ProcessManager.html

from commanded.

If I understand correctly, what I would do is just integration testing. You either execute commands on the system when it's running or you could even test using a REST endpoint or whatever you are using to communicate with the outside world.

Just test the system as a black box. Commands come in, and you just assert that the expected events are written to the Event Store. We might provide assertions to ease this last part, but I find it easy enough even without them.

Actually, I tend to use Gherkin-style tests even if I am a lone developer. For Elixir there is white-bread:

Given -> Pre-existing events

When -> Commands

Then -> Expected Events
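The same Given/When/Then shape can also be applied to a single aggregate without any tooling. In this hypothetical helper, "given" rebuilds the state purely from pre-existing events, "when" runs one command, and "then" is an ordinary assertion on the returned events. It is not white-bread's API.

```elixir
# Toy aggregate: events are plain tuples to keep the sketch short.
defmodule Account do
  defstruct balance: 0

  def execute(%__MODULE__{balance: balance}, {:withdraw, amount}) when amount <= balance,
    do: [{:withdrawn, amount}]

  def execute(%__MODULE__{}, {:withdraw, _amount}), do: {:error, :insufficient_funds}

  def apply(%__MODULE__{} = account, {:deposited, amount}), do: %__MODULE__{account | balance: account.balance + amount}
  def apply(%__MODULE__{} = account, {:withdrawn, amount}), do: %__MODULE__{account | balance: account.balance - amount}
end

defmodule GivenWhenThen do
  # Given: rebuild the aggregate from pre-existing events only.
  def given(module, events), do: Enum.reduce(events, struct(module), &module.apply(&2, &1))

  # When: run one command; the result is what "Then" asserts on.
  def when_command(%module{} = state, command), do: module.execute(state, command)
end
```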

from commanded.

@Papipo yes! You got it: using Gherkin-style tests is definitely very important. The Microsoft example has them too.

https://github.com/MicrosoftArchive/cqrs-journey

I am making progress on it at https://github.com/work-capital/ev_sim. Hey, imagine that we will be able to hire developers only for the business modelling... adding a pure functional structure on top of the side-effects world should then be only an IT issue.

See this Business Process Engine in Erlang:

https://github.com/spawnproc/bpe

Once we have that, adding a form generator with json files, and a DSL to generate the aggregates and process managers, in Elixir, the output (in some years) could be a super business development platform on the cloud, with several services, etc...

from commanded.

Black box testing using commands -> events is straightforward enough. You just create a subscription to the event store, execute the commands, and then receive the published events to assert.

```elixir
test "deposit money" do
  events = execute([
    %OpenAccount{account_number: "ACC123", initial_balance: 1_000},
    %DepositMoney{account_number: "ACC123", amount: 100},
  ])

  assert events == [
    %AccountOpened{account_number: "ACC123", balance: 1_000},
    %MoneyDeposited{account_number: "ACC123", balance: 1_100},
  ]
end

defp execute(commands) do
  capture_events(fn ->
    Enum.each(commands, fn command ->
      :ok = Router.dispatch(command)
    end)
  end)
end

defp capture_events(fun) do
  subscription_name = UUID.uuid4()
  create_subscription(subscription_name)

  try do
    # Run the given function while the subscription is active.
    fun.()
  after
    remove_subscription(subscription_name)
  end

  receive do
    {:events, received_events, _subscription} -> received_events
  end
end

defp create_subscription(subscription_name) do
  {:ok, _subscription} = EventStore.subscribe_to_all_streams(subscription_name, self())
end

defp remove_subscription(subscription_name) do
  EventStore.unsubscribe_from_all_streams(subscription_name)
end
```

from commanded.

@slashdotdash I meant without external resources, infrastructure, database, etc., maybe using https://hex.pm/packages/effects ? The solution above is also good. Would it work with process managers?

from commanded.

In addition, both Greg Young's Event Store [1] and the PostgreSQL-based eventstore only support atomically writing to a single stream. One reason for this is to allow streams to be partitioned across nodes without requiring a two-phase commit. Therefore you cannot use a monad to record and later store events if they apply to more than one aggregate (stream) as there is no persistence guarantee when writing multiple streams.

[1] http://docs.geteventstore.com/dotnet-api/3.9.0/writing-to-a-stream/

from commanded.

@slashdotdash I meant 100% pluggable. If using monads, you are right: mixing both paradigms wouldn't work.

The in-memory "virtual eventstore" would solve the issue, good idea, and it also detaches the "protocol" from the side-effects implementation. I loved how https://hex.pm/packages/effects solved the "plugin" composition using https://github.com/metalabdesign/pipeline. But if we decide to use it, maybe, as you mentioned, the "virtual environment" should be detached from the real side-effects implementation. Because of the nature of "Free Monads", I am still researching whether it would be possible to create a "pipeline" to the eventstore implementations anyway. Let me suggest using https://github.com/work-capital/ev_sim, so we build a real-life example with https://github.com/meadsteve/white-bread as suggested by @Papipo, the FSM, and the scheduler (for the next release, but the idea is there).

I will work on the example implementation; meanwhile, could you add the Commanded framework there, especially the FSM process manager? If you prefer to have the repo under your username, please let us know.

The diagram is cool, and can inspire a lot of people.

from commanded.

@henry-hz I just forked your ev_sim repo to look at the process manager. I can look at this: are you getting it into a state where it will compile? I don't want to duplicate effort.

from commanded.

@slashdotdash yes, it compiles. I will start to model it better and implement the aggregates too.

from commanded.

Note how it makes sense that a command is sent to the process manager itself to start a process, which finishes when the order is completed. So starting a process manager would not depend on triggers, only on routing commands to it... much easier to maintain, an aggregate-style way of living, but much more powerful.

Page 289 of this book: https://www.microsoft.com/en-us/download/details.aspx?id=34774

from commanded.

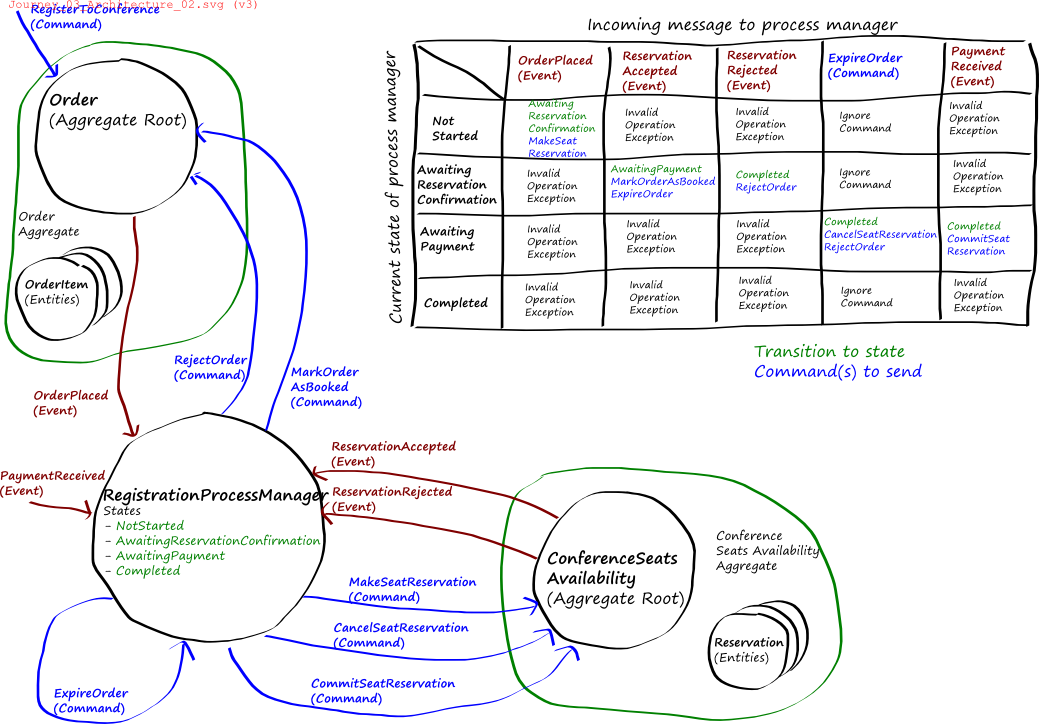

In Microsoft's CQRS Journey example the registration process manager router is initially triggered by an OrderPlaced event, raised from the Order aggregate root.

I like the simplicity of process managers only responding to events and dispatching commands, with aggregate roots being the only recipients of commands. I'm not sure why you would want to route a command to a process manager that then simply dispatches another command.

from commanded.

Please take another look, down to the last lines: https://github.com/MicrosoftArchive/cqrs-journey/blob/master/source/Conference/Registration/RegistrationProcessManagerRouter.cs. It handles a command at the end of the code.

The diagram above is from the end of the book, and it also shows a PM handling a start command (though not in the code, as you said). Maybe there are discrepancies, but anyway: as the process manager has a start and end life-cycle, how much simpler would the overall implementation be if we routed a command to start it? I also noticed that the Maxim BPE has a clear start and end point too. I understand this may sound like heresy, but carefully observe the diagram above, and notice that aggregates are also listening to events... and also, PlaceOrder only 'starts' the PM, and a CreateOrder is routed to the Order aggregate. In fact, if we start from the premise that the diagram is correct, and that it is some kind of "conclusion at the end of the book", it can mean that the first "command" in the process flow is only a "hey, let's start a complete process", and the last event to aggregates (excluding payments) is "hey, let's shut down these processes", and we finally have a design with a start, middle and end. Thinking in OTP/Erlang FSM terms, it also makes sense. I would even risk saying that, in the long run, this is the harmony and simplicity we are looking for: instead of a process manager that depends on a process router gen_server, it could have the simplicity of an aggregate. And because it has clear start and end points, the middle also becomes defined, so it starts as a desire expressed by a user (command) and ends with a clear result (effect). Advantages:

- suitable for the mind (I want a hamburger, you get a hamburger)

- it's an honor for the manager to receive a request, instead of listening to effects, and the one that received the 'important' request is the one who provides the 'important' result (it's an ethical problem only)

- more suitable for testing the whole process (input -> place order, output -> order confirmed)

- OTP aligned: if the PM crashes, it can shut down all the aggregates around it, and if it recovers, it would also be responsible for starting them again, so no aggregate would ever be orphaned (this item is only a feeling, maybe complete nonsense)

- process managers that receive commands are easy to integrate with schedulers (which send point-to-point, not pub-sub, messages). The scheduler is 'someone' pointing to that PM specifically and telling it what to do, so it's in fact a state changer and a business decision (see the Microsoft FSM) outside the scope of the aggregates, since it's an orchestration decision; see the self-expire-order, a self-command.

- it complies with the idea of "routing messages", but more broadly, once it routes from commands to events, and from events to commands... it's more open for creativity than a process manager that says "I only route this input to this output".

- if item 4 makes sense, we could give PMs even more IT responsibilities, such as routing and dispatching, supervising their aggregates, and even a UI to monitor and control them.

- as ES is a ledger in nature, a sequence of "commands" could be sent to "correct" the undesired state, i.e. error, in case any command was issued to some aggregate, and a correction strategy should also be able to realign the PM state.

- who will be able to refuse the PlaceOrder command? In the Commanded example, we have an aggregate created only to be able to receive the Transfer command and route the event that starts the process manager. See how it added complexity: we needed a completely new aggregate only to have a "start" option for the PM... If we start the PM directly, and the PM routes this command [1.PlaceOrder] as [2.CreateOrder] transparently, we remove the redundancy of having a new aggregate only to handle the 'start request', and in the end the PlaceOrder is handled directly by an aggregate (business logic), merely translated by the PM, which was interested in this command to start its instance.

- From the book "Enterprise Integration Patterns"

The Correlation Identifier allows a component to associate an incoming reply message with the original request by storing a unique identifier in the reply message that correlates it to the request message. Using this identifier the component can match up the reply with the correct request even if the component has sent multiple requests and the replies arrive out of order. The Process Manager requires a similar mechanism. When the Process Manager receives a message from a processing unit, it needs to be able to associate the message with the process instance that sent the message to the processing unit. The Process Manager needs to include a Correlation Identifier inside messages that it sends to processing units. The component needs to return this identifier in the reply message as a Correlation Identifier.

The balance for Commanded, as a framework, is that flexibility is worth considering, but we have to weigh the outcomes, especially for item 4.

BPEL was substituted by BPMN, but this presentation is interesting.

http://www.daml.org/services/swsl/materials/bpel11.pdf

@Papipo About the testing, I agree that the system should also be tested, but the idea of having a complete model as a "pure data structure" with monads (or effects) is like the idea of having a modelling UI such as https://bpmn.io/: we could easily model and test business data structures, and also generate them using Gherkin parsers. It's also more fun(ctional).

http://verraes.net/2014/05/functional-foundation-for-cqrs-event-sourcing/

https://github.com/BardurArantsson/cqrs/tree/master/cqrs-example/src/CQRSExample

from commanded.

Somehow I think that sticking with Aggregates that only receive commands and emit events and Process Managers that only listen to events and emit commands, couldn't be simpler. It's also uniform and predictable. Apart from the fact that it's the recommended way to go, I've read it thousands of times in the dddcqrs mailing list. You can have an aggregate listen to events, but once it becomes too complex, it's just plain better to move that logic to a PM.

- Aggregates can handle that. RequestHamburguer -> HamburguerDelivered. I don't care if there is something in-between.

- I can understand the urge to take bad decisions based on a technical point of view, but for ethical reasons regarding the code constructs? Code is code and it serves a purpose.

- The code is exactly as testable as before, it's just the aggregate who receives the command.

- A Process Manager is not a Supervisor. It shouldn't shutdown anything, specially aggregates. Aggregates can receive commands not related to the process manager. Why would you want a PM to shut down an Aggregate? Same with restarting them.

- There are many ways to schedule tasks and none of them need PMs to receive commands.

- In the DDD/ES context PMs are a pattern that listen to events but not receive commands. Of course you could have PMs that receive commands, but you want to bend the pattern to accomplish what? There is no need for PMs to receive commands at all.

- So you want to violate SRP on purpose.

- What does this have to do with PMs receiving commands?

- You don't need an extra aggregate, the Order aggregate should be the one receiving the PlaceOrder commands. In fact business logic lives in aggregates, which are the ones in charge of refusing commands because of their invariants. As @slashdotdash pointed before, why do you want a PM to receive a command to send another command? That seems unneeded complexity to me. Wouldn't it be better to send the command to the aggregate in the first place?

- Correlation ids are interesting for various purposes, but they have nothing to do in this discussion.

I think that you must forget about that example in the microsoft book. It's not simplifying things, it's the other way around. It violates SRP and does not comply with DDD/ES patterns.

Believe me when I say that my role here is to try to simplify things. See how Aggregates are now without the eventsourced lib. They are exactly what they need to be, no more, no less. And I'll try to see the big picture in order to keep the API clean and simple.

from commanded.

@Papipo yep, makes sense, aggregates are really cool, a very elegant design. About the Microsoft example, let's forget it for a while and stick with the rules, but leave the idea (a command to start the PM) open and observe. About the ethical issue, it was just a joke, don't take it seriously.

I love the idea of keeping it simple.

The PM router design as it is today is quite difficult for me to reason about; I would be happy to have a detailed diagram using https://www.websequencediagrams.com/. When I tried to fix bug #25, it took me more time than I expected to understand it, until I gave up fixing it, and because we are building a financial transaction app, complete control over what is happening behind the scenes is crucial. Maybe if we encapsulate things, with some separation of concerns... From the Gitter chat:

"There’s an open issue to add retrying to event handlers #20

At the moment, if the command fails the process manager instance will crash and should be restarted

I also want to add time triggers to process managers. So you could configure it to dispatch a command and wait for an expected message, or be notified after x minutes/hours."

I am thinking about how we could cover all the scenario combinations: handling crashes and recovering the system state in sync (I mean, PM and aggregates at the same position) while new messages (commands and events) are being queued at the same time... this is how things are in production. The TCP/IP protocol is a robust, one-shot solution that has been around for many years. Encapsulating this logic, with dedicated modules to handle these failure/recovery scenarios, would be great.

from commanded.

The registration process manager from the CQRS Journey is shown as being started by and responding to events. There's one command that it receives: ExpireOrder. This command is a special case because it is dispatched with a delay (e.g. dispatch this command to process it one hour later).

I would probably design the ExpireOrder command to be sent to the order aggregate, since the aggregate is responsible for knowing the state of the order and whether it should be cancelled (that's its domain logic).

That allows the separation between aggregates and process managers:

- Aggregate receives commands and publishes domain events.

- Process manager handles events and dispatches commands.
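The split above can be sketched as two pure functions: the aggregate maps a command to events, and the process manager maps an event to commands. This is a Python sketch, not Commanded's API; `order_execute`, `registration_handle`, and `MakeReservation` are hypothetical names. Dispatching ExpireOrder with a delay is left to the hosting infrastructure and is not modelled here.

```python
from dataclasses import dataclass
from typing import List


# Events
@dataclass(frozen=True)
class OrderPlaced:
    order_id: str


@dataclass(frozen=True)
class OrderConfirmed:
    order_id: str


@dataclass(frozen=True)
class OrderExpired:
    order_id: str


# Commands
@dataclass(frozen=True)
class ExpireOrder:
    order_id: str


@dataclass(frozen=True)
class MakeReservation:  # hypothetical command for a seats aggregate
    order_id: str


def order_execute(status: str, command: ExpireOrder) -> List[object]:
    """Aggregate: command in, domain events out.
    The Order decides whether expiry still applies (its domain logic)."""
    if status == "pending":
        return [OrderExpired(command.order_id)]
    return []  # already confirmed or expired: nothing to do


def registration_handle(event: object) -> List[object]:
    """Process manager: event in, commands out. It never receives commands."""
    if isinstance(event, OrderPlaced):
        # ExpireOrder is targeted at the Order aggregate, not at the PM.
        return [MakeReservation(event.order_id), ExpireOrder(event.order_id)]
    return []
```

Because the expiry decision lives in `order_execute`, a late ExpireOrder for an already confirmed order is simply a no-op.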

I'd like to add support for time-based triggers for process managers (#36). This would then allow timing out long running processes.
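One possible shape for such a time-based trigger is a scheduler that holds delayed commands and releases them once they are due. The sketch below is hypothetical (the `CommandScheduler` class is not part of Commanded) and takes the current time as a parameter so the logic stays pure and testable. It keeps commands only in memory; a real implementation would need to persist them to survive restarts.

```python
import heapq
from typing import Any, List, Tuple


class CommandScheduler:
    """Hypothetical in-memory scheduler for delayed commands."""

    def __init__(self) -> None:
        # Heap entries are (due_time, sequence, command); the sequence number
        # breaks ties so commands themselves are never compared.
        self._queue: List[Tuple[float, int, Any]] = []
        self._seq = 0

    def schedule(self, command: Any, now: float, delay: float) -> None:
        heapq.heappush(self._queue, (now + delay, self._seq, command))
        self._seq += 1

    def due(self, now: float) -> List[Any]:
        """Pop and return every command whose due time has been reached."""
        ready = []
        while self._queue and self._queue[0][0] <= now:
            _, _, command = heapq.heappop(self._queue)
            ready.append(command)
        return ready
```

For example, scheduling an ExpireOrder with a one-hour delay and polling `due` periodically would release it only after that hour has passed.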

from commanded.

@henry-hz Quoting you: "recovering the system state in sync".

Just embrace eventual consistency. You want a distributed transactional system, and that's a no-no under DDD principles. Aggregates are in reality transactional boundaries; that's their main concern. Nothing has to be in sync with anything. You just need to be sure that you receive events at least once and that you have idempotent receivers.
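A minimal sketch of an idempotent receiver, in Python and not tied to Commanded: it assumes each event carries a monotonically increasing `event_number` within its stream, and the `BalanceProjection` name is hypothetical. Redelivered events (after a crash or retry) are recognised and skipped, so at-least-once delivery does not double-count.

```python
from dataclasses import dataclass


@dataclass
class BalanceProjection:
    """Hypothetical read model that tolerates redelivered events."""
    last_seen: int = 0  # highest event number already applied
    balance: int = 0

    def handle(self, event_number: int, amount: int) -> bool:
        """Apply the event once; return False if it was a duplicate."""
        if event_number <= self.last_seen:
            return False  # already applied: skip the redelivery
        self.balance += amount
        self.last_seen = event_number
        return True
```

In a real system `last_seen` and `balance` would have to be stored atomically (e.g. in the same database transaction); otherwise a crash between the two writes breaks the guarantee.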

from commanded.

@Papipo got it, thanks. @slashdotdash, yep, routing to the order aggregate seems to work well.

If you check the book, p. 289 (also above) has a different diagram, where the "PlaceOrder" command goes to the PM. Strange, but I had an intuition that we could drop all the code in the router and let the process manager instance handle the subscriptions and routing by parsing the "on" functions. Does that make sense?

from commanded.

It does make sense. In my Ruby project, Event Subscribers had a subscribe_to() method, which did what you describe.

We can do the same with a macro in Elixir. The problem I see is that it's probably harder to know where things are defined. I grew used to Ruby magic and then got tired of it. Too many implicit things, and then you lose control of everything.

Besides, one could define a PM as a plain Elixir module, test it like we do with aggregates, and once that's nailed, add the subscriptions and routing.
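To make the trade-off concrete, here is a hypothetical Python sketch of deriving subscriptions from handler declarations, the decorator equivalent of the macro idea. `on`, `subscriptions`, `route`, and `RegistrationProcess` are illustrative names, not Commanded's API. The process manager stays a plain class whose handlers can be called directly in tests; the routing is layered on afterwards.

```python
from dataclasses import dataclass
from typing import Callable, Dict


def on(event_type: type) -> Callable:
    """Hypothetical decorator: tags a method as the handler for event_type."""
    def mark(fn: Callable) -> Callable:
        fn._handles = event_type
        return fn
    return mark


def subscriptions(pm_class: type) -> Dict[type, str]:
    """Map each handled event type to its handler name by scanning @on tags."""
    return {
        member._handles: name
        for name, member in vars(pm_class).items()
        if hasattr(member, "_handles")
    }


@dataclass(frozen=True)
class OrderPlaced:
    order_id: str


@dataclass(frozen=True)
class PaymentReceived:
    order_id: str


class RegistrationProcess:
    # Plain class: handlers are testable with no router or subscriptions.
    @on(OrderPlaced)
    def handle_order_placed(self, event):
        return [("MakeReservation", event.order_id)]

    @on(PaymentReceived)
    def handle_payment(self, event):
        return [("ConfirmOrder", event.order_id)]


def route(pm, event) -> list:
    """Send an event to the matching handler, or return [] if none matches."""
    handler_name = subscriptions(type(pm)).get(type(event))
    return getattr(pm, handler_name)(event) if handler_name else []
```

The implicitness concern above applies here too: the subscription list exists only as a side effect of the decorators, so it is less obvious where it is defined than an explicit list would be.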

from commanded.

Keeping the aggregate roots and process managers in your application pure will make them easier to test and reason about. The impure side effects should be taken care of by the hosting process (implemented in Commanded). These are generic, fully unit tested, and resilient to failure.
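A minimal sketch of that split, assuming a pure process manager function and a host that owns the side effects. It is written in Python, not Commanded's implementation; `registration_pm`, `host_handle`, and the retry policy are hypothetical. The host dispatches each produced command, retries transient failures, and re-raises after the last attempt so that a supervisor could restart it.

```python
from typing import Callable, List


def registration_pm(event: dict) -> List[dict]:
    """Pure process manager: event in, commands out. No I/O here."""
    if event["type"] == "OrderPlaced":
        return [{"type": "MakeReservation", "order_id": event["order_id"]}]
    return []


def host_handle(event: dict,
                pm: Callable[[dict], List[dict]],
                dispatch: Callable[[dict], None],
                max_attempts: int = 3) -> List[dict]:
    """Impure host: runs the pure PM, then performs the side effects,
    retrying each failed dispatch up to max_attempts times.
    Returns the commands that were dispatched successfully."""
    dispatched = []
    for command in pm(event):
        for attempt in range(1, max_attempts + 1):
            try:
                dispatch(command)
                dispatched.append(command)
                break
            except Exception:
                if attempt == max_attempts:
                    raise  # give up; let a supervisor restart the host
    return dispatched
```

Only `host_handle` needs fakes (a flaky `dispatch`) to test; `registration_pm` is tested with plain values, exactly like an aggregate.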

from commanded.

Closing this issue. Please open a new issue with individual feature requests if you still have the requirements.

from commanded.
