Comments (4)

Hi shikongzxz,

The deviation in height is caused by voxelization and the lack of translation in the z-axis. This means that the height of an object, after aggregating all frames, may be larger than its true value.
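
To see how voxelization alone can inflate a height, here is a minimal sketch. It assumes a grid whose z-origin is at 0 and a hypothetical object spanning 0.1 m to 1.8 m; the function name and the numbers are illustrative and are not taken from the challenge code.

```python
import math


def voxelized_height(z_min, z_max, voxel_size, z_origin=0.0):
    """Return (voxel count, height) of the voxel column touched by [z_min, z_max]."""
    lowest = math.floor((z_min - z_origin) / voxel_size)
    highest = math.floor((z_max - z_origin) / voxel_size)
    n_voxels = highest - lowest + 1
    return n_voxels, n_voxels * voxel_size


# Hypothetical object: true height 1.7 m, voxel size 0.4 m as in the challenge.
n, h = voxelized_height(0.1, 1.8, 0.4)
print(f"{n} voxels -> {h:.1f} m (true height 1.7 m)")
```

Every voxel that the object touches counts as fully occupied, so the voxelized height can only match or exceed the true height, before any pose error from frame aggregation is added on top.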

Since we do not have access to the original data, we consider this deviation acceptable for this competition. However, we plan to work with the official nuScenes team to address the pose issue after the competition.

from cvpr2023-3d-occupancy-prediction.

Can you further explain why the height of an object is calculated using the number of pixels in an image? Objects of the same height in 3D space may appear at different vertical positions in the image due to projection.

For your question, please check the projection process. Note that the voxel ground truth is in the vehicle (ego) coordinate frame. The following pseudocode shows how to project a 3D point onto an image.

    reference_points_cam = torch.matmul(
        torch.matmul(lidar2img.to(torch.float32), ego2lidar.to(torch.float32)),
        points.to(torch.float32),
    )
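
The pseudocode above stops at the matrix product; getting pixel coordinates also requires a perspective division by depth. Below is a minimal NumPy sketch of the full chain. It assumes `ego2lidar` and `lidar2img` are 4x4 homogeneous matrices and that points are given as an (N, 3) array in the ego frame; the function name and the toy matrices are hypothetical, not part of the baseline.

```python
import numpy as np


def project_ego_points(points_ego, ego2lidar, lidar2img):
    """Project (N, 3) ego-frame points to pixel coordinates.

    Returns (N, 2) pixel coordinates and an (N,) boolean mask that is True
    for points in front of the camera (positive depth).
    """
    n = points_ego.shape[0]
    homo = np.concatenate([points_ego, np.ones((n, 1))], axis=1)  # (N, 4)
    cam = (lidar2img @ ego2lidar @ homo.T).T                      # (N, 4)
    depth = cam[:, 2]
    in_front = depth > 1e-5
    # Divide by depth; use 1.0 for invalid points to avoid division by zero.
    uv = cam[:, :2] / np.where(in_front, depth, 1.0)[:, None]
    return uv, in_front


# Toy setup (assumed values): identity ego2lidar, pinhole intrinsics only.
lidar2img = np.array([
    [1000.0, 0.0, 800.0, 0.0],
    [0.0, 1000.0, 450.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
])
ego2lidar = np.eye(4)
uv, in_front = project_ego_points(np.array([[1.0, 0.5, 10.0]]), ego2lidar, lidar2img)
print(uv, in_front)
```

Points with non-positive depth should be dropped using the returned mask before drawing, since their pixel coordinates are meaningless.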

There are also two other factors that can cause deviation between point clouds and image pixels:

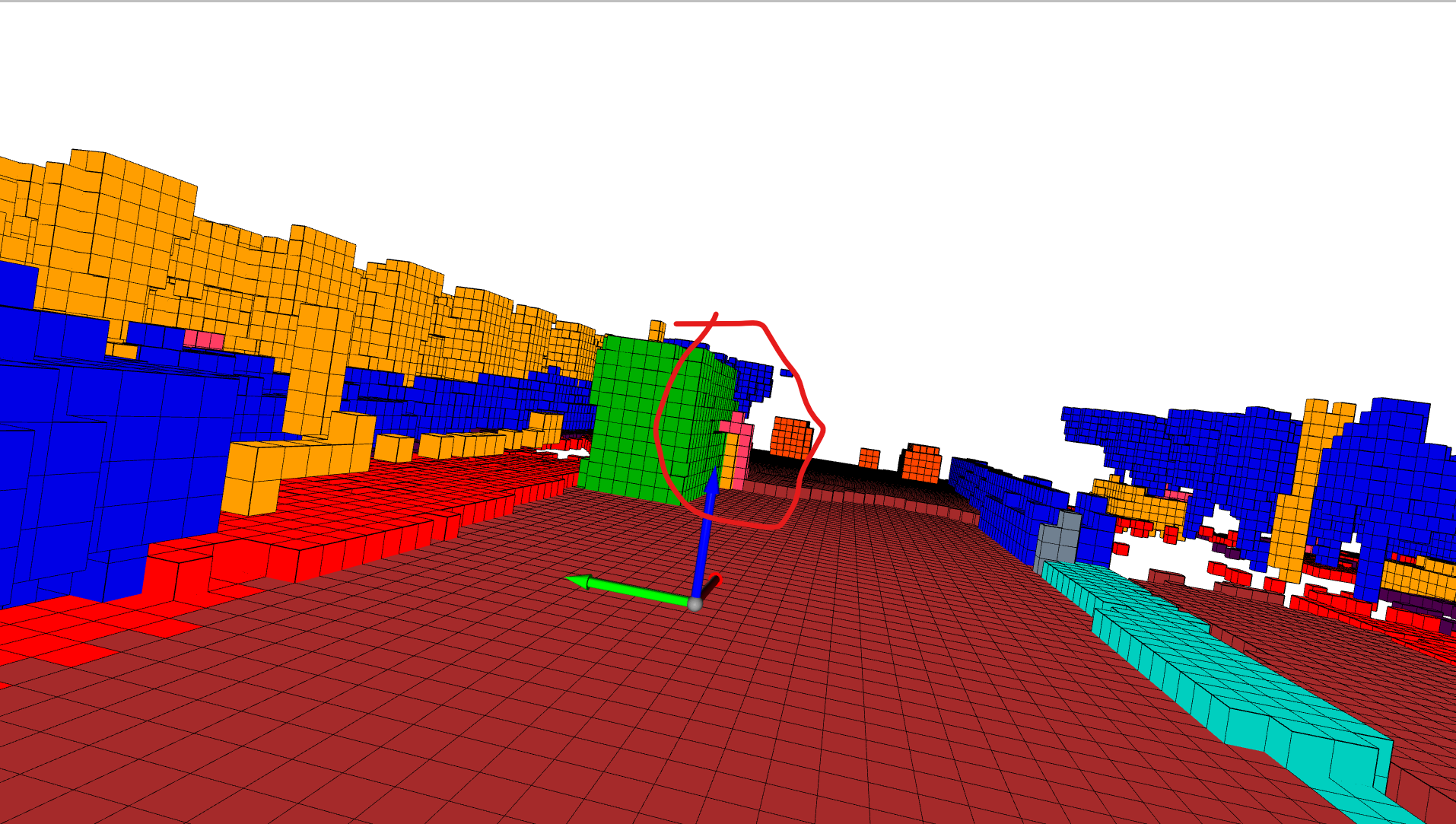

- Voxel size. In this challenge, we provide a voxel size of 0.4 m to reduce GPU memory requirements. As you mentioned, the voxel center does not coincide with the actual point location because of discretization error. With a smaller voxel size, point clouds and images align better, as shown in the figure.

- nuScenes (issues-721) lacks translation in the z-axis, which makes accurate 6D localization difficult and can lead to misaligned point clouds when they are accumulated over the entire scene.
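
As a rough illustration of the voxel-size effect, the sketch below snaps a point's height to its voxel center and converts the resulting error into an approximate pixel offset with the pinhole relation `focal * error / depth`. The focal length, depth and point height are assumed values chosen for illustration, and the helper names are hypothetical.

```python
import math


def snap_to_voxel_center(coord, voxel_size, origin=0.0):
    """Replace a coordinate by the center of the voxel that contains it."""
    index = math.floor((coord - origin) / voxel_size)
    return origin + (index + 0.5) * voxel_size


def pixel_offset(height_error_m, depth_m, focal_px):
    """Approximate vertical pixel shift caused by a metric height error."""
    return focal_px * height_error_m / depth_m


FOCAL_PX = 1260.0  # assumed focal length in pixels
DEPTH_M = 10.0     # assumed distance from the camera
Z_TRUE = 1.62      # assumed true height of the point in meters

for voxel_size in (0.4, 0.1):
    error = abs(snap_to_voxel_center(Z_TRUE, voxel_size) - Z_TRUE)
    shift = pixel_offset(error, DEPTH_M, FOCAL_PX)
    print(f"voxel {voxel_size} m: error {error:.2f} m, about {shift:.1f} px")
```

Under these assumptions the 0.4 m grid shifts the projected point by roughly 23 px, while a 0.1 m grid shifts it by roughly 4 px. The worst-case error along one axis is half the voxel size, so it shrinks linearly as the grid gets finer.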


I am not counting pixels, but the voxels that are projected onto the image. If you doubt that, you can visualize the GT file mini/gts/scene-0061/ca9a282c9e77460f8360f564131a8af5/labels.npz, which gives this image:

The person, shown as pink voxels, occupies 7 voxels.

P.S. I used the following code to project the center of the voxels to the image:

    import json
    import os

    import cv2
    import numpy as np
    from pyquaternion import Quaternion
    # transform_matrix is assumed to be the nuscenes-devkit helper.
    from nuscenes.utils.geometry_utils import transform_matrix

    # save_dir, data_root, voxel_size and the helpers get_color_map_nuscenes_seg,
    # voxel2points and get_horizontal_fov are defined elsewhere in the script (not shown).

    meta_file = "annotations.json"
    with open(meta_file, 'r') as f:
        meta = json.load(f)

    scene_name = meta["train_split"][0]
    scene_info = meta["scene_infos"][scene_name]
    color_map = get_color_map_nuscenes_seg()

    # Take the first frame of the scene.
    frame_info = next(iter(scene_info.values()))
    timestamp = frame_info["timestamp"]
    ts_save_path = os.path.join(save_dir, str(timestamp))
    os.makedirs(ts_save_path, exist_ok=True)

    # Load the voxel ground truth and convert occupied voxels to center points.
    voxel_gt_file = os.path.join(data_root, frame_info["gt_path"])
    voxel_gt = np.load(voxel_gt_file)
    voxel_semantics = voxel_gt["semantics"]
    mask_lidar = voxel_gt["mask_lidar"]
    mask_camera = voxel_gt["mask_camera"]
    grid_shape = voxel_semantics.shape

    voxel_center, voxel_semantics = voxel2points(
        voxel_semantics, [voxel_size] * 3)
    voxel_center = voxel_center.numpy()
    voxel_semantics = voxel_semantics.numpy()
    print(voxel_center.shape)
    print(voxel_semantics.shape)

    # Render each image view.
    for cam_name, cam_info in frame_info["camera_sensor"].items():
        img = cv2.imread("imgs/" + cam_info["img_path"])
        print(cam_info["img_path"])
        img_h, img_w = img.shape[:2]
        K = np.array(cam_info["intrinsics"])

        # Get the camera's horizontal FOV, if not given.
        fov_half = get_horizontal_fov(img_w, K)
        print(f"Half of fov of {cam_name} is {fov_half}")

        extrinsic = cam_info["extrinsic"]
        T_ego2camera = transform_matrix(
            np.array(extrinsic['translation']),
            Quaternion(extrinsic['rotation']),
            inverse=True,
        )

        # Ego frame -> camera frame, using homogeneous coordinates.
        pts = np.concatenate([voxel_center,
                              np.ones((voxel_center.shape[0], 1))], axis=-1)
        pts = np.squeeze(np.matmul(T_ego2camera[None, :, :],
                                   pts[:, :, None]), axis=-1)
        pts = pts[:, :3]

        # Keep only points inside the horizontal field of view.
        alpha = np.abs(np.arctan2(pts[:, 0], pts[:, 2]))
        view_mask = alpha < fov_half
        pts = pts[view_mask]
        view_semantics = voxel_semantics[view_mask]

        # Camera frame -> pixel coordinates (perspective division by depth).
        pts = np.squeeze(np.matmul(K[None, :, :],
                                   pts[:, :, None]), axis=-1)
        pts /= pts[:, [2]]
        pts = pts.astype(int)

        for i in range(pts.shape[0]):
            if 0 <= pts[i, 0] < img_w and 0 <= pts[i, 1] < img_h:
                # The color map is RGB; OpenCV expects BGR.
                color = [int(color_map[view_semantics[i], j]) for j in
                         range(2, -1, -1)]
                cv2.circle(img, (int(pts[i, 0]), int(pts[i, 1])), 1, color, -1)

        cv2.imwrite(os.path.join(ts_save_path, f"{cam_name}.jpg"), img)
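
The `get_horizontal_fov` helper is not shown in the post. Since its result is compared against an `arctan2` angle, it presumably returns half of the horizontal field of view in radians. One plausible version, assuming a pinhole camera with the principal point near the image center, could look like this (a guess at the helper, not the poster's actual code):

```python
import math


def get_horizontal_fov(img_w, K):
    """Half of the horizontal field of view in radians (pinhole model).

    K is a 3x3 intrinsic matrix (nested lists or a NumPy array); only the
    horizontal focal length K[0][0] is used.
    """
    fx = float(K[0][0])
    return math.atan2(img_w / 2.0, fx)


# Hypothetical intrinsics: fx = 800 px on a 1600 px wide image -> 45 degrees.
K = [[800.0, 0.0, 800.0], [0.0, 800.0, 450.0], [0.0, 0.0, 1.0]]
print(math.degrees(get_horizontal_fov(1600, K)))
```

Because the half angle stays below 90 degrees, the `alpha < fov_half` filter in the script above also discards every point behind the camera, which keeps the later division by depth safe.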

Thanks for replying. Looking forward to a better version of the data :)
