Comments (112)

There will be more details when a complete training example is released.

from detectron2.

Putting out a full example is not on our todo list for near term, but to unblock some users, what you need to train a Rotated Faster R-CNN is the following changes to config:

MODEL:
  ANCHOR_GENERATOR:
    NAME: RotatedAnchorGenerator
    ANGLES: [[-90,-60,-30,0,30,60,90]]
  PROPOSAL_GENERATOR:
    NAME: RRPN
  RPN:
    BBOX_REG_WEIGHTS: (1,1,1,1,1)
  ROI_BOX_HEAD:
    POOLER_TYPE: ROIAlignRotated
    BBOX_REG_WEIGHTS: (10,10,5,5,1)
  ROI_HEADS:
    NAME: RROIHeads

plus a custom mapper in the data loader to handle rotated boxes. Some notable changes needed in the mapper:

utils.transform_instance_annotations does not work for rotated boxes, and you need a custom version using transform.apply_rotated_box.

utils.annotations_to_instances needs to be replaced by utils.annotations_to_instances_rotated.

Plus a custom dataset that contains boxes with mode XYWHA.

from detectron2.

ok I got rotated bounding box working on DOTA dataset:

https://captain-whu.github.io/DOTA/dataset.html

Training fails after several epochs, but I'm fairly sure the cause is invalid ground-truth bounding boxes in my dataset.
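A quick way to look for such invalid boxes before training is a plain-Python check over the annotation dicts. This is only a sketch: the helper name find_invalid_rotated_boxes is made up for illustration, and it assumes each "bbox" is [cx, cy, w, h, angle] with the angle in degrees within [-180, 180].

```python
import math


def find_invalid_rotated_boxes(annotations):
    """Return (index, reason) pairs for boxes that look malformed.

    Assumption: each annotation carries "bbox" as
    [cx, cy, w, h, angle_in_degrees].
    """
    problems = []
    for i, anno in enumerate(annotations):
        box = anno.get("bbox", [])
        if len(box) != 5:
            problems.append((i, "expected 5 values, got %d" % len(box)))
            continue
        cx, cy, w, h, angle = box
        if not all(math.isfinite(v) for v in box):
            problems.append((i, "non-finite value"))
        elif w <= 0 or h <= 0:
            problems.append((i, "non-positive width or height"))
        elif not -180.0 <= angle <= 180.0:
            problems.append((i, "angle outside [-180, 180] degrees"))
    return problems
```

Running it over every record's "annotations" list and printing the non-empty results should narrow down which images to inspect.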

@ppwwyyxx do you suggest I first train rrpn and then rroi heads rather than end to end?

For inference, get_deltas() calls torch.div_(), which raised a PyTorch error. I got past this by changing the in-place version to torch.div(). Not sure if this is considered a bug.

I wrote a custom dataloader and data augmentation using imgaug.

Dataloader prepares the data in detectron2 input format (list of dictionaries)

As far as this issue goes, here are my configs that overwrite the base rcnn fpn config:

_BASE_: "./Base-RCNN-FPN.yaml"
MODEL:
  PIXEL_MEAN: [103.530, 116.280, 123.675] # Pixel mean of imagenet dataset R/G/B?
  WEIGHTS: "./model_final_a3ec72.pkl"
  MASK_ON: False
  RESNETS:
    DEPTH: 101
  PROPOSAL_GENERATOR:
    NAME: "RRPN"
  RPN:
    HEAD_NAME: "StandardRPNHead"
    BBOX_REG_WEIGHTS: (1.0, 1.0, 1.0, 1.0, 1.0)
  ANCHOR_GENERATOR:
    NAME: "RotatedAnchorGenerator"
    ANGLES: [[0, 30, 60, 90]]
    ASPECT_RATIOS: [[0.5, 1.0, 2.0]]
  ROI_HEADS:
    NAME: "RROIHeads"
    NUM_CLASSES: 15 # number of foreground classes
  ROI_BOX_HEAD:
    POOLER_TYPE: "ROIAlignRotated"
    BBOX_REG_WEIGHTS: (10.0, 10.0, 5.0, 5.0, 10.0)
SOLVER:
  STEPS: (210000, 250000)
  MAX_ITER: 10 # number of epochs (I modified the plain net train from iterations to epochs)
  CHECKPOINT_PERIOD: 1 # number of epochs

from detectron2.

For anyone else still working on a rotated implementation, I've cleaned up the very helpful work from @st7ma784 to be a bit simpler to run.

Let me know if there are any improvements.

Hope it is of help to someone.

https://colab.research.google.com/drive/1324pVWKpyscblSNUU1aqSDuDJb6liAvo?usp=sharing

from detectron2.

Thanks for your reply! I still have some questions:

1. What kinds of networks support rotated bounding boxes?

2. Which datasets have you tried for detection with rotated bounding boxes, how is mAP calculated during evaluation, and could you give me a baseline?

3. Could you share papers on the rotated bounding boxes used in detectron2?

from detectron2.

My training now works, cheers @ppwwyyxx!

For anyone else struggling, here's the cell that made a difference:

import copy
import os

import numpy as np
import torch

from detectron2 import model_zoo
from detectron2.config import get_cfg
from detectron2.data import DatasetCatalog, MetadataCatalog, build_detection_test_loader, build_detection_train_loader
from detectron2.data import detection_utils as utils
from detectron2.data import transforms as T
from detectron2.engine import DefaultTrainer, default_argument_parser, default_setup, hooks, launch
from detectron2.evaluation import DatasetEvaluators, RotatedCOCOEvaluator, inference_on_dataset
from detectron2.modeling import build_model
from detectron2.structures import BoxMode

cfg = get_cfg()
cfg.merge_from_file(model_zoo.get_config_file("COCO-Detection/faster_rcnn_R_50_FPN_3x.yaml"))
cfg.MODEL.WEIGHTS = model_zoo.get_checkpoint_url("COCO-Detection/faster_rcnn_R_50_FPN_3x.yaml")  # Let training initialize from model zoo
cfg.DATASETS.TRAIN = ("Train",)
cfg.DATASETS.TEST = ()
cfg.DATALOADER.NUM_WORKERS = 4
cfg.MODEL.MASK_ON = False
cfg.SOLVER.IMS_PER_BATCH = 4
cfg.SOLVER.CHECKPOINT_PERIOD = 2000
cfg.SOLVER.BASE_LR = 0.0005  # pick a good LR
cfg.SOLVER.MAX_ITER = 10000  # you may need to train longer for a practical dataset
cfg.MODEL.ROI_HEADS.NUM_CLASSES = ClassCount  # ClassCount: number of classes, defined elsewhere in the notebook
cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = 0.5  # set threshold for this model
cfg.MODEL.PROPOSAL_GENERATOR.NAME = "RRPN"
cfg.MODEL.RPN.HEAD_NAME = "StandardRPNHead"
cfg.MODEL.RPN.BBOX_REG_WEIGHTS = (10, 10, 5, 5, 1)
cfg.MODEL.ANCHOR_GENERATOR.NAME = "RotatedAnchorGenerator"
cfg.MODEL.ANCHOR_GENERATOR.ANGLES = [[-90, -60, -30, 0, 30, 60, 90]]
cfg.MODEL.ROI_HEADS.NAME = "RROIHeads"
cfg.MODEL.ROI_HEADS.BATCH_SIZE_PER_IMAGE = 256  # faster, and good enough for this toy dataset (default: 512)
cfg.MODEL.ROI_BOX_HEAD.POOLER_TYPE = "ROIAlignRotated"
cfg.MODEL.ROI_BOX_HEAD.BBOX_REG_WEIGHTS = (10.0, 10.0, 5.0, 5.0, 10.0)
os.makedirs(cfg.OUTPUT_DIR, exist_ok=True)


def my_transform_instance_annotations(annotation, transforms, image_size, *, keypoint_hflip_indices=None):
    # Apply the image transforms to the single rotated box of this annotation.
    annotation["bbox"] = transforms.apply_rotated_box(np.asarray([annotation["bbox"]]))[0]
    # The box has five values (cx, cy, w, h, angle), so the mode is XYWHA_ABS, not XYXY_ABS.
    annotation["bbox_mode"] = BoxMode.XYWHA_ABS
    return annotation


def mapper(dataset_dict):
    dataset_dict = copy.deepcopy(dataset_dict)  # it will be modified by code below
    image = utils.read_image(dataset_dict["file_name"], format="BGR")
    image, transforms = T.apply_transform_gens([T.Resize((800, 800))], image)
    dataset_dict["image"] = torch.as_tensor(image.transpose(2, 0, 1).astype("float32"))
    annos = [
        my_transform_instance_annotations(obj, transforms, image.shape[:2])
        for obj in dataset_dict.pop("annotations")
        if obj.get("iscrowd", 0) == 0
    ]
    instances = utils.annotations_to_instances_rotated(annos, image.shape[:2])
    dataset_dict["instances"] = utils.filter_empty_instances(instances)
    return dataset_dict


class MyTrainer(DefaultTrainer):
    @classmethod
    def build_evaluator(cls, cfg, dataset_name):
        output_folder = os.path.join(cfg.OUTPUT_DIR, "inference")
        evaluators = [RotatedCOCOEvaluator(dataset_name, cfg, True, output_folder)]
        return DatasetEvaluators(evaluators)

    @classmethod
    def build_train_loader(cls, cfg):
        return build_detection_train_loader(cfg, mapper=mapper)

EDIT: Changed the evaluator to RotatedCOCOEvaluator.

from detectron2.

Apologies for the delay.

Here's a stripped-back notebook from my dissertation. It pulls in data I've stored on my Google Drive, so you'll need to rewrite the dataloader before trying it.

https://colab.research.google.com/drive/1ijCf9LwJ3HbXMBFXi4CM6qd5IBXlh330?usp=sharing

from detectron2.

What do you mean by "oriention target"?

If you mean objects that are often not horizontal/vertically aligned, like texts, detectron2 supports rotated bounding boxes. But an example is not available now.

from detectron2.

@WeihongM , this should help: https://pytorch.org/tutorials/beginner/data_loading_tutorial.html

I'm not sure I can share the code, but basically you'll want a list of dictionaries where each dictionary corresponds to one training sample, with keys such as "image" and "instances". The image should be a PyTorch tensor and the instances entry is an Instances object. As part of debugging, you can step through the network and check the exact keys it expects. The design pattern makes stepping through the code a little confusing at first, as it jumps from place to place.

Angle representation is described in the comment here. When calculating the angle, you'll come across atan and atan2. Use atan2.
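To illustrate why atan2 is the safer choice, here is a small sketch. The function name edge_angle_degrees is invented for this example, and the sign convention (counter-clockwise positive, image y axis pointing down) is an assumption that you should check against the angle comment mentioned above.

```python
import math


def edge_angle_degrees(x1, y1, x2, y2):
    """Angle of the edge (x1, y1) -> (x2, y2) in degrees.

    Assumptions: counter-clockwise is positive and the image y axis
    points downward, hence y1 - y2 instead of y2 - y1.
    """
    return math.degrees(math.atan2(y1 - y2, x2 - x1))
```

atan2 takes the two differences separately, so it keeps the quadrant and copes with vertical edges. For an edge pointing up and to the left, for example from (0, 0) to (-1, -1), atan of the ratio gives -45 degrees, while atan2 gives 135 degrees.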

@MaximKuklin, I've trained it on the DOTA 1.0 train set, splitting the images into 256x256 squares and removing any samples that don't have any objects in them. Hardware is a 2080Ti and an 8-core CPU. It takes about a day or two for ~200k iterations (minibatch size 1). I'd train it for a week in total; it depends on your dataset and learning configuration.

I've also adapted this rotated faster rcnn to SCRDet and found it to perform better.

from detectron2.

Update:

I found another config that needs to be overridden:

MODEL.RPN.BBOX_REG_WEIGHTS: (1.0, 1.0, 1.0, 1.0, 1.0)

The default configs file doesn't have a section for RRPN, assuming it's intentional and we're supposed to override RPN configs

from detectron2.

Yup, now I'm taking a step back and getting horizontal bounding boxes to work first, found another config that needs to be changed for rotated boxes:

MODEL.ROI_BOX_HEAD.POOLER_TYPE = "ROIAlignRotated"

from detectron2.

Waiting for some updates.......

from detectron2.

Waiting for some updates too .......

from detectron2.

Hi, would like to follow up on this. Would you have a minimal working example on training and evaluating models using rotated bounding boxes? Thanks!

from detectron2.

We are almost crying out for it! A minimal working example is needed! Thanks!

from detectron2.

I'm attempting to do this as well, so far I've found these configs:

cfg.MODEL.PROPOSAL_GENERATOR.NAME = "RRPN"

cfg.MODEL.ANCHOR_GENERATOR.NAME = "RotatedAnchorGenerator"

Currently, the angles in anchors are at 90 degree intervals so i'll need to add more angles.

I'm using the faster_rcnn_R_101_FPN_3x pretrained network.

The issue now is using detectron2's dataloader with rotated bounding box annotations.

In the documentation it says that box must be a member of detectron2.structures.BoxMode which only supports horizontal bounding box annotations.

To get it to work now I'm thinking i'll have to use another way of loading data.

@ppwwyyxx do you think it will be available in days, weeks, or months?

from detectron2.

Yes a custom dataloader is needed to provide rotated boxes as input. We don't have a concrete timeline.

from detectron2.

@st7ma784 could you reformat your post with code fences (triple `) to be more readable?

Also, would you like to commit the rotated dataloader for the PR #996 ?

from detectron2.


@st7ma784, I think it should be annotation["bbox_mode"] = BoxMode.XYWHA_ABS in your my_transform_instance_annotations().

from detectron2.


Hello, does anyone know of annotation tools that can be used for rotated bounding boxes?

from detectron2.

State-of-the-art object detection networks depend on region proposal algorithms to hypothesize object locations. Advances like SPPnet and Fast R-CNN have reduced the running time of these detection networks, exposing region proposal computation as a bottleneck. In this work, we introduce a Region Proposal Network (RPN) that shares full-image convolutional features with the detection network, thus enabling nearly cost-free region proposals. An RPN is a fully-convolutional network that simultaneously predicts object bounds and objectness scores at each position. RPNs are trained end-to-end to generate high-quality region proposals, which are used by Fast R-CNN for detection. With a simple alternating optimization, RPN and Fast R-CNN can be trained to share convolutional features. For the very deep VGG-16 model, our detection system has a frame rate of 5fps (including all steps) on a GPU, while achieving state-of-the-art object detection accuracy on PASCAL VOC 2007 (73.2% mAP) and 2012 (70.4% mAP) using 300 proposals per image. The code will be released.

from detectron2.

@ppwwyyxx Any updates?

from detectron2.

@edwardchaos Thank you for working on it! Could you keep posting your progress here? That would be very helpful!

from detectron2.

@edwardchaos Hello, how did you prepare your data loader? Could you share the script here?

Also, how did you prepare the angle theta?

Thanks.

from detectron2.

Hi @edwardchaos, many thanks for your config! I hit the same problem with torch.div_(). It can be fixed by wrapping the in-place divisions in torch.no_grad(). This helps because we are working with the self.weights field, which carries gradient information.

with torch.no_grad():
    wx, wy, ww, wh, wa = self.weights
    dx, dy, dw, dh, da = torch.unbind(deltas, dim=1)
    dx.div_(wx)
    dy.div_(wy)
    dw.div_(ww)
    dh.div_(wh)
    da.div_(wa)

I found the solution here: https://discuss.pytorch.org/t/runtimeerror-output-nr-0-assert-failed-at-pytorch-torch-csrc-autograd-variable-cpp-196-please-report-a-bug-to-pytorch/39604/2

Also, could you please share how long it takes to train your model and how many images your dataset has?

from detectron2.

Are rotated bounding boxes available for Mask R-CNN too?

from detectron2.

Looking forward to the example in 2019. 😄

from detectron2.

Look forward to it too.

from detectron2.

Hello @edwardchaos, I used your config and wrote my own dataloader. I can start training R2CNN, but training crashes after several steps with CUDA out of memory:

[02/22 19:08:27 d2.utils.events]: eta: 0:53:32 iter: 16 total_loss: 2.584 loss_cls: 0.722 loss_box_reg: 0.004 loss_rpn_cls: 0.694 loss_rpn_loc: 1.209 time: 0.4244 data_time: 0.0099 lr: 0.000017 max_mem: 3168M

[02/22 19:08:27 d2.utils.events]: eta: 0:54:36 iter: 17 total_loss: 2.656 loss_cls: 0.709 loss_box_reg: 0.004 loss_rpn_cls: 0.694 loss_rpn_loc: 1.210 time: 0.4257 data_time: 0.0094 lr: 0.000018 max_mem: 3168M

[02/22 19:08:28 d2.utils.events]: eta: 0:53:31 iter: 18 total_loss: 2.584 loss_cls: 0.695 loss_box_reg: 0.004 loss_rpn_cls: 0.694 loss_rpn_loc: 1.211 time: 0.4230 data_time: 0.0090 lr: 0.000019 max_mem: 3168M

ERROR [02/22 19:08:28 d2.engine.train_loop]: Exception during training:

Traceback (most recent call last):

File "/home/1T/workspace/detectron2/tools/../detectron2/engine/train_loop.py", line 132, in train

self.run_step()

File "/home/1T/workspace/detectron2/tools/../detectron2/engine/train_loop.py", line 214, in run_step

loss_dict = self.model(data)

File "/home/kakaluote/anaconda3/envs/pytorch-py3-lastest/lib/python3.6/site-packages/torch/nn/modules/module.py", line 541, in call

result = self.forward(*input, **kwargs)

File "/home/1T/workspace/detectron2/tools/../detectron2/modeling/meta_arch/rcnn.py", line 123, in forward

proposals, proposal_losses = self.proposal_generator(images, features, gt_instances)

File "/home/kakaluote/anaconda3/envs/pytorch-py3-lastest/lib/python3.6/site-packages/torch/nn/modules/module.py", line 541, in call

result = self.forward(*input, **kwargs)

File "/home/1T/workspace/detectron2/tools/../detectron2/modeling/proposal_generator/rrpn.py", line 53, in forward

losses = outputs.losses()

File "/home/1T/workspace/detectron2/tools/../detectron2/modeling/proposal_generator/rpn_outputs.py", line 322, in losses

gt_objectness_logits, gt_anchor_deltas = self.get_ground_truth()

File "/home/1T/workspace/detectron2/tools/../detectron2/modeling/proposal_generator/rrpn_outputs.py", line 219, in get_ground_truth

matched_idxs, gt_objectness_logits_i = self.anchor_matcher(match_quality_matrix)

File "/home/1T/workspace/detectron2/tools/../detectron2/modeling/matcher.py", line 95, in call

self.set_low_quality_matches(match_labels, match_quality_matrix)

File "/home/1T/workspace/detectron2/tools/../detectron2/modeling/matcher.py", line 115, in set_low_quality_matches

match_quality_matrix == highest_quality_foreach_gt[:, None]

RuntimeError: CUDA out of memory. Tried to allocate 2.53 GiB (GPU 0; 7.76 GiB total capacity; 2.08 GiB already allocated; 1.21 GiB free; 1.42 GiB cached)

[02/22 19:08:28 d2.engine.hooks]: Overall training speed: 17 iterations in 0:00:07 (0.4509 s / it)

[02/22 19:08:28 d2.engine.hooks]: Total training time: 0:00:08 (0:00:00 on hooks)

Do you have any suggestions? I need your help, thanks.

from detectron2.

Try reducing your batch size, input size, or network size.

from detectron2.

Yes, the batch size is 1, the input is (600,), and the backbone is res50fpn.

Same crash...

Could the crash be caused by the input data?

I'm sure the angle is in (-180, 180).

from detectron2.

Hello @ppwwyyxx . I am using these configs:

cfg = get_cfg()

cfg.merge_from_file(model_zoo.get_config_file("COCO-Detection/faster_rcnn_R_50_FPN_3x.yaml"))

cfg.DATASETS.TRAIN = ("train",)

cfg.DATASETS.TEST = ("val",)

cfg.MODEL.WEIGHTS = model_zoo.get_checkpoint_url("COCO-Detection/faster_rcnn_R_50_FPN_3x.yaml") # Let training initialize from model zoo

cfg.MODEL.PROPOSAL_GENERATOR.NAME = "RRPN"

cfg.MODEL.RPN.HEAD_NAME = "StandardRPNHead"

cfg.MODEL.RPN.BBOX_REG_WEIGHTS = (1.0, 1.0, 1.0, 1.0, 1.0)

cfg.MODEL.ANCHOR_GENERATOR.NAME = "RotatedAnchorGenerator"

cfg.MODEL.ANCHOR_GENERATOR.ANGLES = [[0, 30, 60, 90]]

cfg.MODEL.ANCHOR_GENERATOR.ASPECT_RATIOS = [[0.5, 1.0, 2.0]]

cfg.MODEL.ROI_HEADS.NAME = "RROIHeads"

cfg.MODEL.ROI_HEADS.BATCH_SIZE_PER_IMAGE = 128 # faster, and good enough for this toy dataset (default: 512)

cfg.MODEL.ROI_HEADS.NUM_CLASSES = 1 # only has one class

cfg.MODEL.ROI_BOX_HEAD.POOLER_TYPE = "ROIAlignRotated"

cfg.MODEL.ROI_BOX_HEAD.BBOX_REG_WEIGHTS = (10.0, 10.0, 5.0, 5.0, 10.0)

cfg.SOLVER.MAX_ITER = 300 # 300 iterations seems good enough for this toy dataset; you may need to train longer for a practical dataset

but getting the following errors after running:

[02/24 17:55:30 d2.engine.train_loop]: Starting training from iteration 0
ERROR [02/24 17:55:33 d2.engine.train_loop]: Exception during training:
Traceback (most recent call last):
  File "/usr/local/lib/python3.6/dist-packages/detectron2/engine/train_loop.py", line 132, in train
    self.run_step()
  File "/usr/local/lib/python3.6/dist-packages/detectron2/engine/train_loop.py", line 214, in run_step
    loss_dict = self.model(data)
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 532, in __call__
    result = self.forward(*input, **kwargs)
  File "/usr/local/lib/python3.6/dist-packages/detectron2/modeling/meta_arch/rcnn.py", line 123, in forward
    proposals, proposal_losses = self.proposal_generator(images, features, gt_instances)
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 532, in __call__
    result = self.forward(*input, **kwargs)
  File "/usr/local/lib/python3.6/dist-packages/detectron2/modeling/proposal_generator/rrpn.py", line 53, in forward
    losses = outputs.losses()
  File "/usr/local/lib/python3.6/dist-packages/detectron2/modeling/proposal_generator/rpn_outputs.py", line 322, in losses
    gt_objectness_logits, gt_anchor_deltas = self._get_ground_truth()
  File "/usr/local/lib/python3.6/dist-packages/detectron2/modeling/proposal_generator/rrpn_outputs.py", line 234, in _get_ground_truth
    anchors_i.tensor, matched_gt_boxes.tensor
  File "/usr/local/lib/python3.6/dist-packages/detectron2/modeling/box_regression.py", line 149, in get_deltas
    target_boxes, dim=1
ValueError: not enough values to unpack (expected 5, got 4)
[02/24 17:55:33 d2.engine.hooks]: Total training time: 0:00:02 (0:00:00 on hooks)

I am not sure how to fix this. I assumed a custom dataloader is no longer needed, so I am not using one.

Can you please help? Thanks.

from detectron2.

Update:

I found that the number of objects in an image is related to memory usage.

In my dataset some images have 47 objects, and training always crashes on these images.

An image with 27 objects uses 4171M of GPU memory.

More info: the crash location is the same as before, shown below.

File "/home/1T/workspace/detectron2/tools/../detectron2/modeling/proposal_generator/rrpn_outputs.py", line 219, in get_ground_truth

matched_idxs, gt_objectness_logits_i = self.anchor_matcher(match_quality_matrix)

File "/home/1T/workspace/detectron2/tools/../detectron2/modeling/matcher.py", line 95, in call

self.set_low_quality_matches(match_labels, match_quality_matrix)

File "/home/1T/workspace/detectron2/tools/../detectron2/modeling/matcher.py", line 115, in set_low_quality_matches

match_quality_matrix == highest_quality_foreach_gt[:, None]

RuntimeError: CUDA out of memory. Tried to allocate 2.53 GiB (GPU 0; 7.76 GiB total capacity; 2.08 GiB already allocated; 1.21 GiB free; 1.42 GiB cached)

@ppwwyyxx Why is the number of targets related to memory usage?

from detectron2.

I am yet to try rotated bounding boxes, but hoping to soon. Good question by @kakaluote. In the normal faster-rcnn, the training batch sizes are fixed both for the RPN and the second stage (RCNN). So even if we have more GT boxes, and hence more foreground proposals / ROIs, there should be no proportional increase in memory allocated. At least that's what I would think.
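One possible factor, offered as a rough estimate rather than a diagnosis: the traceback above fails on a comparison over match_quality_matrix, which has one row per ground-truth box and one column per anchor. Tensors of that shape grow linearly with the number of ground-truth boxes even though the sampled batch sizes are fixed. The sketch below only does the arithmetic; the image size, feature strides, and anchors per location are illustrative assumptions, not values taken from the failing run.

```python
import math


def count_anchors(height, width, strides, anchors_per_location):
    """Total anchors over all feature levels for one image."""
    return sum(
        math.ceil(height / s) * math.ceil(width / s) * anchors_per_location
        for s in strides
    )


def match_matrix_bytes(num_gt, num_anchors, bytes_per_element=4):
    """Size of one float32 (num_gt x num_anchors) match-quality matrix."""
    return num_gt * num_anchors * bytes_per_element


# Hypothetical setup: 800x800 input, FPN strides 4..64,
# 7 angles x 3 aspect ratios = 21 anchors per location.
anchors = count_anchors(800, 800, (4, 8, 16, 32, 64), 7 * 3)
for num_gt in (27, 47):
    mib = match_matrix_bytes(num_gt, anchors) / 2**20
    print(f"{num_gt} boxes -> {mib:.0f} MiB per matrix-sized tensor")
```

Intermediate results derived from the matrix (comparisons, index lists) can multiply this figure, so fewer anchor angles or smaller inputs should reduce it.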

from detectron2.

@minhajul-arifin-badhon It looks like, when the model begins training, the boxes in your dataset only have 4 values where they should have 5. From what I can tell, RRCNN methods require an extra bbox value for rotation (I suspect, and I'm about to look and play around, that it's x, y, w, h, angle).

BoxMode.XYWHA_ABS is what you want?

from detectron2.

record = {}
record["file_name"] = filename
record["image_id"] = idx
record["height"] = 1024
record["width"] = 1024

objs = []
for row_index, row in df_group.iterrows():
    obj = {
        "bbox": [row['x_c'], row['y_c'], row['w'], row['h'], row['a']],
        "bbox_mode": BoxMode.XYWHA_ABS,
        "category_id": 0,
        "iscrowd": 0
    }
    objs.append(obj)
record["annotations"] = objs

@st7ma784 yes, I want to train with rotated bounding boxes. I have used the above code to get each of the annotations. I think I am using angles properly. I also tried to train with only two images and checked that the data I am providing is correct. Not sure what I am missing. Have you tried training with rotated bounding boxes?

from detectron2.

I haven't got it working yet (being optimistic here :-P). My guess is that

has something to do with it

from detectron2.

Please help me resolve this issue. I am using BoxMode.XYWHA_ABS.

ERROR [03/01 19:44:57 d2.engine.train_loop]: Exception during training:

Traceback (most recent call last):

File "/content/detectron2_repo/detectron2/engine/train_loop.py", line 132, in train

self.run_step()

File "/content/detectron2_repo/detectron2/engine/train_loop.py", line 215, in run_step

loss_dict = self.model(data)

File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 532, in call

result = self.forward(*input, **kwargs)

File "/content/detectron2_repo/detectron2/modeling/meta_arch/rcnn.py", line 124, in forward

proposals, proposal_losses = self.proposal_generator(images, features, gt_instances)

File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 532, in call

result = self.forward(*input, **kwargs)

File "/content/detectron2_repo/detectron2/modeling/proposal_generator/rrpn.py", line 53, in forward

losses = outputs.losses()

File "/content/detectron2_repo/detectron2/modeling/proposal_generator/rpn_outputs.py", line 327, in losses

gt_objectness_logits, gt_anchor_deltas = self._get_ground_truth()

File "/content/detectron2_repo/detectron2/modeling/proposal_generator/rrpn_outputs.py", line 238, in _get_ground_truth

anchors_i.tensor, matched_gt_boxes.tensor

File "/content/detectron2_repo/detectron2/modeling/box_regression.py", line 157, in get_deltas

target_boxes, dim=1

ValueError: not enough values to unpack (expected 5, got 4)

[03/01 19:44:57 d2.engine.hooks]: Total training time: 0:00:00 (0:00:00 on hooks)

ValueError Traceback (most recent call last)

in ()

132 trainer = DefaultTrainer(cfg)

133 trainer.resume_or_load(resume=False)

--> 134 trainer.train()

156 target_ctr_x, target_ctr_y, target_widths, target_heights, target_angles = torch.unbind(

--> 157 target_boxes, dim=1

158 )

159

from detectron2.

so, interesting development.

print(DatasetCatalog.list())
DatasetCatalog.register("Train", returnTRAINRECORDS)
MetadataCatalog.get("Train").set(thing_classes=list(ClassesNames.keys()))
try:
    convert_to_coco_json("Train", ''.join("./output/"+"Train"+"_coco_format.json"))
    print("Succeeded in making Train_coco_format.json")
    MetadataCatalog.get("Train").set(json_file=''.join("./output/"+"Train"+"_coco_format.json"))
except:
    pass

This is what I'm using to load my dataset into a COCO-format JSON file, so that it can be used later in an evaluator. I'm suspicious that execution never reaches the print in the "try" section when the bbox mode is XYWHA_ABS. Might this be the cause of only getting 4 values when resolving deltas?
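A bare "except: pass" hides whatever went wrong inside the "try" section, so the suspicion above is hard to confirm. A minimal sketch of surfacing the error instead is shown here; try_export and its convert argument are hypothetical stand-ins for illustration (convert would be whatever conversion function the notebook calls), not part of detectron2.

```python
import logging
import traceback

logger = logging.getLogger("dataset_export")


def try_export(convert, dataset_name, output_path):
    """Run convert(dataset_name, output_path) and report failures.

    Returns True on success and False if the conversion raised.
    """
    try:
        convert(dataset_name, output_path)
    except Exception:
        # Log the full traceback so the real cause is visible.
        logger.error("Export of %r failed:\n%s", dataset_name, traceback.format_exc())
        return False
    return True
```

With the traceback in the log, it becomes clear whether the conversion rejects five-value boxes or fails for some unrelated reason.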

from detectron2.

With my own code to generate the data dicts, I am getting these dataset_dicts:

[{'annotations': [{'bbox': [465.1667, 696.8333, 757.0, 67.0, 0.0],

'bbox_mode': <BoxMode.XYWHA_ABS: 4>,

'category_id': 1,

'iscrowd': 0},

{'bbox': [238.4438, 263.116, 343.7455, 38.4476, 0.02],

'bbox_mode': <BoxMode.XYWHA_ABS: 4>,

'category_id': 0,

'iscrowd': 0}],

'file_name': '/content/gdrive/My Drive/app/detectro/images/ad7ab3c1-1e87-43e2-af06-c53ed76e9e82.jpg',

'height': 1010,

'image_id': 0,

'width': 898},

{'annotations': [{'bbox': [425.295, 612.7028, 247.3418, 25.9827, 3.021593],

'bbox_mode': <BoxMode.XYWHA_ABS: 4>,

'category_id': 1,

'iscrowd': 0},

{'bbox': [82.0213, 480.0, 115.0, 26.0, 2.991593],

'bbox_mode': <BoxMode.XYWHA_ABS: 4>,

'category_id': 0,

'iscrowd': 0}],

'file_name': '/content/gdrive/My Drive/app/detectro/images/fa981233-a7f2-4bb5-84b1-8f7a5cc06011.jpg',

'height': 812,

'image_id': 1,

'width': 575},

Then I register the dataset with the code below. Is it correct?

from detectron2.data import DatasetCatalog, MetadataCatalog

for d in ["train"]:
    DatasetCatalog.register("notic_" + d, lambda d=d: get_data_dicts(image_paths))
    MetadataCatalog.get("notic_" + d).set(thing_classes=classes)

from detectron2.

Guys, I've been working on broken RRPN and only now found this useful thread!

If you can help me review and implement the missing pieces in PR #996, I think we can finally make this work out of the box 🗃️

from detectron2.

@breznak Thanks for your work on this! I've been trying to get this workflow working too.

I'm not sure if this is the right place to discuss (or if it should be on your PR) but I have been running into a problem with your code. The error occurs when I am trying to train (I'm using some of the above config options as well as my own data loaded):

<ipython-input-13-381075003630> in <module>

1 os.makedirs(cfg.OUTPUT_DIR, exist_ok=True)

----> 2 trainer = DefaultTrainer(cfg)

3 trainer.resume_or_load(resume=False)

c:\users\rgooch\documents\detectron2_repo\detectron2\engine\defaults.py in __init__(self, cfg)

249 setup_logger()

250 # Assume these objects must be constructed in this order.

--> 251 model = self.build_model(cfg)

252 optimizer = self.build_optimizer(cfg, model)

253 data_loader = self.build_train_loader(cfg)

c:\users\rgooch\documents\detectron2_repo\detectron2\engine\defaults.py in build_model(cls, cfg)

395 Overwrite it if you'd like a different model.

396 """

--> 397 model = build_model(cfg)

398 logger = logging.getLogger(__name__)

399 logger.info("Model:\n{}".format(model))

c:\users\rgooch\documents\detectron2_repo\detectron2\modeling\meta_arch\build.py in build_model(cfg)

17 """

18 meta_arch = cfg.MODEL.META_ARCHITECTURE

---> 19 return META_ARCH_REGISTRY.get(meta_arch)(cfg)

c:\users\rgooch\documents\detectron2_repo\detectron2\modeling\meta_arch\rcnn.py in __init__(self, cfg)

32 self.device = torch.device(cfg.MODEL.DEVICE)

33 self.backbone = build_backbone(cfg)

---> 34 self.proposal_generator = build_proposal_generator(cfg, self.backbone.output_shape())

35 self.roi_heads = build_roi_heads(cfg, self.backbone.output_shape())

36 self.vis_period = cfg.VIS_PERIOD

c:\users\rgooch\documents\detectron2_repo\detectron2\modeling\proposal_generator\build.py in build_proposal_generator(cfg, input_shape)

22 return None

23

---> 24 return PROPOSAL_GENERATOR_REGISTRY.get(name)(cfg, input_shape)

c:\users\rgooch\documents\detectron2_repo\detectron2\modeling\proposal_generator\rrpn.py in __init__(self, cfg, input_shape)

26

27 def __init__(self, cfg, input_shape: Dict[str, ShapeSpec]):

---> 28 super(RRPN, self).__init__(cfg, input_shape)

29 # three lines below added for pull request 996

30 assert(isinstance(self.anchor_generator, RotatedAnchorGenerator)), \

c:\users\rgooch\documents\detectron2_repo\detectron2\modeling\proposal_generator\rpn.py in __init__(self, cfg, input_shape)

119 cfg, [input_shape[f] for f in self.in_features]

120 )

--> 121 self.box2box_transform = Box2BoxTransform(weights=cfg.MODEL.RPN.BBOX_REG_WEIGHTS)

122 self.anchor_matcher = Matcher(

123 cfg.MODEL.RPN.IOU_THRESHOLDS, cfg.MODEL.RPN.IOU_LABELS, allow_low_quality_matches=True

c:\users\rgooch\documents\detectron2_repo\detectron2\modeling\box_regression.py in __init__(self, weights, scale_clamp)

34 """

35 self.weights = weights

---> 36 assert(len(weights)==4),"Expecting a 4-elements tuple (for scaling (dx,dy,dw,dh))"

37 self.scale_clamp = scale_clamp

38

AssertionError: Expecting a 4-elements tuple (for scaling (dx,dy,dw,dh))

I suspect that the RRPN class in rrpn.py calls the __init__ of its parent RPN class, which constructs a Box2BoxTransform that expects 4 weights but receives the 5 weights configured for RRPN.

What do you think?
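
The suspicion above can be reproduced with a toy class hierarchy. This is a minimal sketch, not detectron2 code: the class names mirror the traceback, but the bodies are stand-ins, and the `FixedRPN`/`FixedRRPN` pair with its `_make_transform` hook is hypothetical. It only illustrates why a parent constructor that hard-codes a 4-weight transform fails when the subclass is configured with 5 weights.

```python
class Box2BoxTransform:
    """Toy stand-in: accepts exactly 4 weights (dx, dy, dw, dh)."""

    def __init__(self, weights):
        assert len(weights) == 4, "Expecting a 4-elements tuple (for scaling (dx,dy,dw,dh))"
        self.weights = weights


class Box2BoxTransformRotated:
    """Toy stand-in: accepts exactly 5 weights (dx, dy, dw, dh, da)."""

    def __init__(self, weights):
        assert len(weights) == 5, "Expecting a 5-elements tuple"
        self.weights = weights


class RPN:
    def __init__(self, weights):
        # The parent always builds the 4-weight transform.
        self.box2box_transform = Box2BoxTransform(weights)


class RRPN(RPN):
    def __init__(self, weights):
        super().__init__(weights)  # fails here when len(weights) == 5
        self.box2box_transform = Box2BoxTransformRotated(weights)


class FixedRPN:
    """Hypothetical variant: the transform is built through an overridable hook."""

    def __init__(self, weights):
        self.box2box_transform = self._make_transform(weights)

    def _make_transform(self, weights):
        return Box2BoxTransform(weights)


class FixedRRPN(FixedRPN):
    def _make_transform(self, weights):
        return Box2BoxTransformRotated(weights)


def try_build(cls, weights):
    """Return "ok" or the assertion message raised while constructing cls."""
    try:
        cls(weights)
        return "ok"
    except AssertionError as err:
        return f"AssertionError: {err}"


print(try_build(RRPN, (1, 1, 1, 1, 1)))
print(try_build(FixedRRPN, (1, 1, 1, 1, 1)))
```

If this matches what you see, the first thing to check is whether your checkout's RPN constructor still builds the 4-weight transform before RRPN gets a chance to replace it.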

from detectron2.

My training runs, but it does not seem to converge.

from detectron2.

@breznak sure. I'm a little new to GitHub beyond my own projects, so I'm not sure how (this is one of my first "real world" projects using GitHub).

from detectron2.

@kakaluote I found the same. With a very low LR (I was using 0.0005) there was no convergence after 6000 iterations; raising it to 0.005 helped massively, but I suspect it's also to do with the box regression weights giving the angle the lowest weight. I think that might depend on the object shapes in the dataset: if your objects are long and thin (I'm using ships), then the angle is far more important than the X/Y of a bbox proposal. Try changing cfg.MODEL.RPN.BBOX_REG_WEIGHTS or cfg.MODEL.ROI_BOX_HEAD.BBOX_REG_WEIGHTS back to (1,1,1,1,1).
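
To make the effect of those weights concrete, here is a minimal sketch of how a 5-element weight tuple scales rotated box regression targets. The formula is an assumption based on my understanding of detectron2's rotated box transform (center offsets normalized by anchor size, log size ratios, angle difference wrapped to [-180, 180) and converted to radians); check box_regression.py in your own checkout before relying on it. The function name `rotated_deltas` is hypothetical.

```python
import math


def rotated_deltas(src, tgt, weights):
    """Regression targets that map anchor `src` onto ground truth `tgt`.

    Boxes are (cx, cy, w, h, angle_in_degrees); weights are (wx, wy, ww, wh, wa).
    """
    sx, sy, sw, sh, sa = src
    tx, ty, tw, th, ta = tgt
    wx, wy, ww, wh, wa = weights

    dx = wx * (tx - sx) / sw
    dy = wy * (ty - sy) / sh
    dw = ww * math.log(tw / sw)
    dh = wh * math.log(th / sh)

    # Wrap the angle difference into [-180, 180) before scaling.
    da = (ta - sa + 180.0) % 360.0 - 180.0
    da = wa * math.radians(da)
    return (dx, dy, dw, dh, da)


anchor = (0.0, 0.0, 10.0, 10.0, 0.0)
target = (1.0, 0.0, 10.0, 10.0, 30.0)
for weights in [(1, 1, 1, 1, 1), (10, 10, 5, 5, 1), (10, 10, 5, 5, 10)]:
    print(weights, rotated_deltas(anchor, target, weights))
```

Under this assumption, (10,10,5,5,1) makes a one-pixel center error on a 10-pixel anchor count about as much as a 57-degree angle error, which is consistent with the intuition above that the angle is under-weighted for long, thin objects.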

from detectron2.

@st7ma784 you solved my training issue, thanks for the help :)

I used your custom mapper function and modified it as per your cfg params:

os.makedirs(cfg.OUTPUT_DIR, exist_ok=True)

trainer = MyTrainer(cfg)

trainer.resume_or_load(resume=False)

trainer.train()

Now my training has started:

[03/06 17:02:15 d2.utils.events]: eta: 1:31:35 iter: 799 total_loss: 0.204 loss_cls: 0.055 loss_box_reg: 0.057 loss_rpn_cls: 0.030 loss_rpn_loc: 0.042 time: 0.5960 data_time: 0.0080 lr: 0.000400 max_mem: 3370M

[03/06 17:02:28 d2.utils.events]: eta: 1:31:26 iter: 819 total_loss: 0.252 loss_cls: 0.071 loss_box_reg: 0.079 loss_rpn_cls: 0.032 loss_rpn_loc: 0.071 time: 0.5963 data_time: 0.0067 lr: 0.000410 max_mem: 3370M

[03/06 17:02:40 d2.utils.events]: eta: 1:31:17 iter: 839 total_loss: 0.307 loss_cls: 0.081 loss_box_reg: 0.097 loss_rpn_cls: 0.026 loss_rpn_loc: 0.073 time: 0.5965 data_time: 0.0089 lr: 0.000420 max_mem: 3370M

[03/06 17:02:52 d2.utils.events]: eta: 1:31:06 iter: 859 total_loss: 0.298 loss_cls: 0.094 loss_box_reg: 0.096 loss_rpn_cls: 0.027 loss_rpn_loc: 0.055 time: 0.5968 data_time: 0.0076 lr: 0.000430 max_mem: 3370M

from detectron2.

@kakaluote I found the same. With a very low LR (I was using 0.0005) there was no convergence after 6000 iterations; raising it to 0.005 helped massively, but I suspect it's also to do with the box regression weights giving the angle the lowest weight. I think that might depend on the object shapes in the dataset: if your objects are long and thin (I'm using ships), then the angle is far more important than the X/Y of a bbox proposal. Try changing cfg.MODEL.RPN.BBOX_REG_WEIGHTS or cfg.MODEL.ROI_BOX_HEAD.BBOX_REG_WEIGHTS back to (1,1,1,1,1).

Can I change both parameters to (1,1,1,1,1)?

from detectron2.

I presume so, still waiting for other tests in colab to run... also to check correct angles of BBOXes 👍

import random

import cv2
import numpy as np
from detectron2.structures import BoxMode
from detectron2.utils.visualizer import Visualizer
from google.colab.patches import cv2_imshow  # Colab-only display helper

dataset_dicts = returnTRAINRECORDS()  # poster's own loader returning detectron2 dataset dicts
print(ClassesTotals)  # poster's own per-class counts


class myVisualizer(Visualizer):
    def draw_dataset_dict(self, dic):
        annos = dic.get("annotations", None)
        if annos:
            if "segmentation" in annos[0]:
                masks = [x["segmentation"] for x in annos]
            else:
                masks = None
            if "keypoints" in annos[0]:
                keypts = [x["keypoints"] for x in annos]
                keypts = np.array(keypts).reshape(len(annos), -1, 3)
            else:
                keypts = None

            # Keep the boxes in XYWHA_ABS so the angle is drawn, instead of XYXY_ABS.
            boxes = [BoxMode.convert(x["bbox"], x["bbox_mode"], BoxMode.XYWHA_ABS) for x in annos]

            labels = [x["category_id"] for x in annos]
            names = self.metadata.get("thing_classes", None)
            if names:
                labels = [names[i] for i in labels]
            labels = [
                "{}".format(i) + ("|crowd" if a.get("iscrowd", 0) else "")
                for i, a in zip(labels, annos)
            ]
            self.overlay_instances(labels=labels, boxes=boxes, masks=masks, keypoints=keypts)

        sem_seg = dic.get("sem_seg", None)
        if sem_seg is None and "sem_seg_file_name" in dic:
            sem_seg = cv2.imread(dic["sem_seg_file_name"], cv2.IMREAD_GRAYSCALE)
        if sem_seg is not None:
            self.draw_sem_seg(sem_seg, area_threshold=0, alpha=0.5)
        return self.output


# Draw three random training records to check the annotations before training.
for d in random.sample(dataset_dicts, 3):
    e = dict(d)
    name = e.get("file_name")
    print(name)
    print(e.items())
    img = cv2.imread(name)
    visualizer = myVisualizer(img[:, :, ::-1], metadata={}, scale=1)
    vis = visualizer.draw_dataset_dict(e)
    cv2_imshow(vis.get_image()[:, :, ::-1])

from detectron2.

I presume so, still waiting for other tests in colab to run... also to check correct angles of BBOXes 👍

It's not working: I'm still getting straight boxes, not angled/rotated boxes, when predicting from a saved model. I think it should be annotation["bbox_mode"] = BoxMode.XYWHA_ABS in your my_transform_instance_annotations().

from detectron2.

If you look at how BoxMode converts: if you are using a pre-saved model, I'm presuming its output is naturally BoxMode.XYXY_ABS. Converting this to XYWHA doesn't make the box smaller; it simply makes it XYWH and appends a 0 for the angle. For reference, that code is used after I load my dataset so I can verify it's correct before training.
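
A minimal sketch of what that conversion amounts to, written in plain Python rather than through BoxMode.convert (which conversions your detectron2 version supports directly is something to check). The function name `xyxy_to_xywha` is hypothetical. The point is that an axis-aligned box carries no orientation, so the angle can only come out as 0.

```python
def xyxy_to_xywha(box):
    """Convert (x1, y1, x2, y2) to (cx, cy, w, h, angle) with angle fixed at 0."""
    x1, y1, x2, y2 = box
    w = x2 - x1
    h = y2 - y1
    # The rotated format stores the box center, not the top-left corner.
    return (x1 + w / 2.0, y1 + h / 2.0, w, h, 0.0)


print(xyxy_to_xywha((10, 20, 50, 40)))
```

So a model that was trained to predict axis-aligned boxes will keep producing "straight" boxes after conversion; the angle has to be learned from XYWHA annotations.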

So, do you know which pre-saved model has BoxMode XYWHA_ABS as its output, or do I have to train it from scratch?

from detectron2.

@st7ma784 Could you share the RotatedCOCOEvaluator?

from detectron2.

Thank you, the new version has RotatedCOCOEvaluator.

from detectron2.

Hi @st7ma784 ,

I don't think fvcore has transforms.apply_rotated_box(). Did you define this yourself?

Thanks

from detectron2.

Does anyone have rotated Faster R-CNN working well now? Waiting for updates.

from detectron2.

@st7ma784 Thanks for your update :)

So, in which annotation format should I prepare my custom dataset? bbox: x1, y1, x2, y2 or box: xc, yc, w, h, angle?

from detectron2.

@st7ma784 Can you share your rotated Mask R-CNN config settings? Much appreciated. ^_^

from detectron2.

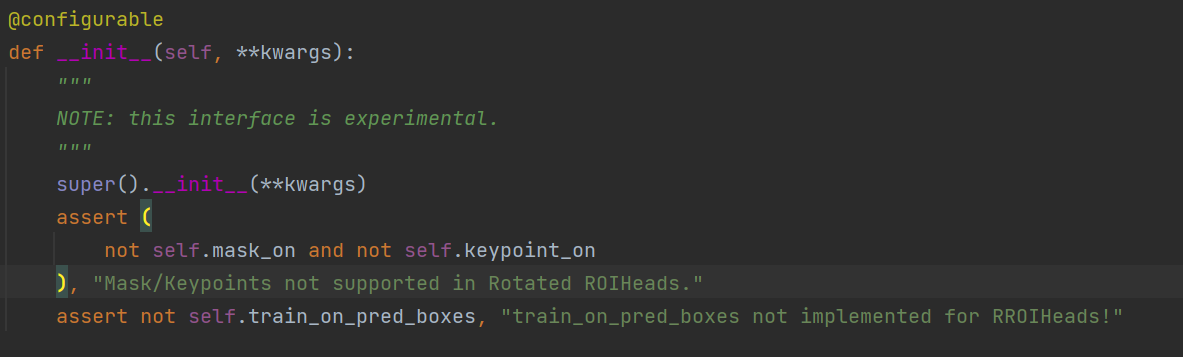

File "tools/rotated_train_net.py", line 109, in <module>

args=(args,),

File "/home/work/project/detectron2/detectron2/engine/launch.py", line 52, in launch

main_func(*args)

File "tools/rotated_train_net.py", line 91, in main

trainer = MyTrainer(cfg)

File "/home/work/project/detectron2/detectron2/engine/defaults.py", line 253, in __init__

model = self.build_model(cfg)

File "/home/work/project/detectron2/detectron2/engine/defaults.py", line 397, in build_model

model = build_model(cfg)

File "/home/work/project/detectron2/detectron2/modeling/meta_arch/build.py", line 19, in build_model

return META_ARCH_REGISTRY.get(meta_arch)(cfg)

File "/home/work/project/detectron2/detectron2/modeling/meta_arch/rcnn.py", line 35, in __init__

self.roi_heads = build_roi_heads(cfg, self.backbone.output_shape())

File "/home/work/project/detectron2/detectron2/modeling/roi_heads/roi_heads.py", line 42, in build_roi_heads

return ROI_HEADS_REGISTRY.get(name)(cfg, input_shape)

File "/home/work/project/detectron2/detectron2/modeling/roi_heads/rotated_fast_rcnn.py", line 172, in __init__

), "Mask/Keypoints not supported in Rotated ROIHeads."

AssertionError: Mask/Keypoints not supported in Rotated ROIHeads.

It seems rotated Mask R-CNN is not supported yet; only rotated Faster R-CNN is.

from detectron2.

@ppwwyyxx : can you give some guidance on adding support for mask head to rotated_fast_rcnn.py? Your hints above got me to the same point as @yh673025667.

from detectron2.

Hi @st7ma784 ,

I don't think fvcore has transforms.apply_rotated_box(). Did you define this yourself?

Thanks

I can't find transforms.apply_rotated_box() in detectron2 or fvcore either.

from detectron2.

@st7ma784 Hi, you set MASK_ON to False, which means your work is still based on rotated Faster R-CNN, not rotated Mask R-CNN.

from detectron2.

Ah, squints at issue title

Have I indeed :-P ?

from detectron2.

@st7ma784 Why are you using the pre-trained weight cfg.MODEL.WEIGHTS = model_zoo.get_checkpoint_url("COCO-Detection/faster_rcnn_R_50_FPN_3x.yaml")

And use annotation["bbox_mode"] = BoxMode.XYWHA_ABS ?

Were you able to accurately predict the ship orientation and localisation?

from detectron2.

As a quirk of Colab, you aren't guaranteed which GPU you get, and sessions are limited to 12 hours. Using pretrained weights therefore speeds up training massively (quite a few papers have been written about the first chunk of training being largely about segmentation over RPN). For the dataset I had, XYWHA was the best option, but it's also the only mode with angular data, so I suspect it's the only one that works with the RRPN. (You can convert back to XYXY easily, but BoxMode.convert isn't able to convert the other way.)

And yes, pretty accurately. I was achieving about a 50% AP, which for a <1000 dataset spread unevenly across 20 classes is pretty good

from detectron2.

@st7ma784 Thanks for your work.

1 - You can use colab pro for 10 usd a month. Higher GPU and more than 24H without disconnection.

2 - I wasn't getting the fact your pre-trained model is in XYXY while your training data is in XYWHA.

3 - Are X,Y the center of the box in XYWHA ?

4 - I decided to train from scratch.

5 - It keeps crashing sometimes even with the pre-trained weight.

6 - I got a few trainings without crashing, but the boxes are oversized and outside the image.

7 - I'm using opencv to convert the inference back to normal bbox:

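import cv2
import numpy as np

d = get_data()[500]  # get_data() is my own dataset loader
im_path = d["file_name"]
im = cv2.imread(im_path)
outputs = predictor(im)

# Each predicted box is (cx, cy, w, h, angle in degrees).
bbox = outputs["instances"].pred_boxes.to("cpu").tensor.numpy().astype(float)
cx, cy, w, h, a = bbox[0]

# Get the four corners of the rotated rectangle from OpenCV.
rect = ((cx, cy), (w, h), a)
box = cv2.boxPoints(rect)
box = box.astype(int)  # np.int0 was removed in NumPy 2.0

# The enclosing axis-aligned box is the min/max of the corner coordinates.
lx, ly = box.T
x1, x2 = lx.min(), lx.max()
y1, y2 = ly.min(), ly.max()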

##############

Training Config

##############

cfg = get_cfg()

cfg.merge_from_file(model_zoo.get_config_file("Base-RCNN-FPN.yaml"))

cfg.DATASETS.TRAIN = ([dataset_name])

cfg.DATASETS.TEST = ()

cfg.MODEL.MASK_ON=False

cfg.MODEL.KEYPOINTS_ON=False

mr,mg,mb = 243.59909935767192 ,243.60688569955659, 243.61098590081198

cfg.MODEL.PIXEL_MEAN = [mr,mg,mb]

cfg.MODEL.PIXEL_STD = [1.0, 1.0, 1.0]

cfg.MODEL.RESNETS.STRIDE_IN_1X1= False

cfg.MODEL.RESNETS.NUM_GROUPS= 32

cfg.MODEL.RESNETS.WIDTH_PER_GROUP= 8

cfg.MODEL.RESNETS.DEPTH = 101

cfg.SOLVER.IMS_PER_BATCH = 2

cfg.SOLVER.CHECKPOINT_PERIOD=2000

cfg.SOLVER.BASE_LR = 0.0025 # pick a good LR

cfg.SOLVER.MAX_ITER= 5000 #66000

cfg.SOLVER.GAMMA = 0.1

#The iteration number to decrease learning rate by GAMMA.

cfg.SOLVER.STEPS= (1000, 4000)

cfg.MODEL.ROI_HEADS.NUM_CLASSES =6

cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = 0.5 # set threshold for this model

cfg.DATALOADER.NUM_WORKERS = 2 # Number of data loading threads

cfg.DATALOADER.SAMPLER_TRAIN= "RepeatFactorTrainingSampler" # Options: TrainingSampler, RepeatFactorTrainingSampler

cfg.DATALOADER.REPEAT_THRESHOLD=0.01 # Repeat threshold for RepeatFactorTrainingSampler

cfg.DATALOADER.FILTER_EMPTY_ANNOTATIONS = True # if True, the dataloader will filter out images that have no associated # annotations at train time.

cfg.MODEL.PROPOSAL_GENERATOR.NAME = "RRPN"

cfg.MODEL.RPN.HEAD_NAME = "StandardRPNHead"

cfg.MODEL.RPN.BBOX_REG_WEIGHTS = (1.0, 1.0, 1.0, 1.0,1.0) #10,10,5,5,1 #10,10,5,5,10

#cfg.MODEL.RPN.BOUNDARY_THRESH = 5

cfg.MODEL.RPN.IOU_THRESHOLDS = [0.5, 0.9]

cfg.MODEL.RPN.BATCH_SIZE_PER_IMAGE = 256

cfg.MODEL.ANCHOR_GENERATOR.NAME = "RotatedAnchorGenerator"

cfg.MODEL.ANCHOR_GENERATOR.ANGLES = [[-90,-60, -30, 0, 30,60, 90]]

cfg.MODEL.ROI_HEADS.NAME = "RROIHeads"

cfg.MODEL.ROI_HEADS.BATCH_SIZE_PER_IMAGE = 256

cfg.MODEL.ROI_BOX_HEAD.POOLER_TYPE = "ROIAlignRotated"

cfg.MODEL.ROI_BOX_HEAD.BBOX_REG_WEIGHTS = (1.0,1.0,1.0,1.0,1.0)

from detectron2.

not-so-fun fact: Colab Pro isnt available in the UK :-( (would have made stuff a lot easier)

The pretrained model speeds up the learning. For the feature extraction part of a Faster R-CNN network, the bbox format is somewhat irrelevant, as its output is then fed into the classification and region proposal layers. For the task I was looking at, some papers suggest a modified RRPN with feature extraction, as the base objects are relatively easy to identify within an image, but the classification and feature extraction is difficult.

I did have a lot of finicking with getting the XYWHA read in correctly: the XY are the center. It's why in my notebook I have a visualizer before the training as it helps ensure annotations are accurate.

from detectron2.

@st7ma784 Yes, your visualizer works perfectly. I'm looking into using it for inference too. Will update more on this. My boxes are lines of 1-pixel width with different orientations.

from detectron2.

sounds fun... If you've got a dataset like that, perhaps tools designed for edge-detection might work well?

I've spent the last day or so grappling with Kaggle so I can get a demo dataset read straight into the Colab environment and (eventually) share a fully working notebook.

from detectron2.

@st7ma784 It's lines classification and detection, with certain lines like dimension lines should not be included. While others should be detected and classified. The perfect model for me would be rotated bbox with keypoints. The Ship dataset I thought is an open dataset: https://www.kaggle.com/c/airbus-ship-detection/data?select=train_v2

It would be interesting to compete on the Airbus Ship Detection Challenge using Detectron2 :)

from detectron2.

It very much would be. Though I've been using the far smaller HRSC2016 Dataset, as my dissertation was less about exact detection and segmentation, rather than identifying the ships present. As such, I've been trying to investigate ways of accurately identifying ships if a training set only has a handful of samples. I.e submarines/smuggling vessels...

from detectron2.

@st7ma784 thank you very much for the colab code. Btw, for the evaluation part how did you generate the coco-format json file? because

convert_to_coco_json("Train",''.join("./output/"+"Train"+"_coco_format.json"))

This tries to convert BoxMode = XYWHA_ABS to BoxMode = XYWH_ABS in the convert_to_coco_dict(dataset_name) method in coco.py, which reports that the conversion is not supported. Could you give an idea of how you achieved that?

from detectron2.

ok I got rotated bounding box working on DOTA dataset:

https://captain-whu.github.io/DOTA/dataset.html

for training, after several epochs, it'll fail but I'm pretty sure the issue is with respect to invalid ground truth bounding boxes in my dataset.

@ppwwyyxx do you suggest I first train rrpn and then rroi heads rather than end to end?

For inference, get_deltas() calls torch.div_() which resulted in a pytorch error. I got past this by changing the in place version to torch.div(). Not sure if this is considered a bug.

I wrote a custom dataloader and data augmentation using imgaug.

Dataloader prepares the data in detectron2 input format (list of dictionaries)

As far as this issue goes, here are my configs that overwrite the base rcnn fpn config:

_BASE_: "./Base-RCNN-FPN.yaml"
MODEL:
  PIXEL_MEAN: [103.530, 116.280, 123.675] # Pixel mean of imagenet dataset R/G/B?
  WEIGHTS: "./model_final_a3ec72.pkl"
  MASK_ON: False
  RESNETS:
    DEPTH: 101
  PROPOSAL_GENERATOR:
    NAME: "RRPN"
  RPN:
    HEAD_NAME: "StandardRPNHead"
    BBOX_REG_WEIGHTS: (1.0, 1.0, 1.0, 1.0, 1.0)
  ANCHOR_GENERATOR:
    NAME: "RotatedAnchorGenerator"
    ANGLES: [[0, 30, 60, 90]]
    ASPECT_RATIOS: [[0.5, 1.0, 2.0]]
  ROI_HEADS:
    NAME: "RROIHeads"
    NUM_CLASSES: 15 # number of foreground classes
  ROI_BOX_HEAD:
    POOLER_TYPE: "ROIAlignRotated"
    BBOX_REG_WEIGHTS: (10.0, 10.0, 5.0, 5.0, 10.0)
SOLVER:
  STEPS: (210000, 250000)
  MAX_ITER: 10 # number of epochs ( I modified the plain net train from iterations to epochs)
  CHECKPOINT_PERIOD: 1 # number of epochs

Why does my evaluation always look like this when I use RetinaNet with rotated anchors?

| AP | AP50 | AP75 | APs | APm | APl |
|:------:|:------:|:------:|:------:|:------:|:------:|
| 0 | 0 | 0 | nan | nan | 0 |

from detectron2.

imgaug

How do you write the rotated box augmentation so the boxes follow the augmentation applied to the image, @edwardchaos?
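
As a starting point, here is a minimal geometric sketch of two augmentations applied directly to boxes in (cx, cy, w, h, angle) format. It does not use imgaug, and the function names are hypothetical. It assumes the angle is in degrees and that a mirror flip simply negates it, which holds for a rectangle regardless of which rotation direction counts as positive.

```python
def hflip_rotated_box(box, image_width):
    """Mirror a (cx, cy, w, h, angle) box about the vertical image axis."""
    cx, cy, w, h, angle = box
    # The center moves to the mirrored column; mirroring reverses the rotation direction.
    return (image_width - cx, cy, w, h, -angle)


def scale_rotated_box(box, factor):
    """Resize a box by a uniform factor (same factor for x and y)."""
    cx, cy, w, h, angle = box
    # A uniform scale keeps the orientation; non-uniform scaling would change the
    # angle and is deliberately not handled in this sketch.
    return (cx * factor, cy * factor, w * factor, h * factor, angle)


box = (10, 20, 8, 4, 30)
print(hflip_rotated_box(box, image_width=100))
print(scale_rotated_box(box, 2))
```

Whatever library produces the image augmentation, the same parameters (flip or not, scale factor) have to be applied to the boxes, and it is worth drawing a few augmented samples to confirm the angles still line up.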

from detectron2.

Hi @st7ma784,

First of all, thanks for sharing your work. I was following your old Colab notebook and also checked out the newer one. But, as @SujoyDU mentioned, the convert_to_coco_json("Train",''.join("./output/"+"Train"+"_coco_format.json")) call, when trying to convert to COCO JSON, throws NotImplementedError: Conversion from BoxMode 4 to 1 is not supported yet, which occurs because we are trying to convert BoxMode.XYWHA_ABS to BoxMode.XYWH_ABS. Did you also get a similar error? I am using the same dataset as you. @SujoyDU did you find a workaround? Any help would be appreciated. Thanks guys.

from detectron2.

Hi @karan-shr, I found a kind of workaround. Detectron2 supports converting XYWHA_ABS to XYXY_ABS, so you can do that first and then convert from XYXY_ABS to XYWH_ABS.
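
The two-step conversion can be sketched in plain Python as below. This is only an illustration of the geometry, with hypothetical function names; in practice you would go through BoxMode.convert twice. It assumes the XYXY box is the axis-aligned box enclosing the rotated rectangle, so the angle is lost and the result is generally larger than the original box.

```python
import math


def xywha_to_xyxy(box):
    """Axis-aligned box enclosing a rotated (cx, cy, w, h, angle_in_degrees) box."""
    cx, cy, w, h, angle = box
    theta = math.radians(angle)
    c, s = abs(math.cos(theta)), abs(math.sin(theta))
    # Width and height of the enclosing box after rotation.
    bw = c * w + s * h
    bh = s * w + c * h
    return (cx - bw / 2.0, cy - bh / 2.0, cx + bw / 2.0, cy + bh / 2.0)


def xyxy_to_xywh(box):
    """Convert (x1, y1, x2, y2) to COCO-style (x, y, w, h) with a top-left origin."""
    x1, y1, x2, y2 = box
    return (x1, y1, x2 - x1, y2 - y1)


def xywha_to_xywh(box):
    """Chain the two supported steps: XYWHA -> XYXY -> XYWH."""
    return xyxy_to_xywh(xywha_to_xyxy(box))


print(xywha_to_xywh((10, 10, 4, 2, 0)))
print(xywha_to_xywh((10, 10, 4, 2, 90)))
```

Keep in mind that evaluating on these converted boxes measures axis-aligned overlap only, not how well the angle was predicted.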

from detectron2.

Apologies for delay:

Here's a stripped-back notebook from my dissertation. It pulls in data I've stored on my Google Drive, so you'll need to rewrite the dataloader before trying it.

https://colab.research.google.com/drive/1ijCf9LwJ3HbXMBFXi4CM6qd5IBXlh330?usp=sharing

Hi @st7ma784, can I use rotated bbox with mask?

from detectron2.

We're happy to take a contribution under projects/RotatedFasterRCNN if anyone can help clean up the work done by our amazing community members.

from detectron2.

Do you mean that everything should be moved there (e.g. rrpn, rotated roi-head etc) to the project folder or only new additions (e.g. configs, example scripts etc)?

from detectron2.

I mean an example script and configs.

from detectron2.

I have not seen an update under projects/RotatedFasterRCNN, and I would like to contribute to it. What should I do?

from detectron2.

Thanks for all of you here sharing experience, but I still have some questions about the correct dataloader of rotated bounding boxes.

Now I can write a dataloader to process some datasets without rotated boxes because I can transform the labeled data into one list of dictionaries which is just like Coco annotation format, but I really don't know what should I do when facing rotated bounding boxes as I can't find one effective data format which can handle rotated boxes. Can anyone show me a dataloader example? Please help me!
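
One possible shape for such a record, as a minimal sketch. It builds the same list-of-dictionaries structure used for ordinary boxes, but with each "bbox" given as (cx, cy, w, h, angle) and a rotated box mode. The helper names are hypothetical, the constant 4 is taken from the "Conversion from BoxMode 4 to 1" error quoted in this thread (in real code use BoxMode.XYWHA_ABS), and wrapping angles into [-180, 180) degrees is an assumption about the expected range.

```python
XYWHA_ABS = 4  # assumption: stands in for detectron2's BoxMode.XYWHA_ABS


def normalize_angle(angle):
    """Wrap an angle in degrees into [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def make_record(file_name, image_id, height, width, boxes):
    """Build one dataset dict; `boxes` holds (cx, cy, w, h, angle, category_id) tuples."""
    annotations = []
    for cx, cy, w, h, angle, category_id in boxes:
        if w <= 0 or h <= 0:
            # Skip degenerate boxes; invalid ground truth can break training.
            continue
        annotations.append(
            {
                "bbox": [cx, cy, w, h, normalize_angle(angle)],
                "bbox_mode": XYWHA_ABS,
                "category_id": category_id,
            }
        )
    return {
        "file_name": file_name,
        "image_id": image_id,
        "height": height,
        "width": width,
        "annotations": annotations,
    }


record = make_record("ship_0001.png", 0, 100, 200, [(50, 40, 20, 10, 270, 3), (5, 5, 0, 10, 0, 1)])
print(record)
```

A function returning a list of such records is what gets registered as the dataset; the custom mapper discussed earlier in the thread then has to turn these annotations into rotated instances.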

from detectron2.

Hello, thank you all for your great contributions on rotated boxes. Can anybody explain the box regression weight settings (1,1,1,1,1), (10,10,5,5,10) and (10,10,5,5,1)? It would be best if you have run a controlled comparison. Any response is appreciated.

from detectron2.

Thanks for all of you here sharing experience, but I still have some questions about the correct dataloader of rotated bounding boxes.

Now I can write a dataloader to process some datasets without rotated boxes because I can transform the labeled data into one list of dictionaries which is just like Coco annotation format, but I really don't know what should I do when facing rotated bounding boxes as I can't find one effective data format which can handle rotated boxes. Can anyone show me a dataloader example? Please help me!

Hi, for my research dataset I mapped the rotated bounding boxes, with angles in the range [0, 90), to a new key "rbbox" alongside "bbox" in COCO-style annotations. To load the data I added extra_annotation_keys as below. The format of rbbox may differ depending on the application, the dataset, and detectron2's predefined format, so you might need some transformation work in the mapper to convert your data to the data format defined here.

DatasetCatalog.register(
    "balabala",
    lambda: load_coco_json(
        json_file, image_root, dataset_name, extra_annotation_keys=["rbbox"]
    ),
)

from detectron2.

Does detectron2 train an instance of the rotated box?

from detectron2.

Is anyone else getting multiple predicted boxes for a single object? How do I apply NMS to the final result?

from detectron2.

@st7ma784 thank you very much for the colab code. Btw, for the evaluation part how did you generate the coco-format json file? because

convert_to_coco_json("Train",''.join("./output/"+"Train"+"_coco_format.json"))

This tries to convert BoxMode = XYWHA_ABS to BoxMode = XYWH_ABS in the convert_to_coco_dict(dataset_name) method in coco.py, which reports that the conversion is not supported. Could you give an idea of how you achieved that?

This is still the case?

I think now it is supported. Isn't it?

from detectron2.

Are rotated bounding boxes available for Mask R-CNN too?

It seems it is not.

from detectron2.

@ppwwyyxx How do I annotate an image so that the JSON file contains the angle in bbox (x, y, w, h, a)? I generally use the VGG annotator and get bbox (x, y, w, h). Please let me know which annotation tool to use. Thanks.

from detectron2.

@ppwwyyxx I am getting this warning,

WARNING [10/23 12:15:30 d2.evaluation.coco_evaluation]: No predictions from the model!

[10/23 12:15:30 d2.engine.defaults]: Evaluation results for Test in csv format:

[10/23 12:15:30 d2.evaluation.testing]: copypaste: Task: bbox

[10/23 12:15:30 d2.evaluation.testing]: copypaste: AP,AP50,AP75,APs,APm,APl

[10/23 12:15:30 d2.evaluation.testing]: copypaste: nan,nan,nan,nan,nan,nan

I have used

cfg = get_cfg()

cfg.OUTPUT_DIR = os.path.join(dataset_path, 'output')

cfg.merge_from_file(model_zoo.get_config_file("COCO-Detection/faster_rcnn_R_50_FPN_3x.yaml"))

cfg.MODEL.WEIGHTS = model_zoo.get_checkpoint_url("COCO-Detection/faster_rcnn_R_50_FPN_3x.yaml") # Let training initialize from model zoo

cfg.DATASETS.TRAIN = (["Train"])

cfg.DATASETS.TEST = (["Test"])

cfg.MODEL.MASK_ON=False

cfg.MODEL.PROPOSAL_GENERATOR.NAME = "RRPN"

cfg.MODEL.RPN.HEAD_NAME = "StandardRPNHead"

cfg.MODEL.RPN.BBOX_REG_WEIGHTS = (10,10,5,5,1)

cfg.MODEL.ANCHOR_GENERATOR.NAME = "RotatedAnchorGenerator"

cfg.MODEL.ANCHOR_GENERATOR.ANGLES = [[-90,-60,-30,0,30,60,90]]

cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = 0.8

cfg.MODEL.ROI_HEADS.NAME = "RROIHeads"

cfg.MODEL.ROI_HEADS.BATCH_SIZE_PER_IMAGE = 512 #this is far lower than usual.

cfg.MODEL.ROI_HEADS.NUM_CLASSES =len(class_name_list)

cfg.MODEL.ROI_BOX_HEAD.POOLER_TYPE = "ROIAlignRotated"

cfg.MODEL.ROI_BOX_HEAD.BBOX_REG_WEIGHTS = (10,10,5,5,1)

cfg.MODEL.ROI_BOX_HEAD.NUM_CONV=4

cfg.MODEL.ROI_MASK_HEAD.NUM_CONV=8

cfg.SOLVER.IMS_PER_BATCH = 15 #can be up to 24 for a p100 (6 default)

cfg.SOLVER.CHECKPOINT_PERIOD=100

cfg.SOLVER.BASE_LR = 0.00125

cfg.SOLVER.GAMMA=0.5

#cfg.SOLVER.STEPS=[1000,2000,4000,8000, 12000]

cfg.SOLVER.MAX_ITER=500

trainer = MyTrainer(cfg)

trainer.resume_or_load(resume=True)

trainer.train()

Please reply

from detectron2.
