Comments (21)

Very happy it worked out - sort of.

I'm now starting to work on version 2.1 which will contain all the lessons I have learned so far on how to manage and parse Duplicati emails, in addition to a LOT of reporting enhancements. I invite you to follow @dupreport on Twitter or keep checking the Duplicati Forum page to see when beta and release versions will be available.

Good luck with your testing! Let me know if you find any other issues.

from dupreport.

mr-flibble, take a look at issue #18; I had a similar problem.

Try the 2.0.3_Issue_18 branch that HandyGuySoftware has made available and see if it works for your date format.

from dupreport.

It seems that HandyGuySoftware has given up on release 2.0.4 and is moving directly to version 2.1.

from dupreport.

Hello, thanks for the fast reply. I had not noticed the other branches.

Unfortunately, I have the same problem. I tried initdb and "dateformat = us" was added to the RC file, but if I change this value or delete this line, the behavior is the same.

Here is the end of the level 3 log:

EndTime: 5. 11. 2017 10:38:48

BeginTime: 5. 11. 2017 10:38:28

Duration: 00:00:19.7302375

Messages: [

Preventing removal of last fileset, use --allow-full-removal to allow removal ...,

No remote filesets were deleted,

removing file listed as Temporary: duplicati-bb4755e8c70e8422a8a79aba41ebfbe7e.dblock.zip.aes,

removing file listed as Temporary: duplicati-i939c924e87bd4bb0b85e07e9e9ee8278.dindex.zip.aes

]

Warnings: []

Errors: []

]

statusParts['failed']=[]

convert_date_time(5. 11. 2017 10:38:48)

from dupreport.

Dots? DOTS?? You can put dots in a date format??? Who knew? I really need to start travelling around the world more!

:-)

The Issue18 fix would not have fixed this problem because dR has no notion of searching for dots in a date spec (as you probably guessed by now). So, it's back to the drawing board. The good news is that I know how to fix it now so that dR can recognize a wide variety of date formats. If all goes well I should be able to post a new Issue18 update by the end of the day.
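A minimal sketch of separator-agnostic date parsing, not dR's actual code: the function name `parse_date` is hypothetical, and the format strings follow the `DD.MM.YYYY` style used later in this thread. It takes the field order from the format string and treats anything that is not a digit (dots, slashes, dashes, spaces) as a separator.

```python
import re
from datetime import date

def parse_date(text, fmt):
    """Parse a date such as '5. 11. 2017' using a layout such as 'DD.MM.YYYY'."""
    # Field order comes from the format string; any non-letter is a separator.
    fields = re.findall(r"[A-Za-z]+", fmt.upper())
    # Only the first three numbers are used, so a trailing time is ignored.
    numbers = [int(n) for n in re.findall(r"\d+", text)[:3]]
    if len(fields) != 3 or len(numbers) != 3:
        raise ValueError("cannot match %r against %r" % (text, fmt))
    # Map the first letter of each field ('D', 'M', 'Y') to its number.
    parts = dict(zip((f[0] for f in fields), numbers))
    return date(parts["Y"], parts["M"], parts["D"])

print(parse_date("5. 11. 2017 10:38:48", "DD.MM.YYYY"))
print(parse_date("11/05/2017", "MM/DD/YYYY"))
```

Both calls should give the same calendar day, because the separator characters play no part in the result.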

TowerBR, I wouldn't read too much into the change in version numbering targets. I'm just re-thinking how I number things going forward.

from dupreport.

:))))))) Thanks!

By the way, I can see 32 countries with dots in their date format: https://en.wikipedia.org/wiki/Date_format_by_country

Have a nice day!

from dupreport.

> TowerBR, I wouldn't read too much into the change in version numbering targets. I'm just re-thinking how I number things going forward.

Ok! ;-)

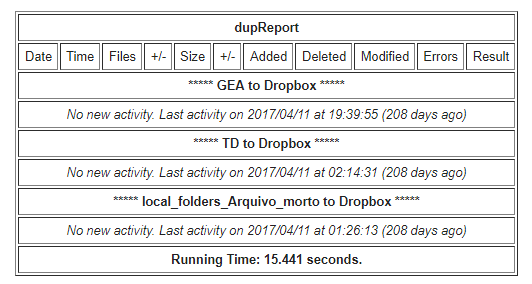

Since you are thinking of a new version, I have one more small piece of information; see the report screenshot. The "TD-Dropbox" job did not run "208 days ago", but yesterday (November 4th).

from dupreport.

TowerBR,

That's probably an artifact from the erroneous date recognition. Once I get the patch together we can see if that gets fixed.
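One reading that fits the numbers, offered as an assumption rather than a confirmed diagnosis: if the report was generated on 5 November 2017 and the job date 04/11/2017 was read month-first, the job would appear to have run on 11 April 2017. The arithmetic can be checked with the standard library:

```python
from datetime import date

report_day = date(2017, 11, 5)    # assumed: the report ran the day after the job
actual_run = date(2017, 11, 4)    # day-first reading of 04/11/2017
misread_run = date(2017, 4, 11)   # month-first reading of the same digits

print((report_day - actual_run).days)    # 1
print((report_day - misread_run).days)   # 208
```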

from dupreport.

OK, new code uploaded to Issue_18 branch. To test it, do the following:

- In your dupReport.rc file, delete the 'dateformat' option from the [main] section.

- Run the program. It will error out, telling you the RC file needs configuration.

- Edit the RC file, adjusting the new 'dateformat' option in [main]. The acceptable formats are in the README file included in the branch.

- Run the program again and let me know if it fixes the date parsing issues.
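For illustration, the edited [main] section might look like the excerpt embedded below. This is a sketch only: the option values are the ones reported later in this thread, and reading the file with `configparser` is an assumption about how such a file can be parsed, not a statement about how dR does it.

```python
import configparser

# Hypothetical excerpt of dupReport.rc; real files contain more options.
RC_TEXT = """
[main]
dateformat = DD.MM.YYYY
timeformat = HH:MM:SS
"""

config = configparser.ConfigParser()
config.read_string(RC_TEXT)

date_format = config.get("main", "dateformat")
time_format = config.get("main", "timeformat")
print(date_format, time_format)
```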

Hopefully this is the one. If not, .... well let's not think about that right now. :-)

from dupreport.

For my format (DD/MM/YYYY) it works perfectly!

But it needed one additional step: I had to delete the DB.

Before deleting the DB:

After:

I'll wait a few days, until the larger backups finish, to see whether these error messages disappear.

from dupreport.

Hello, thank you for the fix :)

With the new version and "dateformat = DD.MM.YYYY" in the config, it works! :)

EDIT: I have another issue :/

I tried another backup report (below) and I am getting this error:

Traceback (most recent call last):

File "D:\Portable Python 3.2.5.1\dupReport.py", line 1036, in <module>

create_summary_report()

File "D:\Portable Python 3.2.5.1\dupReport.py", line 786, in create_summary_report

d0 = datetime.date(int(then[0]),int(then[1]), int(then[2]))

ValueError: invalid literal for int() with base 10: '2000-01-01'

End of the log:

create_summary_report()

sqlStmt=[SELECT source, destination, lastDate, lastTime, lastFileCount, lastFileSize from backupsets order by source, destination]

create_email_text()

textTup=('Duplicati Backup Summary Report\n',) fmtTup=('^',)

create_email_text()

textTup=('Date', 'Time', 'Files', '+/-', 'Size', '+/-', 'Added', 'Deleted', 'Modified', 'Errors', 'Result') fmtTup=('11', '9', '>10', '10', '>18', '18', '>10', '>10', '>10', '>10', '<11')

bkSetRows=[[('is', 'data', '2000-01-01', '00:00:00', 0, 0)]]

Src=[is] Dest=[data] lastDate=[2000-01-01] lastTime=[00:00:00] lastFileCount=[0] lastFileSize=[0]

create_email_text()

textTup=('***** is to data *****',) fmtTup=('',)

sqlStmt=[SELECT endDate, endtime, examinedFiles, sizeOfExaminedFiles, addedFiles, deletedFiles, modifiedFiles, filesWithError, parsedResult, warnings, errors, messages FROM emails WHERE sourceComp='is' AND destComp='data' AND ((endDate > '2000-01-01') OR ((endDate == '2000-01-01') AND (endtime > '00:00:00'))) order by endDate, endTime]

emailRows=[[]]

nowTxt=[2017-11-07 19:34:02.329000] now=[['2017', '11', '07']] then=[['2000-01-01']]

Report:

DeletedFiles: 0

DeletedFolders: 0

ModifiedFiles: 28

ExaminedFiles: 435583

OpenedFiles: 28

AddedFiles: 0

SizeOfModifiedFiles: 134632

SizeOfAddedFiles: 0

SizeOfExaminedFiles: 809807584083

SizeOfOpenedFiles: 28017204

NotProcessedFiles: 0

AddedFolders: 0

TooLargeFiles: 0

FilesWithError: 0

ModifiedFolders: 0

ModifiedSymlinks: 0

AddedSymlinks: 0

DeletedSymlinks: 0

PartialBackup: False

Dryrun: False

MainOperation: Backup

ParsedResult: Success

VerboseOutput: False

VerboseErrors: False

EndTime: 05.11.2017 20:44:33

BeginTime: 05.11.2017 19:00:00

Duration: 01:44:33.8171886

Messages: [

No remote filesets were deleted,

Compacting not required

]

Warnings: []

Errors: []

Config:

timeformat = HH:MM:SS

dateformat = DD.MM.YYYY

Am I doing something wrong? Thanks

from dupreport.

mr-flibble,

I just uploaded new code into the Issue_18 branch. Please download that code and run the program to see if that helps. If that doesn't work, try again using the -i option to clear the database and start fresh.

Hoping this works! ;-)

from dupreport.

Hello, thanks for the update! :)

I ran the program with the -i option and then ran the script, and I get this error:

Traceback (most recent call last):

File "D:\Portable Python 3.2.5.1\dupReport.py", line 1047, in <module>

create_summary_report()

File "D:\Portable Python 3.2.5.1\dupReport.py", line 791, in create_summary_report

dateMatch = re.search(dtPat,then)

UnboundLocalError: local variable 'then' referenced before assignment

end of log

convert_date_time() dfmt=[DD.MM.YYYY] tfmt=[HH:MM:SS]

convert_date_time() dfmt=[DD.MM.YYYY] tfmt=[HH:MM:SS]

convert_date_time() dfmt=[DD.MM.YYYY] tfmt=[HH:MM:SS]

convert_date_time() dfmt=[DD.MM.YYYY] tfmt=[HH:MM:SS]

endSaveDate=[] endSaveTime=[] beginSaveDate=[] beginSaveTime=[]

build_email_sql_statement(()

messageId=[email protected] sourceComp=is destComp=data

sqlStmt=[INSERT INTO emails(messageId, sourceComp, destComp, emailDate, emailTime, deletedFiles, deletedFolders, modifiedFiles, examinedFiles, openedFiles, addedFiles, sizeOfModifiedFiles, sizeOfAddedFiles, sizeOfExaminedFiles, sizeOfOpenedFiles, notProcessedFiles, addedFolders, tooLargeFiles, filesWithError, modifiedFolders, modifiedSymlinks, addedSymlinks, deletedSymlinks, partialBackup, dryRun, mainOperation, parsedResult, verboseOutput, verboseErrors, endDate, endTime, beginDate, beginTime, duration, messages, warnings, errors) VALUES ('[email protected]', 'is', 'data', '2017-11-07', '19:24:04', 0, 0, 0, 0, 0, 0, 0, 0,0,0,0,0,0,0,0,0,0,0, '', '', '', '', '', '', '', '', '', '', '', "", "", "")]

sqlStmt=[INSERT INTO emails(messageId, sourceComp, destComp, emailDate, emailTime, deletedFiles, deletedFolders, modifiedFiles, examinedFiles, openedFiles, addedFiles, sizeOfModifiedFiles, sizeOfAddedFiles, sizeOfExaminedFiles, sizeOfOpenedFiles, notProcessedFiles, addedFolders, tooLargeFiles, filesWithError, modifiedFolders, modifiedSymlinks, addedSymlinks, deletedSymlinks, partialBackup, dryRun, mainOperation, parsedResult, verboseOutput, verboseErrors, endDate, endTime, beginDate, beginTime, duration, messages, warnings, errors) VALUES ('[email protected]', 'is', 'data', '2017-11-07', '19:24:04', 0, 0, 0, 0, 0, 0, 0, 0,0,0,0,0,0,0,0,0,0,0, '', '', '', '', '', '', '', '', '', '', '', "", "", "")]

create_summary_report()

sqlStmt=[SELECT source, destination, lastDate, lastTime, lastFileCount, lastFileSize from backupsets order by source, destination]

create_email_text()

textTup=('Duplicati Backup Summary Report\n',) fmtTup=('^',)

create_email_text()

textTup=('Date', 'Time', 'Files', '+/-', 'Size', '+/-', 'Added', 'Deleted', 'Modified', 'Errors', 'Result') fmtTup=('11', '9', '>10', '10', '>18', '18', '>10', '>10', '>10', '>10', '<11')

bkSetRows=[[('is', 'data', '2000-01-01', '00:00:00', 0, 0)]]

Src=[is] Dest=[data] lastDate=[2000-01-01] lastTime=[00:00:00] lastFileCount=[0] lastFileSize=[0]

create_email_text()

textTup=('***** is to data *****',) fmtTup=('',)

sqlStmt=[SELECT endDate, endtime, examinedFiles, sizeOfExaminedFiles, addedFiles, deletedFiles, modifiedFiles, filesWithError, parsedResult, warnings, errors, messages FROM emails WHERE sourceComp='is' AND destComp='data' AND ((endDate > '2000-01-01') OR ((endDate == '2000-01-01') AND (endtime > '00:00:00'))) order by endDate, endTime]

emailRows=[[]]

from dupreport.

Doh! That's the problem with late-nite debugging!

In line 791, replace 'then' with 'lastDate'. The full line should be:

dateMatch = re.search(dtPat,lastDate)

Clear out the DB with -i, then re-run and let me know what happens.
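The idea behind that line, as far as it can be read from the traceback, is to find the separator character in the stored date and split on it. A minimal sketch follows; the pattern used here is a hypothetical stand-in for `dtPat`, whose real value is not shown in this thread, and `split_stored_date` is an illustrative name.

```python
import re
from datetime import date

def split_stored_date(last_date):
    """Find the first non-digit character and split the date string on it."""
    # Hypothetical stand-in for dtPat: any single character that is not a digit.
    match = re.search(r"[^0-9]", last_date)
    if match is None:
        raise ValueError("no separator found in %r" % last_date)
    # match.group() is the same text as last_date[match.start():match.end()].
    separator = match.group()
    return separator, last_date.split(separator)

sep, then = split_stored_date("2000-01-01")
d0 = date(int(then[0]), int(then[1]), int(then[2]))
d1 = date(2017, 11, 9)
print(sep, then, (d1 - d0).days)
```

Splitting on the separator actually found in the string avoids the earlier failure, where the whole of '2000-01-01' reached int() as a single element.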

Thanks.

from dupreport.

sorry, no joy :)

(thank you and good night for tonight :-) )

Traceback (most recent call last):

File "D:\Portable Python 3.2.5.1\dupReport.py", line 1047, in <module>

create_summary_report()

File "D:\Portable Python 3.2.5.1\dupReport.py", line 792, in create_summary_report

dateSep = dt[dateMatch.regs[0][0]:dateMatch.regs[0][1]]

NameError: global name 'dt' is not defined

end of log

create_summary_report()

sqlStmt=[SELECT source, destination, lastDate, lastTime, lastFileCount, lastFileSize from backupsets order by source, destination]

create_email_text()

textTup=('Duplicati Backup Summary Report\n',) fmtTup=('^',)

create_email_text()

textTup=('Date', 'Time', 'Files', '+/-', 'Size', '+/-', 'Added', 'Deleted', 'Modified', 'Errors', 'Result') fmtTup=('11', '9', '>10', '10', '>18', '18', '>10', '>10', '>10', '>10', '<11')

bkSetRows=[[('is', 'data', '2000-01-01', '00:00:00', 0, 0)]]

Src=[is] Dest=[data] lastDate=[2000-01-01] lastTime=[00:00:00] lastFileCount=[0] lastFileSize=[0]

create_email_text()

textTup=('***** is to data *****',) fmtTup=('',)

sqlStmt=[SELECT endDate, endtime, examinedFiles, sizeOfExaminedFiles, addedFiles, deletedFiles, modifiedFiles, filesWithError, parsedResult, warnings, errors, messages FROM emails WHERE sourceComp='is' AND destComp='data' AND ((endDate > '2000-01-01') OR ((endDate == '2000-01-01') AND (endtime > '00:00:00'))) order by endDate, endTime]

emailRows=[[]]

from dupreport.

Night, night! Look for another attempt in the morning! :-)

from dupreport.

OK, new code uploaded. This one is 100% guaranteed to absolutely maybe work. ;-)

This time I'm not saying anything more predictive than "try it out." Good luck!

from dupreport.

Thanks! :)

no error this time, but something new :-D

The report mail is the same as in #21 (comment)

end of log:

[Subject=[Duplicati Backup report for is-data]

emailDate=[2017-11-07] emailTime=[19:24:04]

srcregex=[\w*-] destRegex=[-\w*]

source=[is] dest=[data] Date=[2017-11-07] Time=[19:24:04] Subject=[Duplicati Backup report for is-data]

db_search_srcdest_pair(is, data)

SELECT source, destination FROM backupsets WHERE source='is' AND destination='data'

INSERT INTO backupsets (source, destination, lastFileCount, lastFileSize, lastDate, lastTime) VALUES ('is', 'data', 0, 0, '2000-01-01', '00:00:00')

Pair [is/data] added to database

Body=[RGVsZXRlZEZpbGVzOiAwDQpEZWxldGVkRm9sZGVyczogMA0KTW9kaWZpZWRGaWxlczogMjgNCkV4YW1pbmVkRmlsZXM6IDQzNTU4Mw0KT3BlbmVkRmlsZXM6IDI4DQpBZGRlZEZpbGVzOiAwDQpTaXpl

T2ZNb2RpZmllZEZpbGVzOiAxMzQ2MzINClNpemVPZkFkZGVkRmlsZXM6IDANClNpemVPZkV4YW1p

bmVkRmlsZXM6IDgwOTgwNzU4NDA4Mw0KU2l6ZU9mT3BlbmVkRmlsZXM6IDI4MDE3MjA0DQpOb3RQ

cm9jZXNzZWRGaWxlczogMA0KQWRkZWRGb2xkZXJzOiAwDQpUb29MYXJnZUZpbGVzOiAwDQpGaWxl

c1dpdGhFcnJvcjogMA0KTW9kaWZpZWRGb2xkZXJzOiAwDQpNb2RpZmllZFN5bWxpbmtzOiAwDQpB

ZGRlZFN5bWxpbmtzOiAwDQpEZWxldGVkU3ltbGlua3M6IDANClBhcnRpYWxCYWNrdXA6IEZhbHNl

DQpEcnlydW46IEZhbHNlDQpNYWluT3BlcmF0aW9uOiBCYWNrdXANClBhcnNlZFJlc3VsdDogU3Vj

Y2Vzcw0KVmVyYm9zZU91dHB1dDogRmFsc2UNClZlcmJvc2VFcnJvcnM6IEZhbHNlDQpFbmRUaW1l

OiAwNS4xMS4yMDE3IDIwOjQ0OjMzDQpCZWdpblRpbWU6IDA1LjExLjIwMTcgMTk6MDA6MDANCkR1

cmF0aW9uOiAwMTo0NDozMy44MTcxODg2DQpNZXNzYWdlczogWw0KICAgIE5vIHJlbW90ZSBmaWxl

c2V0cyB3ZXJlIGRlbGV0ZWQsDQogICAgQ29tcGFjdGluZyBub3QgcmVxdWlyZWQNCl0NCldhcm5p

bmdzOiBbXQ0KRXJyb3JzOiBbXQ0KDQo=

]

statusParts['failed']=[]

convert_date_time() dfmt=[DD.MM.YYYY] tfmt=[HH:MM:SS]

convert_date_time() dfmt=[DD.MM.YYYY] tfmt=[HH:MM:SS]

convert_date_time() dfmt=[DD.MM.YYYY] tfmt=[HH:MM:SS]

convert_date_time() dfmt=[DD.MM.YYYY] tfmt=[HH:MM:SS]

endSaveDate=[] endSaveTime=[] beginSaveDate=[] beginSaveTime=[]

build_email_sql_statement(()

messageId=2d35830dcbc04176ab097bdc1c34d9a3@111 sourceComp=is destComp=data

sqlStmt=[INSERT INTO emails(messageId, sourceComp, destComp, emailDate, emailTime, deletedFiles, deletedFolders, modifiedFiles, examinedFiles, openedFiles, addedFiles, sizeOfModifiedFiles, sizeOfAddedFiles, sizeOfExaminedFiles, sizeOfOpenedFiles, notProcessedFiles, addedFolders, tooLargeFiles, filesWithError, modifiedFolders, modifiedSymlinks, addedSymlinks, deletedSymlinks, partialBackup, dryRun, mainOperation, parsedResult, verboseOutput, verboseErrors, endDate, endTime, beginDate, beginTime, duration, messages, warnings, errors) VALUES ('2d35830dcbc04176ab097bdc1c34d9a3@111', 'is', 'data', '2017-11-07', '19:24:04', 0, 0, 0, 0, 0, 0, 0, 0,0,0,0,0,0,0,0,0,0,0, '', '', '', '', '', '', '', '', '', '', '', "", "", "")]

sqlStmt=[INSERT INTO emails(messageId, sourceComp, destComp, emailDate, emailTime, deletedFiles, deletedFolders, modifiedFiles, examinedFiles, openedFiles, addedFiles, sizeOfModifiedFiles, sizeOfAddedFiles, sizeOfExaminedFiles, sizeOfOpenedFiles, notProcessedFiles, addedFolders, tooLargeFiles, filesWithError, modifiedFolders, modifiedSymlinks, addedSymlinks, deletedSymlinks, partialBackup, dryRun, mainOperation, parsedResult, verboseOutput, verboseErrors, endDate, endTime, beginDate, beginTime, duration, messages, warnings, errors) VALUES ('2d35830dcbc04176ab097bdc1c34d9a3@111', 'is', 'data', '2017-11-07', '19:24:04', 0, 0, 0, 0, 0, 0, 0, 0,0,0,0,0,0,0,0,0,0,0, '', '', '', '', '', '', '', '', '', '', '', "", "", "")]

create_summary_report()

sqlStmt=[SELECT source, destination, lastDate, lastTime, lastFileCount, lastFileSize from backupsets order by source, destination]

create_email_text()

textTup=('Duplicati Backup Summary Report\n',) fmtTup=('^',)

create_email_text()

textTup=('Date', 'Time', 'Files', '+/-', 'Size', '+/-', 'Added', 'Deleted', 'Modified', 'Errors', 'Result') fmtTup=('11', '9', '>10', '10', '>18', '18', '>10', '>10', '>10', '>10', '<11')

bkSetRows=[[('is', 'data', '2000-01-01', '00:00:00', 0, 0)]]

Src=[is] Dest=[data] lastDate=[2000-01-01] lastTime=[00:00:00] lastFileCount=[0] lastFileSize=[0]

create_email_text()

textTup=('***** is to data *****',) fmtTup=('',)

sqlStmt=[SELECT endDate, endtime, examinedFiles, sizeOfExaminedFiles, addedFiles, deletedFiles, modifiedFiles, filesWithError, parsedResult, warnings, errors, messages FROM emails WHERE sourceComp='is' AND destComp='data' AND ((endDate > '2000-01-01') OR ((endDate == '2000-01-01') AND (endtime > '00:00:00'))) order by endDate, endTime]

emailRows=[[]]

lastDate=[2000-01-01] start=[4] finish=[5] char=[-]

nowTxt=[2017-11-09 07:58:26.955000] now=[['2017', '11', '09']] then=[['2000', '01', '01']]

d0=[2000-01-01] d1=[2017-11-09]

create_email_text()

textTup=('No new activity. Last activity on 2000-01-01 at 00:00:00 (6522 days ago)', '') fmtTup=('', '')

create_email_text()

textTup=('Running Time: 1.939 seconds.',) fmtTup=('',)

Send_email()

txt=('Duplicati Backup Summary Report\n',) format=('^',)

txt=('Date', 'Time', 'Files', '+/-', 'Size', '+/-', 'Added', 'Deleted', 'Modified', 'Errors', 'Result') format=('11', '9', '>10', '10', '>18', '18', '>10', '>10', '>10', '>10', '<11')

txt2=Date fmt2=11

txt2=Time fmt2=9

txt2=Files fmt2=>10

txt2=+/- fmt2=10

txt2=Size fmt2=>18

txt2=+/- fmt2=18

txt2=Added fmt2=>10

txt2=Deleted fmt2=>10

txt2=Modified fmt2=>10

txt2=Errors fmt2=>10

txt2=Result fmt2=<11

txt=('***** is to data *****',) format=('',)

txt=('No new activity. Last activity on 2000-01-01 at 00:00:00 (6522 days ago)', '') format=('', '')

txt=('Running Time: 1.939 seconds.',) format=('',)

msgtext=Duplicati Backup Summary ReportDate Time Files+/- Size+/- Added Deleted Modified ErrorsResult

***** is to data *****No new activity. Last activity on 2000-01-01 at 00:00:00 (6522 days ago)

Running Time: 1.939 seconds.

msgHtml=

Duplicati Backup Summary Report

Date Time Files +/- Size +/- Added Deleted Modified Errors Result ***** is to data ***** No new activity. Last activity on 2000-01-01 at 00:00:00 (6522 days ago) Running Time: 1.939 seconds. SMTP Server=[<smtplib.SMTP object at 0x04FEE3F0>]

Program completed in 4.870 seconds. Exiting]

from dupreport.

Would it be possible to post the full log file here or elsewhere?

from dupreport.

Ah, never mind. I see the problem.

The program is functioning exactly as designed, just not as expected ;-)

The particular email in question (2d35830dcbc04176ab097bdc1c34d9a3@111) represents a failed run, or at least dupReport believes so. This is because dR sees the body of the email (Body=[...]) is not in standard Duplicati format (the program is seeing a string of random characters instead of Duplicati result fields). Because there are no status fields to parse in the body, none are being stored in the database.

Later, dR retrieves all the emails where the job end date ('endDate') is later than the 'last run' time as extracted from the email. Because dR couldn't parse the body of the email, it can't find an 'endDate' for that particular email and doesn't return that as part of the query. As a result, the SELECT query is returning an empty set (emailRows=[[]]). That's why the report is coming up empty.
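The effect can be reproduced in isolation. The sketch below assumes the database is SQLite (suggested by the SQL in the log, but not stated here) and uses a cut-down, hypothetical version of the emails table with made-up messageId values; only the WHERE clause is taken from the log.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE emails (messageId TEXT, sourceComp TEXT, destComp TEXT, "
    "endDate TEXT, endTime TEXT)"
)
# One row whose body could not be parsed (empty endDate) and one that parsed.
conn.execute("INSERT INTO emails VALUES ('unparsed', 'is', 'data', '', '')")
conn.execute(
    "INSERT INTO emails VALUES ('parsed', 'is', 'data', '2017-11-05', '20:44:33')"
)

query = """
SELECT messageId FROM emails
WHERE sourceComp='is' AND destComp='data'
  AND ((endDate > '2000-01-01')
       OR ((endDate == '2000-01-01') AND (endTime > '00:00:00')))
ORDER BY endDate, endTime
"""
rows = conn.execute(query).fetchall()
print(rows)  # [('parsed',)]
```

An empty string sorts before any date text, so the row with no endDate never satisfies the comparison.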

So, how to fix this?

If the email in question is accurately represented in the log file (Body=[...]), there's not much else to do, since there's nothing intelligent to parse. If, however, you are seeing an actual Duplicati job result report in your email instead of the gibberish found in the log, that means that dR is not properly decoding the email. That's the biggest problem I've been facing; many email systems encode & decode mail differently and I can't test them all. 'Standard' isn't standard at all!
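One thing worth checking: the Body=[...] text in the log looks like Base64 (its alphabet and the trailing '=' are typical), and decoding its first 20 characters with the standard library gives a readable Duplicati field. This is a sketch for inspecting a log excerpt by hand, not part of dR.

```python
import base64

# First 20 characters of the Body=[...] value from the log.
body_start = "RGVsZXRlZEZpbGVzOiAw"

decoded = base64.b64decode(body_start).decode("utf-8")
print(decoded)  # DeletedFiles: 0
```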

Here's something to try: in line 620, add a decode statement at the end of the line. The new line should read:

msgParts['body'] = mess.get_payload().decode('utf-8')

"Try it out..." ;-) See if that makes a difference, and let me know what happens.

I appreciate your patience and help in debugging this. We'll figure it out sooner or later ;-)

from dupreport.

Hello, thank you for helping me with this :)

I'm sorry, I should have mentioned that I forwarded the last mail via Outlook. I did not realize that this could be a problem. I wanted to test an older backup with a large size :(

The change to line 620 makes no difference.

If I send the mail from Gmail to Gmail instead, I get this error:

Traceback (most recent call last):

File "D:\Portable Python 3.2.5.1\dupReport.py", line 1040, in <module>

process_mailbox_imap(mailBox)

File "D:\Portable Python 3.2.5.1\dupReport.py", line 895, in process_mailbox_imap

mParts = process_message(msg) # Process message into parts

File "D:\Portable Python 3.2.5.1\dupReport.py", line 620, in process_message

msgParts['body'] = mess.get_payload().decode('utf-8')

AttributeError: 'str' object has no attribute 'decode'
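This error is expected on Python 3: for a non-multipart message, `get_payload()` returns a `str`, and `str` has no `decode` method. The standard `email` package can undo the transfer encoding itself via `get_payload(decode=True)`, which returns bytes. The sketch below builds a small hypothetical message (the headers are assumptions; the body is the first part of the Base64 text from the log) to show the difference. A forwarded mail may be multipart, in which case each part needs this treatment separately.

```python
import email

# Hypothetical single-part message with a Base64-encoded body.
RAW = (
    "Subject: Duplicati Backup report for is-data\n"
    "Content-Type: text/plain; charset=utf-8\n"
    "Content-Transfer-Encoding: base64\n"
    "\n"
    "RGVsZXRlZEZpbGVzOiAw\n"
)

mess = email.message_from_string(RAW)

raw_payload = mess.get_payload()               # str, still Base64 text
decoded_bytes = mess.get_payload(decode=True)  # bytes, transfer encoding removed
body = decoded_bytes.decode("utf-8")           # bytes do have .decode()

print(type(raw_payload).__name__, type(decoded_bytes).__name__)
print(body)
```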

But I think this is not an important issue; it was caused by my careless use of the mail client.

A simple workaround is to keep the old line 620 and send the mail "as RTF" from Outlook. Now I have this beautiful table :) 👍

So thank you! Now my real dupReport testing can begin! :-)

from dupreport.
