
Good question; this is more a matter of what helps empirically. For example, in some work annealing beta helps: https://arxiv.org/abs/1511.06349

It probably depends on the problem; it's easy to try.

However, leaving beta lower than one is technically incorrect if the goal is to have a well-defined loss function that is a lower bound on the evidence.
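For reference, here is the standard bound written for a single data point $x$, with encoder $q(z\mid x)$, decoder $p(x\mid z)$ and prior $p(z)$ (notation chosen here, not taken from the thread). Because the KL term is non-negative, down-weighting it with $\beta < 1$ can only raise the objective above the ELBO, so the $\beta$-weighted objective is no longer guaranteed to lower-bound $\log p(x)$:

$$
\begin{aligned}
\log p(x) &\ge \mathcal{L}(x) = \mathbb{E}_{q(z\mid x)}\left[\log p(x\mid z)\right] - \mathrm{KL}\left(q(z\mid x)\,\|\,p(z)\right), \\
\mathcal{L}_\beta(x) &= \mathbb{E}_{q(z\mid x)}\left[\log p(x\mid z)\right] - \beta\,\mathrm{KL}\left(q(z\mid x)\,\|\,p(z)\right), \\
\mathcal{L}_\beta(x) - \mathcal{L}(x) &= (1 - \beta)\,\mathrm{KL}\left(q(z\mid x)\,\|\,p(z)\right) \ge 0 \quad \text{for } \beta \le 1.
\end{aligned}
$$

Minimizing $-\mathcal{L}_\beta$ corresponds to minimizing "reconstruction loss + $\beta$ × KL" when the reconstruction loss is the negative log-likelihood; only $\beta = 1$ gives the negative ELBO itself.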

from variational-autoencoder.

Thank you @altosaar. When I use beta = 1 my ELBO does not decrease, but when beta is lower than one it does. Where do you think I have made a mistake? Do you think writing the loss function the way you wrote it would give different results? I wrote a simple KLD loss + reconstruction loss, but you wrote it in a different form.


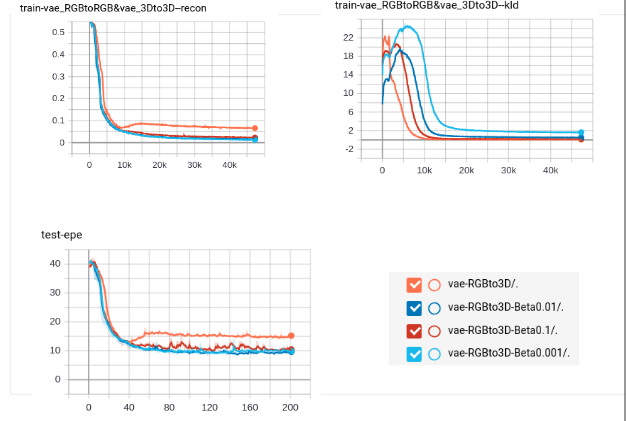

@altosaar, my plot shows that with beta = 1 my results are worse than with beta lower than 1.


The loss function I used is:

```python
from .loss import Loss
from .util import *


class BetaHLoss(Loss):
    """Beta-weighted VAE loss: reconstruction + BETA * anneal_w * KL."""

    @staticmethod
    def match(head_name):
        return head_name.lower() in ('beta-h', 'beta-vae-h')

    @classmethod
    def apply_args(cls, cfg):
        # Hyperparameters are stored as class attributes from the config
        cls.BETA = cfg.KL.BETA
        cls.ANNEAL = cfg.KL.ANNEAL_REG
        cls.REC_ID = cfg.REC.ID

    def __init__(self, cfg):
        super(BetaHLoss, self).__init__()
        BetaHLoss.apply_args(cfg)

    def __call__(self, **kwargs):
        x = kwargs['x']
        x_recon = kwargs['x_recon']
        latent_dist = kwargs['latent_dist']  # (mu, logvar)
        step = kwargs['step']

        recon_loss = reconstruction_loss(x, x_recon, self.REC_ID)
        total_kld, dim_wise_kld, mean_kld = kl_divergence(*latent_dist)
        anneal_w = anneal_reg(step, self.ANNEAL)

        # Loss to minimize; equals the negative ELBO only when BETA * anneal_w == 1
        beta_vae_loss = recon_loss + self.BETA * anneal_w * total_kld

        meta = {
            'elbo': beta_vae_loss,
            'recon': recon_loss,
            'kld': total_kld,
            'dim_wise_kld': dim_wise_kld,
            'mean_kld': mean_kld,
            'anneal_reg': anneal_w,
        }
        return beta_vae_loss, meta
```

```python
import numpy as np
import torch.nn.functional as F


# KL divergence
def kl_divergence(mu, logvar):
    """KL(N(mu, exp(logvar)) || N(0, I)), computed per latent dimension."""
    batch_size = mu.size(0)
    assert batch_size != 0
    klds = -0.5 * (1 + logvar - mu.pow(2) - logvar.exp())
    total_kld = klds.sum(1).mean(0, True)   # sum over latent dims, mean over batch
    dimension_wise_kld = klds.mean(0)       # mean over batch, one value per latent dim
    mean_kld = klds.mean(1).mean(0, True)   # mean over latent dims and batch
    return total_kld, dimension_wise_kld, mean_kld


# Reconstruction loss
def reconstruction_loss(x, x_recon, ID):
    """Reconstruction loss summed over all elements, then averaged over the batch."""
    batch_size = x.size(0)
    assert batch_size != 0
    if ID == 'bernoulli':
        # x_recon holds logits, not probabilities
        recon_loss = F.binary_cross_entropy_with_logits(x_recon, x, reduction='sum').div(batch_size)
    elif ID == 'gaussian':
        recon_loss = F.mse_loss(x_recon, x, reduction='sum').div(batch_size)
    else:
        raise ValueError(f"unknown reconstruction ID: {ID}")
    return recon_loss


# KL annealing weight
def anneal_reg(step, anneal):
    """Weight in [0, 1] applied to the KL term at the given training step."""
    k = anneal.K
    x0 = anneal.X0
    ID = anneal.ID
    if ID == 'logistic':
        return float(1 / (1 + np.exp(-k * (step - x0))))
    elif ID == 'linear':
        return min(1., max(0., step / x0))
    else:
        return 1.0
```
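The `klds` expression in `kl_divergence` is the closed-form KL between a diagonal Gaussian posterior and a standard normal prior, evaluated per latent dimension; `total_kld` sums it over dimensions, while `mean_kld` averages it. A minimal pure-Python check of that closed form against the general KL between two univariate Gaussians, for one hypothetical sample whose values are arbitrary:

```python
import math


def kl_closed_form(mu, logvar):
    """Per-dimension KL(N(mu, exp(logvar)) || N(0, 1)); same formula as kl_divergence."""
    return [-0.5 * (1 + lv - m ** 2 - math.exp(lv)) for m, lv in zip(mu, logvar)]


def kl_univariate(mu1, sigma1, mu2, sigma2):
    """General KL(N(mu1, sigma1^2) || N(mu2, sigma2^2)) for scalar Gaussians."""
    return (math.log(sigma2 / sigma1)
            + (sigma1 ** 2 + (mu1 - mu2) ** 2) / (2 * sigma2 ** 2)
            - 0.5)


# One hypothetical sample with three latent dimensions (arbitrary values).
mu = [0.0, 1.0, -0.5]
logvar = [0.0, -1.0, 0.7]

per_dim = kl_closed_form(mu, logvar)
reference = [kl_univariate(m, math.exp(0.5 * lv), 0.0, 1.0) for m, lv in zip(mu, logvar)]
for a, b in zip(per_dim, reference):
    assert abs(a - b) < 1e-12

total = sum(per_dim)          # like klds.sum(1) for this sample
mean = total / len(per_dim)   # like klds.mean(1) for this sample
print(per_dim, total, mean)
```

Only the sum over latent dimensions (`total_kld`) matches the KL term of the ELBO; `mean_kld` is smaller by a factor equal to the latent dimensionality, so it is only useful for monitoring.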


Do you think I would get different results if I wrote my loss function the way you did?


Thanks, that's interesting. Were you able to write the LaTeX for the ELBO? That will help us figure out if we're talking about the same thing.

> On Wednesday, September 11, 2019, Ivamcoder wrote:
> Do you think if I wrote my loss function like you, would I get different results?

This is my ELBO: `elbo = recon_loss + self.BETA * anneal_w * total_kld`
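With this definition the quantity logged as `'elbo'` depends on beta itself: for the same reconstruction loss and KL, a smaller beta yields a smaller logged value, so curves for beta = 0.01, 0.1 and 1 are not on the same scale. One possible way to compare runs, sketched here in plain Python, is to also log the unweighted negative ELBO `recon_loss + total_kld`. The helper names and numbers are hypothetical and only illustrate the arithmetic:

```python
def beta_weighted_loss(recon_loss, total_kld, beta, anneal_w=1.0):
    """The quantity logged as 'elbo' above: recon + beta * anneal_w * KL."""
    return recon_loss + beta * anneal_w * total_kld


def negative_elbo(recon_loss, total_kld):
    """Unweighted negative ELBO (beta = 1, no annealing): recon + KL."""
    return recon_loss + total_kld


# Hypothetical per-batch values, held fixed across beta to isolate its effect.
recon, kld = 100.0, 20.0
for beta in (0.01, 0.1, 1.0):
    logged = beta_weighted_loss(recon, kld, beta)
    reference = negative_elbo(recon, kld)
    print(f"beta={beta}: logged={logged:.2f}, negative ELBO={reference:.2f}")
```

In real runs the reconstruction and KL terms also change with beta; the point is only that the negative ELBO is comparable across runs, while the beta-weighted loss is not.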


Shouldn't beta be the thing being annealed here? Or are you annealing anneal_w as well?


Beta takes different constant values (beta = 0.01, 0.1, 1) and is multiplied by anneal_w, so in effect beta sets the final value of the KL weight: with beta = 1 the KLD factor rises from zero to 1, and with beta = 0.1 it rises from zero to 0.1. The figure of anneal_w I attached in an earlier post shows that it is a logistic annealing function.
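As described, beta only rescales the ceiling of the logistic schedule: the weight on the KL term rises from near zero toward beta rather than toward 1. A pure-Python sketch of that effective weight, mirroring the `'logistic'` branch of `anneal_reg`; the values of `K` and `X0` are hypothetical, since the thread does not give the config:

```python
import math


def logistic_anneal(step, k, x0):
    """Logistic weight in (0, 1), same formula as the 'logistic' branch of anneal_reg."""
    t = k * (step - x0)
    # Split on the sign of t so math.exp never receives a large positive argument
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def effective_kl_weight(step, beta, k, x0):
    """Factor that actually multiplies total_kld: beta * anneal_w."""
    return beta * logistic_anneal(step, k, x0)


# Hypothetical schedule parameters; the thread does not give the config values.
K, X0 = 0.0025, 2500
for beta in (0.01, 0.1, 1.0):
    weights = [effective_kl_weight(s, beta, K, X0) for s in (0, X0, 10 * X0)]
    print(beta, [round(w, 4) for w in weights])
```

The sign split is one way to avoid an `OverflowError` from `math.exp` at steps far below `X0` with a large `K`; NumPy's `np.exp`, as used in `anneal_reg`, instead returns `inf` with a runtime warning, and the weight still evaluates to 0.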
