Converting models from PaddleOCR to PytorchOCR

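- torch: 2.0.1
- paddle: 2.5.1
- OS: Windows 10, CPU
- The `ppocr` directory is only used for code conversion and will be deleted once all models have been converted
- `padiff` is the weight-conversion tool and will be deleted once all models have been converted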
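The weights here were converted with padiff. To illustrate what any Paddle to PyTorch weight conversion has to handle, here is a minimal sketch (not padiff's implementation) that works on a state dict whose values are already NumPy arrays. It covers two known layout differences: Paddle stores `nn.Linear` weights as `[in_features, out_features]` while PyTorch expects `[out_features, in_features]`, and Paddle names BatchNorm running statistics `_mean`/`_variance` where PyTorch uses `running_mean`/`running_var`. `convert_state_dict` and `linear_weight_keys` are hypothetical names; the Linear weights must be listed explicitly because a 2-D shape alone does not identify a Linear layer.

```python
import numpy as np

# Paddle BatchNorm buffer suffixes -> PyTorch equivalents.
BN_RENAMES = {"._mean": ".running_mean", "._variance": ".running_var"}


def convert_state_dict(paddle_sd, linear_weight_keys):
    """Map a Paddle state dict (name -> ndarray) to PyTorch naming and layout.

    linear_weight_keys: names of nn.Linear weights to transpose (hypothetical
    argument; it has to be derived from the model definition).
    """
    torch_sd = {}
    for name, value in paddle_sd.items():
        new_name = name
        # Rename BatchNorm running statistics.
        for old, new in BN_RENAMES.items():
            if new_name.endswith(old):
                new_name = new_name[: -len(old)] + new
        # Paddle Linear: [in, out]; PyTorch Linear: [out, in].
        if name in linear_weight_keys:
            value = value.T
        torch_sd[new_name] = np.ascontiguousarray(value)
    return torch_sd
```

To load the result, each array would be wrapped with `torch.from_numpy` and passed to `model.load_state_dict(...)`. PyTorch BatchNorm also has a `num_batches_tracked` buffer that Paddle lacks, so either `strict=False` or an explicit entry for it may be needed.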

Note: models not listed below have not been verified yet.

Model downloads

Baidu Netdisk: https://pan.baidu.com/s/17NVg9VSBmrDmbX5MmubZgQ?pwd=ppdz (extraction code: ppdz)

| Model | Aligned | Alignment error | Config |
|---|---|---|---|
| ch_PP-OCRv4_rec_distill | X | config mismatch | config |
| ch_PP-OCRv4_rec_teacher | Y | 1.4605024e-10 | config |
| ch_PP-OCRv4_rec_student | Y | 3.6277156e-06 | config |
| ch_PP-OCRv4_det_student | Y | 0 | config |
| ch_PP-OCRv4_det_teacher | Y | maps 7.811429e-07; cbn_maps 1.0471307e-06 | config |
| ch_PP-OCRv4_det_cml | Y | Student_res 0.0; Student2_res 0.0; Teacher_maps 1.1398747e-06; Teacher_cbn_maps 1.2791393e-06 | config |
| ch_PP-OCRv3_rec | Y | 4.615016e-11 | config |
| ch_PP-OCRv3_rec_distillation.yml | Y | Teacher_head_out_res 7.470646e-10; Student_head_out_res 4.615016e-11 | config |
| ch_PP-OCRv3_det_student | Y | 1.766314e-07 | config |
| ch_PP-OCRv3_det_cml | Y | Student_res 1.766314e-07; Student2_res 3.1212483e-07; Teacher_res 8.829421e-08 | config |
| ch_PP-OCRv3_det_dml | Y | ok | config |
| cls_mv3 | Y | 5.9604645e-08 | config |

| Model | Aligned | Alignment error | Config |
|---|---|---|---|
| rec_mv3_none_none_ctc | Y | 2.114354e-09 | config |
| rec_r34_vd_none_none_ctc | Y | 3.920279e-08 | config |
| rec_mv3_none_bilstm_ctc | Y | 1.1861777e-09 | config |
| rec_r34_vd_none_bilstm_ctc | Y | 1.9336952e-08 | config |
| rec_mv3_tps_bilstm_ctc | Y | 1.1886948e-09 | config |
| rec_r34_vd_tps_bilstm_ctc | N | 0.0035705192 | config |
| rec_mv3_tps_bilstm_att | Y | 1.8528418e-09 | config |
| rec_r34_vd_tps_bilstm_att | N | 0.0006942689 | config |
| rec_r31_sar | Y | 7.348353e-08 | config |
| rec_mtb_nrtr | N | res_0 8.64; res_1 0.13501492 | config |
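The tables do not say exactly how the alignment error is computed. A common approach, assumed here, is to feed the same input to the Paddle and PyTorch models and compare their outputs elementwise; this sketch reports both the mean and the maximum absolute difference. `alignment_error`, `is_aligned`, and the `atol=1e-5` tolerance are hypothetical (the tolerance was simply picked to separate the Y and N rows above).

```python
import numpy as np


def alignment_error(paddle_out, torch_out):
    """Elementwise comparison of two model outputs (assumed metric)."""
    a = np.asarray(paddle_out, dtype=np.float64)
    b = np.asarray(torch_out, dtype=np.float64)
    if a.shape != b.shape:
        raise ValueError(f"shape mismatch: {a.shape} vs {b.shape}")
    diff = np.abs(a - b)
    return {"mean_abs": float(diff.mean()), "max_abs": float(diff.max())}


def is_aligned(paddle_out, torch_out, atol=1e-5):
    """Treat outputs as aligned when the worst-case difference is within atol."""
    return alignment_error(paddle_out, torch_out)["max_abs"] <= atol
```

Using the maximum rather than the mean for the pass/fail decision keeps a single badly converted channel from being averaged away.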

功能性:

- 端到端推理

- det推理

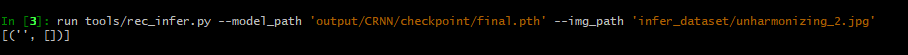

- rec推理

- cls推理

- 导出为onnx

- onnx推理

- tensorrt 推理

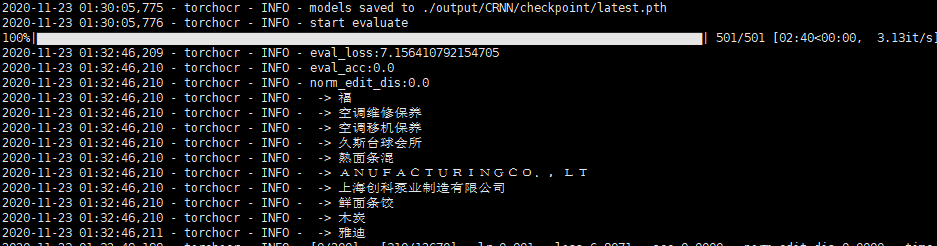

- 训练,评估,测试

Usage follows PaddleOCR.

```bash
# single GPU
CUDA_VISIBLE_DEVICES=0 python tools/train.py -c configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml
```

```bash
# multi-GPU
CUDA_VISIBLE_DEVICES=0,1,2,3 torchrun --nnodes=1 --nproc_per_node=4 tools/train.py -c configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml
```

Evaluation:

```bash
CUDA_VISIBLE_DEVICES=0 python tools/eval.py -c configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml -o Global.checkpoints=xxx.pth
```

Inference:

```bash
python tools/infer_rec.py -c configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml -o Global.pretrained_model=xxx.pth
```

Export:

```bash
python tools/export.py -c configs/rec/PP-OCRv3/ch_PP-OCRv3_rec_distillation.yml -o Global.pretrained_model=xxx.pth
```

This exports the model to ONNX format (the default; TorchScript export has not been tested), along with the pre-processing and post-processing parameters.
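The `-o` option overrides entries of the YAML config from the command line using dotted keys such as `Global.checkpoints=xxx.pth`. A minimal sketch of how such overrides can be merged into the nested config dict, assuming PaddleOCR-style semantics (split the key on `.`, create missing levels, parse the value as a Python literal when possible, otherwise keep it as a string). `apply_overrides` and `parse_value` are hypothetical names, and the value parsing in `tools/` may differ.

```python
import ast


def parse_value(text):
    """Interpret '20', '0.001', 'True', '[3, 48, 320]' as literals; keep paths as strings."""
    try:
        return ast.literal_eval(text)
    except (ValueError, SyntaxError, TypeError):
        return text


def apply_overrides(config, overrides):
    """Merge 'A.B.C=value' strings into a nested dict, creating levels as needed."""
    for item in overrides:
        key, sep, raw = item.partition("=")
        if not sep:
            raise ValueError(f"expected key=value, got {item!r}")
        parts = key.split(".")
        node = config
        for part in parts[:-1]:
            node = node.setdefault(part, {})
        node[parts[-1]] = parse_value(raw)
    return config
```

For example, `apply_overrides(cfg, ["Global.checkpoints=xxx.pth"])` sets `cfg["Global"]["checkpoints"]` to the string `"xxx.pth"` while leaving the rest of `Global` untouched.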

```bash
# det + cls + rec
python tools/infer/predict_system.py --det_model_dir=path/to/det/export_dir --cls_model_dir=path/to/cls/export_dir --rec_model_dir=path/to/rec/export_dir --image_dir=doc/imgs/1.jpg --use_angle_cls=true
```
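`predict_system.py` chains detection, optional angle classification of each cropped text region (`--use_angle_cls=true`), and recognition. Before recognition the detected boxes need to be put into reading order. The sketch below follows the approach of PaddleOCR's `sorted_boxes` (sort by the top-left corner, then swap neighbours that sit on roughly the same line but are out of left-to-right order); the name `sort_boxes` and the 10-pixel `same_line_tol` are assumptions about this repository.

```python
def sort_boxes(boxes, same_line_tol=10):
    """Order quadrilateral boxes top-to-bottom, then left-to-right.

    Each box is a list of four (x, y) points whose first point is the
    top-left corner, as produced by a text detector.
    """
    # Primary order: top edge (y), then left edge (x).
    ordered = sorted(boxes, key=lambda b: (b[0][1], b[0][0]))
    # Boxes whose tops differ by less than same_line_tol are treated as one
    # line; bubble them leftwards so the line reads left to right.
    for i in range(len(ordered) - 1):
        for j in range(i, -1, -1):
            cur, nxt = ordered[j], ordered[j + 1]
            if abs(nxt[0][1] - cur[0][1]) < same_line_tol and nxt[0][0] < cur[0][0]:
                ordered[j], ordered[j + 1] = nxt, cur
            else:
                break
    return ordered
```

Without the second pass, a box that starts a line but sits a few pixels lower than its neighbour would be read after it.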

```bash
# det
python tools/infer/predict_det.py --det_model_dir=path/to/det/export_dir --image_dir=doc/imgs/1.jpg
```
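The det model outputs a per-pixel text probability map, which a DB-style post-processor turns into boxes. A simplified sketch, assuming PaddleOCR's default thresholds (`det_db_thresh=0.3` to binarize, `det_db_box_thresh=0.6` for the mean score of a region, minimum box side 3): it groups 4-connected foreground pixels with a flood fill and returns axis-aligned boxes. The real post-processing uses contours, rotated rectangles, and unclip expansion, all omitted here; `db_boxes` is a hypothetical name.

```python
from collections import deque

import numpy as np


def db_boxes(prob_map, thresh=0.3, box_thresh=0.6, min_size=3):
    """Binarize a probability map, group regions, score them, filter them.

    Returns axis-aligned boxes (x0, y0, x1, y1) with inclusive coordinates.
    """
    prob_map = np.asarray(prob_map, dtype=float)
    mask = prob_map > thresh
    seen = np.zeros_like(mask, dtype=bool)
    h, w = mask.shape
    boxes = []
    for sy in range(h):
        for sx in range(w):
            if not mask[sy, sx] or seen[sy, sx]:
                continue
            # Flood-fill one 4-connected candidate text region.
            queue = deque([(sy, sx)])
            seen[sy, sx] = True
            ys, xs = [], []
            while queue:
                y, x = queue.popleft()
                ys.append(y)
                xs.append(x)
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
            x0, x1, y0, y1 = min(xs), max(xs), min(ys), max(ys)
            if min(x1 - x0 + 1, y1 - y0 + 1) < min_size:
                continue  # too small to be a text line
            # Keep the region only if its mean probability is high enough.
            score = float(prob_map[y0:y1 + 1, x0:x1 + 1].mean())
            if score >= box_thresh:
                boxes.append((x0, y0, x1, y1))
    return boxes
```

The two thresholds play different roles: the first decides which pixels belong to text, the second rejects whole regions that only barely cleared the first.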

```bash
# cls
python tools/infer/predict_cls.py --cls_model_dir=path/to/cls/export_dir --image_dir=doc/imgs/1.jpg
```
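The cls model predicts whether a cropped text line is upright (`0`) or upside down (`180`). A sketch of how its output can be applied, assuming PaddleOCR's defaults (label order `['0', '180']`, rotation only when the `180` score exceeds `cls_thresh=0.9`). `apply_angle_cls` is a hypothetical helper, and `probs` stands in for the model's softmax output for each crop.

```python
import numpy as np

LABELS = ("0", "180")  # assumed label order


def apply_angle_cls(crops, probs, cls_thresh=0.9):
    """Rotate crops classified as upside down; return crops and (label, score)."""
    fixed, results = [], []
    for crop, p in zip(crops, probs):
        idx = int(np.argmax(p))
        label, score = LABELS[idx], float(p[idx])
        # Only flip when the classifier is confident, to avoid damaging
        # correctly oriented crops on borderline predictions.
        if label == "180" and score > cls_thresh:
            crop = np.rot90(crop, 2)  # 180-degree rotation over H and W
        fixed.append(crop)
        results.append((label, score))
    return fixed, results
```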

```bash
# rec
python tools/infer/predict_rec.py --rec_model_dir=path/to/rec/export_dir --image_dir=doc/imgs_words/en/word_1.png
```
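CTC-based rec models such as `rec_mv3_none_bilstm_ctc` output a probability distribution over characters at each time step. A minimal greedy CTC decoding sketch: take the argmax per step, collapse consecutive repeats, drop the blank class, and average the kept probabilities as the confidence. The blank at index 0 follows PaddleOCR's `CTCLabelDecode` convention and is assumed here; `ctc_greedy_decode` and the example charset are hypothetical.

```python
import numpy as np


def ctc_greedy_decode(probs, charset, blank=0):
    """Decode a [T, C] probability matrix; charset[i] is the symbol of class i."""
    idx = probs.argmax(axis=1).tolist()
    conf = probs.max(axis=1)
    chars, scores = [], []
    prev = None
    for t, k in enumerate(idx):
        # A blank between two identical labels separates them ("a _ a" -> "aa"),
        # while adjacent identical labels collapse ("a a" -> "a").
        if k != blank and k != prev:
            chars.append(charset[k])
            scores.append(conf[t])
        prev = k
    score = float(np.mean(scores)) if scores else 0.0
    return "".join(chars), score
```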
