api.video is the video infrastructure for product builders. Lightning-fast video APIs for integrating, scaling, and managing on-demand and low-latency live streaming features in your app.

This module is made for broadcasting an RTMP live stream from a smartphone camera.

npm install @api.video/react-native-livestream

or

yarn add @api.video/react-native-livestream

Note: if you are on iOS, you will need two extra steps:

- Don't forget to install the native dependencies with CocoaPods:

cd ios && pod install

- This project contains Swift code. If it is your first dependency with Swift code, you need to create an empty Swift file in your project (with the bridging header) from Xcode. Find out how to do that

To be able to broadcast, you must:

- On Android: declare the internet, camera and microphone permissions in your AndroidManifest.xml:

<manifest>

<uses-permission android:name="android.permission.INTERNET" />

<uses-permission android:name="android.permission.RECORD_AUDIO" />

<uses-permission android:name="android.permission.CAMERA" />

</manifest>

Your application must also request android.permission.CAMERA and android.permission.RECORD_AUDIO at runtime.

- On iOS: update Info.plist with a usage description for the camera and the microphone:

<key>NSCameraUsageDescription</key>

<string>Your own description of the purpose</string>

<key>NSMicrophoneUsageDescription</key>

<string>Your own description of the purpose</string>

- On React Native: you must handle the permission requests before starting your live stream. If the permissions are not granted, you will not be able to broadcast.
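On Android, React Native's PermissionsAndroid API can request both runtime permissions at once with `requestMultiple`, which resolves to an object mapping each permission string to a status such as `'granted'`. The sketch below is a minimal, framework-free check of that result object. The helpers `missingPermissions` and `canBroadcast` are hypothetical and not part of @api.video/react-native-livestream; the string values of `PermissionsAndroid.PERMISSIONS.CAMERA`, `PermissionsAndroid.PERMISSIONS.RECORD_AUDIO` and `PermissionsAndroid.RESULTS.GRANTED` are inlined so the sketch runs outside an app.

```typescript
// String values of PermissionsAndroid.PERMISSIONS.CAMERA and
// PermissionsAndroid.PERMISSIONS.RECORD_AUDIO, inlined so this sketch runs without react-native.
const REQUIRED_PERMISSIONS: readonly string[] = [
  'android.permission.CAMERA',
  'android.permission.RECORD_AUDIO',
];

// String value of PermissionsAndroid.RESULTS.GRANTED.
const GRANTED = 'granted';

// Shape of the object returned by PermissionsAndroid.requestMultiple:
// permission string -> status ('granted', 'denied' or 'never_ask_again').
type PermissionResults = { [permission: string]: string | undefined };

// Hypothetical helper: lists the required permissions that were not granted.
function missingPermissions(results: PermissionResults): string[] {
  return REQUIRED_PERMISSIONS.filter((permission) => results[permission] !== GRANTED);
}

// Hypothetical helper: true only when both camera and microphone are granted.
function canBroadcast(results: PermissionResults): boolean {
  return missingPermissions(results).length === 0;
}

const micDenied: PermissionResults = {
  'android.permission.CAMERA': 'granted',
  'android.permission.RECORD_AUDIO': 'denied',
};
console.log(canBroadcast(micDenied)); // false
```

In an app, you would pass it the result of `await PermissionsAndroid.requestMultiple([PermissionsAndroid.PERMISSIONS.CAMERA, PermissionsAndroid.PERMISSIONS.RECORD_AUDIO])` and only call `startStreaming` when `canBroadcast` returns true.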

import React, { useRef, useState } from 'react';

import { View, TouchableOpacity } from 'react-native';

import { LiveStreamView } from '@api.video/react-native-livestream';

const App = () => {

const ref = useRef(null);

const [streaming, setStreaming] = useState(false);

return (

<View style={{ flex: 1, alignItems: 'center' }}>

<LiveStreamView

style={{ flex: 1, backgroundColor: 'black', alignSelf: 'stretch' }}

ref={ref}

camera="back"

enablePinchedZoom={true}

video={{

fps: 30,

resolution: '720p',

bitrate: 2 * 1024 * 1024, // 2 Mbps

gopDuration: 1, // 1 second

}}

audio={{

bitrate: 128000,

sampleRate: 44100,

isStereo: true,

}}

isMuted={false}

onConnectionSuccess={() => {

//do what you want

}}

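onConnectionFailed={(e) => {

// e is the failure code; reset the button so it matches the real state

setStreaming(false);

}}

onDisconnect={() => {

// the stream stopped: reset the button state

setStreaming(false);

}}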

/>

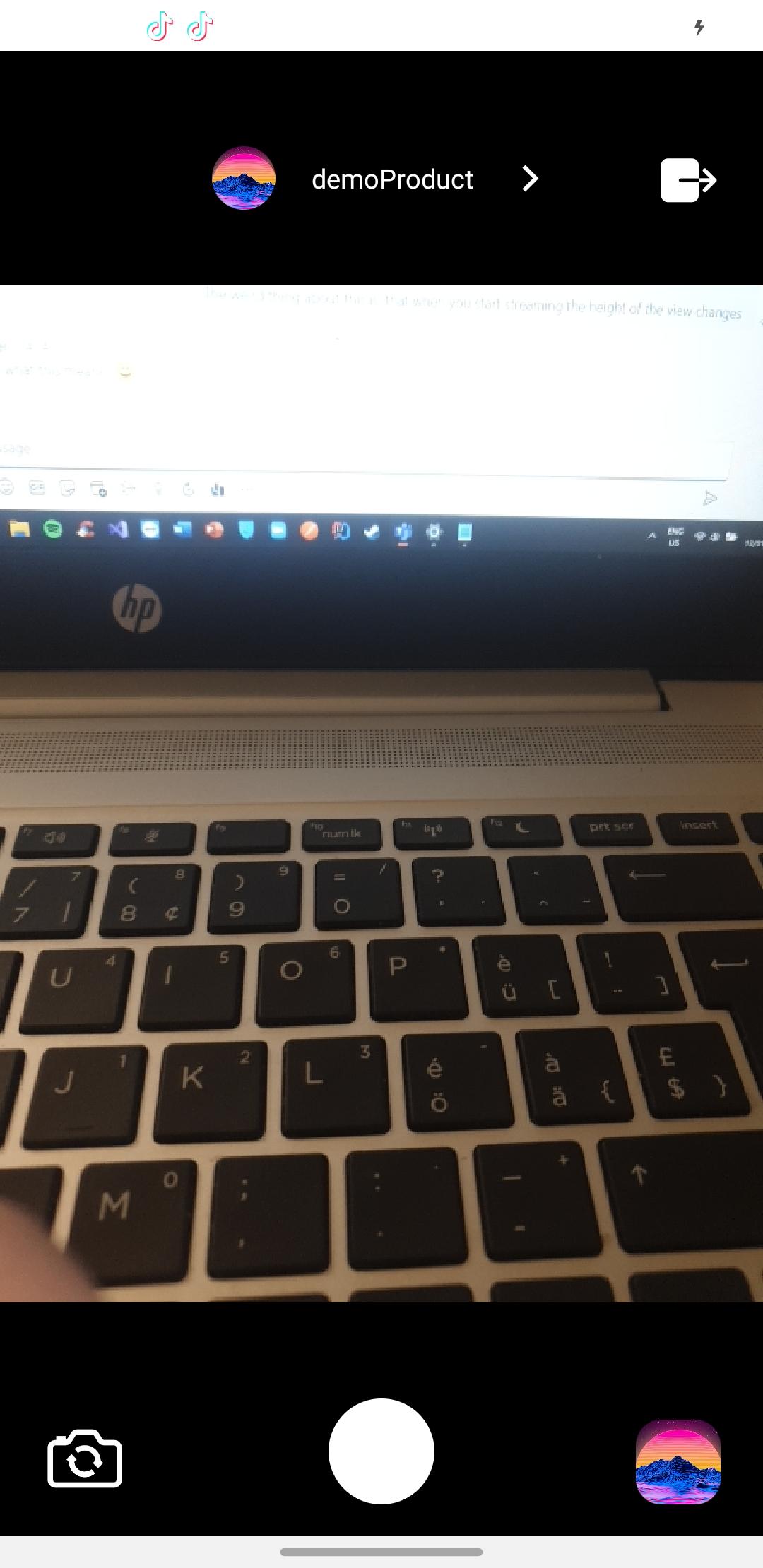

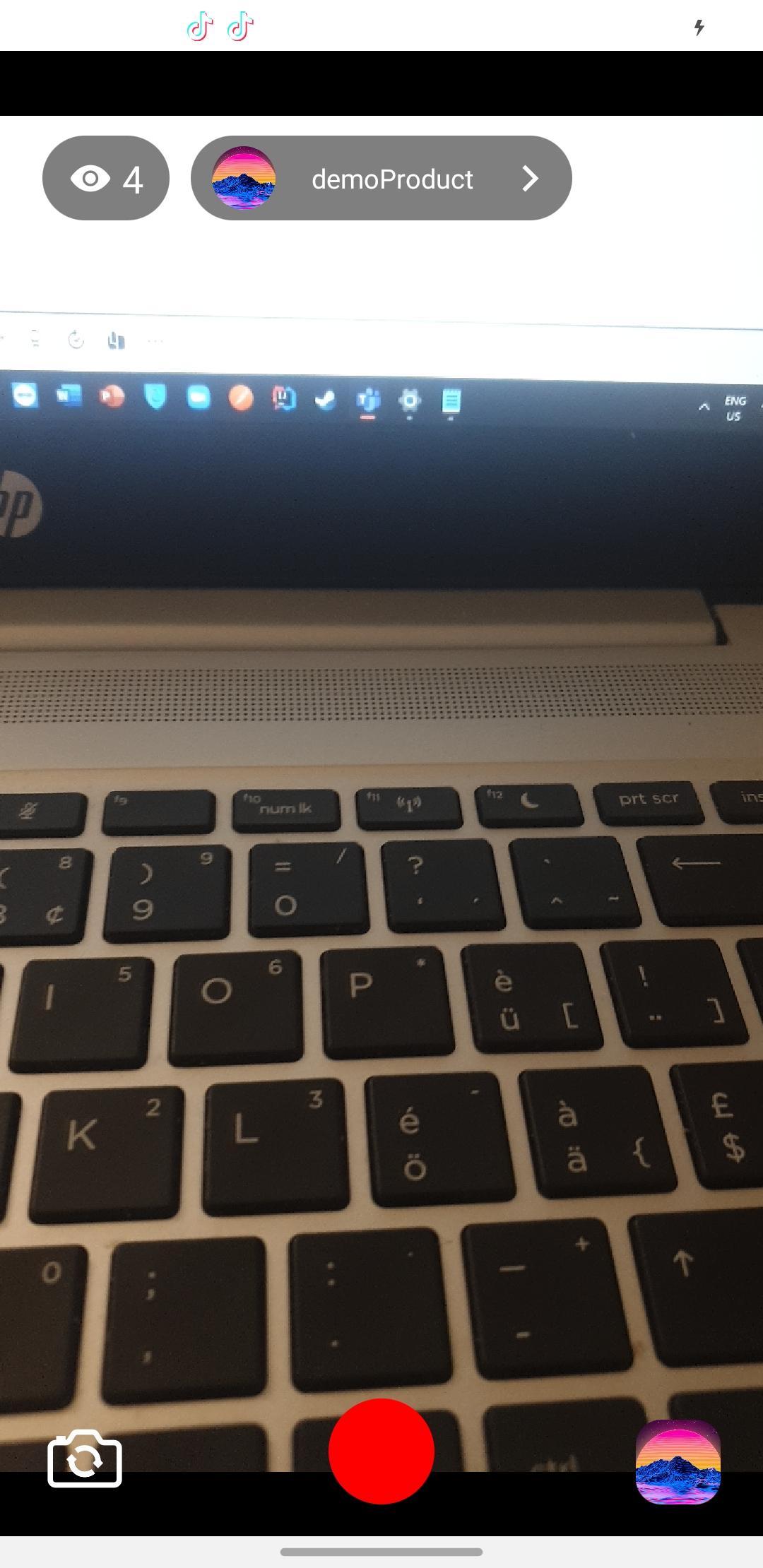

<View style={{ position: 'absolute', bottom: 40 }}>

<TouchableOpacity

style={{

borderRadius: 50,

backgroundColor: streaming ? 'red' : 'white',

width: 50,

height: 50,

}}

onPress={() => {

if (streaming) {

ref.current?.stopStreaming();

setStreaming(false);

} else {

ref.current?.startStreaming('YOUR_STREAM_KEY');

setStreaming(true);

}

}}

/>

</View>

</View>

);

}

export default App;

type LiveStreamProps = {

// Styles for the view containing the preview

style: ViewStyle;

// camera facing orientation

camera?: 'front' | 'back';

video: {

// frame rate

fps: number;

// resolution

resolution: '240p' | '360p' | '480p' | '720p' | '1080p';

// video bitrate in bits per second. Depends on the resolution.

bitrate: number;

// duration between 2 key frames in seconds

gopDuration: number;

};

audio: {

// sample rate. Only for Android. Recommended: 44100

sampleRate: number;

// true for stereo, false for mono. Only for Android. Recommended: true

isStereo: boolean;

// audio bitrate. Recommended: 128000

bitrate: number;

};

// Mute/unmute microphone

isMuted: boolean;

// Enables/disables the zoom gesture handled natively

enablePinchedZoom?: boolean;

// will be called when the connection is successful

onConnectionSuccess?: () => void;

// will be called when connection failed

onConnectionFailed?: (code: string) => void;

// will be called when the live-stream is stopped

onDisconnect?: () => void;

};

type LiveStreamMethods = {

// Start the stream

// streamKey: your live stream RTMP key

// url: RTMP server url, default: rtmp://broadcast.api.video/s

startStreaming: (streamKey: string, url?: string) => void;

// Stops the stream

stopStreaming: () => void;

// Sets the zoom ratio

// Intended for use with React Native Gesture Handler, a slider or similar.

setZoomRatio: (zoomRatio: number) => void;

};

You can try our example app; feel free to test it.
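Whichever app you broadcast from, two fields of the `video` prop are linked: `gopDuration` is expressed in seconds, so the distance between two key frames, counted in frames, is `fps × gopDuration` (30 frames for the sample's `fps: 30` and `gopDuration: 1`). The sketch below mirrors the `video` type from `LiveStreamProps` and computes that value. `keyFrameIntervalFrames` and `validateVideoSettings` are hypothetical helpers, and treating `bitrate` as bits per second is an assumption based on the sample's `2 * 1024 * 1024 // 2 Mbps`.

```typescript
// Mirrors the `video` field of LiveStreamProps.
type Resolution = '240p' | '360p' | '480p' | '720p' | '1080p';

interface VideoSettings {
  fps: number;
  resolution: Resolution;
  bitrate: number; // assumed to be bits per second
  gopDuration: number; // seconds between two key frames
}

// Hypothetical helper: frames between two key frames = fps × gopDuration.
function keyFrameIntervalFrames(video: VideoSettings): number {
  return Math.round(video.fps * video.gopDuration);
}

// Hypothetical helper: basic sanity checks before passing the settings to LiveStreamView.
// `!(x > 0)` also rejects NaN.
function validateVideoSettings(video: VideoSettings): string[] {
  const errors: string[] = [];
  if (!(video.fps > 0)) errors.push('fps must be positive');
  if (!(video.bitrate > 0)) errors.push('bitrate must be positive');
  if (!(video.gopDuration > 0)) errors.push('gopDuration must be positive');
  return errors;
}

// Same values as the sample app above.
const sampleVideo: VideoSettings = {
  fps: 30,
  resolution: '720p',
  bitrate: 2 * 1024 * 1024,
  gopDuration: 1,
};

console.log(keyFrameIntervalFrames(sampleVideo)); // 30
console.log(validateVideoSettings(sampleVideo).length); // 0
```

Doubling `gopDuration` at the same `fps` doubles the gap between key frames, which in general means fewer key frames in the stream but a longer wait before a newly joining viewer's player can start decoding.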

Be sure to follow the React Native installation steps before anything.

- Open a new terminal

- Clone the repository and go into it

git clone https://github.com/apivideo/api.video-reactnative-live-stream.git livestream_example_app && cd livestream_example_app

On Android, install the packages and launch the application:

yarn && yarn example android

On iOS:

- Install the packages

yarn install

- Go into example/ios and install the pods:

cd example/ios && pod install

- Sign your application

Open Xcode, click on "Open a project or file" and open the Example.xcworkspace file.

You can find it in YOUR_PROJECT_NAME/example/ios.

Click on Example, go to the Signing & Capabilities tab, add your team and create a unique bundle identifier.

- Launch the application from the root of your project:

yarn example ios

The api.video live stream library uses external native libraries for broadcasting:

| Plugin | README |

|---|---|

| StreamPack | StreamPack |

| HaishinKit | HaishinKit |

If you have any questions, ask us on https://community.api.video or open an issue in this repository.