- Overview

- Where to Start

- Confluent Cloud

- Stream Processing

- Data Pipelines

- Confluent Platform

- Build Your Own

- Additional Demos

This is a curated list of demos that showcase Apache Kafka® event stream processing on the Confluent Platform, an event stream processing platform that enables you to process, organize, and manage massive amounts of streaming data across cloud, on-prem, and serverless deployments.

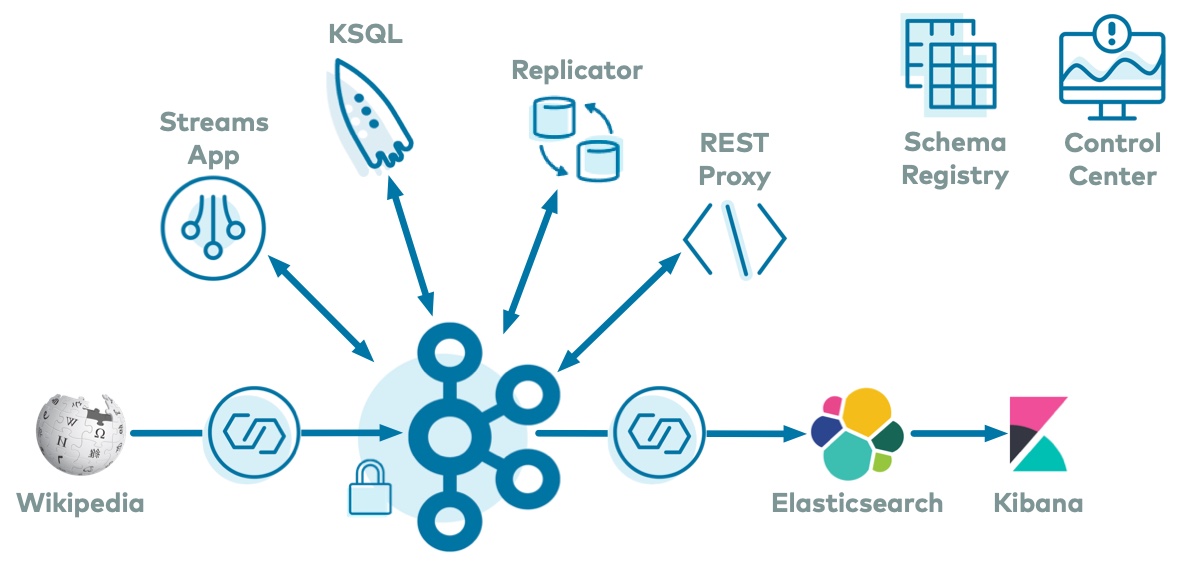

The best demo to start with is cp-demo, which spins up a Kafka event streaming application that uses ksqlDB for stream processing and has many security features enabled. It runs an end-to-end streaming ETL pipeline: a source connector pulls from live data, and a sink connector writes to Elasticsearch, with Kibana for visualizations.

cp-demo also comes with a tutorial and is a great configuration reference for Confluent Platform.

The Confluent Cloud examples range from full end-to-end demos that create connectors, streams, and ksqlDB queries in Confluent Cloud to resources that help you build your own demos. You can find the documentation and instructions for all Confluent Cloud demos at https://docs.confluent.io/platform/current/tutorials/examples/ccloud/docs/ccloud-demos-overview.html.

| Demo | Local | Docker | Description |
|---|---|---|---|
| Confluent CLI | Y | N | Fully automated demo interacting with your Confluent Cloud cluster using the Confluent CLI |
| Clients in Various Languages to Cloud | Y | N | Client applications, showcasing producers and consumers, in various programming languages connecting to Confluent Cloud |
| Cloud ETL | Y | N | Fully automated cloud ETL solution using Confluent Cloud connectors (AWS Kinesis, Postgres with AWS RDS, GCP GCS, AWS S3, Azure Blob) and fully-managed ksqlDB |
| ccloud-stack | Y | N | Creates a fully-managed stack in Confluent Cloud, including a new environment, service account, Kafka cluster, ksqlDB app, Schema Registry, and ACLs. The demo also generates a config file for use with client applications. |
| On-Prem Kafka to Cloud | N | Y | Module 2 of Confluent Platform demo (cp-demo) with a playbook for copying data between the on-prem and Confluent Cloud clusters |
| DevOps for Apache Kafka® with Kubernetes and GitOps | N | N | Simulated production environment running a streaming application targeting Apache Kafka on Confluent Cloud using Kubernetes and GitOps |
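The ccloud-stack entry above mentions a generated config file for client applications. As a rough sketch only, assuming that file is a plain list of `key=value` properties (the format, the helper name `read_client_config`, and every sample value below are assumptions for illustration, not taken from this page), a client could load it into a dictionary like this:

```python
from typing import Dict, Iterable


def read_client_config(lines: Iterable[str]) -> Dict[str, str]:
    """Parse key=value lines into a dict, skipping blank lines and # comments."""
    config: Dict[str, str] = {}
    for raw in lines:
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        # Split on the first "=" only, so values may themselves contain "=".
        key, sep, value = line.partition("=")
        if not sep:
            continue  # not a key=value line; ignore it
        config[key.strip()] = value.strip()
    return config


# Hypothetical sample contents; real values would come from your own cluster.
SAMPLE = """\
# Kafka
bootstrap.servers=broker.example.com:9092
security.protocol=SASL_SSL
sasl.mechanisms=PLAIN
sasl.username=EXAMPLE_KEY
sasl.password=EXAMPLE_SECRET
"""

if __name__ == "__main__":
    conf = read_client_config(SAMPLE.splitlines())
    print(conf["bootstrap.servers"])
```

To read a real file, pass an open file object instead of `SAMPLE.splitlines()`; the resulting dictionary can then be handed to whichever Kafka client library you use, after checking which keys that library expects.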

| Demo | Local | Docker | Description |
|---|---|---|---|
| Clickstream | N | Y | Automated version of the ksqlDB clickstream demo |
| Kafka Tutorials | Y | Y | Collection of common event streaming use cases, with each tutorial featuring an example scenario and several complete code solutions |
| Microservices ecosystem | N | Y | Microservices orders Demo Application integrated into the Confluent Platform |

| Demo | Local | Docker | Description |
|---|---|---|---|
| Clients in Various Languages | Y | N | Client applications, showcasing producers and consumers, in various programming languages |
| Connect and Kafka Streams | Y | N | Demonstrates various ways, with and without Kafka Connect, to get data into Kafka topics and then load it for use by the Kafka Streams API |

| Demo | Local | Docker | Description |

|---|---|---|---|

| Avro | Y | N | Client applications using Avro and Confluent Schema Registry  |

| CP Demo | N | Y | Confluent Platform demo (cp-demo) with a playbook for Kafka event streaming ETL deployments  |

| Kubernetes | N | Y | Demonstrations of Confluent Platform deployments using the Confluent Operator  |

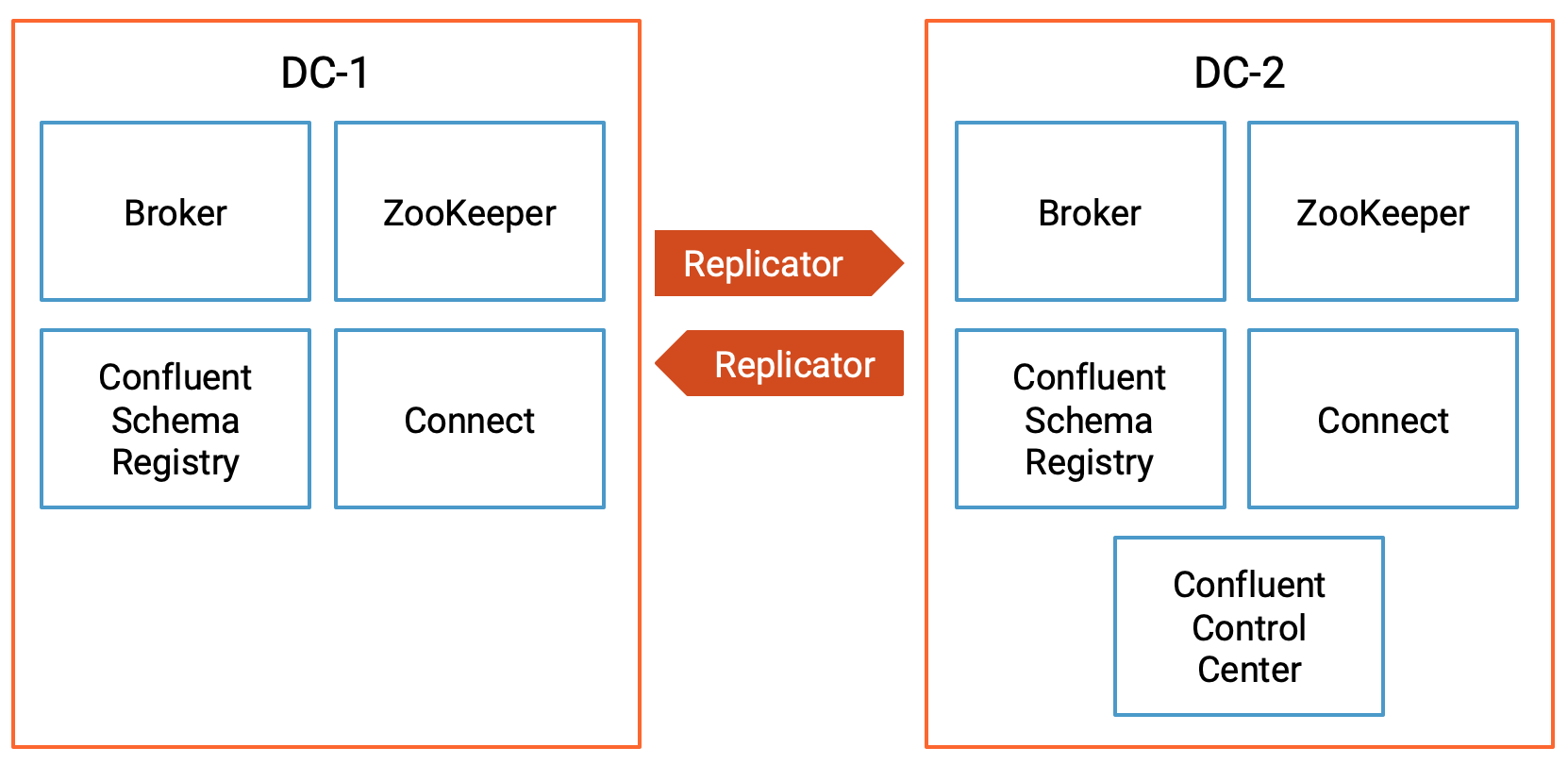

| Multi Datacenter | N | Y | Active-active multi-datacenter design with two instances of Confluent Replicator copying data bidirectionally between the datacenters  |

| Multi-Region Clusters | N | Y | Multi-Region clusters (MRC) with follower fetching, observers, and replica placement |

| Quickstart | Y | Y | Automated version of the Confluent Quickstart: for Confluent Platform on local install or Docker, community version, and Confluent Cloud  |

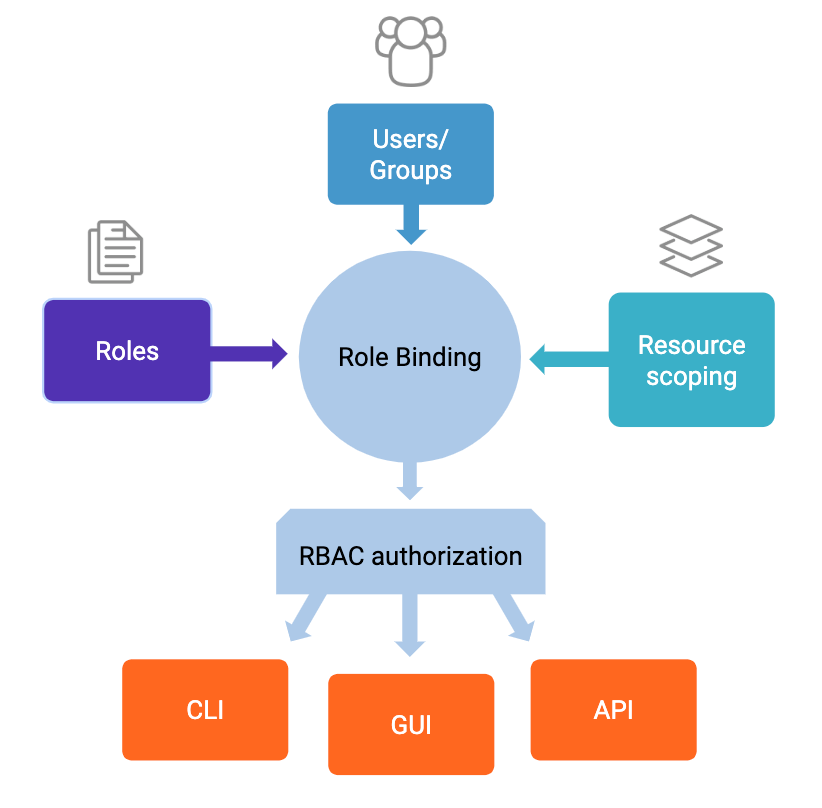

| Role-Based Access Control | Y | Y | Role-based Access Control (RBAC) provides granular privileges for users and service accounts  |

| Replicator Security | N | Y | Demos of various security configurations supported by Confluent Replicator and examples of how to implement them  |

As a next step, you may want to build your own custom demo or test environment. We have several resources that launch just the services in Confluent Cloud or on-prem, with no pre-configured connectors, data sources, topics, schemas, etc. Using these as a foundation, you can then add any connectors or applications. You can find the documentation and instructions for these "build-your-own" resources at https://docs.confluent.io/platform/current/tutorials/build-your-own-demos.html.

Here are additional GitHub repos that offer an incredible set of nearly a hundred other Apache Kafka demos. They are not maintained on a per-release basis like the demos in this repo, but they are an invaluable resource.