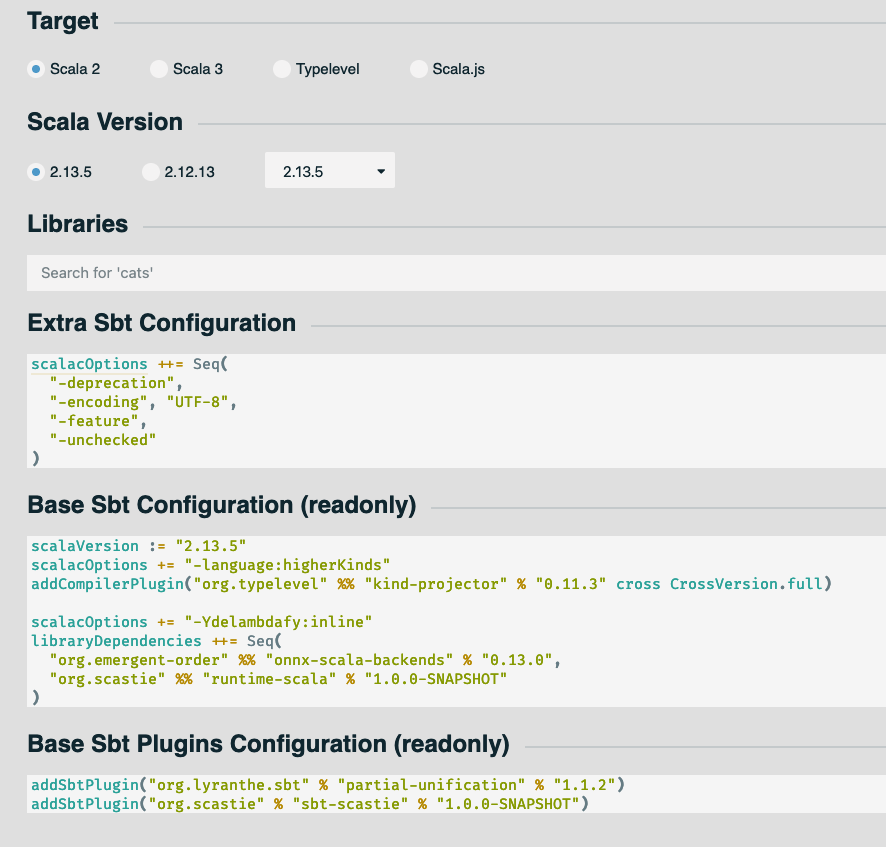

Add this to the build.sbt in your project:

libraryDependencies += "org.emergent-order" %% "onnx-scala-backends" % "0.17.0"

A short, recent talk I gave about the project: ONNX-Scala: Typeful, Functional Deep Learning / Dotty Meets an Open AI Standard

First, download the model file for SqueezeNet.

You can use get_models.sh

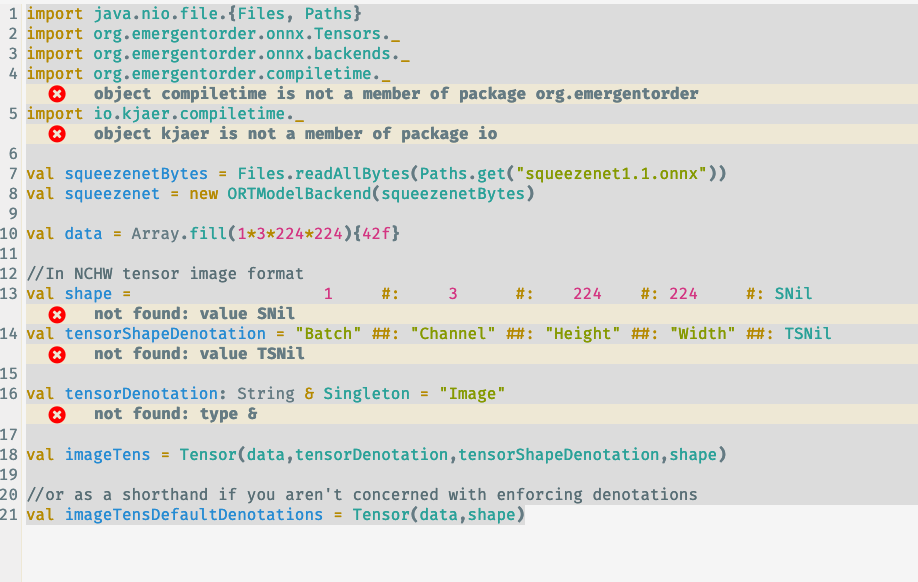

Note that all code snippets are written in Scala 3 (Dotty).

First we create an "image" tensor composed entirely of pixel value 42:

import java.nio.file.{Files, Paths}

import org.emergentorder.onnx.Tensors._

import org.emergentorder.onnx.Tensors.Tensor._

import org.emergentorder.onnx.backends._

import org.emergentorder.compiletime._

import org.emergentorder.io.kjaer.compiletime._

val squeezenetBytes = Files.readAllBytes(Paths.get("squeezenet1_1_Opset18.onnx"))

val squeezenet = new ORTModelBackend(squeezenetBytes)

val data = Array.fill(1*3*224*224){42f}

//In NCHW tensor image format

val shape = 1 #: 3 #: 224 #: 224 #: SNil

val tensorShapeDenotation = "Batch" ##: "Channel" ##: "Height" ##: "Width" ##: TSNil

val tensorDenotation: String & Singleton = "Image"

val imageTens = Tensor(data,tensorDenotation,tensorShapeDenotation,shape)

//or as a shorthand if you aren't concerned with enforcing denotations

val imageTensDefaultDenotations = Tensor(data,shape)

Note that ONNX tensor content is in row-major order.
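To make the row-major layout concrete, here is a minimal sketch in plain Scala 3 of how a logical NCHW position maps to an index in the flat data array. The helper name `nchwOffset` is hypothetical and used only for illustration; it is not part of ONNX-Scala.

```scala
// Row-major (C-order) offset of element (n, c, h, w) in a flat NCHW array.
// `nchwOffset` is a hypothetical helper for illustration, not an ONNX-Scala function.
def nchwOffset(channels: Int, height: Int, width: Int)(n: Int, c: Int, h: Int, w: Int): Int =
  ((n * channels + c) * height + h) * width + w

// Offsets into a 1 x 3 x 224 x 224 image like the one above.
val startOfChannel1 = nchwOffset(3, 224, 224)(0, 1, 0, 0)   // 224 * 224 = 50176
val lastElement = nchwOffset(3, 224, 224)(0, 2, 223, 223)   // 1 * 3 * 224 * 224 - 1 = 150527
```

The last axis (width) varies fastest, so all pixels of one channel are contiguous and each channel follows the previous one.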

Next we run SqueezeNet image classification inference on it:

val out = squeezenet.fullModel[Float,

"ImageNetClassification",

"Batch" ##: "Class" ##: TSNil,

1 #: 1000 #: SNil](Tuple(imageTens))

// val out:

// Tensor[Float,("ImageNetClassification",

// "Batch" ##: "Class" ##: TSNil,

// 1 #: 1000 #: 1 #: 1 #: SNil)] = IO(...)

// ...

//The output shape

out.shape.unsafeRunSync()

// val res0: Array[Int] = Array(1, 1000, 1, 1)

val data = out.data.unsafeRunSync()

// val data: Array[Float] = Array(1.786191E-4, ...)

//The highest scoring and thus highest probability (predicted) class

data.indices.maxBy(data)

// val res1: Int = 753

Referring to the ImageNet 1000 class labels, we see that the predicted class is "radiator".
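If you want more than the single best class, the same idea extends to a top-k ranking. The following is a sketch in plain Scala 3 that works on any score array such as the one returned above; `topK` is a hypothetical helper, not something ONNX-Scala provides.

```scala
// Indices and scores of the k highest-scoring classes, best first.
// `topK` is a hypothetical helper for illustration, not part of ONNX-Scala.
def topK(scores: Array[Float], k: Int): Seq[(Int, Float)] =
  scores.indices
    .sortBy(i => -scores(i))(using Ordering.Float.TotalOrdering) // descending by score
    .take(k)
    .map(i => (i, scores(i)))

// Toy scores standing in for the 1000 ImageNet class scores.
val toyScores = Array(0.1f, 0.7f, 0.2f)
val best2 = topK(toyScores, 2) // class 1 first, then class 2
```

The ordering is passed explicitly because Scala offers more than one ordering for `Float`.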

Based on a simple benchmark of 100000 iterations of SqueezeNet inference, the run time is on par with (within 3% of) ONNX Runtime via Python. The discrepancy can be accounted for by the overhead of shipping data between the JVM and native memory.

When using this API, we load the provided ONNX model file and pass it as-is to the underlying ONNX backend, which is able to optimize the full graph. This is the most performant execution mode, and is recommended for off-the-shelf models / performance-critical scenarios.

This full-model API is untyped in its inputs, so calls can fail at runtime. This is unavoidable because models are loaded from disk at runtime. The upside is that you are free to use dynamic shapes, for example differing batch sizes per model call (assuming your model supports this via symbolic dimensions; see ONNX Shape Inference). If your input shapes are static, feel free to wrap your calls in a facade with typed inputs.
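As a rough illustration of the facade idea, the sketch below validates a fixed input shape once and only then hands the data to an untyped call. Everything here is an assumption made for the example: `UntypedModel` is a plain function type standing in for the real model call, and `ImageBatch` and `classify` are hypothetical names, not ONNX-Scala types.

```scala
// Hypothetical stand-in for an untyped full-model call: flat data plus a runtime
// shape in, flat scores out. This is NOT the ONNX-Scala API.
type UntypedModel = (Array[Float], Array[Int]) => Array[Float]

// Input whose shape is fixed up front; the only way to build one is the checked `from`.
final class ImageBatch private (val data: Array[Float])

object ImageBatch:
  val shape: Array[Int] = Array(1, 3, 224, 224) // NCHW

  def from(data: Array[Float]): Either[String, ImageBatch] =
    if data.length == shape.product then Right(new ImageBatch(data))
    else Left(s"expected ${shape.product} values, got ${data.length}")

// The facade: callers can only pass an input that has already been validated.
def classify(model: UntypedModel)(input: ImageBatch): Array[Float] =
  model(input.data, ImageBatch.shape)
```

With this shape of design, a size mismatch surfaces as a `Left` at construction time instead of as a failure inside the backend.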

ONNX-Scala is cross-built against Scala JVM, Scala.js/JavaScript and Scala Native (for Scala 3 / Dotty).

Currently at ONNX 1.14.1 (backward compatible to at least 1.2.0 for the full model API, 1.7.0 for the fine-grained API), ONNX Runtime 1.16.3.

A complete*, versioned, numerically generic, type-safe / typeful API to ONNX (Open Neural Network eXchange, an open format to represent deep learning and classical machine learning models), derived from the Protobuf definitions and the operator schemas (defined in C++).

We also provide implementations for each operator in terms of a generic core operator method to be implemented by the backend. For more details on the low-level fine-grained API see here

The preferred high-level fine-grained API, most suitable for the end user, is NDScala

* Up to roughly the set of ops supported by ONNX Runtime Web (WebGL backend)

Automatic differentiation to enable training is under consideration (ONNX currently provides facilities for training as a tech preview only).

ONNX-Scala features type-level tensor and axis labels/denotations, which along with literal types for dimension sizes allow for tensor/axes/shape/data-typed tensors. Type constraints, as per the ONNX spec, are implemented at the operation level on inputs and outputs, using union types, match types and compiletime singleton ops (thanks to @MaximeKjaer for getting the latter into dotty). The design uses the ONNX docs for dimension and type denotation, as well as the operators doc, as a reference, and is inspired by Nexus, Neurocat and Named Tensors.
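To give a feel for the Scala 3 features named above, here is a small standalone sketch using only the standard library. It is not ONNX-Scala's actual encoding: `FloatLike`, `widen`, `Vec` and `concat` are hypothetical names chosen for the example.

```scala
import scala.compiletime.ops.int.+

// A type constraint expressed as a union type: only Float or Double are accepted.
type FloatLike = Float | Double

def widen(x: FloatLike): Double = x match
  case f: Float  => f.toDouble
  case d: Double => d

// A dimension size carried in the type as an integer literal type.
final case class Vec[N <: Int](values: Array[Float])

// The result length is computed at the type level with a compile-time singleton op.
def concat[A <: Int, B <: Int](a: Vec[A], b: Vec[B]): Vec[A + B] =
  Vec[A + B](a.values ++ b.values)

val joined = concat(Vec[2](Array(1f, 2f)), Vec[3](Array(3f, 4f, 5f)))
val checked: Vec[5] = joined // accepted because 2 + 3 reduces to 5 at compile time
```

Passing a `String` to `widen`, or ascribing `joined` as `Vec[4]`, is rejected by the compiler. Note that in this toy version nothing ties the literal type to the actual array length; that check is left out for brevity.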

There is one backend per Scala platform. For the JVM, the backend is based on ONNX Runtime, via its official Java API. For Scala.js / JavaScript, the backend is based on ONNX Runtime Web.

Supported ONNX input and output tensor data types:

- Byte

- Short

- Int

- Long

- Float

- Double

- Boolean

- String

Supported ONNX ops:

- ONNX-Scala, Fine-grained API: 87/178 total

- ONNX-Scala, Full model API: Same as below

- ONNX Runtime Web (using Wasm backend): 165/178 total

- ONNX Runtime: 165/178 total

See the ONNX backend scoreboard

TODO: T5 example

You'll need sbt.

To build and publish locally:

sbt publishLocal

- ONNX via ScalaPB - Open Neural Network Exchange / The missing bridge between Java and native C++ libraries (For access to Protobuf definitions, used in the fine-grained API to create ONNX models in memory to send to the backend)

- Spire - Powerful new number types and numeric abstractions for Scala. (For support for unsigned ints, complex numbers and the Numeric type class in the core API)

- Dotty - The Scala 3 compiler, also known as Dotty. (For union types (used here to express ONNX type constraints), match types, compiletime singleton ops, ...)

- ONNX Runtime via ORT Java API - ONNX Runtime: cross-platform, high performance ML inferencing and training accelerator

- Neurocat - From neural networks to the Category of composable supervised learning algorithms in Scala with compile-time matrix checking based on singleton-types

- Nexus - Experimental typesafe tensors & deep learning in Scala

- Lantern - Machine learning framework prototype in Scala. The design of Lantern is built on two important and well-studied programming language concepts, delimited continuations (for automatic differentiation) and multi-stage programming (staging for short).

- DeepLearning.scala - A simple library for creating complex neural networks

- tf-dotty - Shape-safe TensorFlow in Dotty