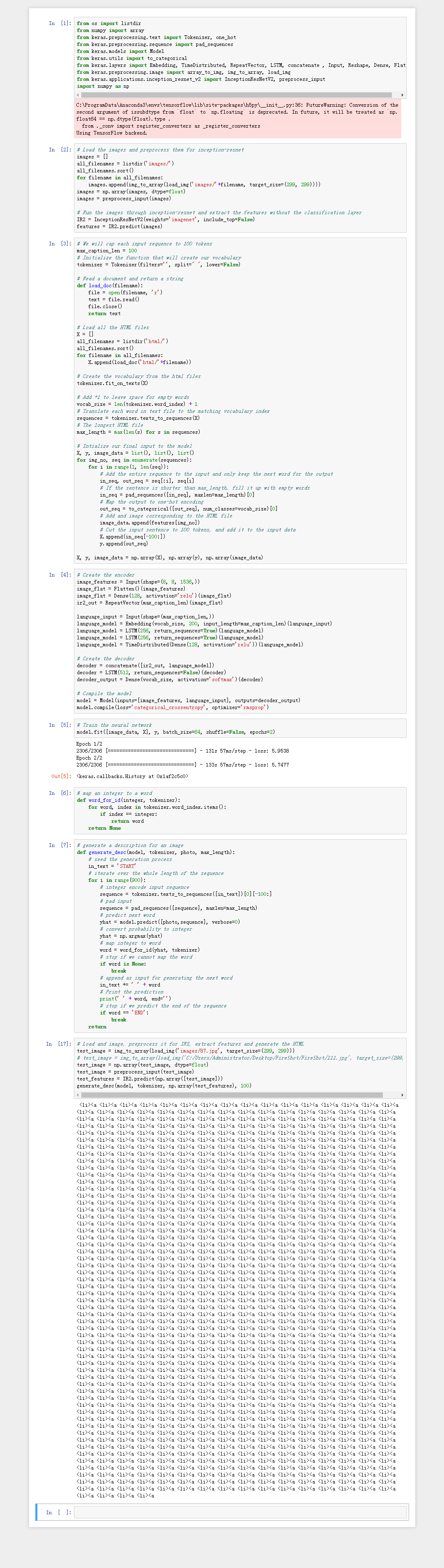

I know this is not enough to train on the full dataset, but I can't use FloydHub at the moment.

```
ResourceExhaustedError Traceback (most recent call last)
<ipython-input-8-deb30b24a084> in <module>()
----> 1 model.fit_generator(data_generator_simple(texts, train_features, 1, 150), steps_per_epoch=1400, epochs=50, callbacks=callbacks_list, verbose=1, max_queue_size=1)

d:\uzair\screenshot-to-code-in-keras\venv\lib\site-packages\keras\legacy\interfaces.py in wrapper(*args, **kwargs)
89 warnings.warn('Update your `' + object_name +
90 '` call to the Keras 2 API: ' + signature, stacklevel=2)
---> 91 return func(*args, **kwargs)
92 wrapper._original_function = func
93 return wrapper

d:\uzair\screenshot-to-code-in-keras\venv\lib\site-packages\keras\engine\training.py in fit_generator(self, generator, steps_per_epoch, epochs, verbose, callbacks, validation_data, validation_steps, class_weight, max_queue_size, workers, use_multiprocessing, shuffle, initial_epoch)
1424 use_multiprocessing=use_multiprocessing,
1425 shuffle=shuffle,
-> 1426 initial_epoch=initial_epoch)
1427
1428 @interfaces.legacy_generator_methods_support

d:\uzair\screenshot-to-code-in-keras\venv\lib\site-packages\keras\engine\training_generator.py in fit_generator(model, generator, steps_per_epoch, epochs, verbose, callbacks, validation_data, validation_steps, class_weight, max_queue_size, workers, use_multiprocessing, shuffle, initial_epoch)
189 outs = model.train_on_batch(x, y,
190 sample_weight=sample_weight,
--> 191 class_weight=class_weight)
192
193 if not isinstance(outs, list):

d:\uzair\screenshot-to-code-in-keras\venv\lib\site-packages\keras\engine\training.py in train_on_batch(self, x, y, sample_weight, class_weight)
1218 ins = x + y + sample_weights
1219 self._make_train_function()
-> 1220 outputs = self.train_function(ins)
1221 if len(outputs) == 1:
1222 return outputs[0]

d:\uzair\screenshot-to-code-in-keras\venv\lib\site-packages\keras\backend\tensorflow_backend.py in __call__(self, inputs)
2659 return self._legacy_call(inputs)
2660
-> 2661 return self._call(inputs)
2662 else:
2663 if py_any(is_tensor(x) for x in inputs):

d:\uzair\screenshot-to-code-in-keras\venv\lib\site-packages\keras\backend\tensorflow_backend.py in _call(self, inputs)
2629 symbol_vals,
2630 session)
-> 2631 fetched = self._callable_fn(*array_vals)
2632 return fetched[:len(self.outputs)]
2633

d:\uzair\screenshot-to-code-in-keras\venv\lib\site-packages\tensorflow\python\client\session.py in __call__(self, *args)
1452 else:
1453 return tf_session.TF_DeprecatedSessionRunCallable(
-> 1454 self._session._session, self._handle, args, status, None)
1455
1456 def __del__(self):

d:\uzair\screenshot-to-code-in-keras\venv\lib\site-packages\tensorflow\python\framework\errors_impl.py in __exit__(self, type_arg, value_arg, traceback_arg)
517 None, None,
518 compat.as_text(c_api.TF_Message(self.status.status)),
--> 519 c_api.TF_GetCode(self.status.status))
520 # Delete the underlying status object from memory otherwise it stays alive
521 # as there is a reference to status from this from the traceback due to

ResourceExhaustedError: OOM when allocating tensor with shape[131072,1024] and type float on /job:localhost/replica:0/task:0/device:GPU:0 by allocator GPU_0_bfc
[[Node: training/RMSprop/mul_56 = Mul[T=DT_FLOAT, _device="/job:localhost/replica:0/task:0/device:GPU:0"](RMSprop/lr/read, training/RMSprop/clip_by_value_18)]]
Hint: If you want to see a list of allocated tensors when OOM happens, add report_tensor_allocations_upon_oom to RunOptions for current allocation info.
```
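For scale, the single tensor that failed to allocate is already large. TensorFlow's `float` here is float32 (4 bytes per element), so a tensor of shape [131072, 1024] works out to 512 MiB, as this quick check shows:

```python
# Shape and dtype taken from the OOM message above ("type float" = float32, 4 bytes).
shape = (131072, 1024)
bytes_per_element = 4

num_elements = 1
for dim in shape:
    num_elements *= dim

size_bytes = num_elements * bytes_per_element
size_mib = size_bytes / (1024 ** 2)
print(f"{num_elements} elements, {size_bytes} bytes, {size_mib:.0f} MiB")
```

Judging by the failing node's inputs (`RMSprop/lr/read` multiplied by a `clip_by_value` output), this looks like the optimizer scaling a clipped gradient by the learning rate. If that reading is right, the matching weight matrix, its gradient and RMSprop's per-parameter accumulator would each need a buffer of this size; that is an inference from the node name, not something the trace states outright.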

One thing I noticed is that this happens exactly at the 97th sample (i.e. when the generator sends the 97th sample for processing). Even if I change the generator to send the 97th sample first, it crashes with the same error, so I'm guessing there is something wrong with that sample. Moreover, if I skip this sample, a few other samples cause the same issue.
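To narrow down which samples trigger the crash, the generator can be wrapped so that it logs the position of every sample it sends and drops a chosen set of positions. This is a hypothetical debugging helper, not part of the project. It assumes one sample per batch (taking the `1` passed to `data_generator_simple` to mean that, which is an assumption) and that the wrapped generator walks the samples in order and restarts after the last one.

```python
from typing import Callable, Iterable, Iterator, Set, TypeVar

T = TypeVar("T")


def skip_samples(
    batches: Iterable[T],
    skip: Set[int],
    num_samples: int,
    log: Callable[[str], None] = print,
) -> Iterator[T]:
    """Re-yield batches, dropping those whose 1-based sample position is in `skip`.

    Hypothetical helper: assumes each batch holds exactly one sample and that
    the wrapped generator cycles through `num_samples` samples in order.
    """
    for i, batch in enumerate(batches):
        sample = i % num_samples + 1  # position within the current pass, 1-based
        if sample in skip:
            log(f"skipping sample {sample}")
            continue
        log(f"sending sample {sample}")
        yield batch
```

Hypothetical usage, assuming `len(texts)` is the number of samples: pass `skip_samples(data_generator_simple(texts, train_features, 1, 150), skip={97}, num_samples=len(texts))` to `model.fit_generator` with the other arguments unchanged. Because `fit_generator` pre-fetches batches into a queue (`max_queue_size`), the last position logged before a crash may be slightly ahead of the batch that actually failed.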

Edit: If I use bootstrap.ipynb to train on the same sample, it works just fine. The only reason I am using bootstrap_generator is that I cannot load everything into memory at once, so I have to yield it in chunks with a generator.
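The difference between the two notebooks comes down to streaming: instead of loading the whole dataset up front, a generator loads and yields one chunk at a time. The sketch below is generic, not the project's `data_generator_simple`; `load_sample` and the sample IDs are hypothetical, and a real generator would also turn each chunk into the `(inputs, targets)` arrays the model expects.

```python
from typing import Callable, Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def chunk_generator(
    sample_ids: Sequence[str],
    load_sample: Callable[[str], T],
    chunk_size: int,
) -> Iterator[List[T]]:
    """Yield lists of up to `chunk_size` loaded samples, cycling over the data forever.

    `load_sample` is a hypothetical loader that reads one sample from disk, so
    only the current chunk is materialized rather than the whole dataset.
    """
    if not sample_ids:
        raise ValueError("sample_ids must not be empty")
    if chunk_size < 1:
        raise ValueError("chunk_size must be at least 1")
    while True:  # fit_generator expects the generator to loop indefinitely
        for start in range(0, len(sample_ids), chunk_size):
            chunk_ids = sample_ids[start:start + chunk_size]
            yield [load_sample(sample_id) for sample_id in chunk_ids]
```

The outer `while True` is there because Keras's `fit_generator` expects the generator to loop over its data indefinitely; `steps_per_epoch` decides where an epoch ends. Note that this only bounds how much input data sits in host memory; it does not change how much GPU memory a single training step needs.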