In the research phase, we implemented the hierarchyStatistics constraint, which computed the entity cardinality for all nodes in the hierarchy. This solution can be used for rendering a "mega-menu", but applications usually need to display only part of the menu. We therefore need a refined design that lets clients fetch only the part of the menu they actually need to render and nothing more (we don't want to spend time calculating things that would be thrown away).

User requirements

- we need to render the entire menu in a single query (along with the filtered entities that are assigned to the hierarchy)

- we need to render the "mega-menu" separately, i.e. get the top X levels of all product categories (hierarchical entities) without actually fetching the products themselves

- when we look at a specific category (hierarchical entity):

  - we need to fetch its parents up to the root (specific levels or all)

  - we need to fetch its children (specific levels or all)

  - we need to fetch the siblings of this node or of any other node from the previous two requirements

  - we need to fetch its immediate children
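These requirements boil down to a handful of tree-navigation primitives. The following is a minimal Java sketch of them, assuming the hierarchy is available as an in-memory child-to-parent map (top-level nodes mapped to null). HierarchySketch and its methods are hypothetical names used only for illustration, not evitaDB API.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Objects;
import java.util.stream.Collectors;

// Hypothetical illustration only: the hierarchy is modelled as a child -> parent map,
// where top-level nodes are mapped to null.
final class HierarchySketch {
    private final Map<Integer, Integer> parentOf;

    HierarchySketch(Map<Integer, Integer> parentOf) {
        this.parentOf = parentOf;
    }

    /** Parents of the node, from the immediate parent up to the root. */
    List<Integer> parents(int node) {
        List<Integer> result = new ArrayList<>();
        Integer current = parentOf.get(node);
        while (current != null) {
            result.add(current);
            current = parentOf.get(current);
        }
        return result;
    }

    /** Direct children of the node (null = top-level nodes), sorted by id. */
    List<Integer> immediateChildren(Integer node) {
        return parentOf.entrySet().stream()
            .filter(e -> Objects.equals(e.getValue(), node))
            .map(Map.Entry::getKey)
            .sorted()
            .collect(Collectors.toList());
    }

    /** Descendants at most maxDistance levels below the node, in depth-first order. */
    List<Integer> children(int node, int maxDistance) {
        List<Integer> result = new ArrayList<>();
        collect(node, 1, maxDistance, result);
        return result;
    }

    private void collect(Integer node, int distance, int maxDistance, List<Integer> out) {
        if (distance > maxDistance) {
            return;
        }
        for (Integer child : immediateChildren(node)) {
            out.add(child);
            collect(child, distance + 1, maxDistance, out);
        }
    }

    /** Nodes sharing the node's parent, excluding the node itself. */
    List<Integer> siblings(int node) {
        List<Integer> result = new ArrayList<>(immediateChildren(parentOf.get(node)));
        result.remove(Integer.valueOf(node));
        return result;
    }
}
```

Passing Integer.MAX_VALUE as maxDistance covers the "all levels" case, while 1 yields just the immediate children.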

EvitaQL requirement changes

We will completely refactor the current hierarchyStatistics so that the statistics become optional and the requirement is composable as follows:

```
hierarchyOfReference(
    "categories",
    fromRoot(
        "topLevel",
        // only one sub-constraint makes sense here; all three are listed just as an example
        stopAt(level(6), distance(2), node(filterBy(attributeEquals('someAttribute', 'someValue')))),
        fetch(attributes()),
        statistics()
    ),
    fromNode(
        "someDetachedHierarchy",
        node(filterBy(attributeEquals('code', 'specialNode'))),
        // only one sub-constraint makes sense here; all three are listed just as an example
        stopAt(level(6), distance(2), node(filterBy(attributeEquals('someAttribute', 'someValue')))),
        fetch(attributes()),
        statistics()
    ),
    children(
        "myChildren",
        // only one sub-constraint makes sense here; all three are listed just as an example
        stopAt(level(6), distance(2), node(filterBy(attributeEquals('someAttribute', 'someValue')))),
        fetch(attributes()),
        statistics()
    ),
    parents(
        "myParents",
        // only one sub-constraint makes sense here; all three are listed just as an example
        stopAt(level(2), distance(2), node(filterBy(attributeEquals('someAttribute', 'someValue')))),
        fetch(attributes()),
        statistics(),
        siblings(
            stopAt(level(3)),
            fetch(attributes()),
            statistics()
        )
    ),
    siblings(
        "mySiblings",
        filterBy(attributeEquals('whatever', 'value')),
        fetch(attributes()),
        statistics()
    )
)
```

Response data structure

We will reuse the current DTO io.evitadb.api.requestResponse.extraResult.HierarchyStatistics, but it will have a deeper structure:

- the first Map level will be indexed by referenceName

- the second Map level will be indexed by the constraint's custom name (e.g. "mySiblings" or whatever the developer specifies in the query)

- the value will be io.evitadb.api.requestResponse.extraResult.HierarchyStatistics.LevelInfo, altered in the following way (these properties will be non-null only when the statistics requirement is part of the query):

  - cardinality will become optional (int -> @Nullable Integer)

  - @Nullable Boolean hasChildren will be added to signal whether the node has any children of its own
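To make the nesting concrete, here is a minimal Java sketch of the proposed result shape. HierarchyResultSketch and its simplified LevelInfo record are hypothetical stand-ins rather than the real evitaDB classes. It assumes each named constraint maps to a list of top-level LevelInfo nodes that carry their own children, and it replaces the @Nullable annotations with comments so the snippet compiles without extra dependencies.

```java
import java.util.List;
import java.util.Map;

// Hypothetical stand-in for the proposed HierarchyStatistics shape; not the real evitaDB classes.
final class HierarchyResultSketch {

    /**
     * Simplified LevelInfo. cardinality and hasChildren would be @Nullable in the real DTO:
     * they stay null unless statistics() is part of the query.
     */
    record LevelInfo(int primaryKey, Integer cardinality, Boolean hasChildren, List<LevelInfo> children) {
        boolean hasStatistics() {
            return cardinality != null;
        }
    }

    // referenceName -> custom constraint name (e.g. "mySiblings") -> top-level nodes of that result
    private final Map<String, Map<String, List<LevelInfo>>> results;

    HierarchyResultSketch(Map<String, Map<String, List<LevelInfo>>> results) {
        this.results = results;
    }

    /** Returns the nodes produced by one named sub-constraint, or an empty list. */
    List<LevelInfo> get(String referenceName, String constraintName) {
        return results.getOrDefault(referenceName, Map.of())
            .getOrDefault(constraintName, List.of());
    }
}
```

A client would then read, for example, get("categories", "mySiblings") and check hasStatistics() before rendering any counts.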

Real-life use-cases

Mega-menu example

Render a two-level-deep mega-menu:

hierarchyOfReference(

"categories",

fromRoot(

"megamenu",

stopAt(level(2)),

fetch(attributes())

)

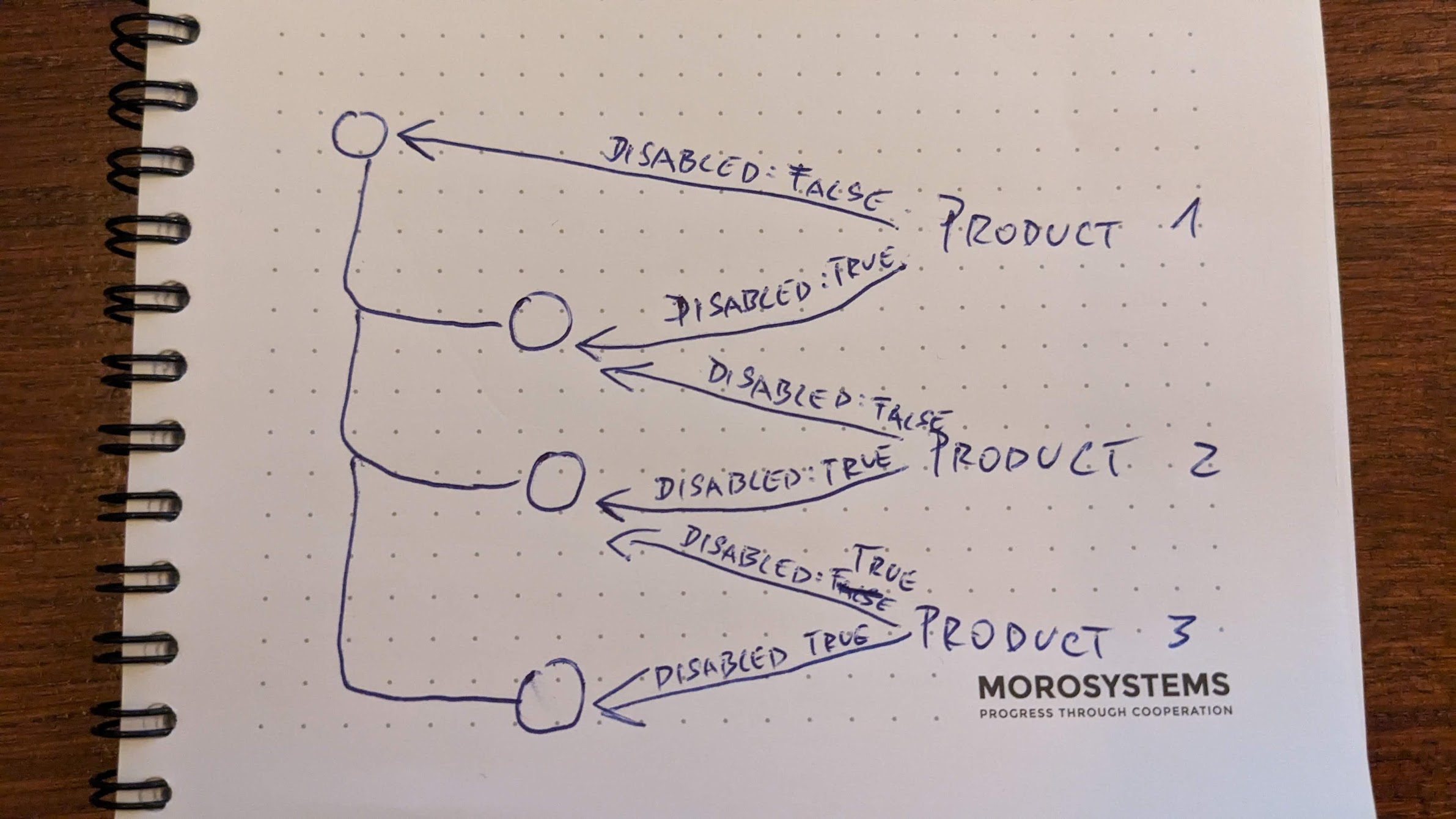

)Left menu example

Render the menu for a category on the third level: we need to render the category's siblings, all of its parents together with their siblings, and its immediate children.
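The node set this menu requires can be sketched as follows. This minimal Java example assumes the hierarchy is an in-memory child-to-parent map and that a node's siblings include the node itself (convenient for rendering). LeftMenuSketch and menuNodes are hypothetical names, and the comments indicate which part of the query below each step corresponds to.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Objects;
import java.util.Set;

// Hypothetical sketch: which nodes the left menu needs, given a child -> parent map
// (top-level nodes are mapped to null).
final class LeftMenuSketch {

    static List<Integer> childrenOf(Map<Integer, Integer> parentOf, Integer parent) {
        List<Integer> result = new ArrayList<>();
        for (Map.Entry<Integer, Integer> e : parentOf.entrySet()) {
            if (Objects.equals(e.getValue(), parent)) {
                result.add(e.getKey());
            }
        }
        result.sort(null); // natural order keeps the output deterministic
        return result;
    }

    /** Current node, its siblings, every parent with its siblings, and immediate children. */
    static Set<Integer> menuNodes(Map<Integer, Integer> parentOf, int current) {
        Set<Integer> nodes = new LinkedHashSet<>();
        // siblings(): nodes sharing the current node's parent (including the node itself)
        nodes.addAll(childrenOf(parentOf, parentOf.get(current)));
        // parents(siblings()): walk up to the root, adding each parent's siblings as well
        Integer parent = parentOf.get(current);
        while (parent != null) {
            nodes.addAll(childrenOf(parentOf, parentOf.get(parent)));
            parent = parentOf.get(parent);
        }
        // children(stopAt(distance(1))): immediate children only
        nodes.addAll(childrenOf(parentOf, current));
        return nodes;
    }
}
```

Nodes below the siblings of the parents (for example, children of another top-level category) are deliberately left out, which is exactly what keeps this query cheaper than computing the full mega-menu.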

```
hierarchyOfReference(
    "categories",
    siblings(
        "currentNodeSiblings",
        fetch(attributes())
    ),
    children(
        "currentNodeChildren",
        stopAt(distance(1)),
        fetch(attributes())
    ),
    parents(
        "currentNodeParents",
        fetch(attributes()),
        siblings(
            fetch(attributes())
        )
    )
)
```

Render immediate children of the node

This query will be used for AJAX-style gradual menu unrolling, where only the immediate sub-level is opened.
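The stopAt variants used throughout these queries can be read as a bound on a depth-first traversal. The following minimal Java sketch assumes that the node at which the condition fires is still returned and only its descendants are cut off, and that top-level nodes are on level 1. StopAtSketch and its methods are hypothetical and are not evitaDB classes.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Objects;
import java.util.function.IntPredicate;

// Hypothetical model of stopAt(level(n)), stopAt(distance(n)) and stopAt(node(...)).
final class StopAtSketch {

    /** Decides whether the traversal should not descend below a node. */
    interface StopAt {
        boolean test(int nodeId, int level, int distance);

        static StopAt level(int max) { return (id, level, distance) -> level >= max; }
        static StopAt distance(int max) { return (id, level, distance) -> distance >= max; }
        static StopAt node(IntPredicate filter) { return (id, level, distance) -> filter.test(id); }
    }

    private final Map<Integer, Integer> parentOf; // child -> parent, null for top-level nodes

    StopAtSketch(Map<Integer, Integer> parentOf) {
        this.parentOf = parentOf;
    }

    List<Integer> childrenOf(Integer parent) {
        List<Integer> result = new ArrayList<>();
        for (Map.Entry<Integer, Integer> e : parentOf.entrySet()) {
            if (Objects.equals(e.getValue(), parent)) {
                result.add(e.getKey());
            }
        }
        result.sort(null); // natural order keeps the output deterministic
        return result;
    }

    int levelOf(int nodeId) {
        int level = 1; // top-level nodes are on level 1
        Integer parent = parentOf.get(nodeId);
        while (parent != null) {
            level++;
            parent = parentOf.get(parent);
        }
        return level;
    }

    /** Descendants of start (null = virtual root) in pre-order, bounded by stopAt. */
    List<Integer> traverse(Integer start, StopAt stopAt) {
        List<Integer> out = new ArrayList<>();
        int startLevel = start == null ? 0 : levelOf(start);
        walk(start, startLevel, 0, stopAt, out);
        return out;
    }

    private void walk(Integer node, int level, int distance, StopAt stopAt, List<Integer> out) {
        for (Integer child : childrenOf(node)) {
            out.add(child); // the node is returned even if the traversal stops at it
            if (!stopAt.test(child, level + 1, distance + 1)) {
                walk(child, level + 1, distance + 1, stopAt, out);
            }
        }
    }
}
```

With this reading, level(2) started from the virtual root reproduces the two-level mega-menu, and distance(1) started from the current node yields exactly its immediate children.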

```
hierarchyOfReference(
    "categories",
    children(
        "currentNodeChildren",
        stopAt(distance(1)),
        fetch(attributes())
    )
)
```

GraphQL query mechanism

In GraphQL it could look like this:

```graphql
hierarchy {
  # reference name
  categories {
    # list of all nodes requested from the root; on the FE this needs to be converted into a tree
    topLevel: fromRoot(
      stopAt: {
        # only one of them can be used in a real query; this would be validated on the server
        level: 6
        distance: 2
        node: {
          # this would be the same filterBy that is used when querying category entities
          filterBy: {
            attribute_someAttribute_equals: "someValue"
          }
        }
      }
    ) {
      parentId # id of the parent node
      path # array of node ids forming the path to this node in the tree
      cardinality
      childrenCount
      entity {
        primaryKey
        attributes {
          code
        }
      }
    }

    # list of all nodes requested from a specific node; on the FE this needs to be converted into a tree
    someDetachedHierarchy: fromNode(
      node: {
        filterBy: {
          attribute_code_equals: "specialNode"
        }
      }
      stopAt: {
        # only one of them can be used in a real query; this would be validated on the server
        level: 6
        distance: 2
        node: {
          # this would be the same filterBy that is used when querying category entities
          filterBy: {
            attribute_someAttribute_equals: "someValue"
          }
        }
      }
    ) {
      parentId # id of the parent node
      path # array of node ids forming the path to this node in the tree
      cardinality
      childrenCount
      entity {
        primaryKey
        attributes {
          code
        }
      }
    }

    # list of all child nodes requested from the current position; on the FE this needs to be converted into a tree
    myChildren: children(
      stopAt: {
        # only one of them can be used in a real query; this would be validated on the server
        level: 6
        distance: 2
        node: {
          # this would be the same filterBy that is used when querying category entities
          filterBy: {
            attribute_someAttribute_equals: "someValue"
          }
        }
      }
    ) {
      parentId # id of the parent node
      path # array of node ids forming the path to this node in the tree
      cardinality
      childrenCount
      entity {
        primaryKey
        attributes {
          code
        }
      }
    }

    # list of all parent nodes requested from the current position
    myParents: parents(
      stopAt: {
        # only one of them can be used in a real query; this would be validated on the server
        level: 6
        distance: 2
        node: {
          # this would be the same filterBy that is used when querying category entities
          filterBy: {
            attribute_someAttribute_equals: "someValue"
          }
        }
      }
    ) {
      cardinality
      childrenCount
      entity {
        primaryKey
        attributes {
          code
        }
      }
      # for each node there would be a flat list of sibling nodes; level limits the levels on which this list is returned
      siblings(level: 3) {
        entity {
          primaryKey
        }
        cardinality
      }
    }

    # list of all sibling nodes requested for the current position
    mySiblings: siblings(
      # this would be the same filterBy that is used when querying category entities
      filterBy: {
        attribute_whatever_equals: "value"
      }
    ) {
      cardinality
      childrenCount
      entity {
        primaryKey
        attributes {
          code
        }
      }
    }
  }
}
```
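Several of the comments in the GraphQL query note that the flat node list has to be converted into a tree on the front end. One way to do this is a two-pass index-then-link over parentId. The sketch below is in Java for consistency with the other examples, although a front end would typically do the same in JavaScript or TypeScript. FlatToTree, FlatNode and TreeNode are hypothetical names, and nodes whose parent is missing from the response are assumed to become roots (as would happen with fromNode or children results).

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical client-side helper: rebuilds a tree from the flat node list of fromRoot/fromNode/children.
final class FlatToTree {

    /** One row of the flat response; parentId is null for top-level nodes. */
    record FlatNode(int primaryKey, Integer parentId) {}

    /** Tree node holding a mutable list of children. */
    record TreeNode(int primaryKey, List<TreeNode> children) {}

    static List<TreeNode> build(List<FlatNode> flat) {
        // first pass: index every node by its primary key
        Map<Integer, TreeNode> byId = new LinkedHashMap<>();
        for (FlatNode n : flat) {
            byId.put(n.primaryKey(), new TreeNode(n.primaryKey(), new ArrayList<>()));
        }
        // second pass: attach each node to its parent, preserving response order
        List<TreeNode> roots = new ArrayList<>();
        for (FlatNode n : flat) {
            TreeNode node = byId.get(n.primaryKey());
            TreeNode parent = n.parentId() == null ? null : byId.get(n.parentId());
            if (parent == null) {
                // top-level node, or its parent is not part of the response
                roots.add(node);
            } else {
                parent.children().add(node);
            }
        }
        return roots;
    }
}
```

Because the lookup map is filled before any linking happens, the algorithm does not depend on parents appearing before their children in the response.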