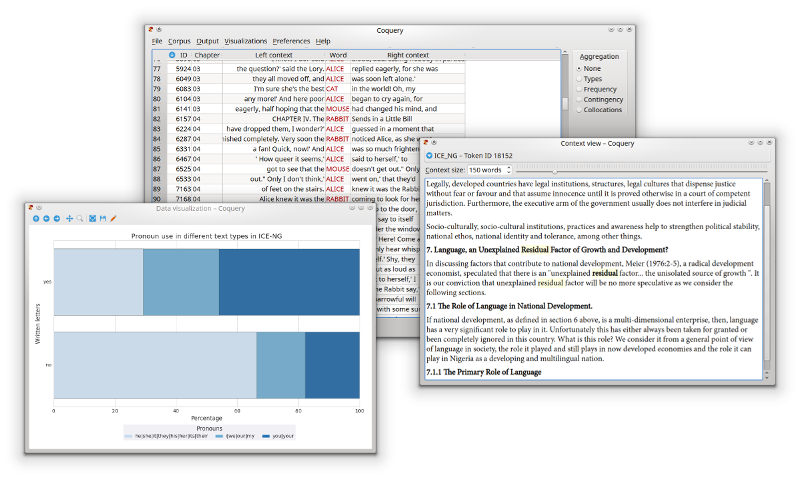

Coquery is a free corpus query tool for linguists, lexicographers, translators, and anybody who wishes to search and analyse text corpora. It is available for Windows, Linux, and Mac OS X computers.

You can either build your own corpus from a collection of text files (PDF, MS Word, OpenDocument, HTML, or plain text) in a directory on your computer, or install a corpus module for one of the supported corpora (Coquery does not provide the corpus data files themselves).

Tutorials and documentation can be found on the Coquery website: http://www.coquery.org

An incomplete list of the things you can do with Coquery:

- Use the corpus manager to install one of the supported corpora

- Build your own corpus from PDF, HTML, .docx, .odt, or plain text files

- Filter your query, for example by year, genre, or speaker gender

- Choose which corpus features will be included in your query results

- View every token that matches your query within its context

- Match tokens by orthography, phonetic transcription, lemma, or gloss, and restrict your query by part-of-speech

- Use string functions, e.g. to test whether a token contains a given letter sequence

- Use the same query syntax for all installed corpora

- Automate queries by reading them from an input file

- Save query results from speech corpora as Praat TextGrids

- Summarize the query results as frequency tables or contingency tables

- Calculate entropies and relative frequencies

- Fetch collocations and calculate association statistics such as mutual information scores or conditional probabilities

- Use bar charts, heat maps, or bubble charts to visualize frequency distributions

- Illustrate diachronic changes by using time series plots

- Show the distribution of tokens within a corpus in a barcode or a beeswarm plot

- Connect either to easy-to-use internal databases or to powerful MySQL servers

- Access large databases on a MySQL server over the network

- Create links between tables from different corpora, e.g. to provide phonetic transcriptions for tokens in an unannotated corpus
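To give a rough idea of what some of the statistics in the list above measure, here is a minimal Python sketch that computes relative frequencies, Shannon entropy, pointwise mutual information, and a conditional probability from a plain list of tokens. This is not Coquery's own implementation: the function names are hypothetical, and the assumptions (log base 2, adjacent-bigram collocations, marginals counted over bigram positions) are illustrative choices that may differ from how Coquery computes these values.

```python
import math
from collections import Counter


def relative_frequencies(tokens):
    """Relative frequency of each word type: count / total number of tokens."""
    counts = Counter(tokens)
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()}


def entropy(tokens):
    """Shannon entropy (in bits) of the word-type distribution."""
    return -sum(p * math.log2(p) for p in relative_frequencies(tokens).values())


def collocation_stats(tokens, node, collocate):
    """Return (PMI, P(collocate | node)) for the adjacent bigram (node, collocate).

    Assumption: collocates are counted only in the position immediately
    to the right of the node word, and all probabilities are estimated
    over bigram positions.
    """
    bigrams = list(zip(tokens, tokens[1:]))
    n_bigrams = len(bigrams)
    pair = sum(1 for bigram in bigrams if bigram == (node, collocate))
    node_first = sum(1 for first, _ in bigrams if first == node)
    coll_second = sum(1 for _, second in bigrams if second == collocate)

    if pair == 0:
        # PMI is undefined (minus infinity) when the pair never co-occurs.
        pmi = float("-inf")
    else:
        p_pair = pair / n_bigrams
        p_node = node_first / n_bigrams
        p_coll = coll_second / n_bigrams
        pmi = math.log2(p_pair / (p_node * p_coll))

    # Conditional probability of the collocate given the node word.
    cond = pair / node_first if node_first else 0.0
    return pmi, cond


if __name__ == "__main__":
    sample = "the cat sat on the mat the cat ran".split()
    print(relative_frequencies(sample)["the"])
    print(entropy(sample))
    print(collocation_stats(sample, "the", "cat"))
```

Running the sketch on the toy sentence shows that "the" accounts for a third of the tokens and that "cat" follows "the" in two of the three bigrams that start with "the", so P(cat | the) is 2/3 and the PMI is positive. Real corpus tools usually count collocates within a configurable window rather than only the adjacent position.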

Coquery already has installers for the following linguistic corpora and lexical databases:

- British National Corpus (BNC)

- Brown Corpus

- Buckeye Corpus

- CELEX Lexical Database (English)

- Carnegie Mellon Pronunciation Dictionary (CMUdict)

- Corpus of Contemporary American English (COCA)

- Corpus of Historical American English (COHA)

- Ġabra: an open lexicon for Maltese

- ICE-Nigeria

- Switchboard-1 Telephone Speech Corpus

If the list is missing a corpus that you want to see supported in Coquery, you can either write your own corpus installer in Python using the installer API, or you can contact the Coquery maintainers and ask them for assistance.

Copyright (c) 2016 Gero Kunter

Initial development was supported by: English Linguistics, Institut für Anglistik und Amerikanistik, Heinrich-Heine-Universität Düsseldorf

Coquery is free software released under the terms of the GNU General Public License (version 3).