Open NSynth Super is an experimental physical interface for NSynth, a machine learning algorithm developed by Google Brain’s Magenta team to generate new, unique sounds that exist between different source sounds. Open NSynth Super lets you create and explore these generated sounds through a simple-to-use hardware interface that integrates easily into any musician’s production rig. To find out more, visit the NSynth Super website.

This repository contains all the instructions and files required to build an Open NSynth Super instrument from scratch, and you can use them to make your own version. Anyone can fork the code and schematics to remix the instrument in any way they wish.

This document has three chapters – an overview of the instrument itself, a 'make your own' guide, and an overview of the audio creation process.

- The 'how it works' overview summarises the technical and user interface features of the Open NSynth Super instrument.

- The 'make your own' guide takes you through the steps required to build an Open NSynth Super unit and load it with an example Linux image containing pre-computed sample audio, helping you to get started with the instrument straight away.

- The audio creation overview gives a high-level summary of the audio creation pipeline, which enables you to process audio files (on a separate computer) and load the instrument with your own input sounds.

This repository also includes individual chapters on every aspect of Open NSynth Super, so you can build a unit from scratch, or hack and customise any part of it:

- Application software

- Audio creation pipeline

- Case & dials

- Firmware & microcontroller

- OS and provisioning

- PCB and hardware

Open NSynth Super is simple to integrate into any music production rig. Like many other synthesizer modules, it receives MIDI input via a 5-pin DIN connector, and outputs audio through a 3.5mm jack. This simple interface allows it to work with almost any MIDI source, like keyboards, DAWs, or hardware sequencers.

The physical interface of Open NSynth Super is constructed around a square touch interface. Using dials in the corners of the touch surface, musicians can select four source sounds and use the touch interface to explore the sounds that the NSynth algorithm has generated between them. A row of fine controls lets them shape the new sounds further.

(A) Instrument selectors & patch storage - These rotary dials are used to select the instruments that are assigned to the corners of the interface. In version 1.2.0, these selectors can be pushed down to store or clicked to recall settings patches.

(B) OLED display - A high-contrast display shows you the state of the instrument and additional information about the controls that you are interacting with.

(C) Fine controls - These six dials are used to further customize the audio output by the device:

- 'Position' sets the initial position of the wave, allowing you to cut out the attack of a waveform, or to start from the tail of a newly created sound.

- 'Attack' controls the time taken for the initial run-up of the level from zero to its peak.

- 'Decay' controls the time taken for the subsequent run down from the attack level to the designated sustain level.

- 'Sustain' sets the level during the main sequence of the sound's duration, until the key is released.

- 'Release' controls the time taken for the level to decay from the sustain level to zero after the key is released.

- 'Volume' adjusts the overall output volume of the device.

(D) Touch interface - This is a capacitive sensor, like the mouse pad on a laptop, which is used to explore the world of new sounds that NSynth has generated between your chosen source audio.
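The envelope dials listed under (C) follow the familiar attack/decay/sustain/release shape. As a rough illustration only, the sketch below computes an envelope level over time using straight-line segments. The function name `adsr_level`, its parameters, and the linear segments are assumptions made for this example; the instrument's own envelope code may use different curves and units.

```python
def adsr_level(t, attack, decay, sustain, release, note_off):
    """Return an envelope level in [0, 1] at time t (seconds after note-on).

    attack, decay, release are durations in seconds; sustain is a level in
    [0, 1]; note_off is the time at which the key is released.
    Linear segments are an assumption made for this sketch.
    """

    def held(u):
        # Level while the key is still held down.
        if attack > 0 and u < attack:
            return u / attack                      # run-up from zero to peak
        u -= attack
        if decay > 0 and u < decay:
            return 1.0 - (1.0 - sustain) * (u / decay)  # run-down to sustain
        return sustain                             # hold until key release

    if t < 0:
        return 0.0
    if t < note_off:
        return held(t)

    # After release: fade from whatever level was reached at note-off.
    start = held(note_off)
    elapsed = t - note_off
    if release <= 0 or elapsed >= release:
        return 0.0
    return start * (1.0 - elapsed / release)
```

Releasing the key during the attack or decay phase starts the fade from the level reached at that moment, rather than jumping to the sustain level first.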

There are several distinct components to each Open NSynth Super unit: a custom PCB with a dedicated microcontroller and firmware for handling user inputs; a Raspberry Pi 3 computer running Raspbian Linux and an openFrameworks-based audio synthesiser application; and a series of scripts for preparing audio on a GPU-equipped Linux server using the NSynth algorithm. In addition to the software, firmware, and hardware, there are files for creating a casing and dials for the unit.

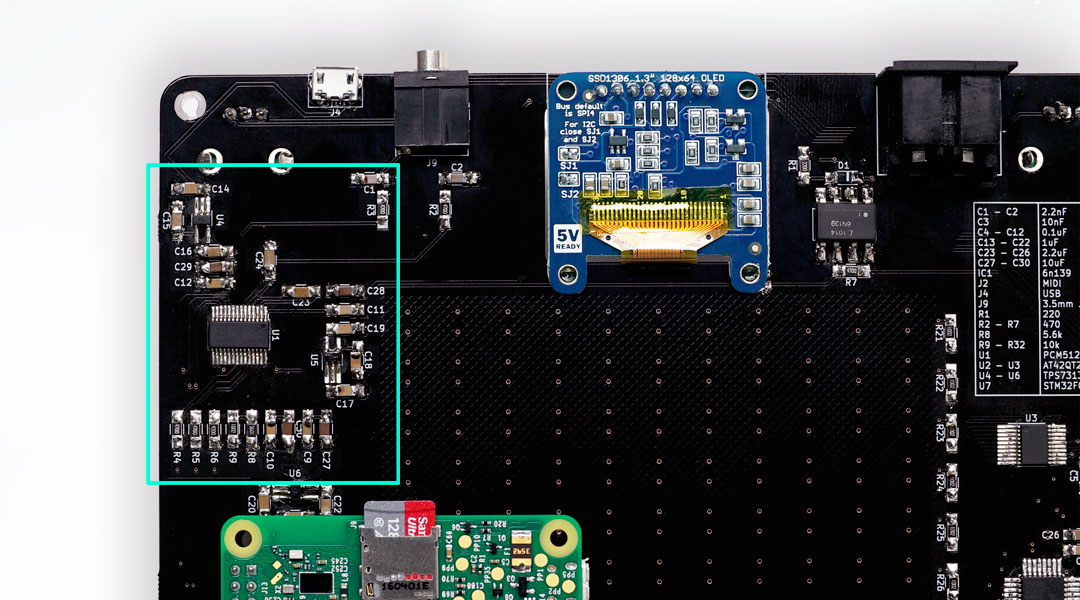

The electronics are built around a Raspberry Pi 3 running Raspbian Linux, and a custom PCB used to read the inputs and control the outputs. A microcontroller on the PCB manages the physical inputs: there are four rotary encoders on the four corners for instrument selection; six potentiometers below the interface to control the position, envelope, and volume settings; and a capacitive grid on the surface of the PCB (exposed through the top layer of the case) used to select the mixing point of the four instruments.

More information on the electronics, hardware, and firmware can be found here, and here. A complete bill of materials for the case and electronics is available in this Excel spreadsheet.

Open NSynth Super runs a multisampler audio application built on openFrameworks to process incoming MIDI note data and generate sound output. More information about this application can be found in the software readme file.

We designed a case for the electronics that can be easily manufactured with a laser cutter, and held together with standard screws and fittings. This design is easily customisable using different materials, colors, dials, and shapes. Read more about this in the case readme file.

Audio for the Open NSynth Super instrument is produced by the NSynth algorithm. Because generating audio requires a great deal of processing power, this repository includes a set of scripts that you can run on a server which will take any audio recordings of your choice and convert them into a format compatible with the instrument. For more information, see the audio readme file or the audio creation overview in this document.

This guide gives you an overview of how to create casing and electronics for Open NSynth Super, and load the device with a premade Linux image which is already set up with application software, hardware support, and example sounds.

The example image file includes the following software features:

- Raspbian Linux Lite

- Full configuration to support the Open NSynth Super mainboard

- Open NSynth Super software application pre-loaded and configured

- Sample audio data

- A read-only filesystem for robustness

You will need the following off-the-shelf items to complete this guide:

- Micro SD card (16GB or 64GB)

- Raspberry Pi 3B+

- 5V 2A micro-USB power supply

You will also need the following Open NSynth Super-specific items, which are detailed in the steps below:

- Open NSynth Super mainboard

- Laser cut Open NSynth Super case

- 3D printed dials (optional)

This repository contains a reference design for a laser-cut shell for Open NSynth Super. As well as the case design, there are two sizes of 3D-printed dials which can optionally be added to the build. Parts for the case can be cut manually, or obtained easily from a laser-cutting service by supplying these files. More detailed information and specifications for the case can be found in the case readme file.

You should make or order a case following the above guide before moving forward with the build. Don't assemble the case yet, as you will need access to the ports on the Raspberry Pi.

Open NSynth Super is built around a custom PCB with inputs for the hardware controls, audio and data I/O ports, and an OLED display for the UI. The PCB can be ordered fully assembled (this is most cost effective when ordering several boards), or be assembled by hand in a few hours. Further detail on the manufacture and assembly of the board can be found in the PCB readme file.

You will need to obtain or build your PCB before proceeding further with this guide.

In order to test the board, you will need to have a working Open NSynth Super software installation, which you will prepare at the next step. Before proceeding, connect the Raspberry Pi GPIO pins to the Open NSynth Super mainboard.

There are two preconfigured OS images available, both loaded with sample audio and fully configured for use with NSynth Super. Depending on the size of your SD card, you can choose either the 64GB image or 16GB image.

The easiest way to create the SD card is to use GUI software like Etcher, Win32DiskImager, or the SD Card Association's formatter to burn the example image to a card.

The supplied images are compressed in bz2 format. These can be decompressed on the fly when writing to an SD card using the following command:

$ bzcat <IMAGE NAME> | sudo dd of=<DISK NAME>
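If you would rather script this step, the same decompress-while-writing idea can be sketched with Python's standard `bz2` module. The function name `write_image` and the chunk size are assumptions for this example, not part of the repository. Writing to a raw disk device generally requires elevated privileges, and pointing the target at the wrong disk will destroy its contents.

```python
import bz2


def write_image(compressed_path, target_path, chunk_size=4 * 1024 * 1024):
    """Stream-decompress a .bz2 image into target_path.

    Data is copied chunk by chunk, so the uncompressed image is never held
    in memory or written to a temporary file. Returns the number of
    uncompressed bytes written.
    """
    written = 0
    with bz2.open(compressed_path, "rb") as src, open(target_path, "wb") as dst:
        while True:
            chunk = src.read(chunk_size)
            if not chunk:          # end of the compressed stream
                break
            dst.write(chunk)
            written += len(chunk)
    return written
```

For a dry run, point `target_path` at an ordinary file first and confirm that the resulting size matches what you expect before writing to the card.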

Connect a keyboard and screen to the Raspberry Pi, insert the SD card, and plug a USB power cable into the socket on the Open NSynth Super mainboard. You should see the Pi booting up on the connected display; when you receive a prompt, you can log in with the default username and password: pi / raspberry. Note that the device might run a file system check when you first boot up from the new card – this will take around 5 minutes, depending on the size of the disk.

The firmware will need to be loaded to the microcontroller on your Open NSynth Super mainboard. This allows the software application running on the Raspberry Pi to interact with the controls.

Because the file system is locked for robustness, you will need to remount the disk as read/write by running the following command on your Raspberry Pi:

$ sudo mount -o remount,rw /
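To confirm that the remount took effect, you can inspect the mount options that Linux reports in `/proc/mounts`. The sketch below parses text in that format; the helper names `mount_options` and `is_writable` are made up for this example.

```python
def mount_options(mounts_text, mount_point="/"):
    """Return the option list for mount_point from /proc/mounts-style text.

    Each line has the fields: device, mount point, filesystem type, options.
    Returns None if the mount point is not listed.
    """
    options = None
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) >= 4 and fields[1] == mount_point:
            # Keep the last matching entry, which reflects the current mount.
            options = fields[3].split(",")
    return options


def is_writable(mounts_text, mount_point="/"):
    """True if mount_point is listed with the 'rw' option."""
    options = mount_options(mounts_text, mount_point)
    return options is not None and "rw" in options
```

On the Raspberry Pi, reading the file with `open("/proc/mounts").read()` and passing the text to `is_writable` should report `True` once the remount command has succeeded.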

Next, navigate to the firmware directory and run the install command:

$ cd /home/pi/open-nsynth-super-master/firmware/src

$ make install

This command will build and install the firmware to the microcontroller. You will receive a message on screen confirming the operation has been successful:

```
** Programming Started **
auto erase enabled
Info : device id = 0x10006444
Info : flash size = 32kbytes
wrote 12288 bytes from file ../bin/main.elf in 0.607889s (19.740 KiB/s)
** Programming Finished **
** Verify Started **
verified 11788 bytes in 0.050784s (226.680 KiB/s)
** Verified OK **
** Resetting Target **
adapter speed: 1001 kHz
shutdown command invoked
```
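If you capture this output to a file (for example when flashing several boards), a small check like the following can confirm success. It is only a sketch: the marker strings are copied from the sample output above, other versions of the flashing tool may word them differently, and the function names are hypothetical.

```python
import re


def firmware_flash_ok(log_text):
    """True if the log contains both the finish and verify markers."""
    return ("** Programming Finished **" in log_text
            and "** Verified OK **" in log_text)


def bytes_written(log_text):
    """Return the byte count from the 'wrote N bytes' line, or None."""
    match = re.search(r"wrote (\d+) bytes", log_text)
    return int(match.group(1)) if match else None
```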

More detailed information about the firmware can be found in the firmware readme file. When the firmware installation is complete, you can power down the system using the following command, and move to the next step:

$ sudo poweroff

Before assembling the shell and 'finishing' the instrument, it's important to check that everything is functioning correctly. To do this, plug in a MIDI device (like a keyboard), a pair of headphones or speakers, and power on the instrument.

After a few seconds, you should see the grid interface appear on the OLED screen. Move your finger around the touch interface to test its responsiveness. Next, adjust the six controls at the base of the unit; the UI should update according to the control that you are adjusting. Finally, test the four instrument selection encoders, which will scroll through an instrument list on the screen.

To test the audio, ensure your MIDI device is broadcasting on channel 1 (the default channel), and send some notes to the device. You should hear audio coming from the speakers or headphones (if you don't hear anything, make sure the device audio is turned up, and that the envelope and position controls aren't cutting the audible part of the waveform).
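If you are generating test notes from your own software rather than a keyboard, it helps to know how the channel appears in the raw MIDI bytes. In MIDI 1.0, a note-on message is a status byte `0x90` plus the zero-based channel number, followed by the note number and velocity, so channel 1 uses status byte `0x90`. The helper names below are made up for this sketch, and actually sending the bytes requires a MIDI interface and library, which are not shown.

```python
def note_on(note, velocity=100, channel=1):
    """Build a 3-byte MIDI note-on message. Channels are numbered 1-16."""
    if not 1 <= channel <= 16:
        raise ValueError("channel must be in 1..16")
    if not 0 <= note <= 127 or not 0 <= velocity <= 127:
        raise ValueError("note and velocity must be in 0..127")
    # The low nibble of the status byte carries the zero-based channel.
    return bytes([0x90 | (channel - 1), note, velocity])


def note_off(note, channel=1):
    """Build a 3-byte MIDI note-off message with release velocity 0."""
    if not 1 <= channel <= 16:
        raise ValueError("channel must be in 1..16")
    if not 0 <= note <= 127:
        raise ValueError("note must be in 0..127")
    return bytes([0x80 | (channel - 1), note, 0])
```

For example, `note_on(60)` yields the bytes `90 3C 64` in hexadecimal: middle C at velocity 100 on channel 1.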

Because the standard image is preconfigured, you can use it to test that your Open NSynth Super mainboard is functioning correctly. If all features are up and running, you can move on to final assembly.

Now that you have a fully functioning instrument, you can finally build it into its shell. Power the unit down, remove all connections (e.g. screen, keyboard, MIDI, power), and follow the instructions found here.

With the case assembled, firmware installed, and the device tested, you're ready to go and make music with your Open NSynth Super. There are detailed readmes in this repository for the software application, case, PCB and audio creation pipeline if you want to go into more detail, or start hacking the device.

Sounds for Open NSynth Super are created using the neural synthesis technique implemented by Google Brain’s Magenta team as part of their NSynth project. You can read more about Magenta and NSynth on their project page.

Because generating audio requires a great deal of processing power, this repository includes a set of scripts that you can run on a server which will take any audio recordings of your choice and convert them into a format compatible with the instrument. This audio pipeline is built on top of the NSynth implementation available through Magenta's GitHub page.

The pipeline has the following stages:

- Assemble input audio files and assign sounds to corners of the interface

- Calculate the embeddings of the input sounds with the NSynth model

- Interpolate between these to create a set of embeddings for newly generated sounds

- Generate the audio

- Remove crackles and other artifacts from the generated audio

- Package and deploy the audio to the device
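The interpolation stage can be pictured as blending the four corner embeddings according to a position on the square interface. The sketch below uses bilinear weights over a regular grid. This is an assumption for illustration: real NSynth embeddings are multi-dimensional arrays rather than short lists, and the weighting scheme and grid resolution used by the actual pipeline are described in the audio readme, not here. All function names are hypothetical.

```python
def corner_weights(x, y):
    """Bilinear weights for a point (x, y) in the unit square.

    Order of corners: (0, 0), (1, 0), (0, 1), (1, 1). The weights sum to 1.
    """
    return [(1 - x) * (1 - y), x * (1 - y), (1 - x) * y, x * y]


def interpolate(corners, x, y):
    """Blend four equal-length embedding vectors at position (x, y)."""
    weights = corner_weights(x, y)
    length = len(corners[0])
    return [
        sum(w * emb[i] for w, emb in zip(weights, corners))
        for i in range(length)
    ]


def embedding_grid(corners, resolution):
    """Return a resolution x resolution grid of blended embeddings.

    resolution must be at least 2 so that the grid includes all corners.
    """
    if resolution < 2:
        raise ValueError("resolution must be at least 2")
    steps = [i / (resolution - 1) for i in range(resolution)]
    return [[interpolate(corners, x, y) for x in steps] for y in steps]
```

Each grid cell would then be passed to the generation stage to produce audio, which is why the number of cells has a direct effect on processing time.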

More detailed instructions on how to run the pipeline, including how to set up and provision a GPU-equipped Linux server for processing audio, are available here.

The latest version of Open NSynth Super is 1.2.0. This version adds support for storage and recall of patches and settings, swaps the instrument selector encoders for push-button variants, and introduces note looping to enable sustaining notes beyond their original sample length. Version 1.2.0 software is backwards compatible with version 1.0.0 hardware, although the push-button patch storage feature is not available.

Version 1.0.0 is tagged in this repository. The example disk images for software version 1.0.0 are available for download as a 64GB image or 16GB image.

This is a collaborative effort between Google Creative Lab and Magenta, Kyle McDonald, and our partners at RRD Labs. This is not an official Google product.

We encourage open sourcing projects as a way of learning from each other. Please respect our and other creators’ rights, including any copyright and trademark rights, when sharing these works and creating derivative work. If you want more info on Google's policy, you can find it here. To contribute to the project, please refer to the contributing document in this repository.