@inproceedings{hou2020multiview,

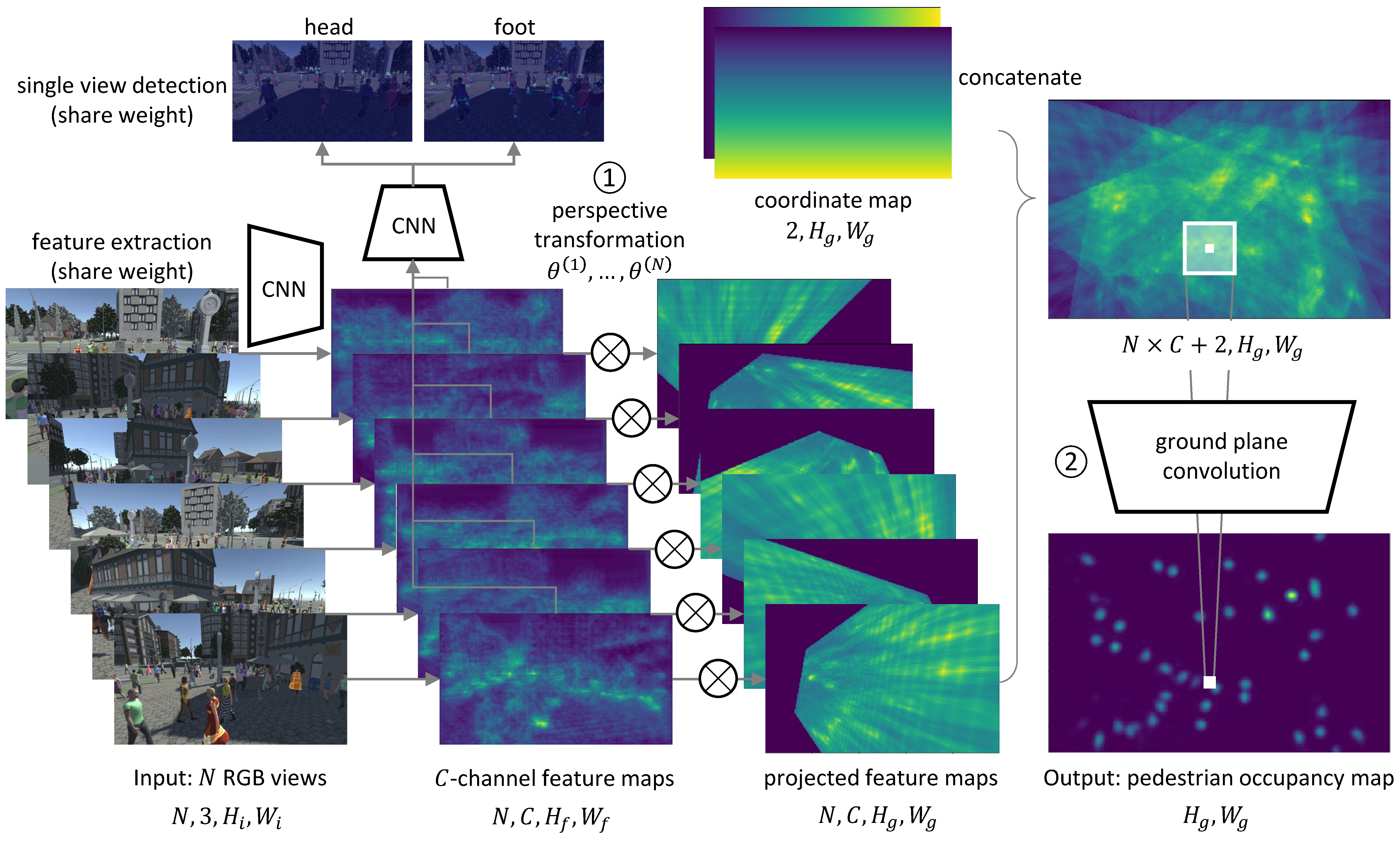

title={Multiview Detection with Feature Perspective Transformation},

author={Hou, Yunzhong and Zheng, Liang and Gould, Stephen},

booktitle={ECCV},

year={2020}

}

Please visit link for our new work MVDeTr, a transformer-powered multiview detector that achieves a new state of the art!

We release the PyTorch code for MVDet, a state-of-the-art multiview pedestrian detector, and the MultiviewX dataset, a novel synthetic multiview pedestrian detection dataset.

| Wildtrack | MultiviewX |
|---|---|

Using pedestrian models from PersonX, we build a novel synthetic dataset, MultiviewX, in Unity.

The MultiviewX dataset covers an area of 16 meters by 25 meters. We quantize the ground plane into a 640x1000 grid. There are 6 cameras with overlapping fields of view in MultiviewX, each of which outputs a 1080x1920 resolution image. We also generate annotations for 400 frames in MultiviewX at 2 fps (same as Wildtrack). On average, 4.41 cameras cover the same location.
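The figures above imply a grid cell size and an annotated duration, which the following minimal sketch works out. The helper `world_to_grid` is hypothetical and not part of the MVDet code: it assumes the origin sits at one corner of the ground plane, that the 640 cells span the 16 m side, and that the 1000 cells span the 25 m side.

```python
# Figures taken from the dataset description above.
GROUND_SIZE_M = (16.0, 25.0)   # extent of the ground plane in metres
GRID_SHAPE = (640, 1000)       # number of grid cells along each side
NUM_FRAMES = 400
FPS = 2


def cell_size_m():
    """Side lengths of one grid cell in metres."""
    return tuple(size / cells for size, cells in zip(GROUND_SIZE_M, GRID_SHAPE))


def annotated_duration_s():
    """Length of the annotated sequence in seconds."""
    return NUM_FRAMES / FPS


def world_to_grid(x_m, y_m):
    """Map a ground-plane point (metres) to integer grid indices.

    Hypothetical helper: the origin and axis order are assumptions,
    not the dataset's documented convention.
    """
    if not (0 <= x_m < GROUND_SIZE_M[0] and 0 <= y_m < GROUND_SIZE_M[1]):
        raise ValueError("point lies outside the ground plane")
    # Multiply before dividing to avoid float floor-division surprises.
    gx = int(x_m * GRID_SHAPE[0] / GROUND_SIZE_M[0])
    gy = int(y_m * GRID_SHAPE[1] / GROUND_SIZE_M[1])
    return gx, gy
```

Under these assumptions each cell is 2.5 cm on a side, and the 400 annotated frames span 200 seconds.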

Please refer to this link for download.

Please refer to this repo for a detailed guide & toolkits you might need.

This repo is dedicated to the code for MVDet.

This code uses the following libraries:

- python 3.7+

- pytorch 1.4+ & torchvision

- numpy

- matplotlib

- pillow

- opencv-python

- kornia

- matlab & matlabengine (required for evaluation; see this link for a detailed guide)
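As a quick sanity check before training, a short script along these lines can report which of the Python packages above are not importable. This is a sketch rather than part of the repo: the function name `missing_requirements` is hypothetical, the mapping from package names to import names reflects the usual conventions (`PIL` for pillow, `cv2` for opencv-python), it does not verify version numbers, and it leaves out the MATLAB engine, which is installed separately.

```python
import importlib.util

# Package name (as listed above) -> module name used in `import`.
REQUIRED_MODULES = {
    "pytorch": "torch",
    "torchvision": "torchvision",
    "numpy": "numpy",
    "matplotlib": "matplotlib",
    "pillow": "PIL",
    "opencv-python": "cv2",
    "kornia": "kornia",
}


def missing_requirements(modules=REQUIRED_MODULES):
    """Return the package names whose module cannot be found."""
    missing = []
    for package, module in modules.items():
        try:
            found = importlib.util.find_spec(module) is not None
        except (ImportError, ValueError):
            # find_spec can raise for broken or half-initialised modules.
            found = False
        if not found:
            missing.append(package)
    return missing


if __name__ == "__main__":
    missing = missing_requirements()
    print("missing packages:", ", ".join(missing) if missing else "none")
```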

By default, all datasets are in ~/Data/. We use MultiviewX and Wildtrack in this project.

Your ~/Data/ folder should look like this:

    Data
    ├── MultiviewX/
    │   └── ...
    └── Wildtrack/
        └── ...
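The layout above can be checked with a few lines of Python before launching a run. This is only a sketch: `missing_datasets` is a hypothetical helper, and it checks just the two top-level folders, not their contents.

```python
from pathlib import Path

# Top-level dataset folders expected under the data root.
EXPECTED_DATASETS = ("MultiviewX", "Wildtrack")


def missing_datasets(root="~/Data"):
    """Return the expected dataset folders that do not exist under `root`."""
    root_path = Path(root).expanduser()
    return [name for name in EXPECTED_DATASETS
            if not (root_path / name).is_dir()]


if __name__ == "__main__":
    missing = missing_datasets()
    if missing:
        print("missing dataset folders:", ", ".join(missing))
    else:
        print("all dataset folders found")
```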

In order to train the classifiers, please run the following:

    CUDA_VISIBLE_DEVICES=0,1 python main.py -d wildtrack

This should automatically return evaluation results similar to the reported 88.2% MODA on the Wildtrack dataset.
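For readers unfamiliar with the metric, MODA (multiple object detection accuracy) is commonly defined as one minus the ratio of missed detections plus false positives to the number of ground-truth objects. The sketch below illustrates only that formula. It is not the evaluation used by this repo, which relies on MATLAB, and the function name and example counts are made up.

```python
def moda(false_negatives, false_positives, num_ground_truth):
    """MODA = 1 - (misses + false positives) / ground-truth count."""
    if num_ground_truth <= 0:
        raise ValueError("num_ground_truth must be positive")
    return 1.0 - (false_negatives + false_positives) / num_ground_truth


if __name__ == "__main__":
    # Made-up counts for illustration only, not results from the paper.
    print(f"MODA: {moda(8, 4, 100):.1%}")
```

Because false positives are penalised alongside misses, MODA can become negative when a detector produces many spurious detections.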

You can download the checkpoints at this link.