A magical toolbox that takes back your personal information.

👉⚡ Usage Guide ⚡ | Video Demo | English | Get the Latest Maintained Version | TG Group

🗣️ TG group: join the group

👉 Developer's Memoir

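As usual, Xiao Ming opens Chrome and browses forums and Tieba. He accidentally clicks an ad on a page and lands on the JD.com store. As he reflexively goes to close the window, he stops (thinking: Huh? How does JD know about the thing I've been wanting lately? I actually need it right now!). Since the page is already open, he checks the product details (thinking: Hey, not bad) and decides to place an order!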

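Xiao Bai can't stop listening to NetEase Cloud Music's daily recommended playlist (thinking: Wow! How is every song in this playlist exactly my style? NetEase Cloud Music is amazing, it really gets me! I have to get the Vinyl VIP membership!). Meanwhile he browses Zhihu questions like "How to elegantly XXX?", "What is it like to XXX?", and "How do you evaluate XXX?" (thinking: Huh? This is exactly the question I wanted to ask, and someone already asked it! What??? Thousands of answers!! Let's take a look!)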

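Xiao Da keeps learning even at work, browsing major tech forums and blogs such as Cnblogs, CSDN, OSChina, Jianshu, and Juejin. He finds the homepage recommendations excellent (thinking: These tech posts are great, and they show up without my even searching). Then he opens his own blog homepage and realizes he has been writing posts for three years, and his tech stack keeps growing (thinking: Why don't blog platforms offer a data-analysis dashboard? I want to see how many posts I've published over the years and when, which posts are the most popular, which technologies I've spent the most time on, and whether my past writing peaks were at night or in the early morning. I wish the system gave me more data to guide my writing!)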

Looking at these scenarios, you might marvel at how technology keeps advancing and has greatly improved the way we live.

But think a little deeper: every website you browse and every website you sign up for records your information and your footprints.

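The chilling reality behind this is that our personal data is laid bare on the internet, and many companies use it to make huge profits, for example by collecting and analyzing user data to push targeted ads and charging high advertising fees. Yet we, the people who produce this data, get no share of the value it generates.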

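Imagine a tool that could help you take back your personal information, gather the personal data scattered across many sites into one place, analyze that data and give you suggestions, and visualize it so you understand yourself more clearly.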

Would you need a tool like this? Would you like a tool like this?

With that in mind, I set out to build INFO-SPIDER 👇👇👇

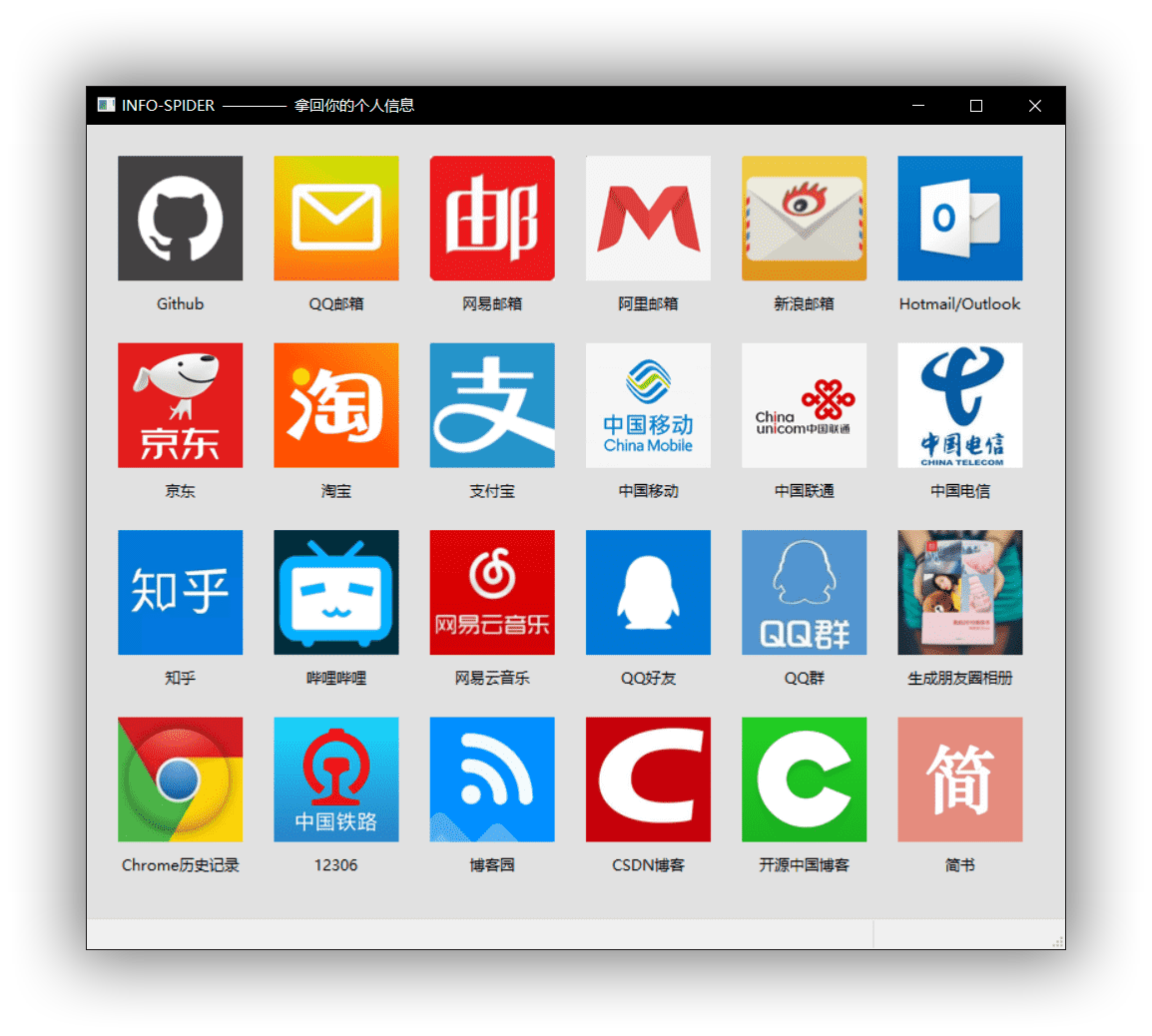

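INFO-SPIDER is a crawler toolbox that brings many data sources together in one place. It aims to help users take back their own data safely and quickly; its code is open source and its workflow is transparent. It also provides data analysis, generating chart files from user data so that users can understand their own information more intuitively and deeply. Currently supported data sources include GitHub, QQ Mail, NetEase Mail, Alibaba Mail, Sina Mail, Hotmail, Outlook, JD.com, Taobao, Alipay, China Mobile, China Unicom, China Telecom, Zhihu, Bilibili, NetEase Cloud Music, QQ friends, QQ groups, WeChat Moments album generation, browser history, 12306, Cnblogs, CSDN blogs, OSChina blogs, and Jianshu.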

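- Safe and reliable: this is an open-source project with concise code; all source code is visible and everything runs locally.

- Easy to use: a GUI is provided; just click the data source you want and follow the prompts.

- Clear structure: all data sources are independent of one another and highly portable; all crawler scripts are in the project's Spiders folder.

- Many data sources: the project currently supports 24+ data sources, with more being added.

- Unified data format: all crawled data is stored as JSON, which makes later analysis easy.

- Rich personal data: the project crawls as much of your personal data as it can; you can trim it as needed when processing it later.

- Data analysis: the project provides visual analysis of personal data, currently for only some data sources.

- Thorough documentation: the project includes a complete usage guide and video tutorials.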
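The data-analysis feature works on the JSON files the spiders produce. As a rough illustration of the questions Xiao Da asks above (how many posts per month, and at what hour writing peaks), here is a minimal standard-library sketch. It assumes a blog spider has saved a list of posts in which each post carries a `publish_time` string such as `2019-03-01 23:15`; that field name and format are assumptions, not InfoSpider's actual schema. The project itself draws its charts with pyecharts; this sketch only computes the numbers you would feed into such a chart.

```python
from collections import Counter
from datetime import datetime

# ASSUMPTION: each post is a dict with a "publish_time" string such as
# "2019-03-01 23:15". Adjust TIME_KEY / TIME_FMT to match the real JSON file.
TIME_KEY = "publish_time"
TIME_FMT = "%Y-%m-%d %H:%M"


def _timestamps(posts):
    """Yield a datetime for every post that has a timestamp; skip the rest."""
    for post in posts:
        raw = post.get(TIME_KEY)
        if raw:
            yield datetime.strptime(raw, TIME_FMT)


def posts_per_month(posts):
    """Return {"YYYY-MM": number of posts}, sorted by month."""
    months = Counter(ts.strftime("%Y-%m") for ts in _timestamps(posts))
    return dict(sorted(months.items()))


def posts_per_hour(posts):
    """Return a 24-element list: how many posts were published in each hour."""
    hours = Counter(ts.hour for ts in _timestamps(posts))
    return [hours[h] for h in range(24)]  # Counter yields 0 for missing hours


def peak_hour(posts):
    """Hour of day (0-23) with the most posts; the earliest hour wins ties."""
    per_hour = posts_per_hour(posts)
    return max(range(24), key=per_hour.__getitem__)


if __name__ == "__main__":
    sample = [
        {"title": "A", "publish_time": "2019-03-01 23:15"},
        {"title": "B", "publish_time": "2019-05-12 23:40"},
        {"title": "C", "publish_time": "2020-01-08 09:05"},
    ]
    print(posts_per_month(sample))  # {'2019-03': 1, '2019-05': 1, '2020-01': 1}
    print(peak_hour(sample))        # 23
```

Returning all 24 hourly buckets, including empty ones, keeps the x-axis of a bar chart continuous.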

If you run into problems at this step, you can get the installation-free version of InfoSpider.

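- Go to the `tools` directory

- Run `python3 main.py`

- In the window that opens, click a data source button and choose where to save the data when prompted

- In the browser that pops up, enter your username and password; crawling starts automatically, and the browser closes itself when it finishes.

- View the downloaded data (xxx.json) and the data-analysis charts (xxx.html) in the corresponding directory.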
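After a run, each data source leaves `xxx.json` files in the directory you chose. A minimal sketch for loading them back into Python for your own analysis, assuming the files sit directly in that one directory and each holds valid UTF-8 JSON; the function name `load_spider_output` is hypothetical, not part of InfoSpider.

```python
import json
from pathlib import Path


def load_spider_output(data_dir):
    """Load every *.json file directly under data_dir.

    Returns {file name without ".json": parsed JSON}. Other files, such as
    the xxx.html charts, are ignored.
    """
    results = {}
    for path in sorted(Path(data_dir).glob("*.json")):
        with path.open(encoding="utf-8") as f:
            results[path.stem] = json.load(f)
    return results
```

For example, `load_spider_output("path/to/your/data")` returns a dict keyed by each file's name without the `.json` extension; the actual file names depend on each spider.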

Limited release now on sale... take a look:

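- The latest maintained version of InfoSpider

- More comprehensive personal data analysis

- No need to install any dependencies; convenient and beginner-friendly

- A prepackaged program: just double-click to run it

- A step-by-step guide to packaging InfoSpider

- One-on-one technical support from the developer

- Free access to the upcoming all-new 2.0 release after purchase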

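Supported data sources:

- GitHub

- QQ Mail

- NetEase Mail

- Alibaba Mail

- Sina Mail

- Hotmail

- Outlook

- JD.com

- Taobao

- Alipay

- China Mobile

- China Unicom

- China Telecom

- Zhihu

- Bilibili

- NetEase Cloud Music

- QQ friends (cjh0613)

- QQ groups (cjh0613)

- WeChat Moments album generation

- Browser history

- 12306

- Cnblogs

- CSDN blog

- OSChina blog

- Jianshu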
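The browser-history source reads data that Chrome already keeps locally. Chrome stores history in an SQLite database file named `History` in the profile directory (on Windows, typically `%LOCALAPPDATA%\Google\Chrome\User Data\Default\History`), whose `urls` table records each URL's title, visit count, and last visit time in microseconds since 1601-01-01 UTC. The sketch below reads that table with Python's `sqlite3`; it illustrates the idea under those assumptions and is not InfoSpider's own browser-history script.

```python
import shutil
import sqlite3
import tempfile
from datetime import datetime, timedelta, timezone
from pathlib import Path

# Chrome ("WebKit") timestamps count microseconds since 1601-01-01 UTC.
WEBKIT_EPOCH = datetime(1601, 1, 1, tzinfo=timezone.utc)


def webkit_to_datetime(us):
    """Convert a Chrome timestamp (microseconds since 1601) to a UTC datetime."""
    return WEBKIT_EPOCH + timedelta(microseconds=us)


def read_chrome_history(history_file, limit=100):
    """Return the `limit` most recently visited URLs from a Chrome History DB.

    The file is copied first because Chrome keeps it locked while running.
    """
    with tempfile.TemporaryDirectory() as tmp:
        copy = Path(tmp) / "History"
        shutil.copy2(history_file, copy)
        conn = sqlite3.connect(copy)
        try:
            rows = conn.execute(
                "SELECT url, title, visit_count, last_visit_time "
                "FROM urls ORDER BY last_visit_time DESC LIMIT ?",
                (limit,),
            ).fetchall()
        finally:
            conn.close()  # close before the temp dir is deleted
    return [
        {
            "url": url,
            "title": title,
            "visit_count": count,
            "last_visit": webkit_to_datetime(ts).isoformat(),
        }
        for url, title, count, ts in rows
    ]
```

Working on a temporary copy avoids "database is locked" errors while the browser is open. Chrome's schema can change between versions, so treat the column names as something to verify against your own `History` file.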

Data analysis is currently available for:

- Cnblogs

- CSDN blog

- OSChina blog

- Jianshu

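Planned:

- A web interface that works across platforms

- Statistical analysis of the crawled personal data

- In-depth analysis that combines machine learning, natural language processing, and other techniques

- Intuitive charts of the analysis results

- More data sources...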

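- This project addresses a pain point: personal data is scattered across many different companies, often forming data silos that keep multidimensional data from being combined.

- The author believes the project's greatest potential lies in fusing multidimensional data and analyzing personal data, so that individuals get the most value out of their own data.

- Because the project obtains data by crawling, it is time-sensitive: it needs continuous maintenance and must be updated whenever the target websites change.

- The project has a clear structure: all data sources are independent of one another and highly portable. All crawler scripts live in the project's Spiders folder and can be ported into your own programs.

- Version 1.0 has only been tested on Windows with Python 3.7 and has not been adapted to other platforms.

- Version 2.0 is planned as a refactor that provides web-based operation and data visualization to support multiple platforms.

- The INFO-SPIDER code is open source; stars are welcome.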
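Because every data source is independent and saves its own JSON, a spider can be lifted out and reused on its own. One way to express that structure is a small registry in which each crawler is a standalone function and the only shared contract is "return JSON-serializable data". The names below (`spider`, `SPIDERS`, `run`, `crawl_example`) are hypothetical and only sketch the idea; they are not the actual layout of the Spiders folder.

```python
import json
from pathlib import Path
from typing import Callable, Dict

# HYPOTHETICAL registry: data-source name -> function returning its data.
SPIDERS: Dict[str, Callable[[], object]] = {}


def spider(name):
    """Decorator that registers a standalone crawler under `name`."""
    def register(func):
        SPIDERS[name] = func
        return func
    return register


@spider("example")
def crawl_example():
    # A real spider would log in and scrape here; this returns fixed data.
    return [{"title": "hello", "date": "2020-07-19"}]


def run(name, save_dir):
    """Run one registered spider and save its result as <save_dir>/<name>.json."""
    data = SPIDERS[name]()
    out = Path(save_dir) / f"{name}.json"
    out.parent.mkdir(parents=True, exist_ok=True)
    # ensure_ascii=False keeps Chinese text readable in the saved file
    out.write_text(json.dumps(data, ensure_ascii=False, indent=2), encoding="utf-8")
    return out
```

Since no spider depends on another, porting one into a different program only means copying its crawl function and calling it directly; the registry and `run` are a convenience, not a requirement.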

Thank you to JetBrains, who provide Open Source License for PyCharm!

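This repository is updated irregularly. To get the latest maintained version, please purchase it to support the project. Thank you!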

Changelog

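- July 10, 2020

  - Updated the GUI layout
  - Added the GitHub, QQ friends, and QQ groups data sources

- July 12, 2020

  - Fixed the QQ Mail, NetEase Mail, Alibaba Mail, Sina Mail, Hotmail, and Outlook data sources
  - Added WeChat Moments album generation

- July 14, 2020

  - Fixed the JD.com, Taobao, Alipay, and 12306 data sources
  - Added Chrome browsing history

- July 17, 2020

  - Fixed the China Mobile and China Unicom data sources
  - Added the Zhihu, Bilibili, and NetEase Cloud Music data sources

- July 19, 2020

  - Added the Cnblogs, CSDN, OSChina, and Jianshu data sources
  - Wrote the usage guide
  - Recorded the video tutorial

- July 30, 2020

  - Added data analysis for Cnblogs
  - Used pyecharts to draw charts and save them as HTML files in the data directory

- August 18, 2020

  - Fixed some bugs
  - Updated README.md

- September 12, 2020

  - Replaced the project logo

- October 20, 2020

  - Updated all crawler scripts
  - Built a Python-embed version of InfoSpider
  - Updated the logo

- November 29, 2020

  - Updated the crawler scripts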

GPL-3.0