Kubevious (pronounced [kju:bvi:əs]) is a suite of app-centric assurance, validation, and introspection products for Kubernetes. It helps you run modern Kubernetes applications without disasters and costly outages by continuously validating application manifests, cluster state, and configuration. Kubevious projects detect and prevent errors (typos, misconfigurations, conflicts, inconsistencies) and violations of best practices. Our secret sauce is the ability to validate across multiple manifests and to look at the configuration from the application's vantage point.

Kubevious CLI is a standalone tool that validates YAML manifests for syntax, semantics, conflicts, compliance, and security best-practice violations. It can easily be used during active development and integrated into GitOps processes and CI/CD pipelines to validate changes against live Kubernetes clusters. It is our newest project, built on the lessons learned from the Kubevious Dashboard and on its foundation.

Learn more about securing your Kubernetes apps and clusters here: https://github.com/kubevious/cli

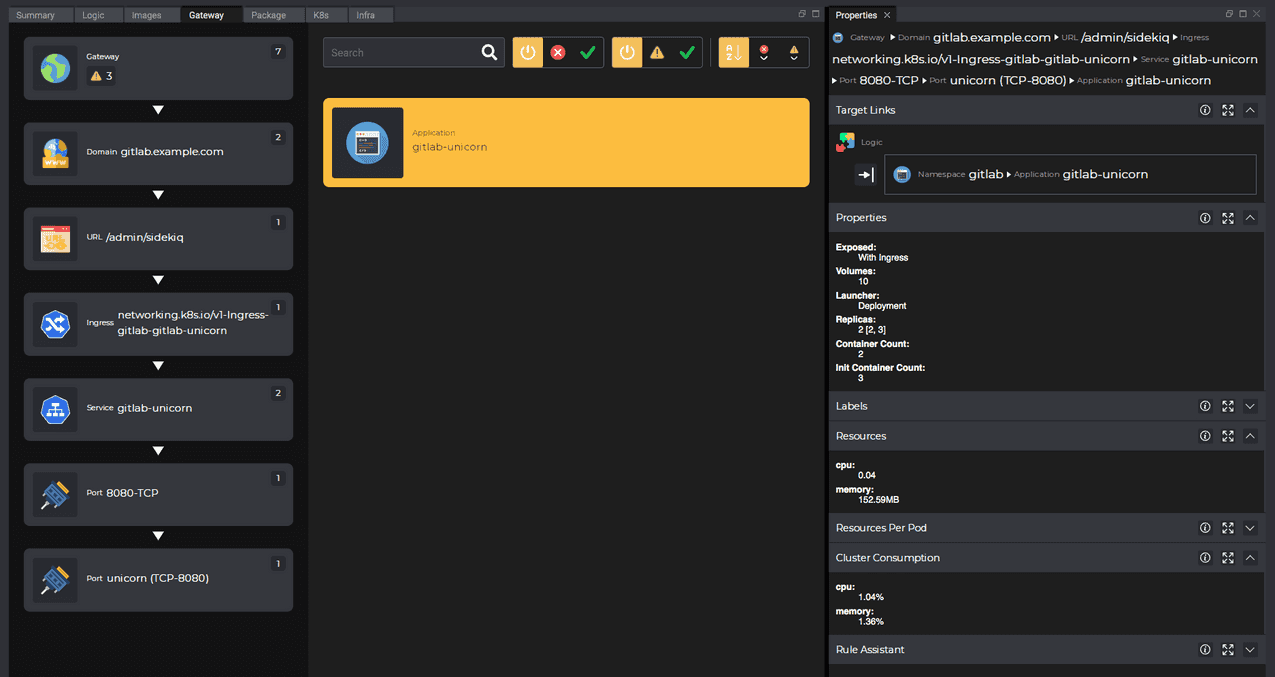

Kubevious Dashboard is a web app that delivers intuitive, app-centric insights, introspects Kubernetes manifests, and provides troubleshooting tools for cloud-native applications. It works out of the box, and it takes only a few minutes to get Kubevious up and running for existing production applications.

Learn more about introspecting Kubernetes apps and clusters here: https://github.com/kubevious/kubevious/blob/main/projects/DASHBOARD.md

Join the Kubevious Slack workspace to chat with Kubevious developers and users. This is a good place to learn about Kubevious, ask questions, and share your experiences.

We invite your participation through issues and pull requests! Before contributing, please read the contributing guidelines.

The Kubevious project was created by the contributors listed in AUTHORS. A governance policy has yet to be defined.

Kubevious maintains a public roadmap, which outlines our priorities and the capabilities we plan to add to Kubevious.

Kubevious is an open-source project licensed under the Apache License, Version 2.0.

Articles, talks, and videos about Kubevious:

- Five tools to make your K8s experience more enjoyable by Juraj Karadža

- YAKD: Yet Another Kubernetes Dashboard by KumoMind

- A Tour of Kubernetes Dashboards by Kostis Kapelonis @ Codefresh

- Kubevious - Kubernetes GUI that's not so Obvious | DevOps by Bribe By Bytes

- A Walk Through the Kubernetes UI Landscape at 6:47 by Henning Jacobs & Joaquim Rocha @ KubeCon North America 2020

- Tool of the Day: more than a dashboard, kubevious gives you a labeled, relational view of everything running in your Kubernetes cluster by Adrian Goins @ Coffee and Cloud Native

- Kubevious: Kubernetes Dashboard That Isn't A Waste Of Time by Viktor Farcic @ The DevOps Toolkit Series

- Kubevious – a Revolutionary Kubernetes Dashboard by Kostis Kapelonis @ Codefresh

- TGI Kubernetes 113: Kubernetes Secrets Take 3 at 17:54 by Joshua Rosso @ VMware

- Let us take a dig into Kubevious by Saiyam Pathak @ Civo Cloud

- Overview of Graphical Interfaces for Kubernetes (Russian: Обзор графических интерфейсов для Kubernetes) by Oleg Voznesensky @ Progress4GL

- Useful Interactive Terminal and Graphical UI Tools for Kubernetes by William Lam @ VMware

If you would like an article about your experience with Kubevious listed here, please submit a PR.