Since the website is down, I tried running this on my laptop, but I get this error every time:

OOM when allocating tensor with shape[1,256,518,902]

I am also using the BFC allocator, but no luck.

GPU: NVIDIA GeForce GTX 960M (4 GB)
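For reference, here is a rough size check of the tensor named in the error. It is only a sketch and assumes 4 bytes per element (float32), which is my assumption rather than something the error message states; the helper name `tensor_bytes` is made up for illustration. Under that assumption the result lines up with the 456.29MiB allocation that fails in the log below.

```python
from functools import reduce
from operator import mul


def tensor_bytes(shape, bytes_per_element=4):
    """Size in bytes of a dense tensor with the given shape.

    bytes_per_element=4 assumes float32 (an assumption, not confirmed).
    """
    return reduce(mul, shape, 1) * bytes_per_element


# Shape copied from the OOM message above.
shape = (1, 256, 518, 902)
size_mib = tensor_bytes(shape) / 2**20
print(f"{size_mib:.2f} MiB")  # prints "456.29 MiB"
```

So this single tensor alone needs a bit under half a GiB, on a card that reports 3.35GiB free at startup.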

Is there any way to optimize it and make it run on my laptop?
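One thing I could try is shrinking the input image before colorizing. The sketch below estimates how far a `[1, channels, height, width]` tensor would need to be scaled down to fit a given memory budget. It assumes float32 elements and that the tensor's height and width scale with the input image, neither of which I have confirmed for this model; `max_scale` and the 256 MiB budget are hypothetical values chosen for illustration.

```python
import math


def max_scale(height, width, channels, budget_bytes, bytes_per_element=4):
    """Largest uniform scale factor (<= 1.0) at which one
    [1, channels, height, width] tensor fits within budget_bytes."""
    full = channels * height * width * bytes_per_element
    if full <= budget_bytes:
        return 1.0
    # Memory grows with height * width, so scale each side by the square root.
    return math.sqrt(budget_bytes / full)


# Hypothetical budget: 256 MiB for the largest single tensor.
budget = 256 * 2**20
scale = max_scale(518, 902, 256, budget_bytes=budget)

# Round down so the scaled tensor stays within the budget.
new_h, new_w = int(518 * scale), int(902 * scale)
print(f"scale={scale:.3f}, size={new_h}x{new_w}")
```

This only bounds one tensor; the network holds many at once, so the real limit is probably tighter.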

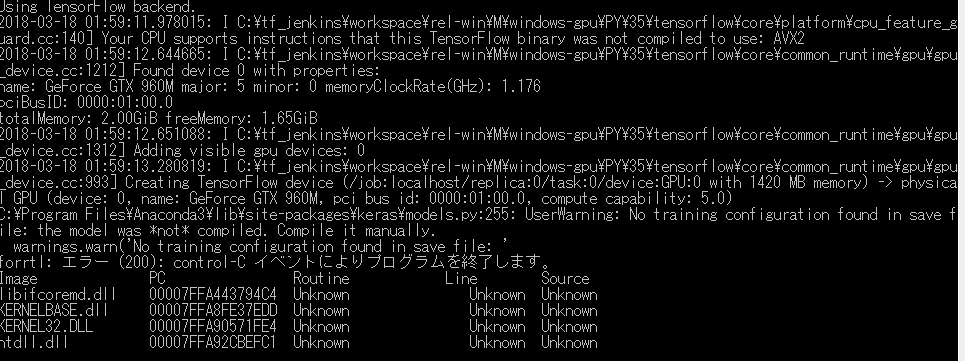

Here is the complete output:

C:\ProgramData\Anaconda3\lib\site-packages\h5py\__init__.py:34: FutureWarning: Conversion of the second argument of issubdtype from float to np.floating is deprecated. In future, it will be treated as np.float64 == np.dtype(float).type.

from ._conv import register_converters as _register_converters

Using TensorFlow backend.

2018-03-09 23:55:55.434317: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\platform\cpu_feature_guard.cc:137] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX AVX2

2018-03-09 23:55:56.353643: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\gpu\gpu_device.cc:1030] Found device 0 with properties:

name: GeForce GTX 960M major: 5 minor: 0 memoryClockRate(GHz): 1.176

pciBusID: 0000:02:00.0

totalMemory: 4.00GiB freeMemory: 3.35GiB

2018-03-09 23:55:56.362316: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\gpu\gpu_device.cc:1120] Creating TensorFlow device (/device:GPU:0) -> (device: 0, name: GeForce GTX 960M, pci bus id: 0000:02:00.0, compute capability: 5.0)

C:\ProgramData\Anaconda3\lib\site-packages\keras\models.py:255: UserWarning: No training configuration found in save file: the model was not compiled. Compile it manually.

warnings.warn('No training configuration found in save file: '

Bottle v0.12.13 server starting up (using WSGIRefServer())...

Listening on http://0.0.0.0:8000/

Hit Ctrl-C to quit.

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET / HTTP/1.1" 200 1926

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /style-mobile.css HTTP/1.1" 200 2574

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /src/settings.js HTTP/1.1" 200 2376

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /main.js HTTP/1.1" 200 7358

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /splash.png HTTP/1.1" 200 16188

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /src/project.js HTTP/1.1" 200 15412

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /res/import/01/01a59e074.json HTTP/1.1" 200 56857

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /res/raw-assets/texture/w.png HTTP/1.1" 200 131

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /res/raw-assets/texture/circle.png HTTP/1.1" 200 1695

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /res/raw-assets/texture/pencil.png HTTP/1.1" 200 2347

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /res/raw-assets/texture/eraser.png HTTP/1.1" 200 1628

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /res/raw-assets/texture/clear.png HTTP/1.1" 200 1065

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /res/raw-internal/image/default_radio_button_off.png HTTP/1.1" 200 631

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /res/raw-internal/image/default_radio_button_on.png HTTP/1.1" 200 847

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /res/raw-assets/texture/github.png HTTP/1.1" 200 2742

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /res/raw-internal/image/default_toggle_normal.png HTTP/1.1" 200 1174

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /res/raw-internal/image/default_toggle_checkmark.png HTTP/1.1" 200 493

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /res/raw-assets/texture/sketch.png HTTP/1.1" 200 91

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /res/raw-assets/texture/hint.png HTTP/1.1" 200 91

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /res/raw-assets/texture/result.png HTTP/1.1" 200 91

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /res/raw-assets/texture/loading.png HTTP/1.1" 200 2552

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /res/raw-assets/texture/right-arrow.png HTTP/1.1" 200 2065

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /res/raw-assets/icons/001.png HTTP/1.1" 200 49474

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /res/raw-assets/icons/002.png HTTP/1.1" 200 47019

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /res/raw-assets/icons/003.png HTTP/1.1" 200 50878

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /res/raw-assets/icons/004.png HTTP/1.1" 200 31390

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /res/raw-assets/icons/005.png HTTP/1.1" 200 102248

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /res/raw-assets/icons/006.png HTTP/1.1" 200 125063

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /res/raw-assets/icons/007.png HTTP/1.1" 200 123590

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /res/raw-assets/icons/008.png HTTP/1.1" 200 124480

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /res/raw-assets/icons/009.png HTTP/1.1" 200 132188

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /res/raw-assets/icons/010.png HTTP/1.1" 200 58897

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /res/raw-assets/icons/011.png HTTP/1.1" 200 73685

127.0.0.1 - - [09/Mar/2018 23:57:57] "GET /res/raw-assets/icons/012.png HTTP/1.1" 200 69262

received

sketchID: new

referenceID: no

sketchDenoise: true

resultDenoise: true

algrithom: quality

method: colorize

2018-03-09 23:58:43.186862: W C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:217] Allocator (GPU_0_bfc) ran out of memory trying to allocate 1.31GiB. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory is available.

2018-03-09 23:58:44.312520: W C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:217] Allocator (GPU_0_bfc) ran out of memory trying to allocate 1.07GiB. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory is available.

2018-03-09 23:58:44.340284: W C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:217] Allocator (GPU_0_bfc) ran out of memory trying to allocate 1.04GiB. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory is available.

2018-03-09 23:58:44.371173: W C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:217] Allocator (GPU_0_bfc) ran out of memory trying to allocate 1.03GiB. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory is available.

2018-03-09 23:58:44.411097: W C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:217] Allocator (GPU_0_bfc) ran out of memory trying to allocate 1.04GiB. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory is available.

2018-03-09 23:58:44.609860: W C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:217] Allocator (GPU_0_bfc) ran out of memory trying to allocate 1.02GiB. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory is available.

process: 3.984015464782715

2018-03-09 23:58:45.710617: W C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:217] Allocator (GPU_0_bfc) ran out of memory trying to allocate 1.53GiB. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory is available.

2018-03-09 23:58:47.760293: W C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:217] Allocator (GPU_0_bfc) ran out of memory trying to allocate 1.75GiB. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory is available.

2018-03-09 23:58:48.047717: W C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:217] Allocator (GPU_0_bfc) ran out of memory trying to allocate 2.63GiB. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory is available.

2018-03-09 23:58:48.317449: W C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:217] Allocator (GPU_0_bfc) ran out of memory trying to allocate 2.68GiB. The caller indicates that this is not a failure, but may mean that there could be performance gains if more memory is available.

paint: 4.139033794403076

2018-03-09 23:58:59.490450: W C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:273] Allocator (GPU_0_bfc) ran out of memory trying to allocate 456.29MiB. Current allocation summary follows.

2018-03-09 23:58:59.497622: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:627] Bin (256): Total Chunks: 869, Chunks in use: 869. 217.3KiB allocated for chunks. 217.3KiB in use in bin. 55.8KiB client-requested in use in bin.

2018-03-09 23:58:59.505725: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:627] Bin (512): Total Chunks: 316, Chunks in use: 316. 179.0KiB allocated for chunks. 179.0KiB in use in bin. 169.0KiB client-requested in use in bin.

2018-03-09 23:58:59.511378: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:627] Bin (1024): Total Chunks: 210, Chunks in use: 210. 231.5KiB allocated for chunks. 231.5KiB in use in bin. 224.0KiB client-requested in use in bin.

2018-03-09 23:58:59.520834: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:627] Bin (2048): Total Chunks: 154, Chunks in use: 154. 342.0KiB allocated for chunks. 342.0KiB in use in bin. 338.3KiB client-requested in use in bin.

2018-03-09 23:58:59.530064: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:627] Bin (4096): Total Chunks: 36, Chunks in use: 36. 178.5KiB allocated for chunks. 178.5KiB in use in bin. 176.1KiB client-requested in use in bin.

2018-03-09 23:58:59.539367: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:627] Bin (8192): Total Chunks: 3, Chunks in use: 3. 35.0KiB allocated for chunks. 35.0KiB in use in bin. 22.8KiB client-requested in use in bin.

2018-03-09 23:58:59.548509: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:627] Bin (16384): Total Chunks: 10, Chunks in use: 10. 220.0KiB allocated for chunks. 220.0KiB in use in bin. 204.8KiB client-requested in use in bin.

2018-03-09 23:58:59.557916: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:627] Bin (32768): Total Chunks: 7, Chunks in use: 7. 289.5KiB allocated for chunks. 289.5KiB in use in bin. 276.8KiB client-requested in use in bin.

2018-03-09 23:58:59.569923: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:627] Bin (65536): Total Chunks: 12, Chunks in use: 11. 939.0KiB allocated for chunks. 861.5KiB in use in bin. 720.0KiB client-requested in use in bin.

2018-03-09 23:58:59.578047: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:627] Bin (131072): Total Chunks: 30, Chunks in use: 29. 5.23MiB allocated for chunks. 5.02MiB in use in bin. 4.73MiB client-requested in use in bin.

2018-03-09 23:58:59.586689: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:627] Bin (262144): Total Chunks: 31, Chunks in use: 30. 10.29MiB allocated for chunks. 9.93MiB in use in bin. 9.10MiB client-requested in use in bin.

2018-03-09 23:58:59.594471: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:627] Bin (524288): Total Chunks: 44, Chunks in use: 43. 29.12MiB allocated for chunks. 28.28MiB in use in bin. 25.94MiB client-requested in use in bin.

2018-03-09 23:58:59.604122: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:627] Bin (1048576): Total Chunks: 47, Chunks in use: 45. 74.42MiB allocated for chunks. 71.39MiB in use in bin. 66.47MiB client-requested in use in bin.

2018-03-09 23:58:59.613319: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:627] Bin (2097152): Total Chunks: 58, Chunks in use: 57. 177.84MiB allocated for chunks. 175.42MiB in use in bin. 167.36MiB client-requested in use in bin.

2018-03-09 23:58:59.622843: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:627] Bin (4194304): Total Chunks: 18, Chunks in use: 18. 100.89MiB allocated for chunks. 100.89MiB in use in bin. 99.19MiB client-requested in use in bin.

2018-03-09 23:58:59.632966: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:627] Bin (8388608): Total Chunks: 32, Chunks in use: 29. 335.33MiB allocated for chunks. 297.21MiB in use in bin. 278.88MiB client-requested in use in bin.

2018-03-09 23:58:59.642260: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:627] Bin (16777216): Total Chunks: 4, Chunks in use: 3. 73.79MiB allocated for chunks. 55.28MiB in use in bin. 47.00MiB client-requested in use in bin.

2018-03-09 23:58:59.649994: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:627] Bin (33554432): Total Chunks: 9, Chunks in use: 9. 344.50MiB allocated for chunks. 344.50MiB in use in bin. 344.50MiB client-requested in use in bin.

2018-03-09 23:58:59.658693: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:627] Bin (67108864): Total Chunks: 2, Chunks in use: 0. 203.39MiB allocated for chunks. 0B in use in bin. 0B client-requested in use in bin.

2018-03-09 23:58:59.669691: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:627] Bin (134217728): Total Chunks: 3, Chunks in use: 1. 582.39MiB allocated for chunks. 228.14MiB in use in bin. 228.14MiB client-requested in use in bin.

2018-03-09 23:58:59.677440: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:627] Bin (268435456): Total Chunks: 2, Chunks in use: 2. 1.16GiB allocated for chunks. 1.16GiB in use in bin. 684.43MiB client-requested in use in bin.

2018-03-09 23:58:59.686447: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:643] Bin for 456.29MiB was 256.00MiB, Chunk State:

2018-03-09 23:58:59.692998: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501840000 of size 1280

2018-03-09 23:58:59.697217: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501840500 of size 256

2018-03-09 23:58:59.702969: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501840600 of size 256

2018-03-09 23:58:59.707814: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501840700 of size 256

2018-03-09 23:58:59.712697: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501840800 of size 256

2018-03-09 23:58:59.718130: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501840900 of size 256

2018-03-09 23:58:59.723223: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501840A00 of size 256

2018-03-09 23:58:59.729522: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501840B00 of size 256

2018-03-09 23:58:59.734023: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501840C00 of size 256

2018-03-09 23:58:59.738907: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501840D00 of size 256

2018-03-09 23:58:59.743119: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501840E00 of size 256

2018-03-09 23:58:59.747841: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501840F00 of size 256

2018-03-09 23:58:59.752272: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501841000 of size 256

2018-03-09 23:58:59.757151: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501841100 of size 256

2018-03-09 23:58:59.761413: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501841200 of size 256

2018-03-09 23:58:59.766590: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501841300 of size 512

2018-03-09 23:58:59.772312: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501841500 of size 512

2018-03-09 23:58:59.777223: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501841700 of size 256

2018-03-09 23:58:59.784024: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501841800 of size 256

2018-03-09 23:58:59.788095: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501841900 of size 256

2018-03-09 23:58:59.791299: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501841A00 of size 256

2018-03-09 23:58:59.795217: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501841B00 of size 1024

2018-03-09 23:58:59.800237: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501841F00 of size 1024

2018-03-09 23:58:59.804213: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501842300 of size 256

2018-03-09 23:58:59.809314: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501842400 of size 256

2018-03-09 23:58:59.813357: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501842500 of size 256

2018-03-09 23:58:59.818553: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501842600 of size 256

2018-03-09 23:58:59.822627: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501842700 of size 2048

2018-03-09 23:58:59.828159: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501842F00 of size 2048

2018-03-09 23:58:59.837465: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501843700 of size 256

2018-03-09 23:58:59.842713: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501843800 of size 256

2018-03-09 23:58:59.850432: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501843900 of size 256

2018-03-09 23:58:59.856447: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501843A00 of size 256

2018-03-09 23:58:59.861403: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501843B00 of size 256

2018-03-09 23:58:59.867902: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501843C00 of size 256

2018-03-09 23:58:59.872387: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501843D00 of size 256

2018-03-09 23:58:59.877825: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501843E00 of size 256

2018-03-09 23:58:59.883775: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501843F00 of size 256

2018-03-09 23:58:59.889394: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501844000 of size 256

2018-03-09 23:58:59.895696: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501844100 of size 256

2018-03-09 23:58:59.900265: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501844200 of size 256

2018-03-09 23:58:59.905055: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501844300 of size 256

2018-03-09 23:58:59.909824: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501844400 of size 256

2018-03-09 23:58:59.914815: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501844500 of size 256

2018-03-09 23:58:59.920315: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501844600 of size 256

2018-03-09 23:58:59.925172: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501844700 of size 256

2018-03-09 23:58:59.931405: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501844800 of size 256

2018-03-09 23:58:59.935898: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501844900 of size 256

2018-03-09 23:58:59.941071: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501844A00 of size 256

2018-03-09 23:58:59.945585: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501844B00 of size 256

2018-03-09 23:58:59.951049: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501844C00 of size 256

2018-03-09 23:58:59.955839: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501844D00 of size 256

2018-03-09 23:58:59.960666: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501844E00 of size 256

2018-03-09 23:58:59.964838: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501844F00 of size 256

2018-03-09 23:58:59.970012: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501845000 of size 256

2018-03-09 23:58:59.974132: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501845100 of size 256

2018-03-09 23:58:59.979345: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:661] Chunk at 0000000501845200 of size 512

[... many more similar lines omitted ...]

2018-03-09 23:59:10.673386: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 2 Chunks of size 12032 totalling 23.5KiB

2018-03-09 23:59:10.677302: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 2 Chunks of size 18432 totalling 36.0KiB

2018-03-09 23:59:10.682140: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 2 Chunks of size 18944 totalling 37.0KiB

2018-03-09 23:59:10.686014: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 5 Chunks of size 24576 totalling 120.0KiB

2018-03-09 23:59:10.692148: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 27648 totalling 27.0KiB

2018-03-09 23:59:10.699235: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 2 Chunks of size 36864 totalling 72.0KiB

2018-03-09 23:59:10.704364: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 2 Chunks of size 37632 totalling 73.5KiB

2018-03-09 23:59:10.708749: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 3 Chunks of size 49152 totalling 144.0KiB

2018-03-09 23:59:10.714688: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 4 Chunks of size 65536 totalling 256.0KiB

2018-03-09 23:59:10.719015: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 4 Chunks of size 73728 totalling 288.0KiB

2018-03-09 23:59:10.724131: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 92672 totalling 90.5KiB

2018-03-09 23:59:10.728415: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 108288 totalling 105.8KiB

2018-03-09 23:59:10.733435: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 124160 totalling 121.3KiB

2018-03-09 23:59:10.737712: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 5 Chunks of size 131072 totalling 640.0KiB

2018-03-09 23:59:10.742721: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 140544 totalling 137.3KiB

2018-03-09 23:59:10.747357: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 7 Chunks of size 147456 totalling 1008.0KiB

2018-03-09 23:59:10.752373: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 2 Chunks of size 163840 totalling 320.0KiB

2018-03-09 23:59:10.756592: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 202752 totalling 198.0KiB

2018-03-09 23:59:10.761599: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 214528 totalling 209.5KiB

2018-03-09 23:59:10.765902: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 11 Chunks of size 221184 totalling 2.32MiB

2018-03-09 23:59:10.770826: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 258048 totalling 252.0KiB

2018-03-09 23:59:10.774975: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 3 Chunks of size 262144 totalling 768.0KiB

2018-03-09 23:59:10.780785: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 12 Chunks of size 294912 totalling 3.38MiB

2018-03-09 23:59:10.785237: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 3 Chunks of size 331776 totalling 972.0KiB

2018-03-09 23:59:10.790770: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 5 Chunks of size 393216 totalling 1.88MiB

2018-03-09 23:59:10.796213: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 434176 totalling 424.0KiB

2018-03-09 23:59:10.801052: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 5 Chunks of size 442368 totalling 2.11MiB

2018-03-09 23:59:10.807833: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 483328 totalling 472.0KiB

2018-03-09 23:59:10.812837: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 10 Chunks of size 524288 totalling 5.00MiB

2018-03-09 23:59:10.816810: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 8 Chunks of size 589824 totalling 4.50MiB

2018-03-09 23:59:10.820827: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 3 Chunks of size 614400 totalling 1.76MiB

2018-03-09 23:59:10.824720: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 2 Chunks of size 655360 totalling 1.25MiB

2018-03-09 23:59:10.828423: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 704512 totalling 688.0KiB

2018-03-09 23:59:10.834018: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 3 Chunks of size 737280 totalling 2.11MiB

2018-03-09 23:59:10.839377: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 10 Chunks of size 786432 totalling 7.50MiB

2018-03-09 23:59:10.846921: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 884736 totalling 864.0KiB

2018-03-09 23:59:10.856114: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 921600 totalling 900.0KiB

2018-03-09 23:59:10.860966: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 3 Chunks of size 983040 totalling 2.81MiB

2018-03-09 23:59:10.873627: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 999424 totalling 976.0KiB

2018-03-09 23:59:10.884121: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 3 Chunks of size 1048576 totalling 3.00MiB

2018-03-09 23:59:10.896078: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 1105920 totalling 1.05MiB

2018-03-09 23:59:10.911837: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 3 Chunks of size 1179648 totalling 3.38MiB

2018-03-09 23:59:10.918942: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 1196032 totalling 1.14MiB

2018-03-09 23:59:10.924117: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 6 Chunks of size 1228800 totalling 7.03MiB

2018-03-09 23:59:10.928004: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 2 Chunks of size 1327104 totalling 2.53MiB

2018-03-09 23:59:10.940812: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 1441792 totalling 1.38MiB

2018-03-09 23:59:10.946805: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 1548288 totalling 1.48MiB

2018-03-09 23:59:10.955775: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 1695744 totalling 1.62MiB

2018-03-09 23:59:10.962037: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 1769472 totalling 1.69MiB

2018-03-09 23:59:10.976069: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 2 Chunks of size 1806336 totalling 3.45MiB

2018-03-09 23:59:10.986550: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 1880064 totalling 1.79MiB

2018-03-09 23:59:10.994738: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 21 Chunks of size 1990656 totalling 39.87MiB

2018-03-09 23:59:11.007173: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 2095616 totalling 2.00MiB

2018-03-09 23:59:11.013847: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 9 Chunks of size 2097152 totalling 18.00MiB

2018-03-09 23:59:11.025849: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 6 Chunks of size 2211840 totalling 12.66MiB

2018-03-09 23:59:11.037319: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 5 Chunks of size 2359296 totalling 11.25MiB

2018-03-09 23:59:11.045656: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 2 Chunks of size 2457600 totalling 4.69MiB

2018-03-09 23:59:11.057011: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 2654208 totalling 2.53MiB

2018-03-09 23:59:11.063466: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 3112960 totalling 2.97MiB

2018-03-09 23:59:11.068127: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 3244032 totalling 3.09MiB

2018-03-09 23:59:11.072244: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 3538944 totalling 3.38MiB

2018-03-09 23:59:11.077723: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 3 Chunks of size 3686400 totalling 10.55MiB

2018-03-09 23:59:11.083995: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 28 Chunks of size 3981312 totalling 106.31MiB

2018-03-09 23:59:11.090131: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 5 Chunks of size 4718592 totalling 22.50MiB

2018-03-09 23:59:11.094342: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 5029888 totalling 4.80MiB

2018-03-09 23:59:11.099689: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 11 Chunks of size 6291456 totalling 66.00MiB

2018-03-09 23:59:11.104311: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 7962624 totalling 7.59MiB

2018-03-09 23:59:11.109324: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 6 Chunks of size 8388608 totalling 48.00MiB

2018-03-09 23:59:11.114862: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 6 Chunks of size 9437184 totalling 54.00MiB

2018-03-09 23:59:11.120205: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 9693440 totalling 9.24MiB

2018-03-09 23:59:11.126771: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 11534336 totalling 11.00MiB

2018-03-09 23:59:11.131637: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 5 Chunks of size 11796480 totalling 56.25MiB

2018-03-09 23:59:11.137180: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 2 Chunks of size 11943936 totalling 22.78MiB

2018-03-09 23:59:11.143718: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 12515072 totalling 11.93MiB

2018-03-09 23:59:11.148261: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 7 Chunks of size 12582912 totalling 84.00MiB

2018-03-09 23:59:11.153382: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 2 Chunks of size 18350080 totalling 35.00MiB

2018-03-09 23:59:11.157861: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 21261056 totalling 20.28MiB

2018-03-09 23:59:11.163508: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 5 Chunks of size 33554432 totalling 160.00MiB

2018-03-09 23:59:11.168811: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 2 Chunks of size 37748736 totalling 72.00MiB

2018-03-09 23:59:11.173804: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 2 Chunks of size 58982400 totalling 112.50MiB

2018-03-09 23:59:11.181218: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 239224832 totalling 228.14MiB

2018-03-09 23:59:11.185733: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 424181760 totalling 404.53MiB

2018-03-09 23:59:11.191057: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:679] 1 Chunks of size 824147712 totalling 785.97MiB

2018-03-09 23:59:11.195922: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:683] Sum Total of in-use chunks: 2.45GiB

2018-03-09 23:59:11.201292: I C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:685] Stats:

Limit: 3282324684

InUse: 2630940672

MaxInUse: 2791483136

NumAllocs: 6116

MaxAllocSize: 1063372544

2018-03-09 23:59:11.215161: W C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\common_runtime\bfc_allocator.cc:277] *******************************************xxxx____***********************xxxxxxxxxx

2018-03-09 23:59:11.221729: W C:\tf_jenkins\home\workspace\rel-win\M\windows-gpu\PY\36\tensorflow\core\framework\op_kernel.cc:1192] Resource exhausted: OOM when allocating tensor with shape[1,256,518,902]

Traceback (most recent call last):

File "C:\ProgramData\Anaconda3\lib\site-packages\tensorflow\python\client\session.py", line 1323, in _do_call

return fn(*args)

File "C:\ProgramData\Anaconda3\lib\site-packages\tensorflow\python\client\session.py", line 1302, in _run_fn

status, run_metadata)

File "C:\ProgramData\Anaconda3\lib\site-packages\tensorflow\python\framework\errors_impl.py", line 473, in __exit__

c_api.TF_GetCode(self.status.status))

tensorflow.python.framework.errors_impl.ResourceExhaustedError: OOM when allocating tensor with shape[1,256,518,902]

[[Node: model_1_2/conv4/convolution = Conv2D[T=DT_FLOAT, data_format="NHWC", padding="SAME", strides=[1, 1, 1, 1], use_cudnn_on_gpu=true, _device="/job:localhost/replica:0/task:0/device:GPU:0"](model_1_2/leaky_re_lu_4/LeakyRelu/Maximum, conv4/kernel/read)]]

[[Node: mul_20/_6359 = _Recv[client_terminated=false, recv_device="/job:localhost/replica:0/task:0/device:CPU:0", send_device="/job:localhost/replica:0/task:0/device:GPU:0", send_device_incarnation=1, tensor_name="edge_124_mul_20", tensor_type=DT_FLOAT, _device="/job:localhost/replica:0/task:0/device:CPU:0"]()]]

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "C:\ProgramData\Anaconda3\lib\site-packages\bottle.py", line 862, in _handle

return route.call(**args)

File "C:\ProgramData\Anaconda3\lib\site-packages\bottle.py", line 1740, in wrapper

rv = callback(*a, **ka)

File "server.py", line 145, in do_paint

fin = go_tail(painting, noisy=(resultDenoise == 'true'))

File "C:\Users\Abdul Rahman\Desktop\style2paints-master\server\ai.py", line 140, in go_tail

ip3: x[None, :, :, :]

File "C:\ProgramData\Anaconda3\lib\site-packages\tensorflow\python\client\session.py", line 889, in run

run_metadata_ptr)

File "C:\ProgramData\Anaconda3\lib\site-packages\tensorflow\python\client\session.py", line 1120, in _run

feed_dict_tensor, options, run_metadata)

File "C:\ProgramData\Anaconda3\lib\site-packages\tensorflow\python\client\session.py", line 1317, in _do_run

options, run_metadata)

File "C:\ProgramData\Anaconda3\lib\site-packages\tensorflow\python\client\session.py", line 1336, in _do_call

raise type(e)(node_def, op, message)

tensorflow.python.framework.errors_impl.ResourceExhaustedError: OOM when allocating tensor with shape[1,256,518,902]

[[Node: model_1_2/conv4/convolution = Conv2D[T=DT_FLOAT, data_format="NHWC", padding="SAME", strides=[1, 1, 1, 1], use_cudnn_on_gpu=true, _device="/job:localhost/replica:0/task:0/device:GPU:0"](model_1_2/leaky_re_lu_4/LeakyRelu/Maximum, conv4/kernel/read)]]

[[Node: mul_20/_6359 = _Recv[client_terminated=false, recv_device="/job:localhost/replica:0/task:0/device:CPU:0", send_device="/job:localhost/replica:0/task:0/device:GPU:0", send_device_incarnation=1, tensor_name="edge_124_mul_20", tensor_type=DT_FLOAT, _device="/job:localhost/replica:0/task:0/device:CPU:0"]()]]

Caused by op 'model_1_2/conv4/convolution', defined at:

File "server.py", line 4, in <module>

from ai import *

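File "<frozen importlib._bootstrap>", line 971, in _find_and_load

File "<frozen importlib._bootstrap>", line 955, in _find_and_load_unlocked

File "<frozen importlib._bootstrap>", line 665, in _load_unlocked

File "<frozen importlib._bootstrap_external>", line 678, in exec_module

File "<frozen importlib._bootstrap>", line 219, in _call_with_frames_removed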

File "C:\Users\Abdul Rahman\Desktop\style2paints-master\server\ai.py", line 79, in <module>

noise_tail_op = noise_tail(tf.pad(ip3 / 255.0, [[0, 0], [3, 3], [3, 3], [0, 0]], 'REFLECT'))[:, 3:-3, 3:-3, :] * 255.0

File "C:\ProgramData\Anaconda3\lib\site-packages\keras\engine\topology.py", line 619, in __call__

output = self.call(inputs, **kwargs)

File "C:\ProgramData\Anaconda3\lib\site-packages\keras\engine\topology.py", line 2085, in call

output_tensors, _, _ = self.run_internal_graph(inputs, masks)

File "C:\ProgramData\Anaconda3\lib\site-packages\keras\engine\topology.py", line 2236, in run_internal_graph

output_tensors = _to_list(layer.call(computed_tensor, **kwargs))

File "C:\ProgramData\Anaconda3\lib\site-packages\keras\layers\convolutional.py", line 168, in call

dilation_rate=self.dilation_rate)

File "C:\ProgramData\Anaconda3\lib\site-packages\keras\backend\tensorflow_backend.py", line 3335, in conv2d

data_format=tf_data_format)

File "C:\ProgramData\Anaconda3\lib\site-packages\tensorflow\python\ops\nn_ops.py", line 751, in convolution

return op(input, filter)

File "C:\ProgramData\Anaconda3\lib\site-packages\tensorflow\python\ops\nn_ops.py", line 835, in __call__

return self.conv_op(inp, filter)

File "C:\ProgramData\Anaconda3\lib\site-packages\tensorflow\python\ops\nn_ops.py", line 499, in __call__

return self.call(inp, filter)

File "C:\ProgramData\Anaconda3\lib\site-packages\tensorflow\python\ops\nn_ops.py", line 187, in __call__

name=self.name)

File "C:\ProgramData\Anaconda3\lib\site-packages\tensorflow\python\ops\gen_nn_ops.py", line 630, in conv2d

data_format=data_format, name=name)

File "C:\ProgramData\Anaconda3\lib\site-packages\tensorflow\python\framework\op_def_library.py", line 787, in _apply_op_helper

op_def=op_def)

File "C:\ProgramData\Anaconda3\lib\site-packages\tensorflow\python\framework\ops.py", line 2956, in create_op

op_def=op_def)

File "C:\ProgramData\Anaconda3\lib\site-packages\tensorflow\python\framework\ops.py", line 1470, in __init__

self._traceback = self._graph._extract_stack() # pylint: disable=protected-access

ResourceExhaustedError (see above for traceback): OOM when allocating tensor with shape[1,256,518,902]

[[Node: model_1_2/conv4/convolution = Conv2D[T=DT_FLOAT, data_format="NHWC", padding="SAME", strides=[1, 1, 1, 1], use_cudnn_on_gpu=true, _device="/job:localhost/replica:0/task:0/device:GPU:0"](model_1_2/leaky_re_lu_4/LeakyRelu/Maximum, conv4/kernel/read)]]

[[Node: mul_20/_6359 = _Recv[client_terminated=false, recv_device="/job:localhost/replica:0/task:0/device:CPU:0", send_device="/job:localhost/replica:0/task:0/device:GPU:0", send_device_incarnation=1, tensor_name="edge_124_mul_20", tensor_type=DT_FLOAT, _device="/job:localhost/replica:0/task:0/device:CPU:0"]()]]

127.0.0.1 - - [09/Mar/2018 23:59:11] "POST /paint HTTP/1.1" 500 746
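
For reference, here is a rough sketch of the arithmetic behind the failure, using only numbers from the log above: the tensor shape from the OOM message, and the Limit and InUse values from the allocator stats. It assumes 4 bytes per element, since the node is reported as DT_FLOAT. The helper name `tensor_bytes` is made up for this illustration and is not part of TensorFlow or style2paints.

```python
from functools import reduce
from operator import mul

MIB = 1024 ** 2


def tensor_bytes(shape, bytes_per_element=4):
    """Size of a dense tensor in bytes (4 bytes per element assumed for DT_FLOAT)."""
    return reduce(mul, shape, 1) * bytes_per_element


# Shape taken from the "OOM when allocating tensor" message.
needed = tensor_bytes((1, 256, 518, 902))

# Values copied from the bfc_allocator "Stats:" block.
limit = 3282324684
in_use = 2630940672
free_total = limit - in_use

print(f"tensor needs   : {needed / MIB:.1f} MiB")
print(f"allocator free : {free_total / MIB:.1f} MiB")
```

This works out to roughly 456 MiB requested against roughly 621 MiB free in total. So the request is smaller than the total free space, which suggests (though the log alone does not prove it) that no single contiguous free chunk was large enough, or that the convolution needed extra workspace on top of its output. Since the tensor size scales with height times width, a smaller input image should shrink it proportionally.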