fastText4j was originally a submodule of Mynlp and is now an independent open-source project. (Mynlp is a high-performance, modular, extensible Chinese NLP toolkit.)

fastText4j implements Facebook's fastText in Java. fastText is a library for text representation and classification from facebookresearch; it supports text classification and learning word embeddings.

Features:

- Implemented in Java (Kotlin)

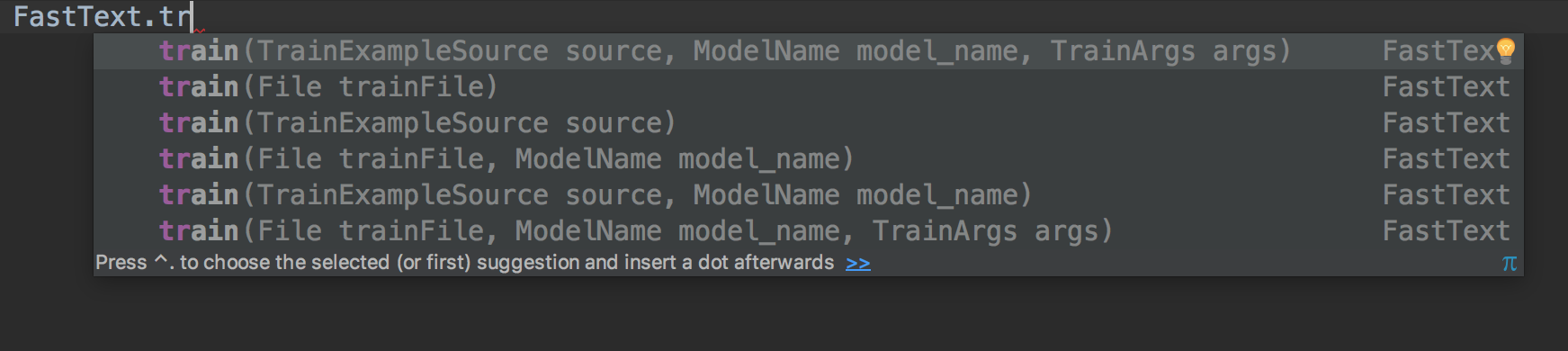

- Well-designed API

- Compatible with model files from the original C++ implementation (including quantized, compressed models)

- Provides a training API (with almost the same performance)

- Supports a Java model file format that can be read via mmap, so large model files can be loaded with less memory
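To illustrate the mmap point in the last feature, here is a minimal sketch using only the JDK's memory-mapping API. It is not fastText4j's loader: the class name `MmapFloatReader` and the file layout (a flat array of big-endian floats) are assumptions made for this example. It shows that a mapped file can be read on demand instead of being copied onto the Java heap first.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.FloatBuffer;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

// Hypothetical helper for illustration; not part of fastText4j.
final class MmapFloatReader {

    // Writes the floats to a file as raw 4-byte big-endian values.
    static void writeFloats(Path path, float[] values) throws IOException {
        ByteBuffer buf = ByteBuffer.allocate(values.length * Float.BYTES);
        buf.asFloatBuffer().put(values);
        Files.write(path, buf.array());
    }

    // Maps the file read-only and returns the float stored at the given index.
    static float readFloat(Path path, int index) throws IOException {
        try (FileChannel ch = FileChannel.open(path, StandardOpenOption.READ)) {
            MappedByteBuffer mapped = ch.map(FileChannel.MapMode.READ_ONLY, 0, ch.size());
            FloatBuffer floats = mapped.asFloatBuffer();
            return floats.get(index); // only the touched pages are loaded
        }
    }

    public static void main(String[] args) throws IOException {
        Path tmp = Files.createTempFile("vectors", ".bin");
        tmp.toFile().deleteOnExit();
        writeFloats(tmp, new float[] {0.5f, 1.5f, 2.5f});
        System.out.println(readFloat(tmp, 2)); // prints 2.5
    }
}
```

With a mapping, the operating system pages data in as it is accessed, which is why a large model does not need an equally large heap.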

compile 'com.mayabot:fastText4j:1.2.2'

<dependency>

<groupId>com.mayabot</groupId>

<artifactId>fastText4j</artifactId>

<version>1.2.2</version>

</dependency>

- ModelName.sup supervised

- ModelName.sg skipgram

- ModelName.cbow cbow

//Word representation learning

FastText fastText = FastText.train(new File("train.data"), ModelName.sg);

// Text classification

FastText fastText = FastText.train(new File("train.data"), ModelName.sup);

The training data file is UTF-8 encoded, with one sample per line, and the text must be word-segmented beforehand. For classification, each line contains a string starting with __label__ that identifies the target class, such as __label__正面. A sample can have multiple labels. The label prefix can be customized through the 'label' attribute of TrainArgs.
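As a sketch of the line format described above, the following splits one pre-tokenized sample into its labels and its words. The class `LabeledLine` is hypothetical and is not part of fastText4j; it only assumes the default `__label__` prefix and whitespace-separated tokens.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper for illustration; not part of fastText4j.
final class LabeledLine {
    static final String LABEL_PREFIX = "__label__";

    final List<String> labels = new ArrayList<>();
    final List<String> tokens = new ArrayList<>();

    // Splits a pre-tokenized line on whitespace and separates labels from words.
    static LabeledLine parse(String line) {
        LabeledLine result = new LabeledLine();
        for (String part : line.trim().split("\\s+")) {
            if (part.isEmpty()) {
                continue; // blank input line
            }
            if (part.startsWith(LABEL_PREFIX)) {
                result.labels.add(part.substring(LABEL_PREFIX.length()));
            } else {
                result.tokens.add(part);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        LabeledLine sample = parse("__label__正面 __label__推荐 这 部 电影 很 好看");
        System.out.println(sample.labels); // [正面, 推荐]
        System.out.println(sample.tokens); // [这, 部, 电影, 很, 好看]
    }
}
```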

// Save the model in the Java format

fastText.saveModel("path/data.model");

public FastText loadModel(String modelPath, boolean mmap)

//load from java format

FastText fastText = FastText.loadModel("path/data.model",true);

//load from c++ format

FastText fastText = FastText.loadFasttextBinModel("path/wiki.bin");

FastText quantize(FastText fastText, int dsub=2, boolean qnorm=false)

// Quantize a loaded model

FastText quantizerFastText = FastText.quantize(fastText,2,false);

// Predict the labels of a tokenized text

List<FloatStringPair> predict = fastText.predict(Arrays.asList("fastText在预测标签时使用了非线性激活函数".split(" ")), 5);

List<FloatStringPair> predict = fastText.nearestNeighbor("**",5);

Given three words A, B, and C, analogies returns the words that are semantically nearest to the vector A - B + C, together with their similarity scores.
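To make the A - B + C idea concrete, here is a toy sketch that does not use fastText4j at all. The class `VectorMath`, the three-dimensional vectors, and the choice of cosine similarity as the score are assumptions for illustration; the library's internals may differ.

```java
// Hypothetical helper for illustration; not part of fastText4j.
final class VectorMath {

    // Cosine similarity between two vectors of equal length.
    static double cosine(double[] a, double[] b) {
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        return dot / Math.sqrt(normA * normB);
    }

    // Builds the query vector A - B + C used for an analogy lookup.
    static double[] analogyQuery(double[] a, double[] b, double[] c) {
        double[] q = new double[a.length];
        for (int i = 0; i < q.length; i++) {
            q[i] = a[i] - b[i] + c[i];
        }
        return q;
    }

    // Returns the index of the candidate with the highest cosine similarity.
    static int nearest(double[] query, double[][] candidates) {
        int best = -1;
        double bestSim = Double.NEGATIVE_INFINITY;
        for (int i = 0; i < candidates.length; i++) {
            double sim = cosine(query, candidates[i]);
            if (sim > bestSim) {
                bestSim = sim;
                best = i;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        // Toy dimensions: (royalty, male, female).
        double[] king = {1, 1, 0}, man = {0, 1, 0}, woman = {0, 0, 1}, queen = {1, 0, 1};
        double[] query = analogyQuery(king, man, woman); // king - man + woman
        int idx = nearest(query, new double[][] {man, queen, woman});
        System.out.println(idx); // prints 1, the index of "queen"
    }
}
```

A nearest-neighbor query works the same way, with the word's own vector used as the query.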

List<FloatStringPair> predict = fastText.analogies("国王","皇后","男",5);

Test on the AG News dataset, training and predicting with fastText4j.

Result:

Read 5M words

Number of words: 95812

Number of labels: 4

Progress: 100.00% words/sec/thread: 5792774 lr: 0.00000 loss: 0.28018 ETA: 0h 0m 0s

Train use time 5275 ms

total=7600

right=6889

rate 0.9064473684210527
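The reported rate is the fraction of correct predictions, right / total. A minimal check of that arithmetic, with a hypothetical class name:

```java
import java.util.Locale;

// Hypothetical helper for illustration; not part of fastText4j.
final class AccuracyCheck {

    // Fraction of correctly classified samples.
    static double rate(int right, int total) {
        if (total <= 0) {
            throw new IllegalArgumentException("total must be positive");
        }
        return (double) right / total;
    }

    public static void main(String[] args) {
        // Values taken from the run above: right=6889, total=7600.
        System.out.println(String.format(Locale.ROOT, "rate %.4f", rate(6889, 7600))); // prints "rate 0.9064"
    }
}
```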

The parameters are consistent with the C++ version:

The following arguments for the dictionary are optional:

-minCount minimal number of word occurrences [1]

-minCountLabel minimal number of label occurrences [0]

-wordNgrams max length of word ngram [1]

-bucket number of buckets [2000000]

-minn min length of char ngram [0]

-maxn max length of char ngram [0]

-t sampling threshold [0.0001]

-label labels prefix [__label__]

The following arguments for training are optional:

-lr learning rate [0.1]

-lrUpdateRate change the rate of updates for the learning rate [100]

-dim size of word vectors [100]

-ws size of the context window [5]

-epoch number of epochs [5]

-neg number of negatives sampled [5]

-loss loss function {ns, hs, softmax} [softmax]

-thread number of threads [12]

-pretrainedVectors pretrained word vectors for supervised learning []

-saveOutput whether output params should be saved [0]

The following arguments for quantization are optional:

-cutoff number of words and ngrams to retain [0]

-retrain finetune embeddings if a cutoff is applied [0]

-qnorm quantizing the norm separately [0]

-qout quantizing the classifier [0]

-dsub size of each sub-vector [2]
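As an illustration of what -minn and -maxn control, the sketch below extracts character n-grams from a word wrapped in the boundary markers `<` and `>`, following the scheme described in [1]. The class `CharNgrams` is hypothetical and simplified; it is not the code used by fastText4j or the C++ version, and it omits the hashing of n-grams into -bucket slots.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper for illustration; not part of fastText4j.
final class CharNgrams {

    // Returns all character n-grams of length minn..maxn of "<word>".
    static List<String> extract(String word, int minn, int maxn) {
        List<String> out = new ArrayList<>();
        if (minn <= 0 || maxn < minn) {
            return out; // subword n-grams disabled, as with the [0] defaults above
        }
        int[] cps = ("<" + word + ">").codePoints().toArray();
        for (int start = 0; start < cps.length; start++) {
            for (int n = minn; n <= maxn && start + n <= cps.length; n++) {
                // Skip a lone boundary marker.
                if (n == 1 && (start == 0 || start == cps.length - 1)) {
                    continue;
                }
                out.add(new String(cps, start, n));
            }
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(extract("where", 3, 3)); // prints [<wh, whe, her, ere, re>]
    }
}
```

Working on code points rather than `char` values keeps characters outside the Basic Multilingual Plane intact.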

Recent state-of-the-art English word vectors.

Word vectors for 157 languages trained on Wikipedia and Crawl.

Models for language identification and various supervised tasks.

Please cite [1] if using this code for learning word representations or [2] if using it for text classification.

[1] P. Bojanowski*, E. Grave*, A. Joulin, T. Mikolov, Enriching Word Vectors with Subword Information

@article{bojanowski2017enriching,

title={Enriching Word Vectors with Subword Information},

author={Bojanowski, Piotr and Grave, Edouard and Joulin, Armand and Mikolov, Tomas},

journal={Transactions of the Association for Computational Linguistics},

volume={5},

year={2017},

issn={2307-387X},

pages={135--146}

}

[2] A. Joulin, E. Grave, P. Bojanowski, T. Mikolov, Bag of Tricks for Efficient Text Classification

@InProceedings{joulin2017bag,

title={Bag of Tricks for Efficient Text Classification},

author={Joulin, Armand and Grave, Edouard and Bojanowski, Piotr and Mikolov, Tomas},

booktitle={Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics: Volume 2, Short Papers},

month={April},

year={2017},

publisher={Association for Computational Linguistics},

pages={427--431},

}

[3] A. Joulin, E. Grave, P. Bojanowski, M. Douze, H. Jégou, T. Mikolov, FastText.zip: Compressing text classification models

@article{joulin2016fasttext,

title={FastText.zip: Compressing text classification models},

author={Joulin, Armand and Grave, Edouard and Bojanowski, Piotr and Douze, Matthijs and J{\'e}gou, H{\'e}rve and Mikolov, Tomas},

journal={arXiv preprint arXiv:1612.03651},

year={2016}

}

(* These authors contributed equally.)

fastText is BSD-licensed. Facebook provides an additional patent grant.