common interview system design questions:

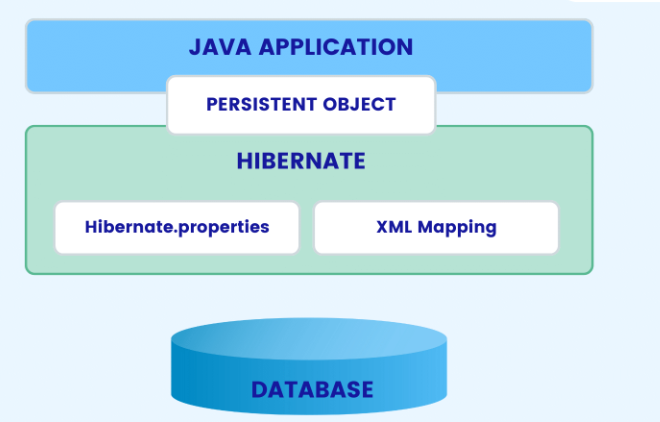

What is the architecture of the system?

The architecture of the system is typically designed to balance performance, scalability, and security requirements. It may include multiple layers of caching, load balancing, and failover mechanisms to ensure that requests are distributed evenly across multiple servers and that data is stored and retrieved efficiently.

How are requests handled?

Requests are typically handled by the system's request handler, which listens for incoming requests and dispatches them to the appropriate server or service. The request handler may also handle load balancing and failover mechanisms to ensure that requests are distributed evenly across multiple servers.

How are requests distributed across multiple servers?

Requests are typically distributed across multiple servers using a load balancing mechanism, which distributes incoming requests evenly across all available servers. The load balancer may also monitor the health of each server and route requests to healthy servers only.

How are requests stored and retrieved?

Requests are typically stored and retrieved from a database or cache using a key-value store or a cache provider. The key-value store or cache provider may use a distributed cache to store and retrieve data efficiently across multiple servers.

How is data stored and retrieved?

Data is typically stored and retrieved from a database or cache using a key-value store or a cache provider. The key-value store or cache provider may use a distributed cache to store and retrieve data efficiently across multiple servers.

How is security implemented?

Security is typically implemented using a combination of authentication, authorization, and encryption mechanisms. The system may use SSL/TLS to encrypt data in transit, and may also use encryption at rest to protect sensitive data. The system may also use rate limiting and other security mechanisms to prevent attacks and ensure that the system is secure.

How is load balancing implemented?

Load balancing is typically implemented using a load balancer or a reverse proxy. The load balancer or reverse proxy may distribute incoming requests evenly across all available servers, and may also monitor the health of each server and route requests to healthy servers only.

How is fault tolerance implemented?

Fault tolerance is typically implemented using redundancy and failover mechanisms. The system may use multiple servers to ensure that requests are distributed evenly across all available servers, and may also use failover mechanisms to switch to a backup server if a server fails.

How is scalability implemented?

Scalability is typically implemented using a combination of horizontal and vertical scaling techniques. The system may use a scaling strategy that adds or removes servers based on demand, or it may use a scaling strategy that adjusts the resources allocated to each server based on demand.

How is performance optimized?

Performance optimization is typically implemented using a combination of caching, compression, and other techniques. The system may use caching to store frequently accessed data in memory, and may also use compression to reduce the size of data that is transmitted over the network.

How is monitoring and logging implemented?

Monitoring and logging are typically implemented using a monitoring system and logging framework. The monitoring system may monitor the health of each server, the performance of each server, and the overall performance of the system, and may generate alerts or notifications if any of these metrics exceed a certain threshold. The logging framework may log all requests and responses, as well as other relevant information, to help diagnose and troubleshoot issues.

How is documentation and support provided?

Documentation and support are typically provided through a user manual, a help desk, and a knowledge base. The user manual may provide detailed instructions on how to use the system, including how to navigate the user interface, how to perform common tasks, and how to report issues. The help desk may provide support for users who encounter issues with the system, and may also provide training on how to use the system effectively. The knowledge base may provide detailed information on how the system is designed, how it works, and how to troubleshoot issues.

How is the system designed for scalability and performance?

The system is designed for scalability and performance by using a combination of caching, load balancing, and other techniques. The system may use a caching provider to store frequently accessed data in memory, and may also use a load balancer to distribute incoming requests evenly across all available servers. The system may also use a scaling strategy that adds or removes servers based on demand, or it may use a scaling strategy that adjusts the resources allocated to each server based on demand.

How is the system designed for security and reliability?

The system is designed for security and reliability by using a combination of authentication, authorization, and encryption mechanisms. The system may use SSL/TLS to encrypt data in transit, and may also use encryption at rest to protect sensitive data. The system may also use rate limiting and other security mechanisms to prevent attacks and ensure that the system is secure. The system may also use redundancy and failover mechanisms to ensure that the system is resilient to failures.

How is the system designed for maintainability and evolution?

The system is designed for maintainability and evolution by using a modular architecture that allows for easy customization and extension. The system may use a microservices architecture to break the system into smaller, independent services that can be developed, tested, and deployed independently. The system may also use a version control system to track changes to the code and documentation, and may use automated testing

Frugal Streaming:

Frugal Streaming is a technique used to approximate the results of a query over a data stream, while using minimal memory. It is often used in scenarios where the data stream is too large to fit into memory, but approximate results are acceptable. One example of Frugal Streaming is the Count-Min Sketch algorithm, which uses a fixed-size array of counters to estimate the frequency of items in a data stream. Each item is hashed to a set of counters, and the minimum count in that set is incremented. The estimate for the frequency of an item is the minimum count across all sets.

Geohash / S2 Geometry:

Geohash and S2 Geometry are two related techniques used to represent and index geographic locations on a two-dimensional surface, such as a map. Geohash is a hierarchical spatial data structure that uses a string of characters to represent a location. Each character in the string represents a subdivision of the space, with longer strings representing smaller subdivisions. S2 Geometry is a library for manipulating geometric shapes on the surface of a sphere, such as the Earth. It uses a hierarchical grid system to partition the surface of the sphere into cells of varying sizes. Both Geohash and S2 Geometry are useful for indexing and querying large datasets of geographic locations.

Leaky bucket / Token bucket:

Leaky bucket and Token bucket are two algorithms used for traffic shaping and rate limiting in computer networks. The Leaky bucket algorithm regulates the rate at which data is transmitted by imposing a constant rate of data removal from a buffer. Any data that arrives in excess of the rate is discarded. The Token bucket algorithm regulates the rate at which data is transmitted by issuing tokens at a fixed rate. Each token allows a fixed amount of data to be transmitted. If there are no tokens available, data transmission is blocked.

Loosy Counting:

Loosy Counting is a technique used to estimate the frequency of items in a data stream, while using minimal memory. It is similar to Frugal Streaming, but allows for a small amount of error in the estimates. One example of Loosy Counting is the HyperLogLog algorithm, which uses a fixed-size array of registers to estimate the number of distinct items in a data stream. Each item is hashed to a register, and the maximum number of leading zeros in the binary representation of the register values is used to estimate the number of distinct items.

Operational transformation:

Operational Transformation is a technique used to synchronize the state of a shared document or data structure across multiple clients in a distributed system. It is often used in collaborative editing applications, such as Google Docs. Operational Transformation works by transforming the operations performed by each client into a common form that can be applied in any order without affecting the final state of the document. This allows each client to see the changes made by other clients in real-time, while ensuring that the final state of the document is consistent across all clients.

Quadtree / Rtree:

Quadtree and Rtree are two related spatial data structures used for indexing and querying two-dimensional data, such as points, lines, and polygons. Quadtree is a hierarchical data structure that recursively subdivides a two-dimensional space into four quadrants, with each quadrant represented by a node in the tree. Rtree is a similar data structure that uses a hierarchical tree of rectangles to represent the data. Both Quadtree and Rtree are useful for spatial indexing and querying in applications such as geographic information systems and computer graphics.

Ray casting:

Ray casting is a technique used to render three-dimensional scenes in computer graphics. It works by tracing rays from the viewer's eye through each pixel in the image plane and into the scene. The rays are tested for intersections with objects in the scene, and the color of the pixel is determined based on the properties of the closest object. Ray casting is a computationally intensive process, but can produce high-quality images with realistic lighting and shading effects.

Reverse index:

Reverse index, also known as an inverted index, is a data structure used to index and search text documents. It works by creating an index of all the words in the documents, along with a list of the documents that contain each word. This allows for efficient searching of documents based on the words they contain. Reverse index is used in many applications, such as search engines and document management systems.

Rsync algorithm:

Rsync is a file synchronization algorithm used to efficiently transfer files between two systems over a network. It works by comparing the contents of the files on both systems and transferring only the differences between them. This can greatly reduce the amount of data that needs to be transferred, especially for large files or files that have only small changes. Rsync is commonly used for backups and for transferring large files over the internet.

Trie algorithm:

A Trie, also known as a prefix tree, is a tree-like data structure used to store and retrieve strings efficiently. Each node in the tree represents a prefix of one or more strings, and the edges represent the characters that can follow the prefix. Tries are useful for applications such as autocomplete and spell checking, where fast string lookups are required.

Coding Questions

Subsets : https://leetcode.com/problems/subsets/

public List<List<Integer>> subsets(int[] nums) {

List<List<Integer>> list = new ArrayList<>();

Arrays.sort(nums);

backtrack(list, new ArrayList<>(), nums, 0);

return list;

}

private void backtrack(List<List<Integer>> list , List<Integer> tempList, int [] nums, int start){

list.add(new ArrayList<>(tempList));

for(int i = start; i < nums.length; i++){

tempList.add(nums[i]);

backtrack(list, tempList, nums, i + 1);

tempList.remove(tempList.size() - 1);

}

}

Subsets II (contains duplicates) : https://leetcode.com/problems/subsets-ii/

public List<List<Integer>> subsetsWithDup(int[] nums) {

List<List<Integer>> list = new ArrayList<>();

Arrays.sort(nums);

backtrack(list, new ArrayList<>(), nums, 0);

return list;

}

private void backtrack(List<List<Integer>> list, List<Integer> tempList, int [] nums, int start){

list.add(new ArrayList<>(tempList));

for(int i = start; i < nums.length; i++){

if(i > start && nums[i] == nums[i-1]) continue; // skip duplicates

tempList.add(nums[i]);

backtrack(list, tempList, nums, i + 1);

tempList.remove(tempList.size() - 1);

}

}

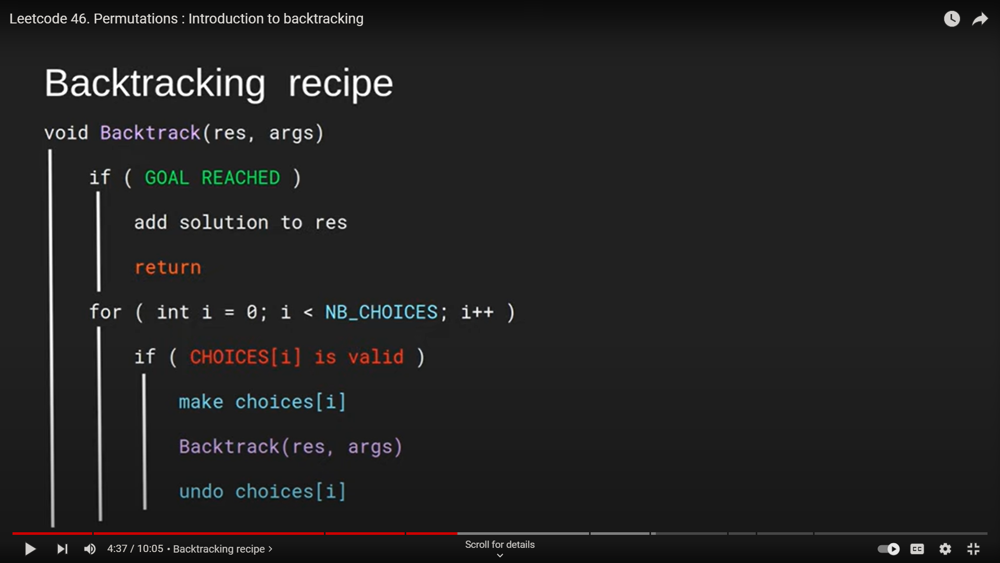

Permutations : https://leetcode.com/problems/permutations/

public List<List<Integer>> permute(int[] nums) {

List<List<Integer>> list = new ArrayList<>();

// Arrays.sort(nums); // not necessary

backtrack(list, new ArrayList<>(), nums);

return list;

}

private void backtrack(List<List<Integer>> list, List<Integer> tempList, int [] nums){

if(tempList.size() == nums.length){

list.add(new ArrayList<>(tempList));

} else{

for(int i = 0; i < nums.length; i++){

if(tempList.contains(nums[i])) continue; // element already exists, skip

tempList.add(nums[i]);

backtrack(list, tempList, nums);

tempList.remove(tempList.size() - 1);

}

}

}

Permutations II (contains duplicates) : https://leetcode.com/problems/permutations-ii/

public List<List<Integer>> permuteUnique(int[] nums) {

List<List<Integer>> list = new ArrayList<>();

Arrays.sort(nums);

backtrack(list, new ArrayList<>(), nums, new boolean[nums.length]);

return list;

}

private void backtrack(List<List<Integer>> list, List<Integer> tempList, int [] nums, boolean [] used){

if(tempList.size() == nums.length){

list.add(new ArrayList<>(tempList));

} else{

for(int i = 0; i < nums.length; i++){

if(used[i] || i > 0 && nums[i] == nums[i-1] && !used[i - 1]) continue;

used[i] = true;

tempList.add(nums[i]);

backtrack(list, tempList, nums, used);

used[i] = false;

tempList.remove(tempList.size() - 1);

}

}

}

Combination Sum : https://leetcode.com/problems/combination-sum/

public List<List<Integer>> combinationSum(int[] nums, int target) {

List<List<Integer>> list = new ArrayList<>();

Arrays.sort(nums);

backtrack(list, new ArrayList<>(), nums, target, 0);

return list;

}

private void backtrack(List<List<Integer>> list, List<Integer> tempList, int [] nums, int remain, int start){

if(remain < 0) return;

else if(remain == 0) list.add(new ArrayList<>(tempList));

else{

for(int i = start; i < nums.length; i++){

tempList.add(nums[i]);

backtrack(list, tempList, nums, remain - nums[i], i); // not i + 1 because we can reuse same elements

tempList.remove(tempList.size() - 1);

}

}

}

Combination Sum II (can't reuse same element) : https://leetcode.com/problems/combination-sum-ii/

public List<List<Integer>> combinationSum2(int[] nums, int target) {

List<List<Integer>> list = new ArrayList<>();

Arrays.sort(nums);

backtrack(list, new ArrayList<>(), nums, target, 0);

return list;

}

private void backtrack(List<List<Integer>> list, List<Integer> tempList, int [] nums, int remain, int start){

if(remain < 0) return;

else if(remain == 0) list.add(new ArrayList<>(tempList));

else{

for(int i = start; i < nums.length; i++){

if(i > start && nums[i] == nums[i-1]) continue; // skip duplicates

tempList.add(nums[i]);

backtrack(list, tempList, nums, remain - nums[i], i + 1);

tempList.remove(tempList.size() - 1);

}

}

}

Palindrome Partitioning : https://leetcode.com/problems/palindrome-partitioning/

public List<List<String>> partition(String s) {

List<List<String>> list = new ArrayList<>();

backtrack(list, new ArrayList<>(), s, 0);

return list;

}

public void backtrack(List<List<String>> list, List<String> tempList, String s, int start){

if(start == s.length())

list.add(new ArrayList<>(tempList));

else{

for(int i = start; i < s.length(); i++){

if(isPalindrome(s, start, i)){

tempList.add(s.substring(start, i + 1));

backtrack(list, tempList, s, i + 1);

tempList.remove(tempList.size() - 1);

}

}

}

}

public boolean isPalindrome(String s, int low, int high){

while(low < high)

if(s.charAt(low++) != s.charAt(high--)) return false;

return true;

}

java interview questions:

Number ways to create object in java?

Using new keyword

Using new instance

Using clone() method

Using deserialization

Using newInstance() method of Constructor class

Using new keyword:

The new keyword is used to create a new instance of a class. It allocates memory for the object and initializes its fields with their default values. Here's an example:

MyClass obj = new MyClass();

This creates a new instance of the MyClass class and assigns it to the obj variable.

Using new instance:

The newInstance() method of the Class class is used to create a new instance of a class at runtime. It is similar to using the new keyword, but allows you to create an instance of a class whose name is not known until runtime. Here's an example:

Class<?> cls = Class.forName("MyClass");

MyClass obj = (MyClass) cls.newInstance();

This creates a new instance of the MyClass class using the newInstance() method of the Class class.

Using clone() method:

The clone() method is used to create a copy of an object. It creates a new instance of the same class as the original object and copies the values of all fields from the original object to the new object. Here's an example:

MyClass obj1 = new MyClass();

MyClass obj2 = obj1.clone();

This creates a new instance of the MyClass class using the clone() method and assigns it to the obj2 variable.

Using deserialization:

Deserialization is the process of converting a serialized object back into an object in memory. It is typically used to transfer objects between different systems or to store objects in a database. Here's an example:

ObjectInputStream in = new ObjectInputStream(new FileInputStream("data.bin"));

MyClass obj = (MyClass) in.readObject();

This reads a serialized object from a file called data.bin and deserializes it into a new instance of the MyClass class.

Using newInstance() method of Constructor class:

The newInstance() method of the Constructor class is used to create a new instance of a class using a constructor at runtime. It is similar to using the new keyword, but allows you to create an instance of a class whose constructor is not known until runtime. Here's an example:

Constructor<MyClass> constructor = MyClass.class.getConstructor(String.class, int.class);

MyClass obj = constructor.newInstance("hello", 123);

This creates a new instance of the MyClass class using the newInstance() method of the Constructor class and passing arguments to the constructor.

what is use of reflections in java?

Reflection is a mechanism in Java that allows you to inspect, manipulate, and create objects at runtime. Reflection allows you to get information about classes, methods, fields, and other objects at runtime, without having to know the class or object at compile time.

Reflection is useful in a variety of situations, such as:

Dynamically loading classes at runtime

Creating objects based on user input or configuration files

Implementing frameworks that can work with any class, without having to know the class at compile time

Debugging and testing frameworks that need to work with any class

Creating proxies for objects that intercept method calls and perform additional actions

Reflection can also be used to bypass access control restrictions, which can be useful in certain situations. However, it is important to use reflection judiciously and only when necessary, as it can make your code more complex and harder to maintain.

Here are some common use cases for reflection in Java:

Dynamically loading classes at runtime:

Class<?> clazz = Class.forName("com.example.MyClass");

Object obj = clazz.newInstance();

In this example, we use the Class.forName() method to load the

MyClass class at runtime. We then use the newInstance()

method to create a new instance of the class.

Creating objects based on user input or configuration files:

String className = "com.example.MyClass";

Class<?> clazz = Class.forName(className);

Constructor<?> constructor = clazz.getConstructor(String.class);

Object obj = constructor.newInstance("John");

In this example, we use the Class.forName() method to load the

MyClass class at runtime. We then use the getConstructor()

method to get the constructor that takes a String argument. We then use the newInstance()

method to create a new instance of the class and pass in the argument "John".

Implementing frameworks that can work with any class, without having to know the class at compile time:

Class<?> clazz = Class.forName(className);

Object obj = clazz.newInstance();

Method method = clazz.getMethod("myMethod", String.class);

method.invoke(obj, "John");

In this example, we use the Class.forName() method to load the class at runtime. We then use the newInstance() method to create a new instance of the class. We then use the

getMethod() method to get the myMethod method of the class that takes a String argument. We then use the invoke() method to call the method and pass in the argument "John".

Debugging and testing frameworks that need to work with any class:

Class<?> clazz = Class.forName(className);

Object obj = clazz.newInstance();

Field field = clazz.getField("myField");

field.set(obj, "John");

In this example, we use the Class.forName() method to load the class at runtime. We then use the newInstance() method to create a new instance of the class. We then use the

getField() method to get the myField field of the class. We then use the set() method to set the value of the field to "John".

HashMap is a class in Java that implements the Map interface, which allows you to store key-value pairs. HashMap is a hash table, which means that it uses a hash function to map keys to indices in an array. When you add a key-value pair to a HashMap, the key is hashed to determine the index in the array where the value should be stored. If there is already a value stored at that index, the new value is added to the end of a linked list at that index.

Here's how HashMap works internally:

The HashMap class implements the Map interface, which means that it has methods for adding, removing, and accessing key-value pairs.

When you create a new HashMap, it is initialized with a default capacity of 16. The capacity is the number of indices in the hash table.

When you add a key-value pair to a HashMap, the key is hashed to determine the index in the array where the value should be stored. The hash function used by HashMap is a simple modulo operation, which means that the index is calculated as

hash(key) % capacity

.

If there is already a value stored at that index, the new value is added to the end of a linked list at that index. This allows multiple values to be stored at the same index.

When you access a value in a HashMap, the key is hashed to determine the index in the array where the value is stored. If there is more than one value stored at that index, you need to iterate through the linked list to find the value you are looking for.

When you remove a key-value pair from a HashMap, the key is hashed to determine the index in the array where the value is stored. If there is more than one value stored at that index, you need to iterate through the linked list to find the value you want to remove. Once you find the value, you remove it from the linked list and update the size of the HashMap.

HashMap is not synchronized, which means that it can be accessed by multiple threads simultaneously without causing data corruption. If you need to synchronize access to a HashMap, you can use a synchronized wrapper class such as

Collections.synchronizedMap()

.

Overall, HashMap is a useful data structure for storing key-value pairs in Java, especially when you need to access values quickly based on the key. However, it is important to choose the right type of HashMap (e.g. LinkedHashMap, TreeMap, etc.) based on your specific use case, as some types of HashMaps are better suited for certain types of operations.

how to make custom immutable class in java?

To create a custom immutable class in Java, you can follow these steps:

Create a public class with a private constructor and public static factory methods to create instances of the class.

Make all fields final and private.

Override the equals and hashCode methods to compare instances based on their fields.

Implement the toString method to provide a human-readable string representation of the instance.

Here's an example implementation:

public final class Point {

private final int x;

private final int y;

private Point(int x, int y) {

this.x = x;

this.y = y;

}

public static Point of(int x, int y) {

return new Point(x, y);

}

public int getX() {

return x;

}

public int getY() {

return y;

}

@Override

public boolean equals(Object o) {

if (this == o) return true;

if (o == null || getClass()!= o.getClass()) return false;

Point point = (Point) o;

return x == point.x &&

y == point.y;

}

@Override

public int hashCode() {

return Objects.hash(x, y);

}

@Override

public String toString() {

return "Point{" +

"x=" + x +

", y=" + y +

'}';

}

}

import java.util.Arrays;

public class Main {

public static int upper_bound(int[] A, int t) {

int l = 0, r = A.length - 1;

while (l <= r) {

int mid = (l + r) / 2;

if (A[mid] <= t) {

l = mid + 1;

} else {

r = mid - 1;

}

}

return l;

}

public static void main(String[] args) {

int[] A = {1, 2, 3, 4, 5};

int t = 3;

int res = upper_bound(A, t);

System.out.println(res); // Output: 3

}

}

import java.util.Arrays;

public class Main {

public static int lower_bound(int[] A, int t) {

int l = 0, r = A.length - 1;

while (l <= r) {

int mid = (l + r) / 2;

if (A[mid] < t) {

l = mid + 1;

} else {

r = mid - 1;

}

}

return l;

}

public static void main(String[] args) {

int[] A = {1, 2, 3, 4, 5};

int t = 3;

int res = lower_bound(A, t);

System.out.println(res); // Output: 2

}

}

1. What is the difference between an abstract class and an interface?

- An abstract class can have both abstract and non-abstract methods, while an interface can only have abstract methods.

- A class can only extend one abstract class, but can implement multiple interfaces.

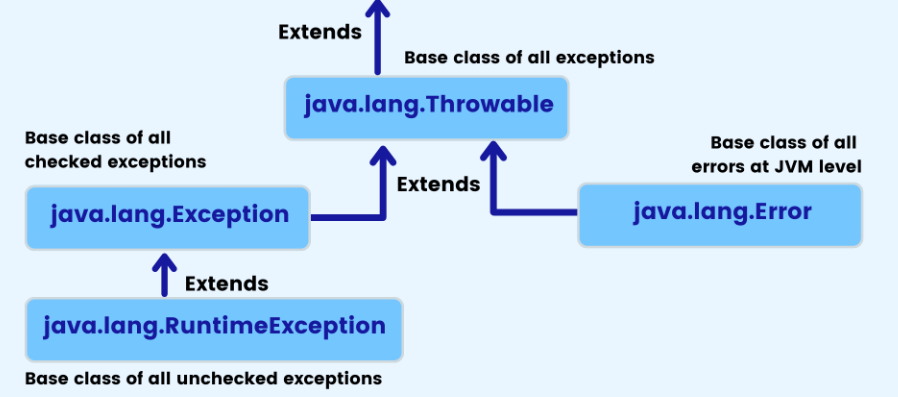

2. What is the difference between a checked and an unchecked exception?

- A checked exception must be handled by the calling method or declared in its throws clause, while an unchecked exception does not have to be handled or declared.

- Checked exceptions are typically used for recoverable errors, while unchecked exceptions are used for unrecoverable errors.

3. What is the difference between a stack and a queue?

- A stack is a Last-In-First-Out (LIFO) data structure, while a queue is a First-In-First-Out (FIFO) data structure.

- Stacks are typically used for backtracking, while queues are used for scheduling.

4. What is the difference between a HashMap and a TreeMap?

- A HashMap is an unordered collection of key-value pairs, while a TreeMap is a sorted collection of key-value pairs.

- HashMaps have constant-time performance for most operations, while TreeMaps have logarithmic-time performance.

5. What is the difference between a String, StringBuilder, and StringBuffer?

- A String is an immutable sequence of characters, while a StringBuilder and StringBuffer are mutable.

- StringBuilder is not thread-safe, while StringBuffer is thread-safe.

- StringBuilder is faster than StringBuffer, but should only be used in single-threaded environments.

Difference between Comparator and Comparable in Java ?

1. Comparable is an interface in Java, while Comparator is an interface that can be implemented by a class.

2. A class that implements Comparable can be sorted using the Arrays.sort() or Collections.sort() method, while a class that implements Comparator can be used to sort any other class that does not implement Comparable .

3. Comparable provides a natural ordering for a class, while Comparator provides a custom ordering that can be defined by the programmer.

4. The compareTo() method is used to compare two objects that implement Comparable , while the compare() method is used to compare two objects using a Comparator .

5. The compareTo() method returns a negative integer, zero, or a positive integer depending on whether the object is less than, equal to, or greater than the specified object, while the compare() method returns a negative integer, zero, or a positive integer depending on whether the first object is less than, equal to, or greater than the second object.

6. Comparable is implemented by the class that needs to be sorted, while Comparator is implemented by the class that performs the sorting.

7. Comparable provides a single sorting sequence, while Comparator can provide multiple sorting sequences.

8. Comparable can be used to sort a list of objects based on a single attribute of the object, while Comparator can be used to sort a list of objects based on multiple attributes of the object.

9. Comparable is used when the natural ordering of a class is known and fixed, while Comparator is used when the ordering of a class needs to be changed at runtime.

10. Comparable is more efficient than Comparator because it does not require an additional object to perform the comparison.

11. Comparable is implemented by the class itself, while Comparator is implemented by a separate class.

12. Comparable uses the compareTo() method, while Comparator uses the compare() method.

13. Comparable is used to sort elements in a natural order, while Comparator is used to sort elements in a custom order.

14. Comparable can be used to sort arrays, while Comparator can be used to sort collections.

15. Comparable can only provide a single sorting sequence, while Comparator can provide multiple sorting sequences.

16. Comparable is used when the natural ordering of a class is known and fixed, while Comparator is used when the ordering of a class needs to be changed at runtime.

17. Comparable is a generic interface, while Comparator is not.

18. Comparable is used for sorting objects of the same class, while Comparator can be used to sort objects of different classes.

19. Comparable is implemented by overriding the compareTo() method, while Comparator is implemented by creating a new class that implements the Comparator interface.

20. Comparable is used to define the default ordering of a class, while Comparator is used to define a custom ordering of a class.

1. ArrayList: An ArrayList is a resizable array implementation of the List interface. It can store null and duplicate values.

2. LinkedList: A LinkedList is a doubly linked list implementation of the List interface. It can store null and duplicate values.

3. HashSet: A HashSet is an implementation of the Set interface that uses a hash table for storage. It can store null values and does not allow duplicate values.

4. LinkedHashSet: A LinkedHashSet is an implementation of the Set interface that maintains the insertion order of elements. It can store null values and does not allow duplicate values.

5. TreeSet: A TreeSet is an implementation of the SortedSet interface that uses a tree structure for storage. It does not allow null values and does not allow duplicate values based on the natural ordering of the elements or a Comparator.

In Java, a marker interface is an interface that has no methods or fields, but is used to indicate certain properties or behaviors of a class that implements the interface. Some of the commonly used marker interfaces in Java are:

1. Serializable: This interface is used to indicate that a class can be serialized, i.e., its objects can be converted into a stream of bytes and stored in a file or sent over a network.

2. Cloneable: This interface is used to indicate that a class can be cloned, i.e., its objects can be duplicated without calling the constructor.

3. RandomAccess: This interface is used to indicate that a list or collection supports random access, i.e., elements can be accessed in constant time using an index.

4. SingleThreadModel: This interface is used to indicate that a servlet can handle only one request at a time, and is used for thread safety in web applications.

5. Remote: This interface is used to indicate that a class can be accessed remotely using Java Remote Method Invocation (RMI).

6. Annotation: This interface is used to define custom annotations that can be used to provide additional information about a class, method, or field.

Note that these interfaces do not provide any methods or functionality, but are used by the Java runtime environment or other libraries to determine certain properties or behaviors of the classes that implement them.

what is hash collisions in Java?

In Java, a hash collision occurs when two different keys have the same hash code. When this happens, the two keys are said to collide. This is a common occurrence in hash table implementations, which rely on hash codes to efficiently store and retrieve objects.

When a hash collision occurs, the hash table must handle the collision by storing both objects in the same bucket. This can lead to performance issues if the number of collisions is high, as the time required to search for a specific key in the bucket increases.

To handle hash collisions in Java, the hash table implementation uses a technique called chaining. In chaining, each bucket in the hash table contains a linked list of objects that have the same hash code. When a key is added to the hash table, it is added to the linked list in the appropriate bucket. When the hash table needs to retrieve an object, it first calculates the hash code of the key and then searches the linked list in the corresponding bucket for the key.

To minimize the occurrence of hash collisions, it is important to choose a good hash function. A good hash function should distribute the keys evenly across the buckets in the hash table, minimizing the number of collisions. In Java, the hashCode() method is used to generate hash codes for objects. It is important to implement this method carefully to ensure that it generates good hash codes that minimize collisions.

In summary, hash collisions can occur in Java when two different keys have the same hash code. To handle collisions, Java uses a technique called chaining, which stores objects with the same hash code in a linked list in the same bucket. To minimize collisions, it is important to choose a good hash function and implement the hashCode() method carefully.

In Java, an error is a serious problem that typically prevents the application from functioning properly and may even cause the application to crash. Errors are usually caused by external factors such as lack of system resources, hardware failures, or network problems. Examples of errors in Java include OutOfMemoryError, StackOverflowError, and VirtualMachineError.

On the other hand, an exception is a problem that occurs within the application itself and can be handled by the application. Exceptions are typically caused by incorrect input, programming errors, or unexpected conditions. Examples of exceptions in Java include NullPointerException, ArrayIndexOutOfBoundsException, and IOException.

In Java, errors and exceptions are both subclasses of the Throwable class. However, errors are unchecked exceptions, which means that they do not need to be explicitly handled or declared in a method's throws clause. Exceptions, on the other hand, are checked exceptions, which means that they must be either caught and handled or declared in a method's throws clause.

It is important to handle exceptions properly in Java to ensure that your application runs smoothly and handles errors gracefully. This can be done using try-catch blocks, which allow you to catch and handle exceptions, or by declaring exceptions in a method's throws clause, which allows you to pass the responsibility of handling the exception to the calling method.

Checked Exception:

A checked exception is a type of exception that must be declared in a method or constructor's throws clause or handled using a try-catch block. Some examples of checked exceptions in Java include:

1. IOException: This exception is thrown when an input or output operation fails, such as when reading or writing to a file.

2. SQLException: This exception is thrown when there is an error in a database operation, such as when executing a query or updating a record.

Unchecked Exception:

An unchecked exception is a type of exception that does not need to be declared in a method or constructor's throws clause and can be handled using a try-catch block. Some examples of unchecked exceptions in Java include:

1. NullPointerException: This exception is thrown when a null reference is used in a method or operation that requires a non-null value.

2. ArrayIndexOutOfBoundsException: This exception is thrown when an attempt is made to access an array element with an index that is out of bounds.

3. IllegalArgumentException: This exception is thrown when an illegal argument is passed to a method or constructor, such as a negative value for a parameter that requires a positive value.

It is important to handle both checked and unchecked exceptions appropriately in Java to ensure that your application runs smoothly and handles errors gracefully.

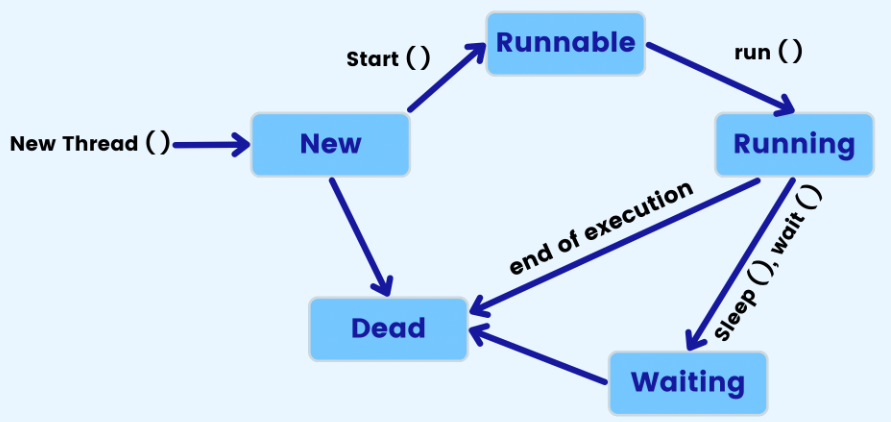

1. What is a thread?

- A thread is a lightweight process that can run concurrently with other threads within the same program.

2. What is multi-threading?

- Multi-threading is the ability of an operating system or program to manage multiple threads of execution concurrently.

3. What are the advantages of multi-threading?

- Multi-threading can improve performance by allowing multiple tasks to be executed simultaneously.

- Multi-threading can improve responsiveness by allowing the user interface to remain responsive while background tasks are executed.

- Multi-threading can simplify code by breaking complex tasks into smaller, more manageable pieces.

4. What is a race condition?

- A race condition is a situation where the behavior of a program depends on the timing of events, and different outcomes are possible depending on the order in which events occur.

5. What is synchronization?

- Synchronization is the process of controlling access to shared resources in a multi-threaded environment to prevent race conditions and ensure data consistency.

6. What is a deadlock?

- A deadlock is a situation where two or more threads are blocked, each waiting for the other to release a resource, and neither can proceed.

7. What is a thread pool?

- A thread pool is a collection of threads that can be used to execute tasks concurrently, without the overhead of creating and destroying threads for each task.

8. What is the difference between a thread and a process?

- A thread is a lightweight process that can run concurrently with other threads within the same program, while a process is a separate instance of a program that runs independently of other processes.

9. What is the Java synchronized keyword?

- The synchronized keyword is used to create a synchronized block of code, which ensures that only one thread can execute the block at a time, preventing race conditions and ensuring data consistency.

10. What is a thread-safe class?

- A thread-safe class is a class that can be safely used by multiple threads concurrently without causing race conditions or data inconsistency. This is achieved through synchronization or other concurrency control mechanisms.

1. What is a functional interface?

- A functional interface is an interface that contains only one abstract method and can be used as the basis for a lambda expression or method reference.

2. What is the purpose of a functional interface?

- The purpose of a functional interface is to provide a single abstract method that can be implemented by a lambda expression or method reference, allowing for more concise and readable code.

3. What is the @FunctionalInterface annotation?

- The @FunctionalInterface annotation is used to indicate that an interface is intended to be a functional interface. It is not strictly necessary, but can be helpful for documentation and to prevent accidental addition of additional abstract methods.

4. What is the Predicate functional interface?

- The Predicate functional interface represents a function that takes a single argument and returns a boolean value. It is often used for filtering or testing elements in a collection.

5. What is the Consumer functional interface?

- The Consumer functional interface represents a function that takes a single argument and returns no result. It is often used for performing an action on each element in a collection.

6. What is the Function functional interface?

- The Function functional interface represents a function that takes a single argument and returns a result. It is often used for transforming or mapping elements in a collection.

7. What is the Supplier functional interface?

- The Supplier functional interface represents a function that takes no arguments and returns a result. It is often used for lazy initialization or to provide default values.

8. What is the BiFunction functional interface?

- The BiFunction functional interface represents a function that takes two arguments and returns a result. It is often used for combining or merging two values.

9. What is the UnaryOperator functional interface?

- The UnaryOperator functional interface represents a function that takes a single argument of a certain type and returns a result of the same type. It is often used for transforming or modifying an object of a certain type.

10. What is the BinaryOperator functional interface?

- The BinaryOperator functional interface represents a function that takes two arguments of a certain type and returns a result of the same type. It is often used for combining or merging two objects of a certain type.

what are intermediate opeartions in stream api?

filter

: This operation takes a predicate and returns a new stream that contains only the elements that match the predicate.

map

: This operation takes a function and returns a new stream that contains the results of applying the function to each element in the original stream.

flatMap

: This operation takes a function that returns a stream and returns a new stream that contains the flattened elements of the original stream.

sorted

: This operation returns a new stream that contains the elements of the original stream sorted in ascending order.

distinct

: This operation returns a new stream that contains only the distinct elements of the original stream.

limit

: This operation returns a new stream that contains the first n elements of the original stream.

skip

: This operation returns a new stream that skips the first n elements of the original stream and then returns the remaining elements.

forEach

: This operation performs an action for each element in the stream.

reduce

: This operation reduces the elements of the stream to a single value using a binary operator.

collect

: This operation collects the elements of the stream into a collection, such as a List or a Set.

what are Terminal opeartions in stream api?

count

: This operation returns the count of elements in the stream.

anyMatch

: This operation returns true if any element in the stream matches the given predicate.

allMatch

: This operation returns true if all elements in the stream match the given predicate.

noneMatch

: This operation returns true if no element in the stream matches the given predicate.

findFirst

: This operation returns an Optional object containing the first element in the stream that matches the given predicate, or an empty Optional if no such element exists.

findAny

: This operation returns an Optional object containing any element in the stream that matches the given predicate, or an empty Optional if no such element exists.

forEach

: This operation performs an action for each element in the stream.

reduce

: This operation reduces the elements of the stream to a single value using a binary operator.

collect

: This operation collects the elements of the stream into a collection, such as a List or a Set.

min

: This operation returns the minimum element in the stream according to the given comparator.

max

: This operation returns the maximum element in the stream according to the given comparator.

sum

: This operation returns the sum of all elements in the stream.

average

: This operation returns the average of all elements in the stream.

These are just a few of the terminal operations available in the Stream API. There are many more operations that can be used to manipulate and transform streams of data.

1. What are Java 8 streams?

- Java 8 streams are a new feature that provide a way to process collections of data in a functional, declarative style. Streams allow for concise, readable code that is also parallelizable.

2. What is a stream pipeline?

- A stream pipeline is a sequence of stream operations that are combined to process a collection of data. The pipeline consists of a source, zero or more intermediate operations, and a terminal operation.

3. What is the difference between intermediate and terminal operations in a stream pipeline?

- Intermediate operations are operations that transform or filter the data in a stream, but do not produce a final result. Terminal operations are operations that produce a final result, such as a single value or a collection.

4. What is a parallel stream?

- A parallel stream is a stream that is processed concurrently across multiple threads, allowing for improved performance on multi-core processors.

5. What is a reduction operation in a stream?

- A reduction operation is a terminal operation that combines the elements of a stream into a single result. Examples of reduction operations include sum, min, max, and count.

6. What is the difference between a forEach and a forEachOrdered operation in a stream?

- The forEach operation processes the elements of a stream in an unordered manner, while the forEachOrdered operation processes the elements in the order they appear in the stream.

7. What is the difference between a map and a flatMap operation in a stream?

- The map operation transforms the elements of a stream by applying a function to each element, while the flatMap operation transforms the elements by flattening nested collections and applying a function to each element.

8. What is the filter operation in a stream?

- The filter operation returns a new stream that contains only the elements that satisfy a given predicate.

9. What is the sorted operation in a stream?

- The sorted operation returns a new stream that contains the elements of the original stream sorted according to a given comparator.

10. What is the collect operation in a stream?

- The collect operation is a terminal operation that collects the elements of a stream into a collection or other data structure. The collect operation takes a Collector object that specifies how the elements should be collected.

1. What is a thread in Java?

- A thread is a lightweight process that can run concurrently with other threads within the same program.

2. What is a process in Java?

- A process is a separate instance of a program that runs independently of other processes.

3. What is the difference between a thread and a process in Java?

- A thread is a lightweight process that runs within a process, while a process is a standalone entity that runs independently of other processes.

4. What is the Thread class in Java?

- The Thread class is a built-in class in Java that provides the framework for creating and managing threads.

5. What is the Runnable interface in Java?

- The Runnable interface is a built-in interface in Java that provides a way to define the code that will be executed in a new thread.

6. What is the difference between extending the Thread class and implementing the Runnable interface in Java?

- Extending the Thread class creates a new class that is a thread, while implementing the Runnable interface allows any class to be executed in a new thread.

7. What is the sleep() method in Java?

- The sleep() method is a built-in method in Java that causes the current thread to pause for a specified amount of time, allowing other threads to execute.

8. What is the join() method in Java?

- The join() method is a built-in method in Java that waits for a thread to complete before continuing execution of the current thread.

9. What is the start() method in Java?

- The start() method is a built-in method in Java that starts a new thread by calling the run() method of the Thread object.

10. What is the synchronized keyword in Java?

- The synchronized keyword is a built-in keyword in Java that provides a way to control access to shared resources in a multi-threaded environment, preventing race conditions and ensuring data consistency.

1. What is a multi-threaded environment in Java?

- A multi-threaded environment in Java is an environment where multiple threads of execution can run concurrently within the same program.

2. What is the purpose of multi-threading in Java?

- The purpose of multi-threading in Java is to improve performance and responsiveness by allowing multiple tasks to be executed simultaneously, without blocking the user interface or other critical processes.

3. What is a thread pool in Java?

- A thread pool in Java is a collection of pre-initialized threads that can be used to execute tasks concurrently, without the overhead of creating and destroying threads for each task.

4. What is the difference between a thread and a process in Java?

- A thread is a lightweight process that runs within a process, while a process is a standalone entity that runs independently of other processes.

5. What is a race condition in Java?

- A race condition in Java is a situation where the behavior of a program depends on the timing of events, and different outcomes are possible depending on the order in which events occur.

6. What is synchronization in Java?

- Synchronization in Java is the process of controlling access to shared resources in a multi-threaded environment, preventing race conditions and ensuring data consistency.

7. What is the synchronized keyword in Java?

- The synchronized keyword in Java is used to create a synchronized block of code, which ensures that only one thread can execute the block at a time, preventing race conditions and ensuring data consistency.

8. What is a deadlock in Java?

- A deadlock in Java is a situation where two or more threads are blocked, each waiting for the other to release a resource, and neither can proceed.

9. What is the wait() method in Java?

- The wait() method in Java is a built-in method that causes the current thread to wait until another thread notifies it to resume execution.

10. What is the notify() method in Java?

- The notify() method in Java is a built-in method that wakes up a thread that is waiting on a shared resource, allowing it to resume execution.

1. What is garbage collection in Java?

- Garbage collection in Java is the process of automatically freeing memory that is no longer in use by a program, improving memory management and reducing the risk of memory leaks.

2. What are the different types of garbage collection in Java?

- The different types of garbage collection in Java include serial, parallel, CMS (Concurrent Mark Sweep), and G1 (Garbage First).

3. What is serial garbage collection in Java?

- Serial garbage collection in Java is a simple, single-threaded approach to garbage collection that is suitable for small applications with low memory requirements.

4. What is parallel garbage collection in Java?

- Parallel garbage collection in Java is a multi-threaded approach to garbage collection that is suitable for larger applications with higher memory requirements.

5. What is CMS (Concurrent Mark Sweep) garbage collection in Java?

- CMS garbage collection in Java is a concurrent approach to garbage collection that reduces the pause times associated with garbage collection, allowing applications to be more responsive.

6. What is G1 (Garbage First) garbage collection in Java?

- G1 garbage collection in Java is a low-pause, server-style approach to garbage collection that is suitable for large, multi-processor systems with high memory requirements.

7. What is the difference between minor and major garbage collection in Java?

- Minor garbage collection in Java is a process that frees memory in the young generation of objects, while major garbage collection frees memory in the old generation of objects.

8. What is the heap in Java?

- The heap in Java is a region of memory that is used for dynamic memory allocation, including objects created by a program.

9. What is the permanent generation in Java?

- The permanent generation in Java is a region of memory that is used for storing metadata and class definitions, and is separate from the heap.

10. What is the role of the System.gc() method in Java?

- The System.gc() method in Java is a built-in method that suggests to the garbage collector that it should run, but does not guarantee that garbage collection will occur.

1. What is object-oriented programming (OOP) in Java?

- Object-oriented programming in Java is a programming paradigm that focuses on creating objects that encapsulate data and behavior, allowing for modular and reusable code.

2. What are the four basic principles of OOP in Java?

- The four basic principles of OOP in Java are encapsulation, inheritance, polymorphism, and abstraction.

3. What is encapsulation in Java?

- Encapsulation in Java is the process of hiding the implementation details of an object and exposing only the necessary information through a public interface.

4. What is inheritance in Java?

- Inheritance in Java is the process of creating a new class that inherits the properties and methods of an existing class, allowing for code reuse and specialization.

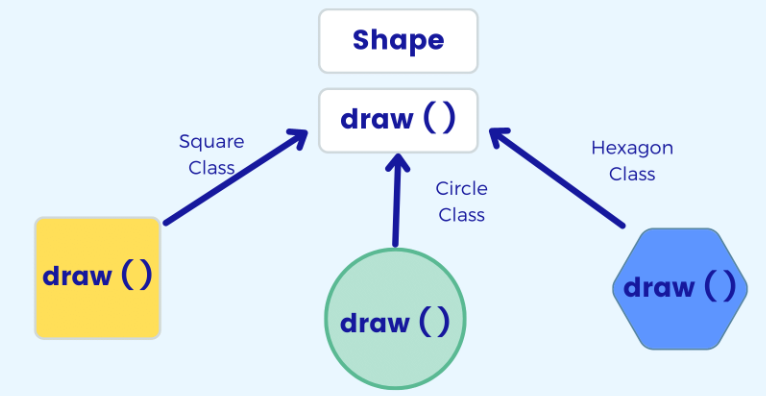

5. What is polymorphism in Java?

- Polymorphism in Java is the ability of an object to take on multiple forms, allowing for flexibility and extensibility in code design.

6. What is abstraction in Java?

- Abstraction in Java is the process of creating a simplified representation of a complex system, allowing for easier understanding and management of the system.

7. What is a class in Java?

- A class in Java is a blueprint for creating objects that define the properties and methods of the object.

8. What is an object in Java?

- An object in Java is an instance of a class that has its own set of properties and methods.

9. What is a constructor in Java?

- A constructor in Java is a special method that is used to create and initialize an object of a class.

10. What is the difference between an abstract class and an interface in Java?

- An abstract class in Java is a class that cannot be instantiated and can contain both abstract and non-abstract methods, while an interface is a collection of abstract methods that define a contract for implementing classes.

1. What is an error in Java?

- An error in Java is a serious problem that occurs at runtime and cannot be handled by the program, such as an out of memory error or a stack overflow error.

2. What is an exception in Java?

- An exception in Java is a problem that occurs at runtime and can be handled by the program, such as a null pointer exception or an arithmetic exception.

3. What is the difference between an error and an exception in Java?

- An error is a serious problem that cannot be handled by the program, while an exception is a problem that can be handled by the program.

4. What is exception handling in Java?

- Exception handling in Java is the process of handling exceptions that occur at runtime, preventing the program from crashing and providing a graceful way to handle unexpected situations.

5. What is the try-catch block in Java?

- The try-catch block in Java is a built-in mechanism for handling exceptions, where code that may throw an exception is placed in a try block, and the exception is caught and handled in a catch block.

6. What is the finally block in Java?

- The finally block in Java is a built-in mechanism for executing code that must be run regardless of whether an exception is thrown or not, such as closing a file or releasing a resource.

7. What is the throw keyword in Java?

- The throw keyword in Java is a built-in keyword that allows a program to throw a custom exception, allowing for more precise and meaningful error handling.

8. What is the throws keyword in Java?

- The throws keyword in Java is a built-in keyword that is used to declare that a method may throw a particular exception, allowing the caller to handle the exception appropriately.

9. What is the difference between checked and unchecked exceptions in Java?

- Checked exceptions are checked at compile time and must be handled by the program, while unchecked exceptions are not checked at compile time and may or may not be handled by the program.

10. What is the role of the Exception class in Java?

- The Exception class in Java is a built-in class that provides a framework for creating and handling exceptions, allowing for more robust and reliable code.

1. What is the final keyword in Java?

- The final keyword in Java is used to declare a variable, method, or class that cannot be modified or overridden once it has been defined.

2. What is the finally keyword in Java?

- The finally keyword in Java is used to define a block of code that will be executed regardless of whether an exception is thrown or not, allowing for cleanup or other tasks to be performed.

3. What is the finalize keyword in Java?

- The finalize keyword in Java is used to define a method that will be called by the garbage collector before an object is destroyed, allowing for cleanup or other tasks to be performed.

4. Can a final variable be modified in Java?

- No, a final variable cannot be modified once it has been defined.

5. Can a final method be overridden in Java?

- No, a final method cannot be overridden by a subclass in Java.

6. What is the purpose of the finally block in Java?

- The purpose of the finally block in Java is to ensure that certain code is executed regardless of whether an exception is thrown or not, allowing for cleanup or other tasks to be performed.

7. What is the difference between final and finally in Java?

- Final is used to declare a variable, method, or class that cannot be modified or overridden, while finally is used to define a block of code that will be executed regardless of whether an exception is thrown or not.

8. What is the purpose of the finalize() method in Java?

- The purpose of the finalize() method in Java is to provide a way for objects to perform cleanup or other tasks before they are destroyed by the garbage collector.

9. When is the finalize() method called in Java?

- The finalize() method is called by the garbage collector before an object is destroyed, allowing for cleanup or other tasks to be performed.

10. What is the difference between final and static in Java?

- Final is used to declare a variable, method, or class that cannot be modified or overridden, while static is used to declare a variable or method that belongs to the class itself, rather than an instance of the class.

1. What is operator precedence in Java?

- Operator precedence in Java is the order in which operators are evaluated in an expression.

2. What is the order of operator precedence in Java?

- The order of operator precedence in Java is as follows: postfix operators (e.g. ++, --), unary operators (e.g. +, -), multiplicative operators (e.g. *, /, %), additive operators (e.g. +, -), shift operators (e.g. <<, >>, >>>), relational operators (e.g. <, >, <=, >=), equality operators (e.g. ==, !=), bitwise and logical operators (e.g. &, |, ^, &&, ||), ternary operator (e.g. ? :), and assignment operators (e.g. =, +=, -=).

3. What is the purpose of operator precedence in Java?

- The purpose of operator precedence in Java is to ensure that expressions are evaluated in a consistent and predictable manner, according to the rules of the language.

4. What is the associativity of operators in Java?

- The associativity of operators in Java determines the order in which operators of the same precedence are evaluated. For example, the associativity of the addition operator (+) is left-to-right, meaning that expressions are evaluated from left to right.

5. What is the difference between postfix and prefix operators in Java?

- Postfix operators (e.g. ++, --) in Java are applied after the operand is evaluated, while prefix operators (e.g. ++, --) are applied before the operand is evaluated.

6. What is the difference between unary and binary operators in Java?

- Unary operators (e.g. +, -) in Java operate on a single operand, while binary operators (e.g. +, -) operate on two operands.

7. What is the purpose of the ternary operator in Java?

- The purpose of the ternary operator (e.g. ? :) in Java is to provide a shorthand way of writing an if-else statement, allowing for more concise and readable code.

8. What is the purpose of the shift operators in Java?

- The purpose of the shift operators (e.g. <<, >>, >>>) in Java is to shift the bits of a number to the left or right, allowing for efficient multiplication or division by powers of two.

9. What is the purpose of the bitwise and logical operators in Java?

- The purpose of the bitwise and logical operators (e.g. &, |, ^, &&, ||) in Java is to perform logical and bitwise operations on operands, allowing for more complex and flexible expressions.

10. What is the difference between the equality operator (==) and the assignment operator (=) in Java?

- The equality operator (==) in Java is used to compare two values for equality, while the assignment operator (=) is used to assign a value to a variable.

1. What is polymorphism in Java?

- Polymorphism in Java is the ability of an object to take on multiple forms, allowing for flexibility and extensibility in code design.

2. What is compile-time polymorphism in Java?

- Compile-time polymorphism in Java is the process of selecting the appropriate method or function to be called at compile time, based on the number and types of arguments passed to the method or function.

3. What is run-time polymorphism in Java?

- Run-time polymorphism in Java is the process of selecting the appropriate method or function to be called at run time, based on the type of the object that the method or function is called on.

4. What is method overloading in Java?

- Method overloading in Java is a form of compile-time polymorphism, where multiple methods with the same name but different parameters are defined in a class.

5. What is method overriding in Java?

- Method overriding in Java is a form of run-time polymorphism, where a subclass provides its own implementation of a method that is already defined in its superclass.

6. Can method overloading and method overriding be used together in Java?

- Yes, method overloading and method overriding can be used together in Java, allowing for more flexible and extensible code.

7. What is the difference between compile-time polymorphism and run-time polymorphism in Java?

- Compile-time polymorphism is resolved at compile time based on the number and types of arguments passed to a method or function, while run-time polymorphism is resolved at run time based on the type of the object that the method or function is called on.

8. What is an example of method overloading in Java?

- An example of method overloading in Java is defining two methods with the same name but different parameters, such as a calculateArea() method that can take either the length and width of a rectangle or the radius of a circle as arguments.

9. What is an example of method overriding in Java?

- An example of method overriding in Java is defining a toString() method in a subclass that provides a custom string representation of the object, instead of the default implementation in the Object class.

10. What is the benefit of using polymorphism in Java?

- The benefit of using polymorphism in Java is that it allows for more flexible and extensible code, reducing the amount of duplicate code and making it easier to add new functionality to a program.

1. What is a collection in Java?

- A collection in Java is a group of related objects that can be manipulated and stored together.

2. What are the main interfaces of the Java Collections Framework?

- The main interfaces of the Java Collections Framework are List, Set, Queue, and Map.

3. What is the difference between a List and a Set in Java?

- A List in Java is an ordered collection of objects that can contain duplicates, while a Set in Java is an unordered collection of unique objects.

4. What is the difference between a Queue and a Stack in Java?

- A Queue in Java is a collection of objects that are stored in a first-in, first-out (FIFO) order, while a Stack in Java is a collection of objects that are stored in a last-in, first-out (LIFO) order.

5. What is the purpose of the Map interface in Java?

- The purpose of the Map interface in Java is to store key-value pairs, allowing for efficient lookup and retrieval of values based on their corresponding keys.

6. What is the difference between a HashMap and a TreeMap in Java?

- A HashMap in Java is an unordered collection of key-value pairs that uses a hash function to store and retrieve values, while a TreeMap in Java is an ordered collection of key-value pairs that is sorted based on the keys.

7. What is the purpose of the Collection interface in Java?

- The purpose of the Collection interface in Java is to provide a common set of methods for manipulating and accessing collections, such as adding, removing, and iterating over elements.

8. What is the difference between an ArrayList and a LinkedList in Java?

- An ArrayList in Java is a resizable array that provides fast access to elements by index, while a LinkedList in Java is a linked list that provides fast insertion and removal of elements at both ends of the list.

9. What is the purpose of the Iterator interface in Java?

- The purpose of the Iterator interface in Java is to provide a way to iterate over the elements of a collection, allowing for efficient and flexible traversal of the collection.

10. What is the difference between a synchronized and an unsynchronized collection in Java?

- A synchronized collection in Java is thread-safe, meaning that it can be accessed by multiple threads without causing race conditions or other synchronization issues, while an unsynchronized collection in Java is not thread-safe and can cause issues when accessed by multiple threads simultaneously.

1. What is the purpose of Comparator and Comparable in Java?

- Comparator and Comparable in Java are used to define custom sorting orders for objects in a collection.

2. What is the difference between Comparator and Comparable in Java?

- Comparator in Java is an external comparison mechanism that can be used to compare two objects of a class, while Comparable in Java is an internal comparison mechanism that is implemented by a class to define its own natural ordering.

3. What is the syntax for using Comparator in Java?

- To use Comparator in Java, a separate class that implements the Comparator interface is created, and the compare() method is defined to specify the comparison logic. The Comparator object can then be passed to sorting methods such as Collections.sort() or Arrays.sort().

4. What is the syntax for using Comparable in Java?

- To use Comparable in Java, the class that needs to be sorted implements the Comparable interface, and the compareTo() method is defined to specify the comparison logic. The sorting method can then use the natural ordering defined by the class.

5. What is an example of using Comparator in Java?

- An example of using Comparator in Java is sorting a list of Person objects by their age, where the Person class does not implement Comparable. A separate AgeComparator class can be created that implements Comparator<Person>, and the compare() method can be defined to compare the ages of two Person objects.

6. What is an example of using Comparable in Java?

- An example of using Comparable in Java is sorting a list of String objects alphabetically, where the String class implements Comparable<String>. The compareTo() method is already defined in the String class to compare two strings lexicographically.

7. Can Comparator and Comparable be used together in Java?

- Yes, Comparator and Comparable can be used together in Java, allowing for more flexible and extensible sorting logic.

8. What is the benefit of using Comparator and Comparable in Java?

- The benefit of using Comparator and Comparable in Java is that they allow for custom sorting logic to be defined for objects in a collection, making it easier to sort and manipulate data in a meaningful way.

9. What is the difference between natural ordering and external ordering in Java?

- Natural ordering in Java is the default ordering behavior defined by a class, while external ordering is a custom ordering behavior defined by a separate class or mechanism such as Comparator.

10. What is the purpose of the compare() method in Java?

- The purpose of the compare() method in Java is to compare two objects of a class according to a specific ordering logic, allowing for sorting and manipulation of collections.

1. What is fail-fast in Java?

- Fail-fast in Java is a mechanism used to detect and handle errors in a collection, where any modification made to the collection during iteration will cause an immediate exception to be thrown.

2. What is fail-safe in Java?

- Fail-safe in Java is a mechanism used to prevent errors in a collection, where any modification made to the collection during iteration will not affect the iteration and will be handled safely.

3. What is the difference between fail-fast and fail-safe in Java?

- Fail-fast in Java is more efficient but less safe, as it can detect errors quickly but may cause data loss or corruption, while fail-safe in Java is less efficient but more safe, as it can prevent errors but may require more resources and time.

4. What is an example of fail-fast in Java?

- An example of fail-fast in Java is using an iterator to iterate over a list, and then modifying the list by adding or removing elements during iteration. This will cause a ConcurrentModificationException to be thrown immediately, indicating that the collection has been modified during iteration.

5. What is an example of fail-safe in Java?

- An example of fail-safe in Java is using a copy of a collection to iterate over its elements, and then modifying the original collection during iteration. This will not affect the iteration of the copy, as it is a separate object that is not affected by changes to the original collection.

6. What is the purpose of fail-fast and fail-safe in Java?

- The purpose of fail-fast and fail-safe in Java is to provide mechanisms for handling errors and preventing data loss or corruption in collections, ensuring that code is reliable and safe to use.

7. What is the benefit of using fail-safe in Java?

- The benefit of using fail-safe in Java is that it provides a more robust and reliable mechanism for handling errors in collections, reducing the risk of data loss or corruption and improving the overall quality of the code.

8. What is the downside of using fail-fast in Java?

- The downside of using fail-fast in Java is that it can be less safe and more prone to errors, especially in complex or multi-threaded environments, where modifications to collections may occur frequently and unpredictably.

9. What is the downside of using fail-safe in Java?

- The downside of using fail-safe in Java is that it can be less efficient and more resource-intensive, especially for large or complex collections, where copying or duplicating data may take a significant amount of time and memory.

10. Can fail-fast and fail-safe be used together in Java?

- Yes, fail-fast and fail-safe can be used together in Java, allowing for more flexible and extensible error handling mechanisms in collections.

Stream

: This is the main class in the Stream API, which provides a way to create and manipulate streams of data.

Collectors

: This class provides several methods for collecting streams into collections, such as Lists, Sets, and Maps.

Optional

: This class is used to represent optional values, which may or may not be present.

Predicate

: This functional interface is used to define predicates, which are used to test whether a given element meets certain criteria.

Function

: This functional interface is used to define functions, which are used to transform elements in a stream.

Consumer

: This functional interface is used to define consumers, which are used to perform an action on each element in a stream.

Comparator

: This functional interface is used to define comparators, which are used to compare elements in a stream.

IntStream

: This class is used to create streams of integers.

DoubleStream

: This class is used to create streams of doubles.

LongStream

: This class is used to create streams of longs.

Stream.of

: This method is used to create a stream from an array or a collection.

Stream.iterate

: This method is used to create an infinite stream of elements that are generated by applying a function to a previous element.

Stream.generate

: This method is used to create a stream of elements that are generated by a supplier function.

Stream.concat

: This method is used to concatenate two or more streams into a single stream.

Stream.filter

: This method is used to filter elements in a stream based on a given predicate.

Stream.map

: This method is used to transform elements in a stream using a given function.

Stream.flatMap

: This method is used to flatten elements in a stream that are themselves streams.

Stream.sorted

: This method is used to sort elements in a stream according to a given comparator.

Stream.distinct

: This method is used to remove duplicate elements from a stream.

Stream.limit

: This method is used to limit the number of elements in a stream.

Stream.skip

: This method is used to skip a certain number of elements in a stream.

Stream.forEach

: This method is used to perform an action on each element in a stream.

Stream.reduce

: This method is used to reduce elements in a stream to a single value using a given binary operator.

Stream.collect

: This method is used to collect elements in a stream into a collection.

Stream.min

: This method is used to find the minimum element in a stream according to a given comparator.

Stream.max

: This method is used to find the maximum element in a stream according to a given comparator.

Stream.sum

: This method is used to find the sum of all elements in a stream.

Stream.average

: This method is used to find the average of all elements in a stream.

the key features of Java 8:

Lambda expressions: Java 8 introduced lambda expressions, which allow you to write function-like code in a more concise and readable way.

Streams: Java 8 introduced streams, which provide a way to manipulate collections of data in a functional way. Streams can be used to filter, map, sort, and perform other operations on data.