
The main branch works with PyTorch 1.8 (required by some self-supervised methods) or higher (we recommend PyTorch 1.12). You can still use PyTorch 1.6 for supervised classification methods.

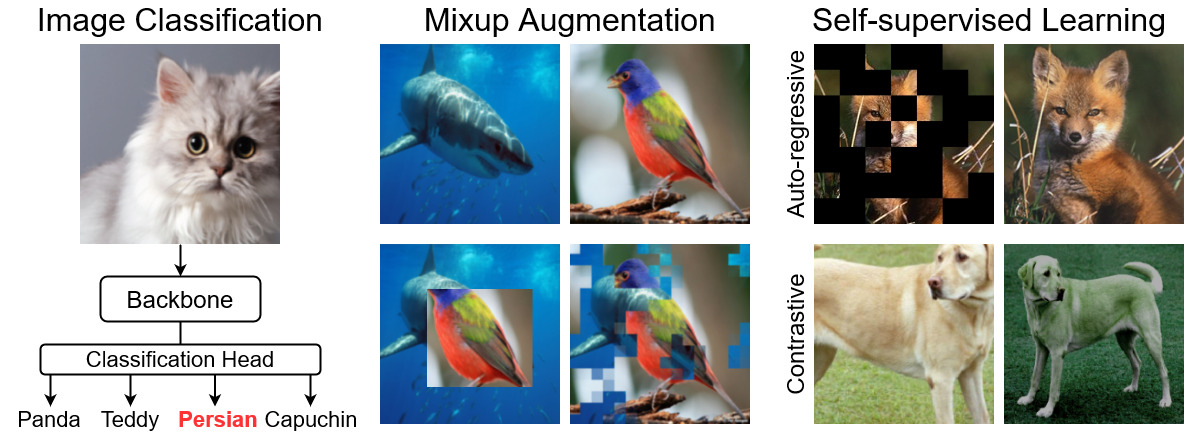

OpenMixup is an open-source PyTorch toolbox for supervised, self-supervised, and semi-supervised visual representation learning, with a particular focus on mixup-related methods.

Major Features

- Modular Design. OpenMixup follows a code architecture similar to that of OpenMMLab projects, which decompose the framework into various components, so users can easily build a customized model by combining different modules. OpenMixup can also be transplanted to OpenMMLab projects (e.g., MMSelfSup).

- All in One. OpenMixup provides popular backbones, mixup methods, and semi-supervised and self-supervised algorithms. Users can perform image classification (CNN & Transformer) and self-supervised pre-training (contrastive and autoregressive) under the same framework.

- Standard Benchmarks. OpenMixup supports standard benchmarks for image classification, mixup classification, and self-supervised evaluation, and provides smooth evaluation on downstream tasks with open-source projects (e.g., object detection and segmentation with Detectron2 and MMSegmentation).

- State-of-the-art Methods. OpenMixup provides awesome lists of popular mixup and self-supervised methods, and is being updated to support more state-of-the-art image classification and self-supervised methods.
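The modular design above means a model is described by a config dict and assembled from named components. The sketch below illustrates that general registry-and-config pattern, common in OpenMMLab-style code, using hypothetical names (`Registry`, `ToyBackbone`, `ToyClsHead`, `ToyClassifier`); it is not OpenMixup's actual API.

```python
from typing import Any, Dict


class Registry:
    """Maps a class name to a class so models can be built from config dicts.

    Hypothetical minimal version of the registry pattern; not OpenMixup's code.
    """

    def __init__(self, name: str) -> None:
        self.name = name
        self._modules: Dict[str, type] = {}

    def register(self, cls: type) -> type:
        # Used as a class decorator: @BACKBONES.register
        if cls.__name__ in self._modules:
            raise KeyError(f"{cls.__name__} already registered in {self.name}")
        self._modules[cls.__name__] = cls
        return cls

    def build(self, cfg: Dict[str, Any]) -> Any:
        # Copy so the caller's config is not mutated, then pop the class name.
        args = dict(cfg)
        cls_name = args.pop("type")
        if cls_name not in self._modules:
            raise KeyError(f"{cls_name} is not registered in {self.name}")
        return self._modules[cls_name](**args)


# Hypothetical registries and toy components, for illustration only.
BACKBONES = Registry("backbone")
HEADS = Registry("head")


@BACKBONES.register
class ToyBackbone:
    def __init__(self, depth: int) -> None:
        self.depth = depth


@HEADS.register
class ToyClsHead:
    def __init__(self, num_classes: int) -> None:
        self.num_classes = num_classes


class ToyClassifier:
    """Combines a backbone and a head, each built from its own sub-config."""

    def __init__(self, backbone: Dict[str, Any], head: Dict[str, Any]) -> None:
        self.backbone = BACKBONES.build(backbone)
        self.head = HEADS.build(head)


# Swapping a component only requires editing the config dict.
model_cfg = dict(
    backbone=dict(type="ToyBackbone", depth=50),
    head=dict(type="ToyClsHead", num_classes=1000),
)
model = ToyClassifier(**model_cfg)
```

The design choice is that components never import each other directly; they are looked up by the `type` string, so replacing a backbone or head is a config change rather than a code change.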


[2022-12-16] OpenMixup v0.2.7 is released (issue #35).

[2022-12-02] Updated the features and documentation of OpenMixup v0.2.6 (issue #24, issue #25, issue #31, and issue #33). Updated the official implementation of MogaNet.

[2022-09-14] OpenMixup v0.2.6 is released (issue #20).

OpenMixup is compatible with Python 3.6/3.7/3.8/3.9 and PyTorch >= 1.6. Here are quick installation steps for development:

```shell
conda create -n openmixup python=3.8 pytorch=1.12 cudatoolkit=11.3 torchvision -c pytorch -y
conda activate openmixup
pip install openmim
mim install mmcv-full
git clone https://github.com/Westlake-AI/openmixup.git
cd openmixup
python setup.py develop
```

Please refer to install.md for more detailed installation and dataset preparation.
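The version requirements stated above (Python 3.6 to 3.9, PyTorch 1.6 or higher for supervised classification, and PyTorch 1.8 or higher for some self-supervised methods) can be checked with a small helper. This is a hypothetical sketch, not part of OpenMixup; `parse_version` and `check_requirements` are illustrative names.

```python
import sys
from typing import Dict, Tuple


def parse_version(version: str) -> Tuple[int, int]:
    """Return (major, minor) from strings such as '1.12.1+cu113' or '3.8.10'."""
    core = version.split("+")[0]  # drop local build tags like '+cu113'
    major, minor = core.split(".")[:2]
    return int(major), int(minor)


def check_requirements(python_version: str, torch_version: str) -> Dict[str, bool]:
    """Compare versions against the requirements listed in this README."""
    py = parse_version(python_version)
    torch = parse_version(torch_version)
    return {
        # Python 3.6 to 3.9 are the versions listed as compatible.
        "python_listed": (3, 6) <= py <= (3, 9),
        # PyTorch 1.6 is enough for supervised classification methods.
        "supervised_ok": torch >= (1, 6),
        # Some self-supervised methods need PyTorch 1.8 or higher.
        "self_supervised_ok": torch >= (1, 8),
    }


if __name__ == "__main__":
    # Inside the activated environment, pass torch.__version__ instead of a literal.
    current_python = ".".join(map(str, sys.version_info[:2]))
    print(check_requirements(current_python, "1.12.1+cu113"))
```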

OpenMixup supports Linux and macOS. It enables easy implementation and extension of mixup data augmentation methods in existing supervised, self-supervised, and semi-supervised visual recognition models. Please see get_started.md for the basic usage of OpenMixup.
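For readers new to mixup, the two classic sample-mixing operations, Mixup (ICLR'2018) and CutMix (ICCV'2019), can be sketched in NumPy as follows. This follows the published descriptions (mixing ratio drawn from Beta(alpha, alpha), a shuffled partner for each sample) and is not OpenMixup's implementation, which works on PyTorch tensors inside the model; the function names are hypothetical.

```python
import numpy as np


def mixup(x, y_onehot, alpha=1.0, rng=None):
    """Mixup: convex combination of each sample with a shuffled partner.

    x: (N, C, H, W) images, y_onehot: (N, K) one-hot labels.
    """
    rng = np.random.default_rng() if rng is None else rng
    lam = rng.beta(alpha, alpha)
    perm = rng.permutation(x.shape[0])
    x_mix = lam * x + (1.0 - lam) * x[perm]
    y_mix = lam * y_onehot + (1.0 - lam) * y_onehot[perm]
    return x_mix, y_mix, lam


def cutmix(x, y_onehot, alpha=1.0, rng=None):
    """CutMix: paste a random box from a shuffled partner; mix labels by box area."""
    rng = np.random.default_rng() if rng is None else rng
    n, _, h, w = x.shape
    lam = rng.beta(alpha, alpha)
    perm = rng.permutation(n)
    # Box covering roughly (1 - lam) of the image, centred at a random point.
    cut_h = int(h * np.sqrt(1.0 - lam))
    cut_w = int(w * np.sqrt(1.0 - lam))
    cy, cx = rng.integers(h), rng.integers(w)
    y1, y2 = np.clip(cy - cut_h // 2, 0, h), np.clip(cy + cut_h // 2, 0, h)
    x1, x2 = np.clip(cx - cut_w // 2, 0, w), np.clip(cx + cut_w // 2, 0, w)
    x_mix = x.copy()  # leave the input batch untouched
    x_mix[:, :, y1:y2, x1:x2] = x[perm, :, y1:y2, x1:x2]
    # Recompute lam from the clipped box so labels match the pasted area exactly.
    lam = 1.0 - ((y2 - y1) * (x2 - x1)) / (h * w)
    y_mix = lam * y_onehot + (1.0 - lam) * y_onehot[perm]
    return x_mix, y_mix, lam
```

Both functions return soft labels whose rows still sum to 1, so a training loop would pair them with a soft-label cross-entropy loss.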

Here we provide scripts for quick end-to-end training with multiple GPUs and a specified CONFIG_FILE:

```shell
bash tools/dist_train.sh ${CONFIG_FILE} ${GPUS} [optional arguments]
```

For example, you can run the script below to train a ResNet-50 classifier on ImageNet with 4 GPUs:

```shell
CUDA_VISIBLE_DEVICES=0,1,2,3 PORT=29500 bash tools/dist_train.sh configs/classification/imagenet/resnet/resnet50_4xb64_cos_ep100.py 4
```

After training, you can test the trained models with the corresponding evaluation script:

```shell
bash tools/dist_test.sh ${CONFIG_FILE} ${GPUS} ${PATH_TO_MODEL} [optional arguments]
```

Please see Tutorials for more development examples and technical details:

- config files

- add new dataset

- data pipeline

- add new modules

- customize schedules

- customize runtime

- benchmarks
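The example config name in the training command, `resnet50_4xb64_cos_ep100.py`, appears to follow the OpenMMLab naming style `{model}_{gpus}xb{batch_per_gpu}_{schedule}_ep{epochs}`. Assuming that convention (it is an assumption, not something this README spells out), a hypothetical helper can decode the name and compute the effective batch size:

```python
import re
from typing import Dict

# Assumed naming convention: {model}_{gpus}xb{batch_per_gpu}_{schedule}_ep{epochs}
CONFIG_NAME = re.compile(
    r"^(?P<model>.+?)_(?P<gpus>\d+)xb(?P<batch>\d+)_(?P<schedule>[a-z]+)_ep(?P<epochs>\d+)$"
)


def parse_config_name(path: str) -> Dict[str, object]:
    """Split a config file name such as 'resnet50_4xb64_cos_ep100.py' into fields."""
    stem = path.rsplit("/", 1)[-1]
    if stem.endswith(".py"):
        stem = stem[: -len(".py")]
    match = CONFIG_NAME.match(stem)
    if match is None:
        raise ValueError(f"unrecognized config name: {stem}")
    gpus = int(match["gpus"])
    batch = int(match["batch"])
    return {
        "model": match["model"],
        "gpus": gpus,
        "batch_per_gpu": batch,
        # Effective batch size of one optimizer step across all GPUs.
        "total_batch": gpus * batch,
        "schedule": match["schedule"],
        "epochs": int(match["epochs"]),
    }


info = parse_config_name(
    "configs/classification/imagenet/resnet/resnet50_4xb64_cos_ep100.py"
)
print(info["total_batch"])  # 256
```

Under this reading, launching the same config on fewer GPUs keeps the per-GPU batch and therefore shrinks the total batch, which may affect results unless the config is adjusted.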

Please refer to Mixup Benchmarks for the benchmarking results of existing mixup methods, and to Model Zoos for a comprehensive collection of mainstream backbones and self-supervised algorithms. We also provide the paper lists of Awesome Mixups for your reference. Checkpoints and training logs will be updated soon!

- Backbone architectures for supervised image classification on ImageNet.

Currently supported backbones

- AlexNet (NIPS'2012) [config]

- VGG (ICLR'2015) [config]

- InceptionV3 (CVPR'2016) [config]

- ResNet (CVPR'2016) [config]

- ResNeXt (CVPR'2017) [config]

- SE-ResNet (CVPR'2018) [config]

- SE-ResNeXt (CVPR'2018) [config]

- ShuffleNetV1 (CVPR'2018)

- ShuffleNetV2 (ECCV'2018) [config]

- MobileNetV2 (CVPR'2018) [config]

- MobileNetV3 (ICCV'2019)

- EfficientNet (ICML'2019) [config]

- Res2Net (ArXiv'2019) [config]

- RegNet (CVPR'2020) [config]

- Vision-Transformer (ICLR'2021) [config]

- Swin-Transformer (ICCV'2021) [config]

- PVT (ICCV'2021) [config]

- T2T-ViT (ICCV'2021) [config]

- RepVGG (CVPR'2021) [config]

- DeiT (ICML'2021) [config]

- MLP-Mixer (NIPS'2021) [config]

- Twins (NIPS'2021) [config]

- ConvMixer (OpenReview'2021) [config]

- UniFormer (ICLR'2022) [config]

- PoolFormer (CVPR'2022) [config]

- ConvNeXt (CVPR'2022) [config]

- MViTV2 (CVPR'2022) [config]

- RepMLP (CVPR'2022) [config]

- VAN (ArXiv'2022) [config]

- DeiT-3 (ECCV'2022) [config]

- LITv2 (NIPS'2022) [config]

- HorNet (NIPS'2022) [config]

- EdgeNeXt (ECCVW'2022) [config]

- EfficientFormer (ArXiv'2022) [config]

- MogaNet (ArXiv'2022) [config]

- Mixup methods for supervised image classification.

Currently supported mixup methods

- Mixup (ICLR'2018) [config]

- CutMix (ICCV'2019) [config]

- ManifoldMix (ICML'2019) [config]

- FMix (ArXiv'2020) [config]

- AttentiveMix (ICASSP'2020) [config]

- SmoothMix (CVPRW'2020) [config]

- SaliencyMix (ICLR'2021) [config]

- PuzzleMix (ICML'2020) [config]

- GridMix (Pattern Recognition'2021) [config]

- ResizeMix (ArXiv'2020) [config]

- AlignMix (CVPR'2022) [config]

- AutoMix (ECCV'2022) [config]

- SAMix (ArXiv'2021) [config]

- DecoupleMix (ArXiv'2022) [config]

Currently supported datasets for mixups

- ImageNet [download] [config]

- CIFAR-10 [download] [config]

- CIFAR-100 [download] [config]

- Tiny-ImageNet [download] [config]

- CUB-200-2011 [download] [config]

- FGVC-Aircraft [download] [config]

- StanfordCars [download]

- Places205 [download] [config]

- iNaturalist-2017 [download] [config]

- iNaturalist-2018 [download] [config]

- Self-supervised algorithms for visual representation learning.

Currently supported self-supervised algorithms

- Relative Location (ICCV'2015) [config]

- Rotation Prediction (ICLR'2018) [config]

- DeepCluster (ECCV'2018) [config]

- NPID (CVPR'2018) [config]

- ODC (CVPR'2020) [config]

- MoCoV1 (CVPR'2020) [config]

- SimCLR (ICML'2020) [config]

- MoCoV2 (ArXiv'2020) [config]

- BYOL (NIPS'2020) [config]

- SwAV (NIPS'2020) [config]

- DenseCL (CVPR'2021) [config]

- SimSiam (CVPR'2021) [config]

- Barlow Twins (ICML'2021) [config]

- MoCoV3 (ICCV'2021) [config]

- BEiT (ICLR'2022) [config]

- MAE (CVPR'2022) [config]

- SimMIM (CVPR'2022) [config]

- MaskFeat (CVPR'2022) [config]

- CAE (ArXiv'2022) [config]

- A2MIM (ArXiv'2022) [config]
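Several contrastive methods in the self-supervised list (e.g., MoCo and SimCLR) optimize a variant of the InfoNCE objective. The NumPy sketch below is a simplified, one-directional, in-batch version with no momentum encoder, memory queue, or symmetrization; `info_nce` is a hypothetical name, and this is not the exact loss of any listed method nor OpenMixup's implementation.

```python
import numpy as np


def info_nce(z1, z2, temperature=0.1):
    """Simplified in-batch InfoNCE loss between two views.

    z1, z2: (N, D) embeddings; row i of z1 and row i of z2 come from the same
    image (positive pair), and every other row of z2 acts as a negative.
    """
    # L2-normalize so the dot product is cosine similarity.
    z1 = z1 / np.linalg.norm(z1, axis=1, keepdims=True)
    z2 = z2 / np.linalg.norm(z2, axis=1, keepdims=True)
    logits = z1 @ z2.T / temperature  # (N, N) similarity matrix
    # Numerically stable log-softmax over each row.
    logits = logits - logits.max(axis=1, keepdims=True)
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Cross-entropy with the diagonal (matching pair) as the target class.
    return float(-np.mean(np.diag(log_prob)))
```

With `temperature=0.1`, four orthogonal embeddings compared against themselves give a loss close to 0, while pairing each embedding with a different image's embedding gives a loss of about 10, which is the behavior the objective is meant to reward and penalize.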

Please refer to changelog.md for more details and release history.

This project is released under the Apache 2.0 license. See LICENSE for more information.

- OpenMixup is an open-source project for mixup methods created by researchers in CAIRI AI Lab. We encourage researchers interested in visual representation learning and mixup methods to contribute to OpenMixup!

- This repo borrows the architecture design and part of the code from MMSelfSup and MMClassification.

If you find this project useful in your research, please consider starring our GitHub repo and citing our tech report:

```bibtex
@misc{2022openmixup,
    title = {{OpenMixup}: Open Mixup Toolbox and Benchmark for Visual Representation Learning},
    author = {Siyuan Li and Zicheng Liu and Zedong Wang and Di Wu and Stan Z. Li},
    journal = {GitHub repository},
    howpublished = {\url{https://github.com/Westlake-AI/openmixup}},
    year = {2022}
}

@article{li2022openmixup,
    title = {OpenMixup: Open Mixup Toolbox and Benchmark for Visual Representation Learning},
    author = {Siyuan Li and Zedong Wang and Zicheng Liu and Di Wu and Stan Z. Li},
    journal = {ArXiv},
    year = {2022},
    volume = {abs/2209.04851}
}
```

For help, new features, or reporting bugs associated with OpenMixup, please open a GitHub issue or pull request with the tag "help wanted" or "enhancement". For now, the direct contributors include Siyuan Li (@Lupin1998), Zedong Wang (@Jacky1128), and Zicheng Liu (@pone7). We thank all public contributors and contributors from MMSelfSup and MMClassification!

This repo is currently maintained by:

- Siyuan Li, Westlake University

- Zedong Wang, Westlake University

- Zicheng Liu, Westlake University