Self-identifying hashes

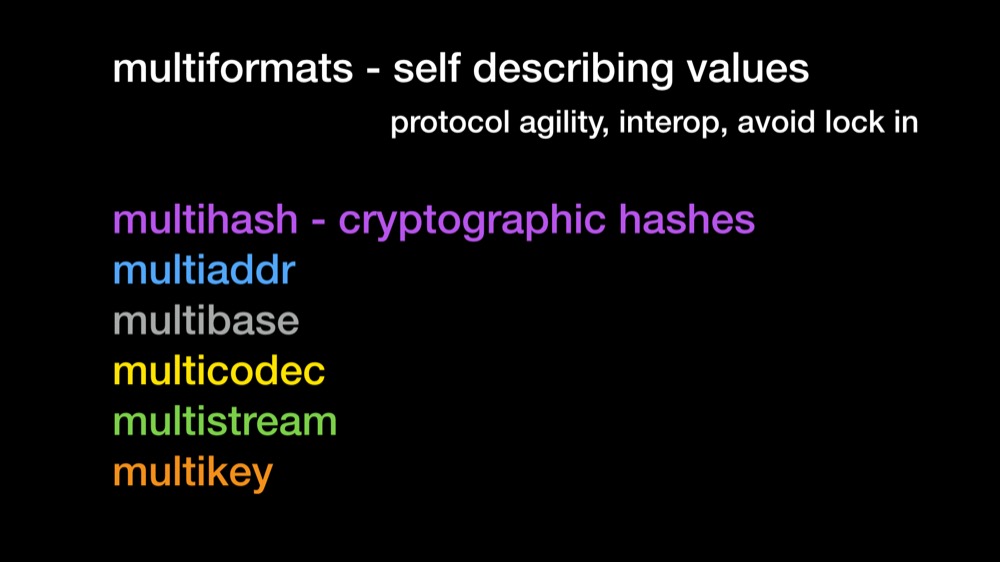

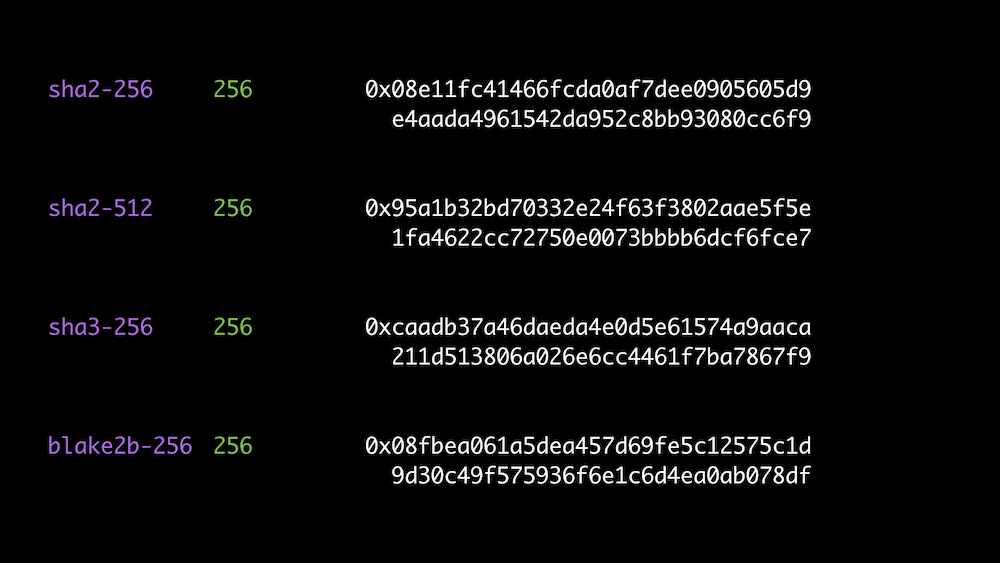

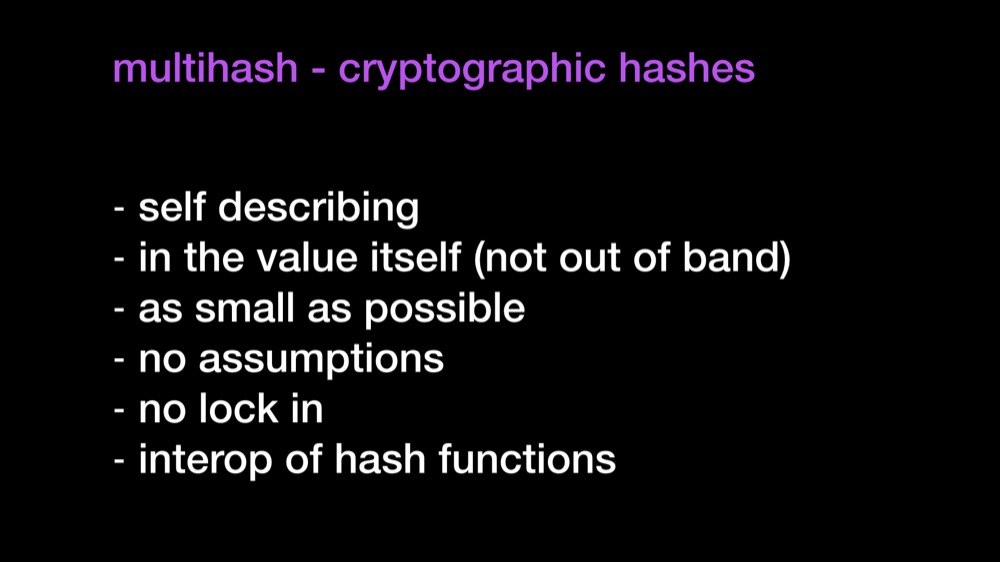

Multihash is a protocol for differentiating outputs from various well-established cryptographic hash functions, addressing size and encoding considerations.

It is useful for writing applications that future-proof their use of hashes and allow multiple hash functions to coexist. See jbenet/random-ideas#1 for a longer discussion.

- Table of Contents

- Example

- Format

- Implementations

- Table for Multihash

- Notes

- Visual Examples

- Maintainers

- Contribute

- License

Outputs of <encoding>.encode(multihash(<digest>, <function>)):

# sha1 - 0x11 - sha1("multihash")

111488c2f11fb2ce392acb5b2986e640211c4690073e # sha1 in hex

CEKIRQXRD6ZM4OJKZNNSTBXGIAQRYRUQA47A==== # sha1 in base32

5dsgvJGnvAfiR3K6HCBc4hcokSfmjj # sha1 in base58

ERSIwvEfss45KstbKYbmQCEcRpAHPg== # sha1 in base64

# sha2-256 - 0x12 - sha2-256("multihash")

12209cbc07c3f991725836a3aa2a581ca2029198aa420b9d99bc0e131d9f3e2cbe47 # sha2-256 in hex

CIQJZPAHYP4ZC4SYG2R2UKSYDSRAFEMYVJBAXHMZXQHBGHM7HYWL4RY= # sha2-256 in base32

QmYtUc4iTCbbfVSDNKvtQqrfyezPPnFvE33wFmutw9PBBk # sha2-256 in base58

EiCcvAfD+ZFyWDajqipYHKICkZiqQgudmbwOEx2fPiy+Rw== # sha2-256 in base64

Note: You should consider using multibase to base-encode these hashes instead of base-encoding them directly.
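As a rough illustration of how the hex, base32, and base64 lines above can be reproduced, here is a minimal Python sketch that uses only the standard library. The helper name `multihash` is hypothetical, and the sketch assumes the function code and digest length are both below 128, so each fits in a single varint byte. Base58 is left out because the standard library has no encoder for it.

```python
import base64
import hashlib


def multihash(code: int, digest: bytes) -> bytes:
    """Prefix a digest with its function code and its length in bytes.

    Assumption: both values are below 128, so each one is a single
    varint byte. Larger values need a real varint encoder.
    """
    if code >= 0x80 or len(digest) >= 0x80:
        raise ValueError("this sketch only handles single-byte varints")
    return bytes([code, len(digest)]) + digest


sha1_mh = multihash(0x11, hashlib.sha1(b"multihash").digest())
sha256_mh = multihash(0x12, hashlib.sha256(b"multihash").digest())

for name, value in (("sha1", sha1_mh), ("sha2-256", sha256_mh)):
    print(name, "hex:   ", value.hex())
    print(name, "base32:", base64.b32encode(value).decode())
    print(name, "base64:", base64.b64encode(value).decode())
```

The printed values should line up with the corresponding example lines above; the base encoding is applied to the whole multihash, prefix included.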

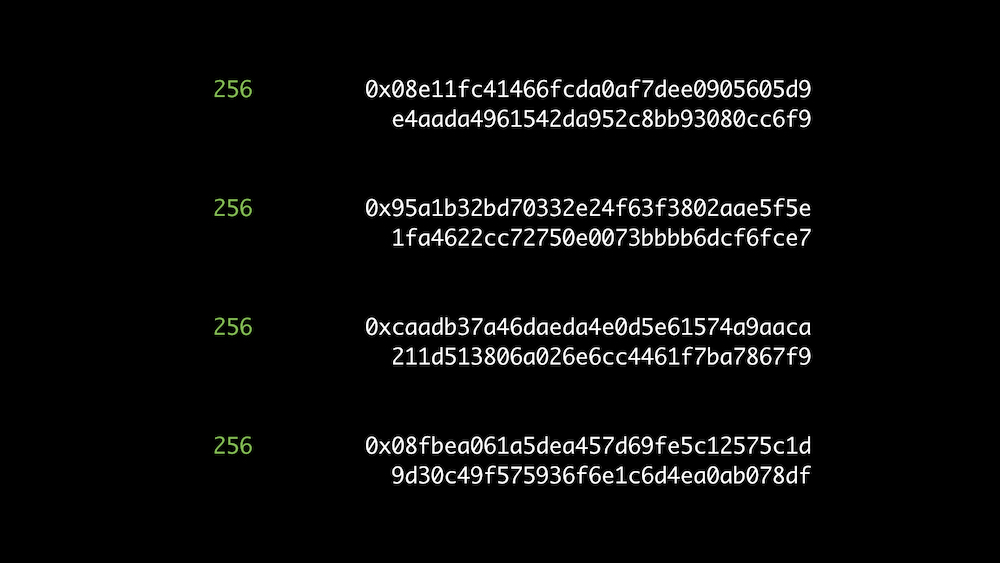

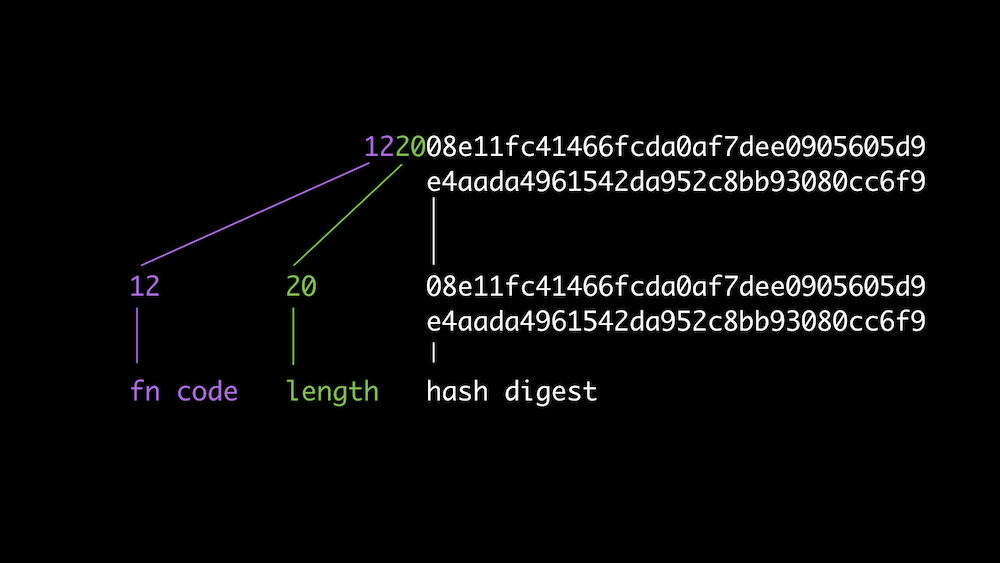

<varint hash function code><varint digest size in bytes><hash function output>

Binary example (only 4 bytes for simplicity):

| fn code | dig size | hash digest |
| --- | --- | --- |
| 00010001 | 00000100 | 10110110 11111000 01011100 10110101 |
| sha1 | 4 bytes | 4 byte sha1 digest |
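The format can be sketched as a small decoder. This is a minimal sketch, not a reference implementation: the names `read_varint` and `decode_multihash` are hypothetical, and the input is the 6-byte binary example above.

```python
from typing import Tuple


def read_varint(buf: bytes, offset: int = 0) -> Tuple[int, int]:
    """Decode one unsigned varint from buf; return (value, next_offset)."""
    value = 0
    shift = 0
    while True:
        if offset >= len(buf):
            raise ValueError("truncated varint")
        byte = buf[offset]
        offset += 1
        value |= (byte & 0x7F) << shift  # low 7 bits carry the payload
        if byte & 0x80 == 0:             # high bit clear: last byte
            return value, offset
        shift += 7


def decode_multihash(buf: bytes) -> Tuple[int, int, bytes]:
    """Split a multihash into (function code, digest size, digest)."""
    code, pos = read_varint(buf)
    size, pos = read_varint(buf, pos)
    digest = buf[pos:pos + size]
    if len(digest) != size:
        raise ValueError("digest is shorter than the declared size")
    return code, size, digest


example = bytes([0b00010001, 0b00000100,
                 0b10110110, 0b11111000, 0b01011100, 0b10110101])
code, size, digest = decode_multihash(example)
print(hex(code), size, digest.hex())  # prints: 0x11 4 b6f85cb5
```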

Why have digest size as a separate number?

Because otherwise you end up with a function code really meaning "function-and-digest-size-code". That makes custom digest sizes annoying to use and is less flexible.
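To illustrate, here is a small sketch in which the same function code (0x12, sha2-256) is paired with two different digest sizes. The helper `prefix_digest` is a hypothetical name, and keeping only the first 20 bytes of the digest is just an assumption about how a shorter digest might be produced.

```python
import hashlib


def prefix_digest(code: int, digest: bytes) -> bytes:
    """Prepend the function code and digest size to a digest.

    Assumption: both values are below 128 (one varint byte each).
    """
    if code >= 0x80 or len(digest) >= 0x80:
        raise ValueError("this sketch only handles single-byte varints")
    return bytes([code, len(digest)]) + digest


digest = hashlib.sha256(b"multihash").digest()
full_mh = prefix_digest(0x12, digest)        # full 32-byte digest
short_mh = prefix_digest(0x12, digest[:20])  # same function, 20-byte digest

print(full_mh[:2].hex())   # prints: 1220
print(short_mh[:2].hex())  # prints: 1214
```

Only the size byte changes between the two values; the function code stays the same.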

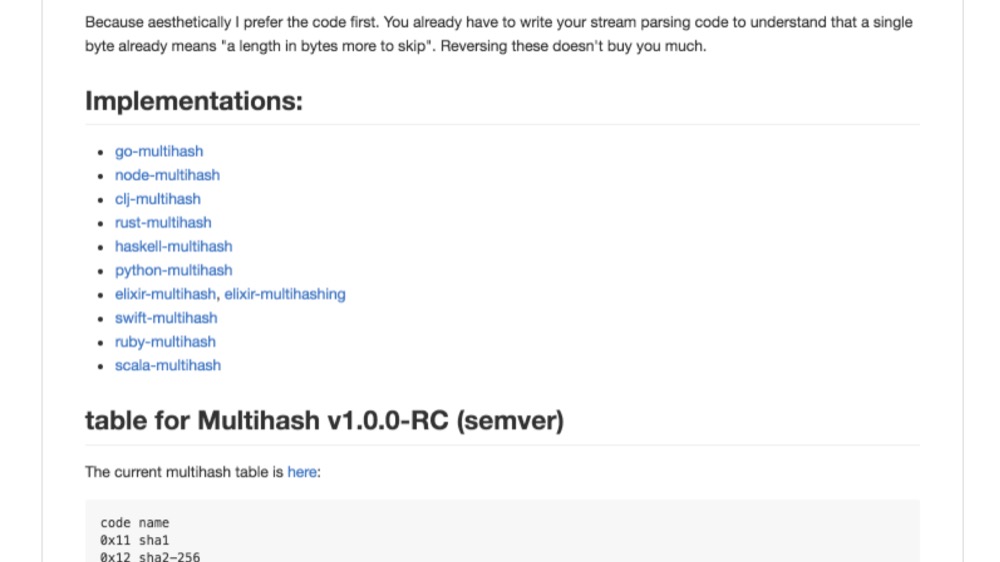

Why isn't the size first?

Because aesthetically I prefer the code first. Your stream parsing code already has to understand that a single byte means "a length in bytes more to skip", so reversing the two doesn't buy you much.

Why varints?

So that we have no limitation on functions or lengths.

What kind of varints?

A Most Significant Bit (MSB) unsigned varint (also called a base-128 varint), as defined by multiformats/unsigned-varint.
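A minimal sketch of the encoding side, assuming the usual base-128 layout: each byte carries 7 bits of the value, least significant group first, and the most significant bit of a byte signals that more bytes follow. The function name `encode_varint` is hypothetical, and the sketch does not enforce any maximum length the unsigned-varint spec may impose.

```python
def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as an unsigned base-128 varint."""
    if value < 0:
        raise ValueError("unsigned varints cannot encode negative numbers")
    out = bytearray()
    while True:
        low7 = value & 0x7F
        value >>= 7
        if value:
            out.append(low7 | 0x80)  # MSB set: more bytes follow
        else:
            out.append(low7)         # MSB clear: final byte
            return bytes(out)


for n in (0x11, 0x7F, 0x80, 300):
    print(hex(n), "->", encode_varint(n).hex())
```

Values below 128, such as the sha1 and sha2-256 codes, encode to a single byte that equals the value itself; 0x80 is the first value that needs two bytes.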

Don't we have to agree on a table of functions?

Yes, but we already have to agree on functions, so this is not hard. The table even leaves some room for custom function codes.

- clj-multihash

- cpp-multihash

- dart-multihash

- elixir-multihash

- go-multihash

- haskell-multihash

- js-multihash

- js-multiformats

- js-multihash (archived)

- java-multihash

- kotlin-multihash

- net-multihash

- nim-libp2p

- ocaml-multihash

- php-multihash

- python-multihash

- multiformats/py-multihash

- ivilata/pymultihash

- multihash sub-module of Python module multiformats

- ruby-multihash

- rust-multihash

- scala-multihash

- swift-multihash

We use a single Multicodec table across all of our multiformat projects. The shared namespace reduces the chances of accidentally interpreting a code in the wrong context. Multihash entries are identified with a multihash value in the tag column.

The current table lives in the Multicodec repository.

We could not find a good existing standard for this. We found a few different IANA ones:

- https://www.iana.org/assignments/tls-parameters/tls-parameters.xhtml#tls-parameters-18

- http://tools.ietf.org/html/rfc6920#section-9.4

They disagree. :(

Obviously multihash values bias the first two bytes. Do not expect them to be uniformly distributed. When the function code and digest size each fit in a single varint byte, the entropy size is len(multihash) - 2. Skip the first two bytes when using them with bloom filters, etc.

Why not append instead of prepend? Because when reading a stream of hashes, you can know the length of the whole value up front, and allocate the right amount of memory, skip it, or discard it.
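The prepend argument can be sketched as a reader over a stream of concatenated multihashes. This is only an illustration: the names `read_varint` and `iter_multihashes` are hypothetical, and the input reuses the two hex examples from earlier in this document. Because the size arrives before the digest, the reader knows exactly how many bytes to read, and the yielded digest (without the biased prefix) is the part to feed into a bloom filter.

```python
import io
from typing import BinaryIO, Iterator, Optional, Tuple


def read_varint(stream: BinaryIO) -> Optional[int]:
    """Read one unsigned varint; return None on a clean end of stream."""
    value = 0
    shift = 0
    while True:
        chunk = stream.read(1)
        if not chunk:
            if shift == 0:
                return None  # nothing left to read
            raise EOFError("stream ended inside a varint")
        value |= (chunk[0] & 0x7F) << shift
        if chunk[0] & 0x80 == 0:
            return value
        shift += 7


def iter_multihashes(stream: BinaryIO) -> Iterator[Tuple[int, bytes]]:
    """Yield (function code, digest) pairs from concatenated multihashes."""
    while True:
        code = read_varint(stream)
        if code is None:
            return
        size = read_varint(stream)
        if size is None:
            raise EOFError("stream ended before the digest size")
        digest = stream.read(size)  # length is known before the digest
        if len(digest) != size:
            raise EOFError("stream ended inside a digest")
        yield code, digest


data = bytes.fromhex(
    "111488c2f11fb2ce392acb5b2986e640211c4690073e"
    "12209cbc07c3f991725836a3aa2a581ca2029198aa420b9d99bc0e131d9f3e2cbe47"
)
entries = list(iter_multihashes(io.BytesIO(data)))
for code, digest in entries:
    print(hex(code), len(digest))
```

A reader that wants to skip a value instead of keeping it could seek forward by `size` bytes at the same point.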

Obsolete and deprecated hash functions are included in this list. MD4, MD5 and SHA-1 should no longer be used for cryptographic purposes, but since many such hashes already exist they are included in this specification and may be implemented in multihash libraries.

Multihash is intended for "well-established cryptographic hash functions", as non-cryptographic hash functions are not suitable for content addressing systems. However, there may be use cases where it is desirable to identify non-cryptographic hash functions or their digests by use of a multihash. Non-cryptographic hash functions are identified in the Multicodec table with a hash value in the tag column.

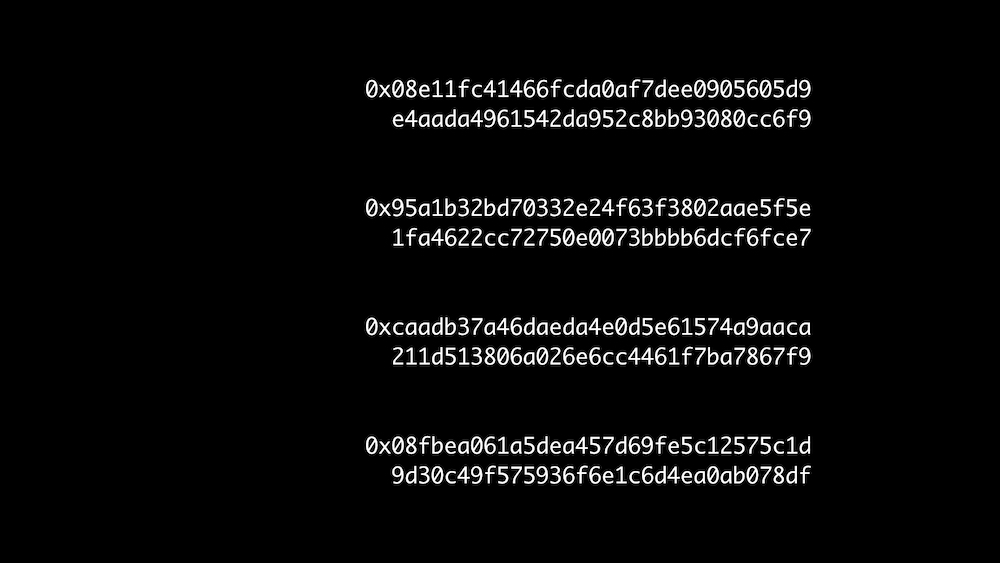

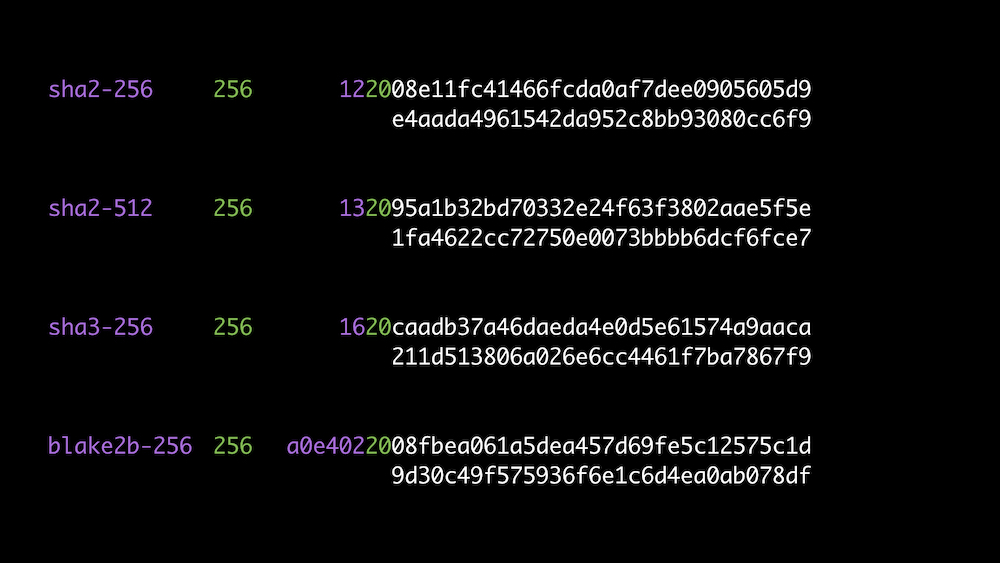

These are visual aids that help tell the story of why Multihash matters.

Contributions welcome. Please check out the issues.

Check out our contributing document for more information on how we work, and about contributing in general. Please be aware that all interactions related to multiformats are subject to the IPFS Code of Conduct.

Small note: If editing the README, please conform to the standard-readme specification.

This repository is only for documents. All of these are licensed under the CC-BY-SA 3.0 license © 2016 Protocol Labs Inc. Any code is under the MIT license © 2016 Protocol Labs Inc.