Done by @NEGU93 - J. Agustin Barrachina

With this library, the only difference from regular TensorFlow code is that you use the `cvnn.layers` module instead of `tf.keras.layers`.

This library uses TensorFlow as a back-end to build complex-valued neural networks (CVNNs), because CVNNs are barely supported by TensorFlow and were not yet supported at all by PyTorch (which is why I chose TensorFlow for this library). To the author's knowledge, this is the first library that actually works with complex data types instead of real-valued vectors that are interpreted as real and imaginary parts.
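To illustrate the difference between the two representations (plain NumPy, not cvnn code): multiplying a complex input by a complex weight is equivalent, in the split real/imaginary representation, to applying a 2×2 real matrix whose four entries are tied to just two numbers. A generic real-valued layer would learn those four entries independently, whereas a complex-valued layer keeps the structure of complex multiplication. The values below are arbitrary examples.

```python
import numpy as np

# Illustration only (NumPy), not cvnn code.
# Complex-valued data kept as a single complex64 array
z = np.array([1 + 2j, -3 + 0.5j], dtype=np.complex64)
w = np.complex64(0.5 - 1j)  # a single complex weight (arbitrary example value)

# Workaround used by real-valued frameworks: two real channels (re, im)
z_split = np.stack([z.real, z.imag], axis=-1)  # shape (2, 2), dtype float32

# Native complex product
y_complex = w * z

# The same product emulated with reals: (a+bi)(x+yi) = (ax - by) + (ay + bx)i,
# i.e. a 2x2 real matrix with tied entries [[a, -b], [b, a]]
a, b = w.real, w.imag
W_real = np.array([[a, -b], [b, a]], dtype=np.float32)
y_split = z_split @ W_real.T  # apply W_real to each (re, im) row

# Both representations give the same numbers
assert np.allclose(y_split[:, 0] + 1j * y_split[:, 1], y_complex)
```

The equality holds only because `W_real` is constrained to the `[[a, -b], [b, a]]` pattern; an unconstrained real layer has no such coupling between the real and imaginary channels.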

Update:

- Since v1.12 (28 June 2022), PyTorch supports complex32 and complex convolutions.

- Since v0.2 (25 Jan 2021), complexPyTorch uses the complex64 dtype.

- Since v1.6 (28 July 2020), PyTorch supports complex vectors and complex gradients as a BETA feature. However, it still has the same issues as TensorFlow, so there is no reason to migrate yet.

Please Read the Docs

Using Anaconda:

```bash
conda install -c negu93 cvnn
```

Using PIP:

```bash
pip install cvnn
```

From the "outside", everything is the same as when using TensorFlow:

```python
import numpy as np
import tensorflow as tf

# Assume you already have complex data, e.g. NumPy arrays of dtype np.complex64
(train_images, train_labels), (test_images, test_labels) = get_dataset()  # to be done by each user

model = get_model()  # Get your model

# Compile as any TensorFlow model
model.compile(optimizer='adam', metrics=['accuracy'],
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True))
model.summary()

# Train and evaluate
epochs = 10  # example value; choose your own
history = model.fit(train_images, train_labels, epochs=epochs, validation_data=(test_images, test_labels))
test_loss, test_acc = model.evaluate(test_images, test_labels, verbose=2)
```

The main difference is that you will be using `cvnn` layers instead of TensorFlow layers.
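`get_dataset()` is left to each user. For a quick smoke test of the pipeline before plugging in real data, a hypothetical stand-in could return random `np.complex64` arrays. The function name, its parameters, and the synthetic data are assumptions for illustration, not part of cvnn; the shapes are chosen to match the `(32, 32, 3)` input and the 10 outputs of the Sequential example, and the integer labels suit `SparseCategoricalCrossentropy`.

```python
import numpy as np

def get_dataset(n_train=512, n_test=128, shape=(32, 32, 3), n_classes=10, seed=0):
    """Hypothetical helper, not part of cvnn: random complex64 'images' with
    integer class labels, only useful for smoke-testing the training code."""
    rng = np.random.default_rng(seed)

    def make(n):
        # Independent Gaussian real and imaginary parts, combined into complex64
        real = rng.standard_normal((n, *shape), dtype=np.float32)
        imag = rng.standard_normal((n, *shape), dtype=np.float32)
        images = (real + 1j * imag).astype(np.complex64)
        # Sparse integer labels in [0, n_classes)
        labels = rng.integers(0, n_classes, size=n)
        return images, labels

    return make(n_train), make(n_test)

(train_images, train_labels), (test_images, test_labels) = get_dataset()
print(train_images.dtype, train_images.shape)  # complex64 (512, 32, 32, 3)
```

Because the labels are random, accuracy should stay near chance (about 10% for 10 classes); this only checks that shapes and dtypes flow through `fit` and `evaluate`.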

There are two ways to do this, using either the Sequential API or the Functional API, as shown here:

import cvnn.layers as complex_layers

def get_model():

model = tf.keras.models.Sequential()

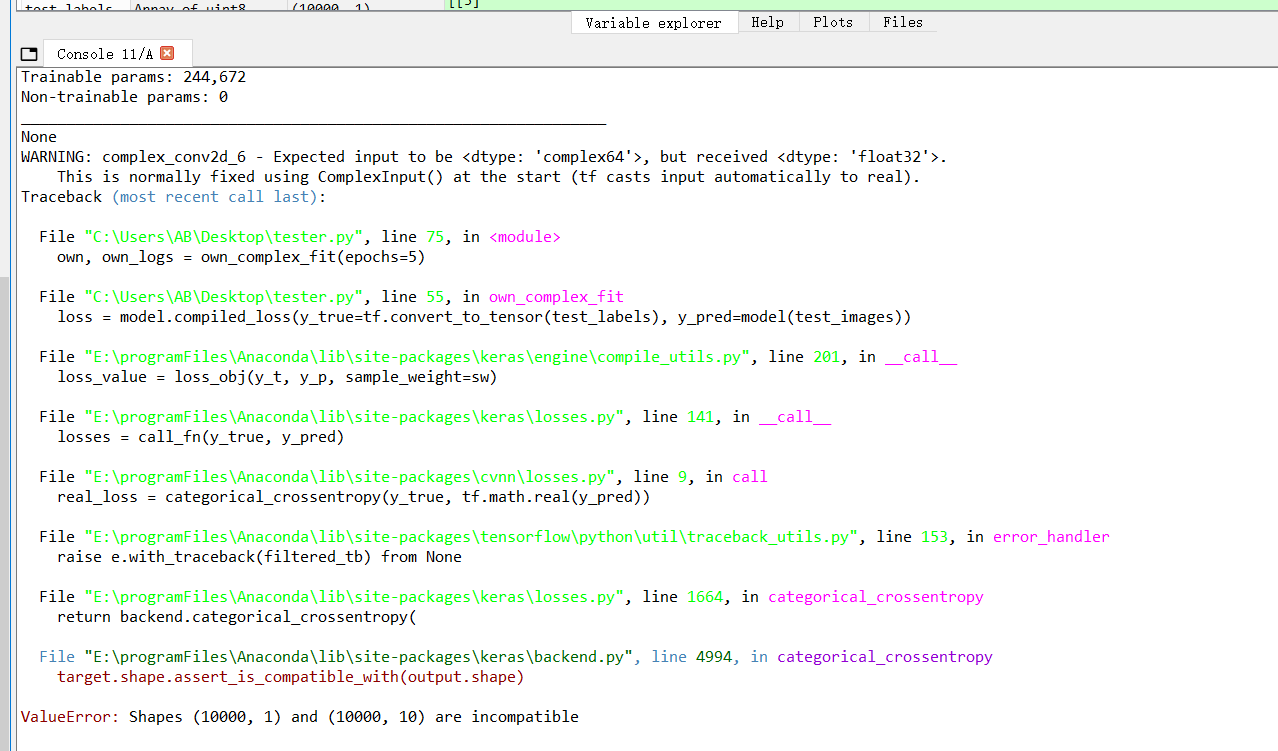

model.add(complex_layers.ComplexInput(input_shape=(32, 32, 3))) # Always use ComplexInput at the start

model.add(complex_layers.ComplexConv2D(32, (3, 3), activation='cart_relu'))

model.add(complex_layers.ComplexAvgPooling2D((2, 2)))

model.add(complex_layers.ComplexConv2D(64, (3, 3), activation='cart_relu'))

model.add(complex_layers.ComplexMaxPooling2D((2, 2)))

model.add(complex_layers.ComplexConv2D(64, (3, 3), activation='cart_relu'))

model.add(complex_layers.ComplexFlatten())

model.add(complex_layers.ComplexDense(64, activation='cart_relu'))

model.add(complex_layers.ComplexDense(10, activation='convert_to_real_with_abs'))

# An activation that casts to real must be used at the last layer.

# The loss function cannot minimize a complex number

return modelimport cvnn.layers as complex_layers
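To make the last-layer requirement concrete, here is a NumPy sketch of what the two activations used in the Sequential example compute, based on my understanding of their definitions in cvnn. It is an illustrative re-implementation with assumed semantics, not the library's code: `cart_relu` applies ReLU separately to the real and imaginary parts, so its output stays complex, while `convert_to_real_with_abs` keeps only the modulus, which gives the real values a Keras loss can minimize.

```python
import numpy as np

# Illustrative NumPy re-implementation (assumed semantics), not cvnn's own code.

def cart_relu(z):
    # "Cartesian" ReLU: ReLU applied separately to the real and imaginary parts
    return np.maximum(z.real, 0) + 1j * np.maximum(z.imag, 0)

def convert_to_real_with_abs(z):
    # Collapse each complex value to its real, non-negative modulus |z|
    return np.abs(z)

z = np.array([3 - 4j, -1 + 2j, -0.5 - 0.5j], dtype=np.complex64)

h = cart_relu(z)                   # still complex: [3+0j, 0+2j, 0+0j]
out = convert_to_real_with_abs(z)  # real: [5.0, ~2.236, ~0.707]

print(np.iscomplexobj(h), np.iscomplexobj(out))  # True False
```

Only the second kind of activation produces a real tensor, which is why the example places it on the final `ComplexDense` layer.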

Functional API:

```python
import tensorflow as tf
import cvnn.layers as complex_layers

def get_model():
    inputs = complex_layers.complex_input(shape=(128, 128, 3))
    c0 = complex_layers.ComplexConv2D(32, activation='cart_relu', kernel_size=3)(inputs)
    c1 = complex_layers.ComplexConv2D(32, activation='cart_relu', kernel_size=3)(c0)
    c2 = complex_layers.ComplexMaxPooling2D(pool_size=(2, 2), strides=(2, 2), padding='valid')(c1)
    t01 = complex_layers.ComplexConv2DTranspose(5, kernel_size=2, strides=(2, 2), activation='cart_relu')(c2)
    # Skip connection: concatenate upsampled features with c1 along the channel axis
    concat01 = tf.keras.layers.concatenate([t01, c1], axis=-1)
    c3 = complex_layers.ComplexConv2D(4, activation='cart_relu', kernel_size=3)(concat01)
    out = complex_layers.ComplexConv2D(4, activation='cart_relu', kernel_size=3)(c3)
    return tf.keras.Model(inputs, out)
```

I currently work as a full-time employee, so maintenance of this repository has been reduced or stopped. I would happily welcome anyone who wishes to fork the project, or to volunteer as a maintainer or owner, so that the project can keep going.

I am a PhD student at Ecole CentraleSupelec with a scholarship from ONERA and the DGA.

My PhD topic is Complex-Valued Neural Networks. To make my coding more dynamic, I built this library so that I would not have to repeat the same code over and over for small changes, which speeds up my work.

Always prefer the Zenodo citation.

Below is a template, but be sure to change the version and date accordingly.

```bibtex
@software{j_agustin_barrachina_2022_7303587,
  author    = {J Agustin Barrachina},
  title     = {NEGU93/cvnn: Complex-Valued Neural Networks},
  month     = nov,
  year      = 2022,
  publisher = {Zenodo},
  version   = {v2.0},
  doi       = {10.5281/zenodo.7303587},
  url       = {https://doi.org/10.5281/zenodo.7303587}
}
```

For any issues, please report them here.

This library is tested using pytest.