Nature-inspired algorithms are a very popular tool for solving optimization problems. Numerous variants of nature-inspired algorithms have been developed (paper 1, paper 2) since the beginning of their era. To demonstrate their versatility, they have been tested in various domains on various applications, especially in hybridized, modified or adapted forms. However, implementing nature-inspired algorithms can be a difficult, complex and tedious task. To remove this barrier, NiaPy is intended for simple and quick use, without spending time implementing algorithms from scratch.

- Free software: MIT license

- Documentation: https://niapy.readthedocs.io/en/stable/

- Python versions: 3.9.x, 3.10.x, 3.11.x, 3.12.x

- Dependencies: click here

Our mission is to build a collection of nature-inspired algorithms and create a simple interface for managing the optimization process. NiaPy offers:

- numerous optimization problem implementations,

- use of various nature-inspired algorithms without struggle and effort with a simple interface,

- easy comparison between nature-inspired algorithms, and

- export of results in various formats such as Pandas DataFrame, JSON or even Excel.

Install NiaPy with pip:

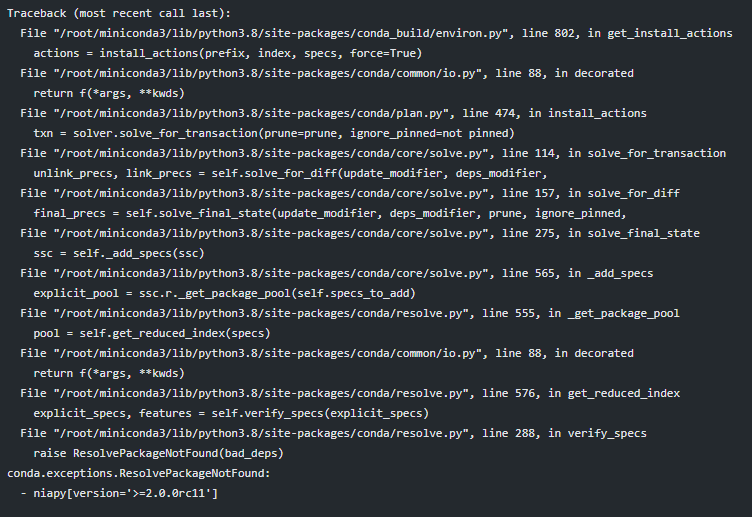

    pip install niapy

To install NiaPy with conda, use:

    conda install -c niaorg niapy

To install NiaPy on Fedora, use:

    dnf install python3-niapy

To install NiaPy on Arch Linux, please use an AUR helper:

    yay -Syyu python-niapy

To install NiaPy on Alpine Linux, please enable Community repository and use:

    apk add py3-niapy

To install NiaPy on NixOS, please use:

    nix-env -iA nixos.python310Packages.niapy

To install NiaPy on Void Linux, use:

    xbps-install -S python3-niapy

In case you want to install directly from the source code, use:

    pip install git+https://github.com/NiaOrg/NiaPy.git

Click here for the list of implemented algorithms.

Click here for the list of implemented test problems.

After installation, you can import NiaPy as any other Python module:

    $ python
    >>> import niapy
    >>> niapy.__version__

Let's go through a basic and advanced example.

Let's say we want to try out PSO against the Pintér problem function. First, we create a new file named, for example, basic_example.py. Then we import the chosen algorithm from NiaPy so we can use it. Afterwards, we initialize a ParticleSwarmAlgorithm class instance and run the algorithm. The complete source code of the basic example is given below.

    from niapy.algorithms.basic import ParticleSwarmAlgorithm
    from niapy.task import Task

    # we will run 10 repetitions of weighted, velocity-clamped PSO on the Pinter problem
    for i in range(10):
        task = Task(problem='pinter', dimension=10, max_evals=10000)
        algorithm = ParticleSwarmAlgorithm(population_size=100, w=0.9, c1=0.5, c2=0.3, min_velocity=-1, max_velocity=1)
        best_x, best_fit = algorithm.run(task)
        print(best_fit)

The example can be run with the python basic_example.py command and should give output similar to the following:

0.008773534890863646

0.036616190934621755

186.75116812592546

0.024186452828927896

263.5697469837348

45.420706924365916

0.6946753611091367

7.756100204780568

5.839673314425907

0.06732518679742806

In this example we will show you how to implement a custom problem class and use it with any of the implemented algorithms. First, let's create a new file named advanced_example.py. As in the previous example, we will import the algorithm we want to use from the niapy module.

For our custom optimization function, we have to create a new class. Let's name it MyProblem. In the initialization method of the MyProblem class we have to set the dimension and the lower and upper bounds of the problem. Afterwards we have to override the abstract method _evaluate, which takes a parameter x, the solution to be evaluated, and returns the function value. Now we should have something similar to the code snippet below.

    import numpy as np
    from niapy.task import Task
    from niapy.problems import Problem
    from niapy.algorithms.basic import ParticleSwarmAlgorithm

    # our custom problem class
    class MyProblem(Problem):
        def __init__(self, dimension, lower=-10, upper=10, *args, **kwargs):
            super().__init__(dimension, lower, upper, *args, **kwargs)

        def _evaluate(self, x):
            return np.sum(x ** 2)

Now, all we have to do is initialize our algorithm as in the previous example and pass an instance of our MyProblem class as the problem argument.

    my_problem = MyProblem(dimension=20)
    for i in range(10):
        task = Task(problem=my_problem, max_iters=100)
        algo = ParticleSwarmAlgorithm(population_size=100, w=0.9, c1=0.5, c2=0.3, min_velocity=-1, max_velocity=1)

        # running algorithm returns best found minimum
        best_x, best_fit = algo.run(task)

        # printing best minimum
        print(best_fit)

Now we can run our advanced example with the following command: python advanced_example.py. The results should be similar to those below.

0.002455614050761476

0.000557652972392164

0.0029791325679865413

0.0009443595274525336

0.001012658824492069

0.0006837236892816072

0.0026789725774685495

0.005017746993004601

0.0011654473402322196

0.0019074442166293853

For more usage examples, please look at the examples folder.

More advanced examples can also be found in the NiaPy-examples repository.
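Both examples above configure ParticleSwarmAlgorithm with w, c1, c2, min_velocity and max_velocity. To give a rough idea of what these parameters control, here is a minimal, dependency-free sketch of the textbook inertia-weighted PSO update with velocity clamping, applied to the same sum-of-squares objective as MyProblem. It is an illustration under stated assumptions and not NiaPy's implementation: the names `sphere` and `pso_sketch`, the `seed` argument, the zero initial velocities and the immediate update of the global best are all choices made for this sketch.

```python
import random
import statistics


def sphere(x):
    """Sum of squares, the same objective as MyProblem._evaluate above."""
    return sum(xi * xi for xi in x)


def pso_sketch(objective, dimension, lower, upper, population_size=30,
               max_iters=100, w=0.9, c1=0.5, c2=0.3,
               min_velocity=-1.0, max_velocity=1.0, seed=None):
    """Textbook inertia-weighted PSO with velocity clamping (hypothetical helper)."""
    rng = random.Random(seed)
    # Random initial positions inside the bounds, zero initial velocities.
    positions = [[rng.uniform(lower, upper) for _ in range(dimension)]
                 for _ in range(population_size)]
    velocities = [[0.0] * dimension for _ in range(population_size)]
    personal_best = [p[:] for p in positions]
    personal_fit = [objective(p) for p in positions]
    best_index = min(range(population_size), key=personal_fit.__getitem__)
    global_best = personal_best[best_index][:]
    global_fit = personal_fit[best_index]

    for _ in range(max_iters):
        for i in range(population_size):
            for d in range(dimension):
                r1, r2 = rng.random(), rng.random()
                # Inertia (w) + pull toward personal best (c1) + pull toward global best (c2).
                v = (w * velocities[i][d]
                     + c1 * r1 * (personal_best[i][d] - positions[i][d])
                     + c2 * r2 * (global_best[d] - positions[i][d]))
                # Velocity clamping keeps each step within [min_velocity, max_velocity].
                v = max(min_velocity, min(max_velocity, v))
                velocities[i][d] = v
                # Move, then clip the position back into the search bounds.
                positions[i][d] = max(lower, min(upper, positions[i][d] + v))
            fit = objective(positions[i])
            if fit < personal_fit[i]:
                personal_fit[i] = fit
                personal_best[i] = positions[i][:]
                if fit < global_fit:
                    global_fit = fit
                    global_best = positions[i][:]
    return global_best, global_fit


# Ten independent runs, summarised instead of printed one by one.
results = [pso_sketch(sphere, dimension=5, lower=-10, upper=10, seed=run)[1]
           for run in range(10)]
print("best:  ", min(results))
print("median:", statistics.median(results))
print("worst: ", max(results))
```

Because PSO is stochastic, individual runs can differ a lot, as the outputs of the basic example show. Reporting the best, median and worst fitness over several runs is usually more informative than a single value.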

Are you using NiaPy in your project or research? Please cite us!

Vrbančič, G., Brezočnik, L., Mlakar, U., Fister, D., & Fister Jr., I. (2018).

NiaPy: Python microframework for building nature-inspired algorithms.

Journal of Open Source Software, 3(23), 613. <https://doi.org/10.21105/joss.00613>

@article{NiaPyJOSS2018,

author = {Vrban{\v{c}}i{\v{c}}, Grega and Brezo{\v{c}}nik, Lucija

and Mlakar, Uro{\v{s}} and Fister, Du{\v{s}}an and {Fister Jr.}, Iztok},

title = {{NiaPy: Python microframework for building nature-inspired algorithms}},

journal = {{Journal of Open Source Software}},

year = {2018},

volume = {3},

issue = {23},

issn = {2475-9066},

doi = {10.21105/joss.00613},

url = {https://doi.org/10.21105/joss.00613}

}

TY - JOUR

T1 - NiaPy: Python microframework for building nature-inspired algorithms

AU - Vrbančič, Grega

AU - Brezočnik, Lucija

AU - Mlakar, Uroš

AU - Fister, Dušan

AU - Fister Jr., Iztok

PY - 2018

JF - Journal of Open Source Software

VL - 3

IS - 23

DO - 10.21105/joss.00613

UR - http://joss.theoj.org/papers/10.21105/joss.00613

Thanks goes to these wonderful people (emoji key):

This project follows the all-contributors specification. Contributions of any kind are welcome!

We encourage you to contribute to NiaPy! Please check out the Contributing to NiaPy guide for guidelines about how to proceed.

Everyone interacting in NiaPy's codebases, issue trackers, chat rooms and mailing lists is expected to follow the NiaPy code of conduct.

This package is distributed under the MIT License. This license can be found online at http://www.opensource.org/licenses/MIT.

This framework is provided as-is, and there are no guarantees that it fits your purposes or that it is bug-free. Use it at your own risk!