LitStudy is a Python package that enables analysis of scientific literature from the comfort of a Jupyter notebook. It provides the ability to select scientific publications and study their metadata through the use of visualizations, network analysis, and natural language processing.

In essence, this package offers five main features:

- Extract metadata from scientific documents sourced from various locations. The data is presented in a standardized interface, allowing for the combination of data from different sources.

- Filter, select, deduplicate, and annotate collections of documents.

- Compute and plot general statistics for document sets, such as statistics on authors, venues, and publication years.

- Generate and plot various bibliographic networks as interactive visualizations.

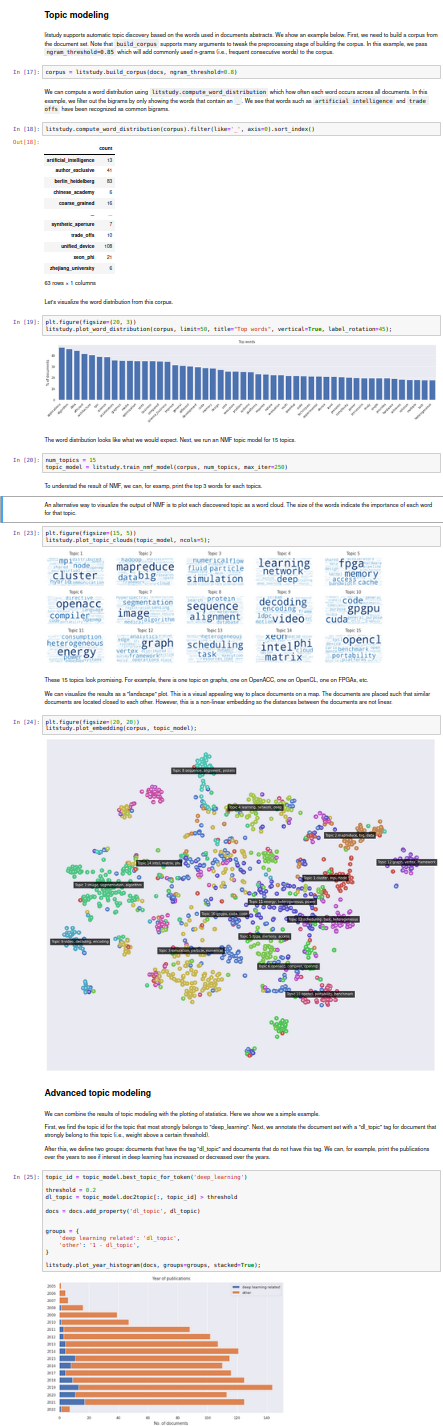

- Topic discovery using natural language processing (NLP) allows for the automatic discovery of popular topics.

If you have any questions or run into an error, see the Frequently Asked Questions section of the documentation. If your question or error is not on the list, please check the GitHub issue tracker for a similar issue or create a new issue.

LitStudy supports the following data sources. The table below lists which metadata is fully (✓) or partially (*) provided by each source.

| Name | Title | Authors | Venue | Abstract | Citations | References |

|---|---|---|---|---|---|---|

| Scopus | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |

| SemanticScholar | ✓ | ✓ | ✓ | ✓ | * (count only) | ✓ |

| CrossRef | ✓ | ✓ | ✓ | ✓ | * (count only) | ✓ |

| DBLP | ✓ | ✓ | ✓ | |||

| arXiv | ✓ | ✓ | ✓ | |||

| IEEE Xplore | ✓ | ✓ | ✓ | ✓ | * (count only) | |

| Springer Link | ✓ | ✓ | ✓ | ✓ | * (count only) | |

| CSV file | ✓ | ✓ | ✓ | ✓ | ||

| bibtex file | ✓ | ✓ | ✓ | ✓ | ||

| RIS file | ✓ | ✓ | ✓ | ✓ |

An example notebook is available in notebooks/example.ipynb and here.

LitStudy is available on PyPI! Full installation guide is available here.

pip install litstudyOr install the latest development version directly from GitHub:

pip install git+https://github.com/NLeSC/litstudyDocumentation is available here.

The package has been tested for Python 3.7. Required packages are available in requirements.txt.

litstudy supports several data sources.

Some of these sources (such as semantic Scholar, CrossRef, and arXiv) are openly available.

However to access the Scopus API, you (or your institute) requires a Scopus subscription and you need to request an Elsevier Developer API key (see Elsevier Developers).

For more information, see the guide by pybliometrics.

Apache 2.0. See LICENSE.

See CHANGELOG.md.

See CONTRIBUTING.md.

If you use LitStudy in your work, please cite the following publication:

S. Heldens, A. Sclocco, H. Dreuning, B. van Werkhoven, P. Hijma, J. Maassen & R.V. van Nieuwpoort (2022), "litstudy: A Python package for literature reviews", SoftwareX 20

As BibTeX:

@article{litstudy,

title = {litstudy: A Python package for literature reviews},

journal = {SoftwareX},

volume = {20},

pages = {101207},

year = {2022},

issn = {2352-7110},

doi = {https://doi.org/10.1016/j.softx.2022.101207},

url = {https://www.sciencedirect.com/science/article/pii/S235271102200125X},

author = {S. Heldens and A. Sclocco and H. Dreuning and B. {van Werkhoven} and P. Hijma and J. Maassen and R. V. {van Nieuwpoort}},

}Don't forget to check out these other amazing software packages!

- ScientoPy: Open-source Python based scientometric analysis tool.

- pybliometrics: API-Wrapper to access Scopus.

- ASReview: Active learning for systematic reviews.

- metaknowledge: Python library for doing bibliometric and network analysis in science.

- tethne: Python module for bibliographic network analysis.

- VOSviewer: Software tool for constructing and visualizing bibliometric networks.