omec-project / upf

4G/5G Mobile Core User Plane

Describe the bug

$ docker exec bess-pfcpiface pfcpiface -config /conf/upf.json -simulate create

Error response from daemon: Container 7ac699b7cb5bbc10d054b0015bf78a4a12d298f3c3b2ab18ebea310d5b4ca2ac is restarting, wait until the container is running

To Reproduce

Make changes for sim mode.

DOCKER_BUILDKIT=0 ./docker_setup.sh

docker exec bess-pfcpiface pfcpiface -config /conf/upf.json -simulate create

Expected behavior

The containers should not be in constant restarting status:

$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

7ac699b7cb5b upf-epc-pfcpiface:0.3.0-dev "/bin/pfcpiface -con…" 14 hours ago Restarting (1) 53 seconds ago bess-pfcpiface

f2667a20e0d9 upf-epc-bess:0.3.0-dev "bessctl http 0.0.0.…" 14 hours ago Up 25 minutes bess-web

489765ae6d37 upf-epc-bess:0.3.0-dev "bessd -f -grpc-url=…" 14 hours ago Restarting (1) 49 seconds ago bess

6a84f1041df3 k8s.gcr.io/pause "/pause" 14 hours ago Up 25 minutes 0.0.0.0:8000->8000/tcp, 0.0.0.0:8080->8080/tcp, 0.0.0.0:10514->10514/tcp pause

$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

7ac699b7cb5b upf-epc-pfcpiface:0.3.0-dev "/bin/pfcpiface -con…" 15 hours ago Restarting (1) 28 seconds ago bess-pfcpiface

f2667a20e0d9 upf-epc-bess:0.3.0-dev "bessctl http 0.0.0.…" 15 hours ago Up 31 minutes bess-web

489765ae6d37 upf-epc-bess:0.3.0-dev "bessd -f -grpc-url=…" 15 hours ago Restarting (1) 23 seconds ago bess

6a84f1041df3 k8s.gcr.io/pause "/pause" 15 hours ago Up 31 minutes 0.0.0.0:8000->8000/tcp, 0.0.0.0:8080->8080/tcp, 0.0.0.0:10514->10514/tcp pause

Logs


Additional context

$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

upf-epc-pfcpiface 0.3.0-dev 8876bf29c0bd 15 hours ago 24MB

<none> <none> 5d6ad667bc0b 15 hours ago 968MB

<none> <none> c3d446cda428 15 hours ago 6.83GB

<none> <none> b6337c50f3ac 15 hours ago 895MB

upf-epc-cpiface 0.3.0-dev 69f0fc5a163e 15 hours ago 90.4MB

<none> <none> ef48a5672195 15 hours ago 1.03GB

upf-epc-bess 0.3.0-dev 0393a2968fff 15 hours ago 501MB

python 3.9.3-slim 2fa94649b3f0 4 days ago 114MB

golang latest 0debfc3e0c9e 5 days ago 862MB

alpine latest 49f356fa4513 6 days ago 5.61MB

ubuntu bionic 3339fde08fc3 12 days ago 63.3MB

nefelinetworks/bess_build latest eb716387e211 11 months ago 887MB

k8s.gcr.io/pause latest 350b164e7ae1 6 years ago 240kB

Describe the bug

On Ubuntu 20.04 (ubuntu-20.04.2-live-server-amd64.iso) running setup script:

upf-epc$ ./docker_setup

...

${ifaces[$i]}= ens803f2

sudo ip netns exec pause ip link add "${ifaces[$i]}" type veth peer name "${ifaces[$i]}" -vdev

setting the network namespace "pause" failed: Invalid argument

NOTES:

docker run --name pause -td --restart unless-stopped

Unable to find image 'k8s.gcr.io/pause:latest' locally

latest: Pulling from pause

a3ed95caeb02: Pull complete

4964c72cd024: Pull complete

Digest: sha256:a78c2d6208eff9b672de43f880093100050983047b7b0afe0217d3656e1b0d5f

Status: Downloaded newer image for k8s.gcr.io/pause:latest

71f5f5368b031171102d97411b098e5720c23378a6682c384047aa601f4a453f

sandbox=$(docker inspect --format={{.NetworkSettings.SandboxKey}} pause)

sudo ln -s "$sandbox" /var/run/netns/pause

To Reproduce

Steps to reproduce the behavior:

Setup VM with ubuntu-20.04.2-live-server-amd64.iso

https://github.com/omec-project/upf-epc/blob/master/INSTALL.md

./docker_setup.sh

Expected behavior


NOTE: The following error stops the processing of the rest of the docker setup script.

setting the network namespace "pause" failed: Invalid argument

Logs


Additional context


$ ls -l /var/run/netns/pause

lrwxrwxrwx 1 root root 35 Apr 5 14:51 /var/run/netns/pause -> /run/snap.docker/netns/7a96792d4d96

$ ls -l /run/netns

total 0

lrwxrwxrwx 1 root root 35 Apr 5 14:51 pause -> /run/snap.docker/netns/7a96792d4d96

$ ls -l /run/snap.docker/netns/7a96792d4d96

-rw-r--r-- 1 root root 0 Apr 5 14:51 /run/snap.docker/netns/7a96792d4d96

$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

upf-epc-pfcpiface 0.3.0-dev 03d5fae8a594 34 minutes ago 24MB

upf-epc-cpiface 0.3.0-dev cf732878cb43 34 minutes ago 90.4MB

upf-epc-bess 0.3.0-dev 0659ae2bbdd4 35 minutes ago 411MB

k8s.gcr.io/pause latest 350b164e7ae1 6 years ago 240kB

$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

71f5f5368b03 k8s.gcr.io/pause "/pause" 34 minutes ago Up 34 minutes 0.0.0.0:8000->8000/tcp, 0.0.0.0:8080->8080/tcp, 0.0.0.0:10514->10514/tcp pause

# lsns

NS TYPE NPROCS PID USER COMMAND

4026531835 cgroup 324 1 root /sbin/init maybe-ubiquity

4026531836 pid 323 1 root /sbin/init maybe-ubiquity

4026531837 user 324 1 root /sbin/init maybe-ubiquity

4026531838 uts 320 1 root /sbin/init maybe-ubiquity

4026531839 ipc 323 1 root /sbin/init maybe-ubiquity

4026531840 mnt 310 1 root /sbin/init maybe-ubiquity

4026531860 mnt 1 105 root kdevtmpfs

4026531992 net 323 1 root /sbin/init maybe-ubiquity

4026532535 mnt 1 654 root /lib/systemd/systemd-udevd

4026532536 uts 1 654 root /lib/systemd/systemd-udevd

4026532561 mnt 1 861 systemd-timesync /lib/systemd/systemd-timesyncd

4026532562 uts 1 861 systemd-timesync /lib/systemd/systemd-timesyncd

4026532563 mnt 1 922 systemd-network /lib/systemd/systemd-networkd

4026532564 mnt 1 931 systemd-resolve /lib/systemd/systemd-resolved

4026532656 mnt 1 45474 root /pause

4026532657 uts 1 45474 root /pause

4026532658 ipc 1 45474 root /pause

4026532659 mnt 6 2237 root dockerd --group docker --exec-root=/run/snap.docker --data-root=/var/

4026532663 pid 1 45474 root /pause

4026532701 mnt 1 1144 root /lib/systemd/systemd-logind

4026532702 mnt 1 1135 root /usr/sbin/irqbalance --foreground

4026532703 uts 1 1144 root /lib/systemd/systemd-logind

4026532729 net 1 45474 root /pause

# lsns | grep mnt

4026531840 mnt 311 1 root /sbin/init maybe-ubiquity

4026531860 mnt 1 105 root kdevtmpfs

4026532535 mnt 1 654 root /lib/systemd/systemd-udevd

4026532561 mnt 1 861 systemd-timesync /lib/systemd/systemd-timesyncd

4026532563 mnt 1 922 systemd-network /lib/systemd/systemd-networkd

4026532564 mnt 1 931 systemd-resolve /lib/systemd/systemd-resolved

4026532656 mnt 1 45474 root /pause

4026532659 mnt 6 2237 root dockerd --group docker --exec-root=/run/snap.docker --data-root=/var/snap/docker/common/var-lib-docker --pidfile=/run/snap.docker/docker.pid --config-file=/var/snap/docker/796/config/daemon.json

4026532701 mnt 1 1144 root /lib/systemd/systemd-logind

4026532702 mnt 1 1135 root /usr/sbin/irqbalance --foreground

# ls -l /proc/mounts

lrwxrwxrwx 1 root root 11 Apr 5 13:43 /proc/mounts -> self/mounts

/proc# ls -l self/mounts

-r--r--r-- 1 root root 0 Apr 5 15:34 self/mounts

docker version

Client:

Version: 19.03.13

API version: 1.40

Go version: go1.13.15

Git commit: cd8016b6bc

Built: Fri Feb 5 15:56:39 2021

OS/Arch: linux/amd64

Experimental: false

Server:

Engine:

Version: 19.03.13

API version: 1.40 (minimum version 1.12)

Go version: go1.13.15

Git commit: bd33bbf

Built: Fri Feb 5 15:58:24 2021

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: v1.3.7

GitCommit: 8fba4e9a7d01810a393d5d25a3621dc101981175

runc:

Version: 1.0.0-rc10

GitCommit:

docker-init:

Version: 0.18.0

GitCommit: fec3683

It would be great if the eNB and the s1u interface of the UPF could be in the same subnet.

Hi,

Can the upf-epc be used with another SMF?

I saw the following information in the installation guide:

UPF-EPC communicates with the CP via ZMQ. Please adjust interface.cfg accordingly.

Does this mean that the upf-epc can only communicate with the CP (SMF) via ZMQ and not via PFCP?

Is your feature request related to a problem? Please describe.

Dockerfile is not minimal

Describe the solution you'd like

Separate the build-time and runtime installation steps into scripts to keep the Dockerfile minimal.

Describe the bug

The BESS UPF updates the action parameter in the FAR to forwardU or ForwardD.

To Reproduce

Basic attach test case

Expected behavior

Set value of action as per spec (notifyCP, buffer, drop, forward).

Logs

Additional context

Describe the bug

I am trying to push some traffic through the BESS pipeline using the sim mode.

Unfortunately, all traffic ends up failing on the pdrLookup module.

To Reproduce

$: sudo ./docker_setup.sh

[...]

$: echo $?

0

$: sudo docker exec -it bess ./bessctl show module pdrLookup

pdrLookup::WildcardMatch(8 fields, 0 rules)

Per-packet metadata fields:

src_iface: read 1 bytes at offset 20

tunnel_ipv4_dst: read 4 bytes at offset 24

teid: read 4 bytes at offset 28

dst_ip: read 4 bytes at offset 36

src_ip: read 4 bytes at offset 40

dst_port: read 2 bytes at offset 22

src_port: read 2 bytes at offset 32

ip_proto: read 1 bytes at offset 21

pdr_id: write 4 bytes (no downstream reader)

fseid: write 4 bytes at offset 8

ctr_id: write 4 bytes at offset 12

far_id: write 4 bytes at offset 16

Input gates:

0: batches 0 packets 0 pktParse:1 ->

Output gates:

0: batches 0 packets 0 -> 0:preQoSCounter Track::track0

1: batches 0 packets 0 -> 0:gtpuDecap Track::track0

2: batches 77054768 packets 2465752576 -> 0:pdrLookupFail Track::track0

Deadends: 0

Expected behavior

I expect to see traffic going past the pdrLookup and through the pipeline, or something like that. :)
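To see at a glance where packets leave pdrLookup, the `show module` text can be parsed. The following is a minimal sketch, assuming the output keeps the line layout shown in this report; the function name `output_gate_packets` and the `SAMPLE` constant are illustrative and not part of bessctl.

```python
import re

# Matches output-gate lines such as:
#   "2: batches 77054768 packets 2465752576 -> 0:pdrLookupFail Track::track0"
GATE_RE = re.compile(r"^\s*(\d+): batches (\d+) packets (\d+)\s*->\s*\d+:(\S+)")


def output_gate_packets(show_module_text):
    """Return {downstream module name: packet count} for the 'Output gates' section."""
    counts = {}
    in_output = False
    for line in show_module_text.splitlines():
        stripped = line.strip()
        if stripped.startswith("Output gates:"):
            in_output = True
            continue
        if stripped.startswith(("Input gates:", "Deadends:")):
            in_output = False
            continue
        match = GATE_RE.match(line)
        if in_output and match:
            counts[match.group(4)] = int(match.group(3))
    return counts


# Excerpt copied from the report above.
SAMPLE = """\
Input gates:
0: batches 0 packets 0 pktParse:1 ->
Output gates:
0: batches 0 packets 0 -> 0:preQoSCounter Track::track0
1: batches 0 packets 0 -> 0:gtpuDecap Track::track0
2: batches 77054768 packets 2465752576 -> 0:pdrLookupFail Track::track0
Deadends: 0
"""

if __name__ == "__main__":
    for module, packets in output_gate_packets(SAMPLE).items():
        print(module, packets)
```

With the sample above, every packet is counted on the gate leading to pdrLookupFail, which matches the "0 rules" shown in the module header.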

Logs

See To Reproduce.

Additional context

I am using Debian 10 and Docker 19.03.12.

All I do is just enable sim mode and set a fitting CPU set:

$: git diff

diff --git a/conf/upf.json b/conf/upf.json

index 607d654..0d0f31d 100644

--- a/conf/upf.json

+++ b/conf/upf.json

@@ -3,6 +3,7 @@

"": "mode: af_xdp",

"": "mode: af_packet",

"": "mode: sim",

+ "mode": "sim",

"": "max UE sessions",

"max_sessions": 50000,

diff --git a/docker_setup.sh b/docker_setup.sh

index 086ad2f..e92aa04 100755

--- a/docker_setup.sh

+++ b/docker_setup.sh

@@ -16,7 +16,7 @@ bessd_port=10514

mode="dpdk"

#mode="af_xdp"

#mode="af_packet"

-#mode="sim"

+mode="sim"

# Gateway interface(s)

#

@@ -150,7 +150,7 @@ fi

# Run bessd

docker run --name bess -td --restart unless-stopped \

- --cpuset-cpus=12-13 \

+ --cpuset-cpus=9-11 \

--ulimit memlock=-1 -v /dev/hugepages:/dev/hugepages \

-v "$PWD/conf":/opt/bess/bessctl/conf \

--net container:pause \

Thanks!

PS: Kudos for making your BESS patches upstream.
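The two mode edits in the diff above can be read back with a short script. This is only a sketch: the helper names are hypothetical, the inline strings are trimmed copies of the diff context, and it reports the values without asserting which combinations are valid. It relies on two general facts: Python's `json` module keeps the last value for a repeated key (so the repeated `""` comment keys are harmless), and in a shell script the last uncommented assignment to a variable wins.

```python
import json
import re


def upf_json_mode(json_text):
    """Return the value of the "mode" key, or None when the key is absent."""
    return json.loads(json_text).get("mode")


def setup_script_mode(script_text):
    """Return the last uncommented mode="..." assignment, or None if there is none."""
    modes = re.findall(r'^mode="([^"]+)"', script_text, flags=re.MULTILINE)
    return modes[-1] if modes else None


# Trimmed from the conf/upf.json hunk above (after the change).
UPF_JSON = """{
    "": "mode: af_xdp",
    "": "mode: af_packet",
    "": "mode: sim",
    "mode": "sim",
    "": "max UE sessions",
    "max_sessions": 50000
}"""

# Trimmed from the docker_setup.sh hunk above (after the change).
SETUP_SH = """mode="dpdk"
#mode="af_xdp"
#mode="af_packet"
mode="sim"
"""

if __name__ == "__main__":
    print("upf.json mode:", upf_json_mode(UPF_JSON))
    print("docker_setup.sh mode:", setup_script_mode(SETUP_SH))
```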

PFCP DDN support needs to be implemented to correctly page the UE and deliver downlink packets.

UPF-EPC doesn't include support for dedicated bearers (D-B). An initial list of what needs to be done:

Hello, I am trying to deploy the DPDK UPF on Docker. However, after running the "run up4" command, I found that the vdev interface for the mirror link cannot be attached. The error logs are shown below. The "show port" result shows that only two ports are created. How can I solve this problem? Thanks!

cat /tmp/bessd.INFO

I0121 09:47:14.218289 640 pmd.cc:268] Initializing Port:0 with memory from socket 0

I0121 09:47:14.295985 640 pmd.cc:268] Initializing Port:1 with memory from socket 0

I0121 09:47:14.431156 640 pmd.cc:268] Initializing Port:0 with memory from socket 0

I0121 09:47:14.501525 640 dpdk.cc:72] rte_pmd_init_internals(): net_af_packet0: could not set PACKETASS on AF_PACKET socket for enp63s0f0-vdev:Protocol not available

I0121 09:47:14.506814 640 dpdk.cc:72] EAL: Error: Invalid memory

I0121 09:47:14.506852 640 dpdk.cc:72] EAL: Error: Invalid memory

I0121 09:47:14.514791 640 dpdk.cc:72] EAL: Driver cannot attach the device (net_af_packet0)

I0121 09:47:14.514803 640 dpdk.cc:72] EAL: Failed to attach device on primary process

I0121 09:47:14.660923 640 pmd.cc:268] Initializing Port:1 with memory from socket 0

I0121 09:47:14.732236 640 dpdk.cc:72] rte_pmd_init_internals(): net_af_packet1: could not set PACKETASS on AF_PACKET socket for enp63s0f1-vdev:Protocol not available

I0121 09:47:14.743819 640 dpdk.cc:72] EAL: Error: Invalid memory

I0121 09:47:14.743860 640 dpdk.cc:72] EAL: Error: Invalid memory

I0121 09:47:14.759796 640 dpdk.cc:72] EAL: Driver cannot attach the device (net_af_packet1)

I0121 09:47:14.759846 640 dpdk.cc:72] EAL: Failed to attach device on primary process

This will help others get up and running with UPF in major clouds. If it's not possible with managed k8s offerings, then by running directly on the node.

Similar to NGIC repo.

Describe the bug

docker logs bess

...

I0415 16:37:31.036574 1 pmd.cc:68] 0 DPDK PMD ports have been recognized:

I0415 16:37:31.036687 1 vport.cc:318] vport: BESS kernel module is not loaded. Loading...

sh: 1: **insmod: not found**

W0415 16:37:31.044246 1 vport.cc:330] **Cannot load kernel module /bin/kmod/bess.ko**

I0415 16:37:31.044337 1 bessctl.cc:1931] Server listening on 0.0.0.0:10514

...

To Reproduce

git clone https://github.com/krsna1729/upf-epc.git

Setup for "sim" mode (docker_setup.sh and conf/upf.json)

DOCKER_BUILDKIT=0 ./docker_setup.sh

Expected behavior

Expecting those files to be part of the image/container.

Logs

See above

Additional context

# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

8dd757760598 upf-epc-pfcpiface:0.3.0-dev "/bin/pfcpiface -con…" 32 minutes ago Up 32 minutes bess-pfcpiface

1408c520dd6f upf-epc-bess:0.3.0-dev "bessctl http 0.0.0.…" 32 minutes ago Up 32 minutes bess-web

5ca6aa7e27cf upf-epc-bess:0.3.0-dev "bessd -f -grpc-url=…" 33 minutes ago Up 33 minutes bess

fc1d0d4dc878 k8s.gcr.io/pause "/pause" 33 minutes ago Up 33 minutes 0.0.0.0:8000->8000/tcp, :::8000->8000/tcp, 0.0.0.0:8080->8080/tcp, :::8080->8080/tcp, 0.0.0.0:10514->10514/tcp, :::10514->10514/tcp pause

Describe the bug

... Status: Downloaded newer image for golang:latest ---> cbf5edf38f6b

Step 48/60 : RUN go get github.com/golang/protobuf/protoc-gen-go@v1.4.3

---> Running in c550c0937f5f

go: downloading github.com/golang/protobuf v1.4.3

go: downloading google.golang.org/protobuf v1.23.0

Removing intermediate container c550c0937f5f

---> 10553e7a6537

Step 49/60 : FROM bess-build AS go-pb ---> 5d2aed9d22c8

Step 50/60 : COPY --from=protoc-gen /go/bin/protoc-gen-go /bin ---> 37185347647e

Step 51/60 : RUN mkdir /bess_pb && protoc -I /usr/include -I /protobuf/ /protobuf/*.proto /protobuf/ports/*.proto --go_out=plugins=grpc:/bess_pb

---> Running in ee6a85b3e69b

2021/04/14 18:33:54 WARNING: Missing 'go_package' option in "error.proto", please specify it with the full Go package path as a future release of protoc-gen-go will require this be specified.

See https://developers.google.com/protocol-buffers/docs/reference/go-generated#package for more information.

2021/04/14 18:33:54 WARNING: Missing 'go_package' option in "bess_msg.proto", please specify it with the full Go package path as a future release of protoc-gen-go will require this be specified.

See https://developers.google.com/protocol-buffers/docs/reference/go-generated#package for more information.

2021/04/14 18:33:54 WARNING: Missing 'go_package' option in "util_msg.proto", please specify it with the full Go package path as a future release of protoc-gen-go will require this be specified.

See https://developers.google.com/protocol-buffers/docs/reference/go-generated#package for more information.

2021/04/14 18:33:54 WARNING: Missing 'go_package' option in "module_msg.proto", please specify it with the full Go package path as a future release of protoc-gen-go will require this be specified.

See https://developers.google.com/protocol-buffers/docs/reference/go-generated#package for more information.

2021/04/14 18:33:54 WARNING: Missing 'go_package' option in "service.proto", please specify it with the full Go package path as a future release of protoc-gen-go will require this be specified.

See https://developers.google.com/protocol-buffers/docs/reference/go-generated#package for more information.

2021/04/14 18:33:54 WARNING: Missing 'go_package' option in "ports/port_msg.proto", please specify it with the full Go package path as a future release of protoc-gen-go will require this be specified.

See https://developers.google.com/protocol-buffers/docs/reference/go-generated#package for more information.

...

Error response from daemon: Container eb0541ef9914b05c3ee576791c84defe90045f022eaeefa0550e92545e898aec is restarting, wait until the container is running

To Reproduce

tried both 3.9.3-slim and 3.9.4-slim in Dockerfile, but it didn't matter

Also tried "dpdk" mode using https://github.com/krsna1729/upf-epc.git repo, and same issues.

$ hugeadm --pool-list

Size Minimum Current Maximum Default

2097152 1024 1024 1024 *

1073741824 1 1 1

$ cat /proc/meminfo | grep Huge

AnonHugePages: 2048 kB

ShmemHugePages: 0 kB

HugePages_Total: 1024

HugePages_Free: 1024

HugePages_Rsvd: 0

HugePages_Surp: 0

Hugepagesize: 2048 kB
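The `/proc/meminfo` figures above can be turned into a pool size with a few lines of Python. This is a sketch under the assumption that only the default hugepage size matters here (`/proc/meminfo` reports the default-size pool only, so the 1 GiB pool listed by `hugeadm` is not included); `hugepage_summary` is an illustrative name.

```python
def hugepage_summary(meminfo_text):
    """Summarise the default-size hugepage pool from /proc/meminfo text."""
    fields = {}
    for line in meminfo_text.splitlines():
        name, sep, rest = line.partition(":")
        # Keep HugePages_* counters and the page size; skip AnonHugePages etc.
        if sep and (name.startswith("HugePages_") or name == "Hugepagesize"):
            fields[name] = int(rest.split()[0])
    page_kb = fields["Hugepagesize"]
    return {
        "total_mib": fields["HugePages_Total"] * page_kb // 1024,
        "free_mib": fields["HugePages_Free"] * page_kb // 1024,
    }


# Values copied from the output above.
MEMINFO = """\
AnonHugePages: 2048 kB
ShmemHugePages: 0 kB
HugePages_Total: 1024
HugePages_Free: 1024
HugePages_Rsvd: 0
HugePages_Surp: 0
Hugepagesize: 2048 kB
"""

if __name__ == "__main__":
    print(hugepage_summary(MEMINFO))
```

For the values in this report, 1024 pages of 2048 kB give 2048 MiB in total, all of it free at the time the command was run.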

bare metal Ubuntu 18.04 (4.15.0-76-generic) with two nic cards

DOCKER_BUILDKIT=0 ./docker_setup.sh

Expected behavior

There should be 4 containers running, however only one "pause" is running and the following is constantly restarting:

"bessd -f -grpc-url=…" 2 minutes ago Restarting

Logs

Additional context

Both the docker_setup.sh and upf.json files are only in "sim" mode.

Question1: If the docker_setup.sh was only in "dpdk" mode, then what mode should be set in the upf.json file?

Question2: Should docker_setup.sh ever have both "dpdk" and "sim" modes set at the same time?

Describe the bug

$ docker run --name pktgen -td --restart unless-stopped \

--cpuset-cpus=2-5 --ulimit memlock=-1 --cap-add IPC_LOCK \

-v /dev/hugepages:/dev/hugepages -v "$PWD/conf":/opt/bess/bessctl/conf \

--device=/dev/vfio/vfio --device=/dev/vfio/176 \

upf-epc-bess1mg:"$(<VERSION)" -grpc-url=0.0.0.0:10514

f9c8bac4c0dcf839dd1664ed29bbce64ffe9b6cee1c5d181277aad284b0df5f3

docker: Error response from daemon: error gathering device information while adding custom device "/dev/vfio/176": no such file or directory.

To Reproduce

Setup sim for pktgen:

DOCKER_BUILDKIT=0 ./docker_setup.sh

docker exec bess-pfcpiface pfcpiface -config /conf/upf.json -simulate create

docker run --name pktgen -td --restart unless-stopped \

--cpuset-cpus=2-5 --ulimit memlock=-1 --cap-add IPC_LOCK \

-v /dev/hugepages:/dev/hugepages -v "$PWD/conf":/opt/bess/bessctl/conf \

--device=/dev/vfio/vfio --device=/dev/vfio/176 \

upf-epc-bess1mg:"$(<VERSION)" -grpc-url=0.0.0.0:10514

Expected behavior

pktgen container should start, so one can start the following command:

$ docker exec -it pktgen ./bessctl run pktgen

Logs

bess log

I0409 18:44:15.949054 1 main.cc:62] Launching BESS daemon in process mode...

I0409 18:44:15.949154 1 main.cc:75] bessd unknown

I0409 18:44:15.957208 1 bessd.cc:456] Loading plugin (attempt 1): /bin/modules/sequential_update.so

I0409 18:44:15.972048 1 memory.cc:128] /sys/devices/system/node/possible not available. Assuming a single-node system...

I0409 18:44:15.972066 1 dpdk.cc:167] Initializing DPDK EAL with options: ["bessd", "--master-lcore", "127", "--lcore", "127@9-10", "--no-shconf", "--legacy-mem", "--socket-mem", "1024", "--huge-unlink"]

EAL: Detected 16 lcore(s)

EAL: Detected 1 NUMA nodes

Option --master-lcore is deprecated use main-lcore

EAL: Selected IOVA mode 'PA'

EAL: No available hugepages reported in hugepages-1048576kB

I0409 18:44:15.977942 1 dpdk.cc:66] EAL: Probing VFIO support...

I0409 18:44:16.488131 1 dpdk.cc:66] EAL: Invalid NUMA socket, default to 0

I0409 18:44:16.488155 1 dpdk.cc:66] EAL: Invalid NUMA socket, default to 0

I0409 18:44:16.488279 1 dpdk.cc:66] EAL: No legacy callbacks, legacy socket not created

Segment 0-0: IOVA:0x2ab000000, len:2097152, virt:0x100200000, socket_id:0, hugepage_sz:2097152, nchannel:0, nrank:0 fd:8

...

I0409 18:44:16.489435 1 packet_pool.cc:49] Creating DpdkPacketPool for 262144 packets on node 0

I0409 18:44:16.489444 1 packet_pool.cc:70] PacketPool0 requests for 262144 packets

I0409 18:44:16.541139 1 packet_pool.cc:157] PacketPool0 has been created with 262144 packets

I0409 18:44:16.541249 1 pmd.cc:68] 0 DPDK PMD ports have been recognized:

I0409 18:44:16.541272 1 vport.cc:318] vport: BESS kernel module is not loaded. Loading...

I0409 18:44:16.550199 1 bessctl.cc:1931] Server listening on 0.0.0.0:10514

I0409 18:44:16.551793366 1 server_builder.cc:247] Synchronous server. Num CQs: 1, Min pollers: 1, Max Pollers: 1, CQ timeout (msec): 1000

I0409 18:44:26.255146 12 bessctl.cc:487] *** All workers have been paused ***

I0409 18:44:26.591234 38 worker.cc:319] Worker 0(0x7f6239bab700) is running on core 9 (socket 0)

I0409 18:44:26.662286 40 bessctl.cc:691] Checking scheduling constraints

I0409 18:44:26.662710 40 bessctl.cc:516] *** Resuming ***

bess-pfcpiface log

...

2021/04/12 13:54:47 messages.go:623: SMF address 172.17.0.1:8805

2021/04/12 13:54:49 conn.go:100: read udp 172.18.0.2:8805: i/o timeout

2021/04/12 13:54:49 messages.go:565: cpConnected : false msg false

2021/04/12 13:54:49 messages.go:574: cpConnected false msg - false

2021/04/12 13:54:52 messages.go:592: Retry pfcp connection setup 172.17.0.1

2021/04/12 13:54:52 messages.go:555: SPGWC/SMF hostname 172.17.0.1

2021/04/12 13:54:52 messages.go:557: SPGWC/SMF address IP inside manageSmfConnection 172.17.0.1

2021/04/12 13:54:52 messages.go:605: n4DstIp 172.17.0.1

2021/04/12 13:54:52 messages.go:623: SMF address 172.17.0.1:8805

Additional context

$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

f9c8bac4c0dc upf-epc-bess1mg:0.3.0-dev "bessd -f -grpc-url=…" About a minute ago Created pktgen

9472186c3269 upf-epc-pfcpiface:0.3.0-dev "/bin/pfcpiface -con…" 2 days ago Up 2 days bess-pfcpiface

072a692acdd7 upf-epc-bess1mg:0.3.0-dev "bessctl http 0.0.0.…" 2 days ago Up 2 days bess-web

867a4c42f7f5 upf-epc-bess1mg:0.3.0-dev "bessd -f -grpc-url=…" 2 days ago Up 2 days bess

46157802d793 k8s.gcr.io/pause "/pause" 2 days ago Up 2 days 0.0.0.0:8000->8000/tcp, 0.0.0.0:8080->8080/tcp, 0.0.0.0:10514->10514/tcp pause

Describe the bug

Did some tests with the Spirent packet generator. GTPv1 packets (with a next extension header type) are using 4 bytes to store the type instead of 1 byte. We need to verify this. A PR will be submitted to fix this issue, though it is not yet certain that the patch is correct.
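For reference when verifying this, the sketch below parses a GTPv1-U header the way the layout is generally described for GTP-U: an 8-byte mandatory part, then 4 optional bytes (sequence number, N-PDU number, and a 1-byte next extension header type) when any of the E, S or PN flags is set, then a chain of extension headers whose last byte names the next type. It is an illustration, not the UPF's implementation; the function name and the sample bytes are made up for the example.

```python
import struct


def parse_gtpu_header(packet):
    """Parse a GTPv1-U header and return its fields plus the payload offset."""
    flags, msg_type, length, teid = struct.unpack_from("!BBHI", packet, 0)
    header = {
        "version": flags >> 5,
        "msg_type": msg_type,
        "length": length,
        "teid": teid,
        "ext_types": [],
    }
    offset = 8
    if flags & 0x07:  # E, S or PN set: 4 optional bytes follow the TEID
        _seq, _npdu, next_type = struct.unpack_from("!HBB", packet, offset)
        offset += 4
        if not flags & 0x04:  # the next-type byte only counts when E is set
            next_type = 0
        while next_type != 0:
            header["ext_types"].append(next_type)
            ext_len = packet[offset] * 4  # length byte counts 4-octet units
            if ext_len == 0:
                raise ValueError("zero-length extension header")
            next_type = packet[offset + ext_len - 1]  # last byte: next type
            offset += ext_len
    header["payload_offset"] = offset
    return header


# Hypothetical G-PDU header: flags 0x34 (version 1, PT=1, E=1), type 0xff,
# TEID 0x33, one 4-byte extension header of type 0x85, no payload.
SAMPLE = bytes.fromhex("34ff000800000033" "00000085" "01100100")

if __name__ == "__main__":
    print(parse_gtpu_header(SAMPLE))
```

In this layout the next extension header type occupies a single byte at offset 11, and the whole optional block is 4 bytes, which may be where a 4-byte versus 1-byte confusion could arise.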

To Reproduce

Run Spirent Landslide udp-fireball-upf-nodal testcase that was created by the TSR team.

Expected behavior

See above.

Logs

Attached

ts0_eth2.zip

Additional context


Is your feature request related to a problem? Please describe.

No.

Describe the solution you'd like

The FIB library was added in DPDK 19.11. ADD/DELETE performance seems to be orders of magnitude faster, and lookup is on par. Investigate use of this library for routing.

https://gist.github.com/krsna1729/af44263fa651085696bd0a211c41a231

Describe alternatives you've considered

Continue with LPM.

There is a requirement to pass the 'key' in little-endian format rather than big-endian to test the metering functionality.
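As a small illustration of the difference, the sketch below shows the same integer key serialized in both byte orders. The 4-byte width and the helper names are assumptions made for the example; the actual key layout used by the metering module is not stated here.

```python
def key_in_both_orders(key, width=4):
    """Return (big-endian bytes, little-endian bytes) for an unsigned key."""
    return key.to_bytes(width, "big"), key.to_bytes(width, "little")


def swap_byte_order(key, width=4):
    """Reinterpret a key's big-endian bytes as a little-endian integer."""
    return int.from_bytes(key.to_bytes(width, "big"), "little")


if __name__ == "__main__":
    big, little = key_in_both_orders(1)
    print(big.hex(), little.hex())
    print(hex(swap_byte_order(1)))
```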

Is your feature request related to a problem? Please describe.

From #272. https://docs.pyroute2.org/ipdb_toc.html

Warning The IPDB module is deprecated and obsoleted by NDB. Please consider using NDB instead.

5167ebd37b53eaf67be9827b7c0dca90be586961fba943befb25c9ec03be3851

8419a05764c58c2240eed4d517ca02d6e70962f341a401cea474ad337444edb1

Deprecation warning https://docs.pyroute2.org/ipdb_toc.html

Deprecation warning https://docs.pyroute2.org/ipdb_toc.html

Deprecation warning https://docs.pyroute2.org/ipdb_toc.html

Deprecation warning https://docs.pyroute2.org/ipdb_toc.html

max_ip_defrag_flows value not set. Not installing IP4Defrag module.

ip_frag_with_eth_mtu value not set. Not installing IP4Frag module.

Setting up port access on worker ids [0]

Setting up port core on worker ids [0]

d8e4117c4cec033309e1f4b8b3d1ad459996fb895ea8063cfeba38fc71f61475

9bb8c2c10f8249bcef5688c7e71642eed90c4ab98eff105451fd0966cb751291

Describe the solution you'd like

Move routectl from IPDB to NDB available within pyroute2

Describe alternatives you've considered

port entire routectl to golang and use it in pfcpiface initialization.

The DPDK hash library supports bulk processing of up to 64 keys, but UPF-EPC crashes when the packet size is increased from 32 (the current size) to 64.

Describe the bug

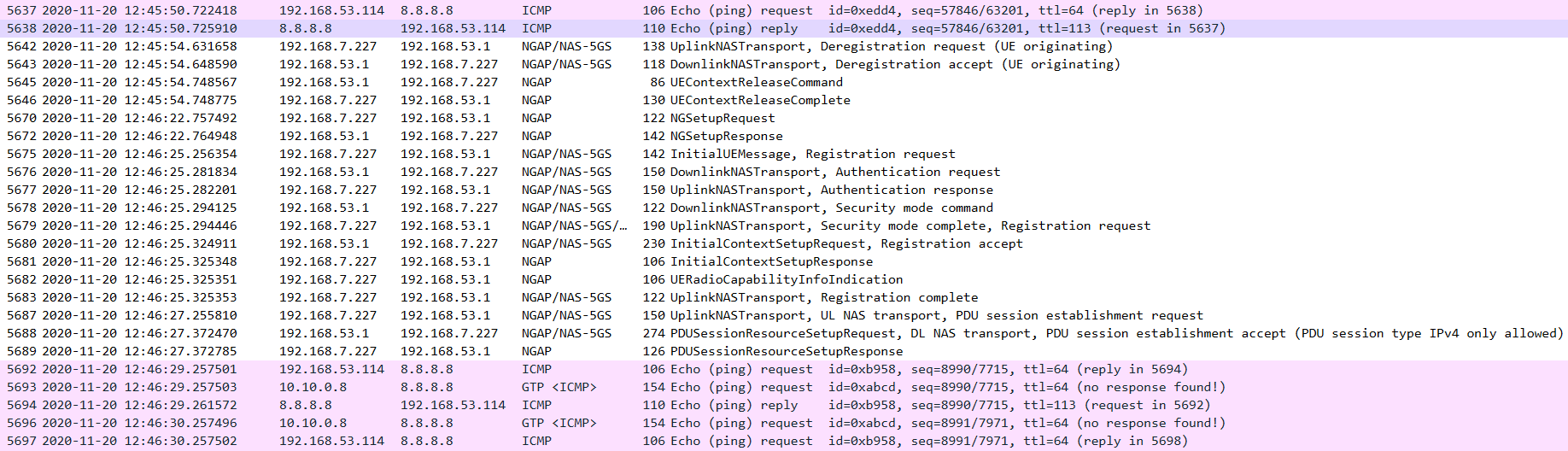

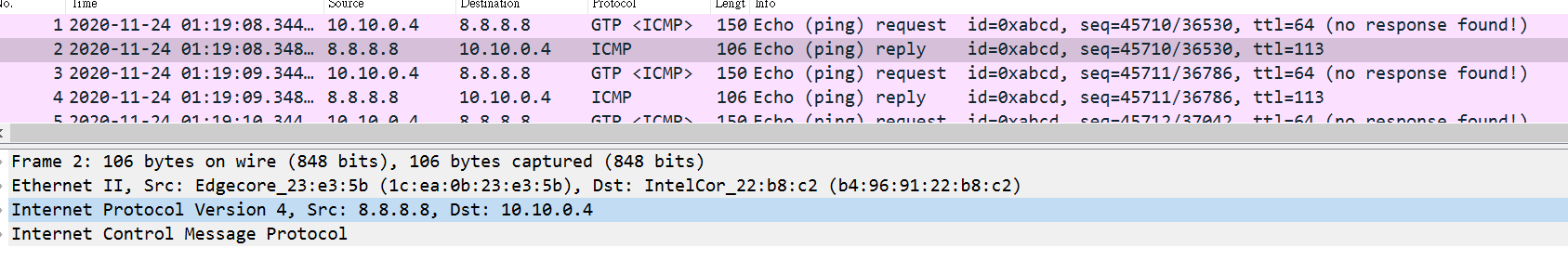

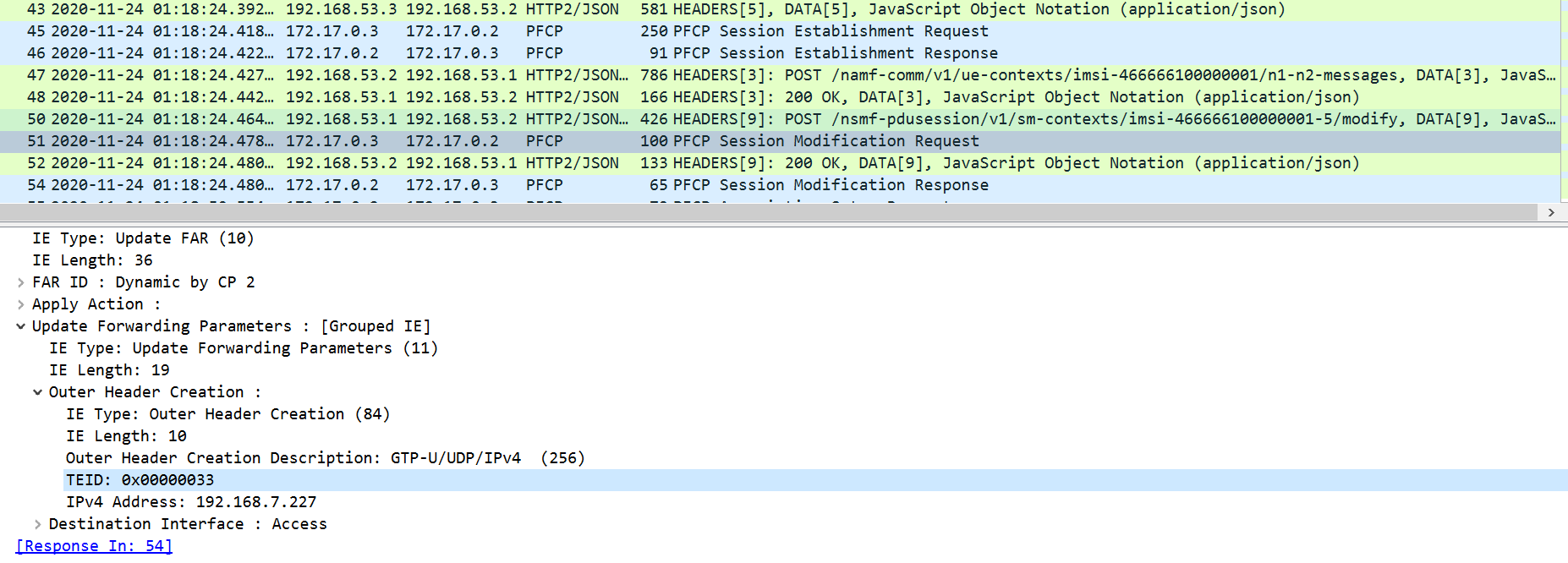

Hello, it is great to learn about this UPF project. We are trying to run an integration test of our 5G core network with your UPF; the UE and gNB are simulated by Spirent, as in your setup.

Most of the control plane signaling is correct, as below.

(See the attached file mirror_switch_n2_n3_n6.zip for details.)

As you can see, the UE starts sending ICMP ping requests to 8.8.8.8 after registration and PDU session establishment are completed, and 8.8.8.8 does reply to the ICMP packets.

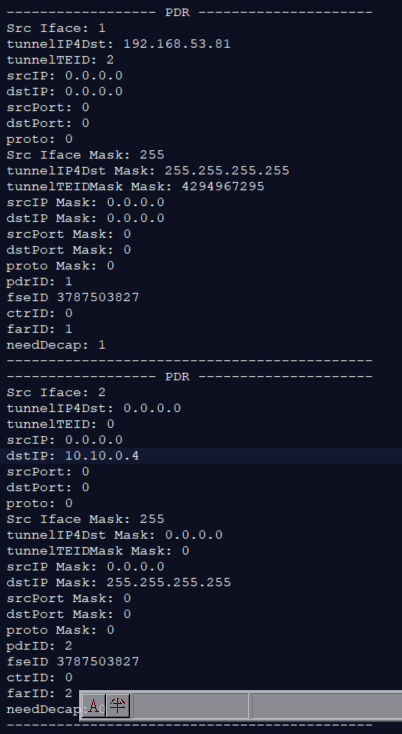

However, something went wrong with the downlink traffic. Since I didn't see the ping reply (GTP-encapsulated ICMP) packet, I checked the GUI of the DPDK UPF and found that the downlink packets could not match the pdrLookup table.

Logs

Here are some logs from my attempts to figure out what might be wrong.

First, I ran tcpdump on the input port of the pdrLookup module to make sure that the contents of the ICMP reply packet are correct:

(See the attached file beforePdrLookUp.zip for details.)

I also ran tcpdump on output ports 1 and 2 of the pdrLookup module:

Output port 1 of pdrLookup (correct, uplink and decap):

(See the attached file afterPdrLookUpPort1.zip for details.)

Output port 2 of pdrLookup (mismatch, downlink and encap):

(See the attached file afterPdrLookUpPort2.zip for details.)

However, I could not see anything abnormal in the packet contents.

Hence I checked the bess-pfcpiface log to see whether the packet should match a PDR and FAR rule.

(The detailed bess-pfcpiface logs are in bess-pfcpiface.zip.)

For downlink traffic (please correct me if I am mistaken), since the destination IP of the reply packet is 10.10.0.4 and the mask is 255.255.255.255 (a unique IP), it should hit only the second PDR rule. I think something may have gone wrong here, but I could not dig deeper into this bug.

I would really appreciate any further suggestions or methods for debugging.

Thank you in advance!

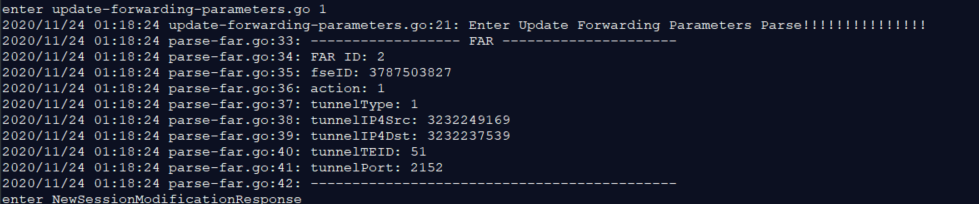

Additional context

If the reply packets did match the second rule of the pdrLookup table, I think they should then hit the FAR ID 2 rule and be encapsulated into a GTP packet that goes from 192.168.53.81:2152 (DEC 3232249169, N3 of omec-UPF) to 192.168.7.227:2152 (DEC 3232237539, N3 of the Spirent gNB), with TEID 0x33 (DEC 51).

(The detailed packets between the SMF and the UPF are in smfInPod.zip.)
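The decimal values quoted above can be reproduced with Python's standard `ipaddress` module, which is handy when comparing dotted addresses from a capture against the integers printed in the pfcpiface log. The helper name is illustrative.

```python
import ipaddress


def ipv4_to_int(addr):
    """Convert a dotted-quad IPv4 address to its 32-bit integer value."""
    return int(ipaddress.IPv4Address(addr))


if __name__ == "__main__":
    print(ipv4_to_int("192.168.53.81"))   # N3 address of the UPF in this report
    print(ipv4_to_int("192.168.7.227"))   # N3 address of the gNB in this report
    print(int("33", 16))                  # TEID written in hex, as a decimal
```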

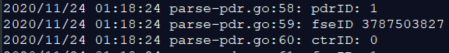

An implied question

I noticed that even though Wireshark shows the F-SEID as 0xe5063cf3e1c0b8d3, the pfcpiface log gives an fseID of 3787503827, which is just 4 bytes (0xe1c0b8d3). I don't know whether this is a problem.
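The relationship between the two numbers can be checked directly: the logged value is exactly the low 32 bits of the 64-bit F-SEID. The pdrLookup dump elsewhere on this page also lists `fseid` as a 4-byte metadata field, which is consistent with the upper half being dropped somewhere on the way. Whether that truncation is intended is not established here; the sketch only confirms the arithmetic.

```python
FSEID_FROM_WIRESHARK = 0xE5063CF3E1C0B8D3  # 64-bit value seen on the wire
FSEID_FROM_LOG = 3787503827                # value printed by pfcpiface


def low32(value):
    """Keep only the least significant 32 bits of an integer."""
    return value & 0xFFFFFFFF


if __name__ == "__main__":
    print(hex(low32(FSEID_FROM_WIRESHARK)))
    print(low32(FSEID_FROM_WIRESHARK) == FSEID_FROM_LOG)
```

One consequence worth keeping in mind: two sessions whose F-SEIDs differ only in the upper 32 bits would become indistinguishable after such a truncation.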

Describe the bug

Set up the configuration for sim mode and set the MAC address appropriately.

# DOCKER_BUILDKIT=0 ./docker_setup.sh &> logs/20210413a_docker_setup.sh_sim_only_w_mac_addrs

# docker exec bess-pfcpiface pfcpiface -config /conf/upf.json -pfcpsim

2021/04/13 19:18:51 main.go:127: {sim 50000 {ens803f2} {ens803f3} {172.17.0.1 spgwc false 10.250.0.0/16 8080} {172.17.0.1/32 onos 51001 10.250.0.0/24} false {16.0.0.1 11.1.1.129 13.1.1.199 6.6.6.6 9.9.9.9 0x30000000 0x90000000}}

2021/04/13 19:18:51 bess.go:249: setUpfInfo bess

2021/04/13 19:18:51 bess.go:253: IP pool : 10.250.0.0/16

2021/04/13 19:18:51 main.go:114: Access IP: 198.18.0.1

2021/04/13 19:18:51 main.go:114: Core IP: 198.19.0.1

2021/04/13 19:18:51 bess.go:268: Dest address 172.17.0.1

2021/04/13 19:18:51 bess.go:270: SPGWU/UPF address IP: 172.18.0.2

2021/04/13 19:18:51 bess.go:279: bessIP localhost:10514

2021/04/13 19:18:51 pfcpsim.go:31: sent heartbeat request to: 127.0.0.1:8805

2021/04/13 19:18:51 pfcpsim.go:36: read udp 127.0.0.1:44286->127.0.0.1:8805: recvfrom: connection refused
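A "connection refused" on a UDP read generally means an ICMP port-unreachable came back, that is, nothing was bound to 127.0.0.1:8805 in that network namespace at that moment. The sketch below builds a minimal PFCP Heartbeat Request (header plus a Recovery Time Stamp IE, as the message is commonly laid out) and reports how the peer reacts. The function names are illustrative, and the script is a probe for debugging, not a replacement for pfcpsim.

```python
import socket
import struct
import time

NTP_EPOCH_OFFSET = 2208988800  # seconds between 1900-01-01 and 1970-01-01


def build_heartbeat_request(seq, recovery_unix_time):
    """Build a PFCP Heartbeat Request with a Recovery Time Stamp IE."""
    ntp_seconds = (int(recovery_unix_time) + NTP_EPOCH_OFFSET) & 0xFFFFFFFF
    ie = struct.pack("!HHI", 96, 4, ntp_seconds)   # IE type 96, length 4
    body = seq.to_bytes(3, "big") + b"\x00" + ie   # sequence number + spare + IE
    # Flags 0x20 = version 1 without SEID; message type 1 = Heartbeat Request.
    # The length field counts everything after the first 4 header bytes.
    return struct.pack("!BBH", 0x20, 1, len(body)) + body


def probe(host, port=8805, timeout=2.0):
    """Send one heartbeat and return 'reply', 'refused' or 'timeout'."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.connect((host, port))
        try:
            sock.send(build_heartbeat_request(1, time.time()))
            sock.recv(2048)
            return "reply"
        except ConnectionRefusedError:
            return "refused"   # nothing is listening on that address/port
        except socket.timeout:
            return "timeout"   # packet went somewhere, but no answer came back
```

Calling `probe("127.0.0.1")` from inside the same container as pfcpsim, and then again with the UPF address printed in the log, would show whether the listener is bound to a different address than the one the simulator targets.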

To Reproduce

See above.

gRPC sim mode seems to work.

Expected behavior

Logs

Additional context

What is the difference in the config setup between "gRPC sim mode" and "PFCP sim mode"? Thanks.

Is your feature request related to a problem? Please describe.

No. But it may turn out to be a useful feature to display ongoing statistics.

Describe the solution you'd like

Users can inquire about the status of each submodule of the pipeline on the fly.

Describe alternatives you've considered


Additional context


Hi,

I think the k8s manifest file upf-k8s.yaml needs to be updated; I could not find some of the parameters in the conf/upf.json file.

Also, kindly change the manifest from a Pod to a Deployment.

The exact match and wildcard match modules don't have a module destructor implemented (even in vanilla BESS), which leads to resource leakage in some cases.

This issue is reproducible only with a unit test case.

In the current DDN feature fix, we receive the fseid from the fast path to notify that downlink data has arrived for an idle UE.

We then loop over the PDRs, search for the downlink PDR, and send that information in the Session Report Request towards the SPGW/SMF.

Instead of searching for the downlink PDR, we should receive from the fast path, along with the fseid, the ID of the PDR that the packet matched. This PDR ID can then be sent to the SPGW in the Session Report Request.

pfcpiface/messages.go

serep.Header.SEID = session.remoteSEID

var pdrID uint32

for _, pdr := range session.pdrs {

if pdr.srcIface == core {

pdrID = pdr.pdrID

break

}

}
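The proposed change can be sketched as a selection rule: prefer the PDR ID reported by the fast path, and fall back to the current search only when none is provided. The Python below is an illustration of that logic only, not the pfcpiface code; `CORE`, the dictionary layout, and `select_report_pdr_id` are hypothetical stand-ins for the Go names in the excerpt above.

```python
CORE = 1  # hypothetical stand-in for the `core` source-interface constant


def select_report_pdr_id(session_pdrs, matched_pdr_id=None):
    """Pick the PDR ID to put into the Session Report Request.

    matched_pdr_id is the ID the fast path would report alongside the fseid
    under the proposal; when it is missing, fall back to the existing
    behaviour of taking the first PDR whose source interface is core.
    """
    if matched_pdr_id is not None:
        return matched_pdr_id
    for pdr in session_pdrs:
        if pdr["src_iface"] == CORE:
            return pdr["pdr_id"]
    return 0  # mirrors the zero value of the Go `pdrID` when nothing matches


if __name__ == "__main__":
    pdrs = [{"pdr_id": 1, "src_iface": 0}, {"pdr_id": 2, "src_iface": CORE}]
    print(select_report_pdr_id(pdrs))       # fallback search
    print(select_report_pdr_id(pdrs, 7))    # fast path supplied the ID
```

The fallback matters for sessions with more than one downlink PDR: the search always returns the first one, whereas the fast path knows which rule the buffered packet actually hit.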

Describe the bug

Latest version of pyroute2 (0.6.1) requires mitogen python package, causing the below command to fail

./bessctl run up4

To Reproduce

Steps to reproduce the behavior:

docker system prune -af --volumes

./docker_setup.sh

Expected behavior

docker_setup.sh to not error out on this command

./bessctl run up4

Logs

0326a5f9ace0ad6b3c8d7fac13d049957f86ff629647410e99a592a2001852d2

a75476ef7453fa28bc332207c972d44ba8980be369bff78cfcec128ac9570373

*** Error: Unhandled exception in the configuration script (most recent call last)

File "/opt/bess/bessctl/conf/up4.bess", line 6, in <module>

from conf.parser import *

File "/opt/bess/bessctl/conf/parser.py", line 8, in <module>

from conf.utils import *

File "/opt/bess/bessctl/conf/utils.py", line 14, in <module>

from pyroute2 import NDB

File "/usr/local/lib/python3.9/site-packages/pyroute2/__init__.py", line 69, in <module>

globals()[entry_point.name] = loaded = entry_point.load()

File "/usr/local/lib/python3.9/importlib/metadata.py", line 77, in load

module = import_module(match.group('module'))

File "/usr/local/lib/python3.9/importlib/__init__.py", line 127, in import_module

return _bootstrap._gcd_import(name[level:], package, level)

File "/usr/local/lib/python3.9/site-packages/pr2modules/cli/console.py", line 5, in <module>

from pr2modules.ndb.main import NDB

File "/usr/local/lib/python3.9/site-packages/pr2modules/ndb/main.py", line 184, in <module>

from .source import (Source,

File "/usr/local/lib/python3.9/site-packages/pr2modules/ndb/source.py", line 80, in <module>

from pr2modules.iproute.remote import RemoteIPRoute

File "/usr/local/lib/python3.9/site-packages/pr2modules/iproute/remote.py", line 3, in <module>

import mitogen.core

ModuleNotFoundError: No module named 'mitogen'

Command failed: run up4
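The traceback above ends in a missing `mitogen` import that only surfaces when `pyroute2` is loaded. A pre-flight check along the following lines could report such gaps before `bessctl run up4` is attempted. It is a sketch: `missing_modules` is an illustrative helper, and the module list is taken from the names visible in this report rather than from any authoritative requirements file.

```python
import importlib.util


def missing_modules(names):
    """Return the top-level module names that cannot be found for import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Top-level packages named in the traceback and Dockerfile excerpt.
    wanted = ["pyroute2", "mitogen", "scapy", "psutil"]
    missing = missing_modules(wanted)
    if missing:
        print("missing Python modules:", ", ".join(missing))
    else:
        print("all listed modules are importable")
```

`find_spec` only locates a module without executing it, so the check itself does not trigger the import chain that fails in the traceback.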

Additional context

Adding mitogen allows us to go forward, but there is warning regarding IPDB usage

@@ -82,6 +82,7 @@ RUN apt-get update && \

flask \

grpcio \

iptools \

+ mitogen \

protobuf \

psutil \

pyroute2 \

5167ebd37b53eaf67be9827b7c0dca90be586961fba943befb25c9ec03be3851

8419a05764c58c2240eed4d517ca02d6e70962f341a401cea474ad337444edb1

Deprecation warning https://docs.pyroute2.org/ipdb_toc.html

Deprecation warning https://docs.pyroute2.org/ipdb_toc.html

Deprecation warning https://docs.pyroute2.org/ipdb_toc.html

Deprecation warning https://docs.pyroute2.org/ipdb_toc.html

max_ip_defrag_flows value not set. Not installing IP4Defrag module.

ip_frag_with_eth_mtu value not set. Not installing IP4Frag module.

Setting up port access on worker ids [0]

Setting up port core on worker ids [0]

d8e4117c4cec033309e1f4b8b3d1ad459996fb895ea8063cfeba38fc71f61475

9bb8c2c10f8249bcef5688c7e71642eed90c4ab98eff105451fd0966cb751291

Another option we tried was pinning pyroute2 to 0.5.19, the previous working version:

@@ -84,7 +84,7 @@ RUN apt-get update && \

iptools \

protobuf \

psutil \

- pyroute2 \

+ pyroute2==0.5.19 \

scapy && \

apt-get --purge remove -y \

gcc

We would need outer header removal and outer header creation for this to work. Currently there are FAR Tunnel and FAR Forward, but FAR Tunnel is not a flag in the FAR. So maybe it's better to split tunnel creation/removal from FAR execution?

A PCAP file would confirm what the PFCP IEs look like for N9.

Performance improvement in concurrent pipeline

=> ERROR [go-pb 2/2] RUN mkdir /bess_pb && protoc -I /usr/include -I /protobuf/ /protobuf/*.proto /protobuf/ports/*.proto --go_out=plugins=grpc:/bess_pb 0.5s

------

> [go-pb 2/2] RUN mkdir /bess_pb && protoc -I /usr/include -I /protobuf/ /protobuf/*.proto /protobuf/ports/*.proto --go_out=plugins=grpc:/bess_pb: #28 0.423 protoc-gen-go: unable to determine Go import path for "error.proto"

#28 0.423

#28 0.423 Please specify either:

#28 0.423 • a "go_package" option in the .proto source file, or

#28 0.423 • a "M" argument on the command line.

#28 0.423

#28 0.423 See https://developers.google.com/protocol-buffers/docs/reference/go-generated#package for more information.

#28 0.423

#28 0.425 --go_out: protoc-gen-go: Plugin failed with status code 1.

v1.4.3 seems to be the last working version.
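The protoc-gen-go error above names a missing `go_package` option as one of the two possible causes. As a rough sketch, the check below flags `.proto` sources that lack the option; the regular expression and the function name `lacks_go_package` are illustrative assumptions, not something taken from the repository or from protoc itself.

```python
import re

# Matches a top-level statement of the form: option go_package = "...";
GO_PACKAGE_RE = re.compile(
    r'^\s*option\s+go_package\s*=\s*"[^"]+"\s*;', re.MULTILINE
)


def lacks_go_package(proto_text):
    """Return True if the .proto source text declares no go_package option."""
    return GO_PACKAGE_RE.search(proto_text) is None


if __name__ == "__main__":
    # Made-up sample input, only to show the call
    sample = 'syntax = "proto3";\npackage demo;\n'
    print(lacks_go_package(sample))
```

Reading each file under /protobuf and passing its text to `lacks_go_package` would list the files that need either the option or an `M` argument on the command line.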

Is your feature request related to a problem? Please describe.

Yes. python 2.x is EOL.

Describe the solution you'd like

Porting conf/ to python 3.x.

Describe alternatives you've considered

Continue as is which is not acceptable.

#296 added basic QER lookup support. #268 implements the QoS module, which will replace exactmatch with a dedicated module for metering. Listed below are the things needed to integrate it -

Describe the bug

The BESS daemon crashes when the simulate create command is run.

To Reproduce

Configure docker_setup.sh and conf/upf.json in sim mode.

./docker_setup.sh;

docker exec bess-pfcpiface pfcpiface -config /conf/upf.json -simulate create;

Expected behavior

Successful installation of rules without BESS daemon crash

Logs

F0507 08:34:31.306718 32 debug.cc:405] A critical error has occured. Aborting...

Signal: 11 (Segmentation fault), si_code: 1 (SEGV_MAPERR: address not mapped to object)

pid: 1, tid: 32, address: 0x8, IP: 0x560a28e831c4

Backtrace (recent calls first) ---

(0): bessd(_ZN13WildcardMatch12ProcessBatchEP7ContextPN4bess11PacketBatchE+0x6d4) [0x560a28e831c4]

(1): bessd(_ZNK4TaskclEP7Context+0x10d) [0x560a28c7722d]

(2): bessd(_ZN4bess16DefaultScheduler12ScheduleLoopEv+0x1c8) [0x560a28c737d8]

(3): bessd(_ZN6Worker3RunEPv+0x201) [0x560a28c70031]

(4): bessd(_Z10run_workerPv+0x7c) [0x560a28c7030c]

(5): bessd(+0x1429fde) [0x560a299d6fde]

(6): /lib/x86_64-linux-gnu/libpthread.so.0(+0x7fa2) [0x7f76e80c4fa2]

start_thread at ??:?

(7): /lib/x86_64-linux-gnu/libc.so.6(clone+0x3e) [0x7f76e7e534ce]

clone at ??:?

Additional context

Pausing the daemon before inserting works as expected. f589be

git checkout -b hotfix-sim

git revert f589be

This will have 2 parts -

Is your feature request related to a problem? Please describe.

Yes. Unable to unit test changes.

Describe the solution you'd like

We already have code to do simtest of add/delete sessions. We should test scripts which do not rely on interfaces and CP. This way basic tests can be done in Actions.

Describe alternatives you've considered

Additional context

Hello,

I have managed to start all the components of the setup and send traffic to the UPF. However, I don't get correct latency measurements. The pipeline contains the timestamp and measure steps, but every value of the upf_latency_ns metric is 0.

The logs of the bess container show these warnings:

W0923 10:48:21.765167 37 metadata.cc:77] Metadata attr timestamp/8 of module ens1f0_measure has no upstream module that sets the value!

W0923 10:48:21.765180 37 metadata.cc:77] Metadata attr timestamp/8 of module ens1f1_measure has no upstream module that sets the value!

The start and the end of the pipeline are:

Do I need to do anything else to activate the latency metrics or is there a bug?

Thank you in advance,

Georgia

pktgen is running while the commands below are run: 4 workers, 50K sessions and 50K flows, packet size 128.

$ docker exec bess-pfcpiface pfcpiface -config /conf/upf.json -simulate create;

2021/06/03 14:12:20 main.go:131: { 50000 {access} {core} {172.17.0.1 false 10.250.0.0/16 8080 } {172.17.0.1/32 onos 51001 10.250.0.0/24} false {16.0.0.1 11.1.1.129 13.1.1.199 6.6.6.6 9.9.9.9 0x30000000 0x90000000} 0 0 }

2021/06/03 14:12:20 upf.go:83: Dest address 172.17.0.1

2021/06/03 14:12:20 upf.go:85: SPGWU/UPF address IP: 172.17.0.2

2021/06/03 14:12:20 upf.go:100: UPF Node IP : 0.0.0.0

2021/06/03 14:12:20 upf.go:101: UPF Local IP : 172.17.0.2

2021/06/03 14:12:20 bess.go:275: setUpfInfo bess

2021/06/03 14:12:20 bess.go:279: IP pool : 10.250.0.0/16

2021/06/03 14:12:20 main.go:118: Access IP: 198.18.0.1

2021/06/03 14:12:20 main.go:118: Core IP: 198.19.0.1

2021/06/03 14:12:20 bess.go:288: bessIP localhost:10514

2021/06/03 14:12:20 main.go:170: create sessions: 50000

2021/06/03 14:12:20 bess.go:256: dial error: dial unixpacket /tmp/notifycp: connect: no such file or directory

2021/06/03 14:12:51 bess.go:443: Sessions/s: 1598.9820863664613

$ docker exec bess-pfcpiface pfcpiface -config /conf/upf.json -simulate delete;

2021/06/03 14:13:01 main.go:131: { 50000 {access} {core} {172.17.0.1 false 10.250.0.0/16 8080 } {172.17.0.1/32 onos 51001 10.250.0.0/24} false {16.0.0.1 11.1.1.129 13.1.1.199 6.6.6.6 9.9.9.9 0x30000000 0x90000000} 0 0 }

2021/06/03 14:13:01 upf.go:83: Dest address 172.17.0.1

2021/06/03 14:13:01 upf.go:85: SPGWU/UPF address IP: 172.17.0.2

2021/06/03 14:13:01 upf.go:100: UPF Node IP : 0.0.0.0

2021/06/03 14:13:01 upf.go:101: UPF Local IP : 172.17.0.2

2021/06/03 14:13:01 bess.go:275: setUpfInfo bess

2021/06/03 14:13:01 bess.go:279: IP pool : 10.250.0.0/16

2021/06/03 14:13:01 main.go:118: Access IP: 198.18.0.1

2021/06/03 14:13:01 main.go:118: Core IP: 198.19.0.1

2021/06/03 14:13:01 bess.go:288: bessIP localhost:10514

2021/06/03 14:13:01 main.go:170: delete sessions: 50000

2021/06/03 14:13:01 bess.go:256: dial error: dial unixpacket /tmp/notifycp: connect: no such file or directory

2021/06/03 14:13:31 bess.go:443: Sessions/s: 1652.459380108746
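As a quick sanity check on the two rates logged above, the elapsed time for each run can be recovered by dividing the session count by the reported rate. This is only arithmetic on the numbers already in the log; the helper name `seconds_for` is made up for the example.

```python
def seconds_for(sessions, rate_per_second):
    """Elapsed time implied by a session count and a sessions-per-second rate."""
    return sessions / rate_per_second


# Values copied from the two log excerpts above
create_s = seconds_for(50000, 1598.9820863664613)
delete_s = seconds_for(50000, 1652.459380108746)

print(f"create: {create_s:.1f} s, delete: {delete_s:.1f} s")
```

Both results come out at roughly half a minute, which agrees with the log timestamps (14:12:20 to 14:12:51 for create, 14:13:01 to 14:13:31 for delete).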

Is your feature request related to a problem? Please describe.

Going from 1 worker to 2 workers is currently prevented by the counter module. #158 (comment)

This was updated to be Worker::kMaxWorkers just to get going with multi-queue/worker implementation.

Describe the solution you'd like

Make the counter module's data structure multi-writer concurrent.
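One common way to get multi-writer behaviour without locks is to give each worker its own slot and sum the slots on read. The sketch below only illustrates that idea in Python; it is not the counter module's actual design, and `ShardedCounter` and `run` are hypothetical names.

```python
import threading


class ShardedCounter:
    """One slot per worker: each worker writes only its own slot, readers sum all slots."""

    def __init__(self, num_workers):
        self._slots = [0] * num_workers

    def add(self, worker_id, n=1):
        # Only the owning worker ever writes this slot, so writers never contend.
        self._slots[worker_id] += n

    def total(self):
        return sum(self._slots)


def run(num_workers=2, increments=10000):
    """Start one thread per worker, let each bump its own slot, return the total."""
    counter = ShardedCounter(num_workers)

    def work(worker_id):
        for _ in range(increments):
            counter.add(worker_id)

    threads = [threading.Thread(target=work, args=(w,)) for w in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.total()
```

The trade-off is that a reader may see a slightly stale total while workers are still running, which is usually acceptable for statistics counters.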

Describe alternatives you've considered

None

Additional context

None

Is your feature request related to a problem? Please describe.

No

Describe the solution you'd like

Currently we structure the module source code around BESS's core source code. Should we instead refactor it to follow sample_plugin?

Describe alternatives you've considered

We can continue the way we are doing it today

Additional context

No

BESS does not support concurrent hash tables, so operations (insert, lookup, etc.) cannot happen simultaneously and the daemon has to be paused to insert rules, which ultimately leads to packet loss.

Concurrent pipelines will also be useful for scaling and for adding more pipelines in parallel.

One option is to link the DPDK hash library with BESS.

I tried running docker_setup.sh in both dpdk and sim mode, but I always get the error "Error response from daemon: Container c1e2ddc79fd165e318f8b467a59a8f08683792d7ef8e761bd9ed95d4b0e662a4 is restarting, wait until the container is running"

I have pasted the startup logs below, please let me know if I am missing something. Thanks.

[upf-epc# dpdk-devbind -s

0000:02:06.0 '82545EM Gigabit Ethernet Controller (Copper) 100f' drv=vfio-pci unused=e1000

0000:02:07.0 '82545EM Gigabit Ethernet Controller (Copper) 100f' drv=vfio-pci unused=e1000

[upf-epc# lspci -n -s 0000:02:06.0

02:06.0 0200: 8086:100f (rev 01)

[upf-epc# lspci -n -s 0000:02:07.0

02:07.0 0200: 8086:100f (rev 01)

I made the change below in docker_setup.sh because I have only one vfio:

#DEVICES=${DEVICES:-'--device=/dev/vfio/48 --device=/dev/vfio/49 --device=/dev/vfio/vfio'}

DEVICES=${DEVICES:-'--device=/dev/vfio/5 --device=/dev/vfio/vfio'}

[upf-epc# ./docker_setup.sh

pause

bess

Error response from daemon: No such container: bess-routectl

Error response from daemon: No such container: bess-web

Error response from daemon: No such container: bess-cpiface

Error response from daemon: No such container: bess-pfcpiface

pause

bess

Error: No such container: bess-routectl

Error: No such container: bess-web

Error: No such container: bess-cpiface

Error: No such container: bess-pfcpiface

for target in bess cpiface pfcpiface; do

DOCKER_BUILDKIT=1 docker build --pull --build-arg MAKEFLAGS=-j4 --build-arg CPU --build-arg ENABLE_NTF=0

--target $target

--tag upf-epc-$target:0.3.0-dev

--label org.opencontainers.image.source="https://github.com/omec-project/upf-epc"

--label org.label.schema.version="0.3.0-dev"

--label org.label.schema.vcs.url="https://github.com/omec-project/upf-epc.git"

--label org.label.schema.vcs.ref="unknown"

--label org.label.schema.build.date="2021-06-07T18:29:48Z"

--label org.opencord.vcs.commit.date="unknown"

.

|| exit 1;

done

[+] Building 800.6s (31/31) FINISHED

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 4.27kB 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for ghcr.io/omec-project/upf-epc/bess_build:latest 2.0s

=> [internal] load metadata for docker.io/library/python:3.9.5-slim 2.5s

=> [bess-build 1/17] FROM ghcr.io/omec-project/upf-epc/bess_build@sha256:93d4174d661ac5e90cd261e97b0e2dc65c266a496807242e841d174297ea48b5 59.3s

=> => resolve ghcr.io/omec-project/upf-epc/bess_build@sha256:93d4174d661ac5e90cd261e97b0e2dc65c266a496807242e841d174297ea48b5 0.0s

=> => sha256:ed0ffe63a351dc5701eef3fb742fc3b67b0c3add6b4a6cae9cbb66ec5f5971c4 115B / 115B 0.0s

=> => sha256:d2e110be24e168b42c1a2ddbc4a476a217b73cccdba69cdcb212b812a88f5726 857B / 857B 0.0s

=> => sha256:889a7173dcfeb409f9d88054a97ab2445f5a799a823f719a5573365ee3662b6f 189B / 189B 0.0s

=> => sha256:188f335b3cffef4ff475044747b7d6f57618395222d3641be1bab9f056bbc855 248B / 248B 0.0s

=> => sha256:e743d447eb1fd36dbf13cbbf8f7fac73e638f3ba33e97258f62596a979634504 344B / 344B 0.0s

=> => sha256:1a5a599674c6ee01ae95280534d79508e0e83d6647fa955a1eefeaf938c77176 253.00MB / 253.00MB 0.0s

=> => sha256:3ccf95a3c2895092e1b77d34a0f475574a0a906f450abd1b8ec3ba40c3218bcd 113B / 113B 0.0s

=> => sha256:83e4096d08cbb6a45fef7be033811a2f281009acc0549fa5eb6e9f56ca45ea80 683.36MB / 683.36MB 0.0s

=> => sha256:93d4174d661ac5e90cd261e97b0e2dc65c266a496807242e841d174297ea48b5 2.61kB / 2.61kB 0.0s

=> => sha256:b03859e89aff370f6156c52afd303cec466095bf75b38ec3af33abaf95753723 6.28kB / 6.28kB 0.0s

=> => sha256:4bbfd2c87b7524455f144a03bf387c88b6d4200e5e0df9139a9d5e79110f89ca 26.70MB / 26.70MB 0.0s

=> => sha256:328aa0e08772a2c37b04465d213ed2d9fa9d965c95edbf8d44c35f4ddf5a69f5 1.03kB / 1.03kB 0.0s

=> => sha256:b630e9f43464e1f0c77fd40d0e9104f9120adf1d06d1bb8f170d4b5fedbaeb63 439B / 439B 0.0s

=> => extracting sha256:4bbfd2c87b7524455f144a03bf387c88b6d4200e5e0df9139a9d5e79110f89ca 1.2s

=> => extracting sha256:d2e110be24e168b42c1a2ddbc4a476a217b73cccdba69cdcb212b812a88f5726 0.0s

=> => extracting sha256:889a7173dcfeb409f9d88054a97ab2445f5a799a823f719a5573365ee3662b6f 0.0s

=> => extracting sha256:188f335b3cffef4ff475044747b7d6f57618395222d3641be1bab9f056bbc855 0.0s

=> => extracting sha256:328aa0e08772a2c37b04465d213ed2d9fa9d965c95edbf8d44c35f4ddf5a69f5 0.0s

=> => extracting sha256:e743d447eb1fd36dbf13cbbf8f7fac73e638f3ba33e97258f62596a979634504 0.0s

=> => extracting sha256:b630e9f43464e1f0c77fd40d0e9104f9120adf1d06d1bb8f170d4b5fedbaeb63 0.0s

=> => extracting sha256:1a5a599674c6ee01ae95280534d79508e0e83d6647fa955a1eefeaf938c77176 20.5s

=> => extracting sha256:3ccf95a3c2895092e1b77d34a0f475574a0a906f450abd1b8ec3ba40c3218bcd 0.0s

=> => extracting sha256:83e4096d08cbb6a45fef7be033811a2f281009acc0549fa5eb6e9f56ca45ea80 35.2s

=> => extracting sha256:ed0ffe63a351dc5701eef3fb742fc3b67b0c3add6b4a6cae9cbb66ec5f5971c4 0.0s

=> [internal] load build context 0.0s

=> => transferring context: 399.62kB 0.0s

=> [bess 1/8] FROM docker.io/library/python:3.9.5-slim@sha256:80b238ba357d98813bcc425f505dfa238f49cf5f895492fc2667af118dccaa44 0.1s

=> => resolve docker.io/library/python:3.9.5-slim@sha256:80b238ba357d98813bcc425f505dfa238f49cf5f895492fc2667af118dccaa44 0.0s

=> => sha256:609da079b03acb885de40c50f41c9d84f0aaba3fc0142abf01b0b5331de2b2b3 7.63kB / 7.63kB 0.0s

=> => sha256:69692152171afee1fd341febc390747cfca2ff302f2881d8b394e786af605696 27.15MB / 27.15MB 0.0s

=> => sha256:59773387c0e7ec4b901649cd3530cde9a32e6a76fccaf0b015119c329cb6af78 2.77MB / 2.77MB 0.0s

=> => sha256:3fc84e535e87f5dfd4ab5a3086531224f67051d7f7853c659d3dd0002b7c5a45 10.93MB / 10.93MB 0.0s

=> => sha256:68ebeebdab6f71c7a8dc8532e56ed6d69171502240306980ae78e8d13b03dde4 234B / 234B 0.0s

=> => sha256:3d3af2ef8baa6583768806f88dc0bf0a4aab6ee256cb2278e2412ddc035f3577 2.64MB / 2.64MB 0.0s

=> => sha256:80b238ba357d98813bcc425f505dfa238f49cf5f895492fc2667af118dccaa44 1.86kB / 1.86kB 0.0s

=> => sha256:1e554b804d653d5d49271bd15c6d53665be5f3097a9fc14deb2c7405e10fc406 1.37kB / 1.37kB 0.0s

=> [bess 2/8] RUN apt-get update && apt-get install -y --no-install-recommends gcc libgraph-easy-perl iproute2 iptables iputi 76.8s

=> [bess-build 2/17] RUN apt-get update && apt-get -y install --no-install-recommends ca-certificates libelf-dev 34.8s

=> [bess-build 3/17] WORKDIR /libbpf 0.0s

=> [bess-build 4/17] RUN curl -L https://github.com/libbpf/libbpf/tarball/v0.3 | tar xz -C . --strip-components=1 && cp include/uapi/linux/if_xdp.h /usr/include/ 9.1s

=> [bess-build 5/17] WORKDIR /bess 0.0s

=> [bess-build 6/17] RUN curl -L https://github.com/NetSys/bess/tarball/dpdk-2011 | tar xz -C . --strip-components=1 3.3s

=> [bess-build 7/17] COPY patches/dpdk/* deps/ 0.0s

=> [bess-build 8/17] COPY patches/bess patches 0.0s

=> [bess-build 9/17] RUN cat patches/* | patch -p1 && ./build.py dpdk 465.3s

=> [bess-build 10/17] RUN rm -f /usr/lib64/libbpf.so* 1.3s

=> [bess-build 11/17] RUN mkdir -p plugins 0.5s

=> [bess-build 12/17] RUN mv sample_plugin plugins 0.5s

=> [bess-build 13/17] COPY install_ntf.sh . 0.0s

=> [bess-build 14/17] RUN ./install_ntf.sh 0.7s

=> [bess-build 15/17] COPY core/ core/ 0.4s

=> [bess-build 16/17] COPY build_bess.sh . 0.0s

=> [bess-build 17/17] RUN ./build_bess.sh && cp bin/bessd /bin && mkdir -p /bin/modules && cp core/modules/.so /bin/modules && mkdir -p /opt/bess && 214.4s

=> [bess 3/8] COPY --from=bess-build /opt/bess /opt/bess 0.1s

=> [bess 4/8] COPY --from=bess-build /bin/bessd /bin/bessd 0.5s

=> [bess 5/8] COPY --from=bess-build /bin/modules /bin/modules 0.1s

=> [bess 6/8] COPY conf /opt/bess/bessctl/conf 0.1s

=> [bess 7/8] RUN ln -s /opt/bess/bessctl/bessctl /bin 0.6s

=> [bess 8/8] WORKDIR /opt/bess/bessctl 0.0s

=> exporting to image 5.9s

=> => exporting layers 5.9s

=> => writing image sha256:bc8a06cc2e8fb7845bca892671a507d7f607276adedd313cf10fe7c4e50a5378 0.0s

=> => naming to docker.io/library/upf-epc-bess:0.3.0-dev 0.0s

[+] Building 113.8s (32/32) FINISHED

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 38B 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for ghcr.io/omec-project/upf-epc/bess_build:latest 1.1s

=> [internal] load metadata for docker.io/library/ubuntu:bionic 2.6s

=> CACHED [bess-build 1/17] FROM ghcr.io/omec-project/upf-epc/bess_build@sha256:93d4174d661ac5e90cd261e97b0e2dc65c266a496807242e841d174297ea48b5 0.0s

=> [cpiface 1/3] FROM docker.io/library/ubuntu:bionic@sha256:67b730ece0d34429b455c08124ffd444f021b81e06fa2d9cd0adaf0d0b875182 0.1s

=> => resolve docker.io/library/ubuntu:bionic@sha256:67b730ece0d34429b455c08124ffd444f021b81e06fa2d9cd0adaf0d0b875182 0.0s

=> => sha256:67b730ece0d34429b455c08124ffd444f021b81e06fa2d9cd0adaf0d0b875182 1.41kB / 1.41kB 0.0s

=> => sha256:ceed028aae0eac7db9dd33bd89c14d5a9991d73443b0de24ba0db250f47491d2 943B / 943B 0.0s

=> => sha256:81bcf752ac3dc8a12d54908ecdfe98a857c84285e5d50bed1d10f9812377abd6 3.32kB / 3.32kB 0.0s

=> => sha256:4bbfd2c87b7524455f144a03bf387c88b6d4200e5e0df9139a9d5e79110f89ca 26.70MB / 26.70MB 0.0s

=> => sha256:d2e110be24e168b42c1a2ddbc4a476a217b73cccdba69cdcb212b812a88f5726 857B / 857B 0.0s

=> => sha256:889a7173dcfeb409f9d88054a97ab2445f5a799a823f719a5573365ee3662b6f 189B / 189B 0.0s

=> [internal] load build context 0.0s

=> => transferring context: 61.55kB 0.0s

=> [cpiface-build 2/7] RUN apt-get update -y && apt-get install -y libzmq3-dev libjsoncpp-dev 101.4s

=> CACHED [bess-build 2/17] RUN apt-get update && apt-get -y install --no-install-recommends ca-certificates libelf-dev 0.0s

=> CACHED [bess-build 3/17] WORKDIR /libbpf 0.0s

=> CACHED [bess-build 4/17] RUN curl -L https://github.com/libbpf/libbpf/tarball/v0.3 | tar xz -C . --strip-components=1 && cp include/uapi/linux/if_xdp.h /usr/i 0.0s

=> CACHED [bess-build 5/17] WORKDIR /bess 0.0s

=> CACHED [bess-build 6/17] RUN curl -L https://github.com/NetSys/bess/tarball/dpdk-2011 | tar xz -C . --strip-components=1 0.0s

=> CACHED [bess-build 7/17] COPY patches/dpdk/ deps/ 0.0s

=> CACHED [bess-build 8/17] COPY patches/bess patches 0.0s

=> CACHED [bess-build 9/17] RUN cat patches/* | patch -p1 && ./build.py dpdk 0.0s

=> CACHED [bess-build 10/17] RUN rm -f /usr/lib64/libbpf.so* 0.0s

=> CACHED [bess-build 11/17] RUN mkdir -p plugins 0.0s

=> CACHED [bess-build 12/17] RUN mv sample_plugin plugins 0.0s

=> CACHED [bess-build 13/17] COPY install_ntf.sh . 0.0s

=> CACHED [bess-build 14/17] RUN ./install_ntf.sh 0.0s

=> CACHED [bess-build 15/17] COPY core/ core/ 0.0s

=> CACHED [bess-build 16/17] COPY build_bess.sh . 0.0s

=> CACHED [bess-build 17/17] RUN ./build_bess.sh && cp bin/bessd /bin && mkdir -p /bin/modules && cp core/modules/.so /bin/modules && mkdir -p /opt/bess 0.0s

=> [cpiface 2/3] RUN apt-get update && apt-get install -y --no-install-recommends libgoogle-glog0v5 libzmq5 && rm -rf /var/lib/apt/lists/ 99.5s

=> [cpiface-build 3/7] WORKDIR /cpiface 0.0s

=> [cpiface-build 4/7] COPY cpiface . 0.1s

=> [cpiface-build 5/7] COPY --from=bess-build /pb pb 0.2s

=> [cpiface-build 6/7] COPY core/utils/gtp_common.h . 0.0s

=> [cpiface-build 7/7] RUN make -j4 && cp zmq-cpiface /bin 9.0s

=> [cpiface 3/3] COPY --from=cpiface-build /bin/zmq-cpiface /bin 0.1s

=> exporting to image 0.1s

=> => exporting layers 0.1s

=> => writing image sha256:d2d1358ca3f230c389c3adc8e2426137ba0252eb99138df642875bd8bcc1b7c7 0.0s

=> => naming to docker.io/library/upf-epc-cpiface:0.3.0-dev 0.0s

[+] Building 34.7s (14/14) FINISHED

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 38B 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for docker.io/library/alpine:latest 2.4s

=> [internal] load metadata for docker.io/library/golang:latest 2.4s

=> [internal] load build context 0.6s

=> => transferring context: 16.04MB 0.6s

=> [pfcpiface 1/4] FROM docker.io/library/alpine@sha256:69e70a79f2d41ab5d637de98c1e0b055206ba40a8145e7bddb55ccc04e13cf8f 0.6s

=> => resolve docker.io/library/alpine@sha256:69e70a79f2d41ab5d637de98c1e0b055206ba40a8145e7bddb55ccc04e13cf8f 0.0s

=> => extracting sha256:540db60ca9383eac9e418f78490994d0af424aab7bf6d0e47ac8ed4e2e9bcbba 0.4s

=> => sha256:69e70a79f2d41ab5d637de98c1e0b055206ba40a8145e7bddb55ccc04e13cf8f 1.64kB / 1.64kB 0.0s

=> => sha256:def822f9851ca422481ec6fee59a9966f12b351c62ccb9aca841526ffaa9f748 528B / 528B 0.0s

=> => sha256:6dbb9cc54074106d46d4ccb330f2a40a682d49dda5f4844962b7dce9fe44aaec 1.47kB / 1.47kB 0.0s

=> => sha256:540db60ca9383eac9e418f78490994d0af424aab7bf6d0e47ac8ed4e2e9bcbba 2.81MB / 2.81MB 0.0s

=> [pfcpiface-build 1/4] FROM docker.io/library/golang@sha256:360bc82ac2b24e9ab6e5867eebac780920b92175bb2e9e1952dce15571699baa 16.8s

=> => resolve docker.io/library/golang@sha256:360bc82ac2b24e9ab6e5867eebac780920b92175bb2e9e1952dce15571699baa 0.0s

=> => sha256:65d943ee54c1fa196b54ab9a6673174c66eea04cfa1a4ac5b0328b74f066a4d9 51.84MB / 51.84MB 0.0s

=> => sha256:db807893dccf12b2476ed4f38061fb7b0e0091c1b91ebedd23fdd39924c33988 156B / 156B 0.0s

=> => sha256:be0e3a0f3ffa448b0bcbb9019edca692b8278407a44dc138c60e6f12f0218f87 1.79kB / 1.79kB 0.0s

=> => sha256:b09f7387a7195b1cfe0144557a8e33af2174426a4b76cb89e499093803d02e7b 7.00kB / 7.00kB 0.0s

=> => sha256:e8d62473a22dec9ffef056b2017968a9dc484c8f836fb6d79f46980b309e9138 7.83MB / 7.83MB 0.0s

=> => sha256:f2253e6fbefa9189360a7818eb45effaed9bcb4ff631741a32609b39a2b2c1a2 68.74MB / 68.74MB 0.0s

=> => sha256:186c77a2a53344f8e525fa90d18a869f8bebc3fa4aa6586d2040e2611eab57f8 129.05MB / 129.05MB 0.0s

=> => sha256:360bc82ac2b24e9ab6e5867eebac780920b92175bb2e9e1952dce15571699baa 2.36kB / 2.36kB 0.0s

=> => sha256:d960726af2bec62a87ceb07182f7b94c47be03909077e23d8226658f80b47f87 50.43MB / 50.43MB 0.0s

=> => sha256:8962bc0fad55b13afedfeb6ad5cb06fd065461cf3e1ae4e7aeae5eeb17b179df 10.00MB / 10.00MB 0.0s

=> => extracting sha256:d960726af2bec62a87ceb07182f7b94c47be03909077e23d8226658f80b47f87 2.7s

=> => extracting sha256:e8d62473a22dec9ffef056b2017968a9dc484c8f836fb6d79f46980b309e9138 0.4s

=> => extracting sha256:8962bc0fad55b13afedfeb6ad5cb06fd065461cf3e1ae4e7aeae5eeb17b179df 0.4s

=> => extracting sha256:65d943ee54c1fa196b54ab9a6673174c66eea04cfa1a4ac5b0328b74f066a4d9 2.8s

=> => extracting sha256:f2253e6fbefa9189360a7818eb45effaed9bcb4ff631741a32609b39a2b2c1a2 2.9s

=> => extracting sha256:186c77a2a53344f8e525fa90d18a869f8bebc3fa4aa6586d2040e2611eab57f8 6.3s

=> => extracting sha256:db807893dccf12b2476ed4f38061fb7b0e0091c1b91ebedd23fdd39924c33988 0.0s

=> [pfcpiface 2/4] COPY conf /opt/bess/bessctl/conf 0.2s

=> [pfcpiface 3/4] COPY conf/p4/bin/p4info.bin conf/p4/bin/p4info.txt conf/p4/bin/bmv2.json /bin/ 0.2s

=> [pfcpiface-build 2/4] WORKDIR /pfcpiface 0.5s

=> [pfcpiface-build 3/4] COPY pfcpiface . 0.6s

=> [pfcpiface-build 4/4] RUN CGO_ENABLED=0 go build -mod=vendor -o /bin/pfcpiface 13.9s

=> [pfcpiface 4/4] COPY --from=pfcpiface-build /bin/pfcpiface /bin 0.1s

=> exporting to image 0.1s

=> => exporting layers 0.1s

=> => writing image sha256:fe407ca92ec96a3482fbe528cd504da367a3278c0c572616a7cbb2803f07878f 0.0s

=> => naming to docker.io/library/upf-epc-pfcpiface:0.3.0-dev 0.0s

0c38d6b99c6b6e2cef11efa9e6e7e33503e425490304e8fef18e173be27deebe

c1e2ddc79fd165e318f8b467a59a8f08683792d7ef8e761bd9ed95d4b0e662a4

Error response from daemon: Container c1e2ddc79fd165e318f8b467a59a8f08683792d7ef8e761bd9ed95d4b0e662a4 is restarting, wait until the container is running

Is your feature request related to a problem? Please describe.

This feature request is not quite related to a problem.

Sometimes, when UPF data traffic behaves abnormally, the user may want to check the routing table or PFCP information, such as the contents of a FAR/PDR.

Would it be possible to let the user set the log level for each PFCP, so that we can get information like the following?

Is your feature request related to a problem? Please describe.

Build time is currently high due to grpc compilation from source.

Describe the solution you'd like

Explore use of packages or binary downloads.

I am trying to run upf-epc in simulation mode. The only change I made to ./docker_setup.sh was to update the CPU cores to be used.

I made all the changes specified in the INSTALL.md file, but I keep getting this error.

...

[+] Building 0.9s (14/14) FINISHED

=> exporting to image 0.1s

=> => exporting layers 0.0s

=> => writing image sha256:4f306e1b1239be4aa5b6cae458209366e9176f6fb91f3f7700dc682a4fc9f7c9 0.0s

=> => naming to docker.io/library/upf-epc-pfcpiface:0.3.0-dev 0.0s

56ce2651377ea1d3fa25d5537587cbc4f00918ea26d1af72b4a4c56605b5e5a3

56a90cb01ea9e97546d90bee6b45a2d3de5097338838f2e80d193e38a7d579c0

I0225 02:48:19.409056 1 main.cc:62] Launching BESS daemon in process mode...

I0225 02:48:19.410311 1 main.cc:75] bessd unknown

I0225 02:48:19.416368 1 bessd.cc:456] Loading plugin (attempt 1): /bin/modules/sequential_update.so

I0225 02:48:19.419627 1 memory.cc:128] /sys/devices/system/node/possible not available. Assuming a single-node system...

I0225 02:48:19.419680 1 dpdk.cc:167] Initializing DPDK EAL with options: ["bessd", "--master-lcore", "127", "--lcore", "127@2-3", "--no-shconf", "--legacy-mem", "--socket-mem", "1024", "--huge-unlink"]

EAL: Detected 4 lcore(s)

EAL: Detected 1 NUMA nodes

Option --master-lcore is deprecated use main-lcore

EAL: Selected IOVA mode 'VA'

EAL: No available hugepages reported in hugepages-2048kB

EAL: FATAL: Cannot get hugepage information.

EAL: Cannot get hugepage information.

F0225 02:48:19.431933 1 dpdk.cc:170] rte_eal_init() failed: ret = -1 rte_errno = 13 (Permission denied)

*** Check failure stack trace: ***

F0225 02:48:19.451370 1 debug.cc:405] Backtrace (recent calls first) ---

(0): bessd(+0x6d76d3) [0x559388c4d6d3]

(1): bessd(_ZN4bess8InitDpdkEi+0x6b) [0x559388c4daeb]

(2): bessd(_ZN4bess10PacketPool18CreateDefaultPoolsEm+0x36) [0x559388c40666]

(3): bessd(main+0x22e) [0x5593889a6fce]

(4): /lib/x86_64-linux-gnu/libc.so.6(__libc_start_main+0xea) [0x7fa13b42f09a]

(5): bessd(_start+0x29) [0x559388c381c9]

*** Check failure stack trace: ***

Error response from daemon: Container 56a90cb01ea9e97546d90bee6b45a2d3de5097338838f2e80d193e38a7d579c0 is restarting, wait until the container is running

root@comp5g:/home/user5g/upf-epc#

I basically have a 24 core rack server system on which I want to deploy a dummy EPC network.

I am new to telecommunication so please bear with me.

Please guide me.
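The EAL lines in the log above complain that no hugepages are reported, so checking the host's hugepage counters is a reasonable first step. As a minimal sketch, the helper below pulls the `HugePages_*` counters out of /proc/meminfo-style text. The function name `hugepage_counts` and the sample text are assumptions for illustration, and this is not a confirmed diagnosis of the failure.

```python
def hugepage_counts(meminfo_text):
    """Extract the HugePages_* counters from /proc/meminfo-style text."""
    counts = {}
    for line in meminfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.startswith("HugePages_") and value.strip():
            counts[key] = int(value.split()[0])
    return counts


# Made-up sample resembling a host with no hugepages reserved
sample = "MemTotal: 8000000 kB\nHugePages_Total: 0\nHugePages_Free: 0\nHugepagesize: 2048 kB\n"
print(hugepage_counts(sample))
```

On the host, the same function can be fed the contents of /proc/meminfo; a `HugePages_Total` of 0 would be consistent with the "No available hugepages reported" message.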

Hi all,

I'm trying to run the docker_setup.sh, but I'm getting the following error:

Error response from daemon: No such container: bess

Error response from daemon: No such container: bess-routectl

Error response from daemon: No such container: bess-web

Error response from daemon: No such container: bess-cpiface

Error: No such container: bess

Error: No such container: bess-routectl

Error: No such container: bess-web

Error: No such container: bess-cpiface

[+] Building 1.4s (29/29) FINISHED

=> [internal] load build definition from Dockerfile 0.2s

=> => transferring dockerfile: 38B 0.1s

=> [internal] load .dockerignore 0.1s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for docker.io/nefelinetworks/bess_build:latest 0.9s

=> [internal] load metadata for docker.io/library/python:2.7-slim 0.9s

=> [internal] helper image for file operations 0.0s

=> [bess-build 1/14] FROM docker.io/nefelinetworks/bess_build@sha256:da7cf6ae598704fc1a009f81a3988e8a3c964238e6e7a4ed78c944f39f8632d0 0.0s

=> [bess 1/6] FROM docker.io/library/python:2.7-slim@sha256:2117cd714ad2212aaac09aac7088336241214c88dc7105e50d19c269fde6e0f6 0.0s

=> [internal] load build context 0.0s

=> => transferring context: 1.03kB 0.0s

=> CACHED [bess 2/6] RUN apt-get update && apt-get install -y --no-install-recommends libgraph-easy-perl iproute2 0.0s

=> CACHED [pip 2/3] RUN apt-get update && apt-get install -y gcc 0.0s

=> CACHED [pip 3/3] RUN pip install --no-cache-dir psutil 0.0s

=> CACHED [bess 3/6] COPY --from=pip /usr/local/lib/python2.7/site-packages/psutil /usr/local/lib/python2.7/site-packages/psutil 0.0s

=> CACHED [bess-build 2/14] RUN apt-get update && apt-get -y install --no-install-recommends build-essential ca-certifica 0.0s

=> CACHED [bess-build 3/14] RUN wget -qO linux.tar.xz https://cdn.kernel.org/pub/linux/kernel/v5.x/linux-5.1.15.tar.xz 0.0s

=> CACHED [bess-build 4/14] RUN mkdir linux && tar -xf linux.tar.xz -C linux --strip-components 1 && cp linux/include/uapi/linux/if_x 0.0s

=> CACHED [bess-build 5/14] RUN git clone -b v19.11 -q --depth 1 http://dpdk.org/git/dpdk /dpdk 0.0s

=> CACHED [bess-build 6/14] RUN sed -ri 's,(IGB_UIO=).*,\1n,' config/common_linux* && sed -ri 's,(KNI_KMOD=).*,\1n,' config/common_linux* 0.0s

=> CACHED [bess-build 7/14] RUN apt-get update && apt-get install -y wget unzip ca-certificates git 0.0s

=> CACHED [bess-build 8/14] RUN wget -qO bess.zip https://github.com/NetSys/bess/archive/master.zip && unzip bess.zip 0.0s

=> CACHED [bess-build 9/14] COPY core/modules/ core/modules/ 0.0s

=> CACHED [bess-build 10/14] COPY core/utils/ core/utils/ 0.0s

=> CACHED [bess-build 11/14] COPY protobuf/ protobuf/ 0.0s

=> CACHED [bess-build 12/14] COPY patches/bess patches 0.0s

=> CACHED [bess-build 13/14] RUN cp -a /dpdk deps/dpdk-19.11 && cat patches/* | patch -p1 0.0s

=> CACHED [bess-build 14/14] RUN CXXARCHFLAGS="-march=native -Werror=format-truncation -Warray-bounds -fbounds-check -fno-strict-overflow -fn 0.0s

=> CACHED [bess 4/6] COPY --from=bess-build /opt/bess /opt/bess 0.0s

=> CACHED [bess 5/6] COPY --from=bess-build /bin/bessd /bin 0.0s

=> CACHED [bess 6/6] RUN ln -s /opt/bess/bessctl/bessctl /bin 0.0s

=> exporting to image 0.0s

=> => exporting layers 0.0s

=> => writing image sha256:5722b05406b00299cc7f6978145b732ea5d78ea0e69070e556ced81551d0f9eb 0.0s

=> => naming to docker.io/library/spgwu 0.0s

[+] Building 1.0s (32/32) FINISHED

=> [internal] load build definition from Dockerfile 0.1s

=> => transferring dockerfile: 38B 0.0s

=> [internal] load .dockerignore 0.1s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for docker.io/nefelinetworks/bess_build:latest 0.5s

=> [internal] load metadata for docker.io/library/ubuntu:18.04 0.5s

=> [bess-build 1/14] FROM docker.io/nefelinetworks/bess_build@sha256:da7cf6ae598704fc1a009f81a3988e8a3c964238e6e7a4ed78c944f39f8632d0 0.0s

=> [internal] helper image for file operations 0.0s

=> [internal] load build context 0.1s

=> => transferring context: 15.93kB 0.1s

=> [cpiface 1/5] FROM docker.io/library/ubuntu:18.04@sha256:04d48df82c938587820d7b6006f5071dbbffceb7ca01d2814f81857c631d44df 0.0s

=> CACHED [cpiface 2/5] RUN apt-get update && apt-get install -y --no-install-recommends libgoogle-glog0v5 libzmq5 && 0.0s

=> CACHED [cpiface-build 2/7] RUN apt-get update && apt-get install -y autoconf automake clang build-essentia 0.0s

=> CACHED [cpiface-build 3/7] RUN cd /opt && git clone -q https://github.com/grpc/grpc.git && cd grpc && git checkout 216fa1cab3a 0.0s

=> CACHED [cpiface-build 4/7] COPY cpiface /cpiface 0.0s

=> CACHED [bess-build 2/14] RUN apt-get update && apt-get -y install --no-install-recommends build-essential ca-certifica 0.0s

=> CACHED [bess-build 3/14] RUN wget -qO linux.tar.xz https://cdn.kernel.org/pub/linux/kernel/v5.x/linux-5.1.15.tar.xz 0.0s

=> CACHED [bess-build 4/14] RUN mkdir linux && tar -xf linux.tar.xz -C linux --strip-components 1 && cp linux/include/uapi/linux/if_x 0.0s

=> CACHED [bess-build 5/14] RUN git clone -b v19.11 -q --depth 1 http://dpdk.org/git/dpdk /dpdk 0.0s

=> CACHED [bess-build 6/14] RUN sed -ri 's,(IGB_UIO=).*,\1n,' config/common_linux* && sed -ri 's,(KNI_KMOD=).*,\1n,' config/common_linux* 0.0s

=> CACHED [bess-build 7/14] RUN apt-get update && apt-get install -y wget unzip ca-certificates git 0.0s

=> CACHED [bess-build 8/14] RUN wget -qO bess.zip https://github.com/NetSys/bess/archive/master.zip && unzip bess.zip 0.0s

=> CACHED [bess-build 9/14] COPY core/modules/ core/modules/ 0.0s

=> CACHED [bess-build 10/14] COPY core/utils/ core/utils/ 0.0s

=> CACHED [bess-build 11/14] COPY protobuf/ protobuf/ 0.0s

=> CACHED [bess-build 12/14] COPY patches/bess patches 0.0s

=> CACHED [bess-build 13/14] RUN cp -a /dpdk deps/dpdk-19.11 && cat patches/* | patch -p1 0.0s

=> CACHED [bess-build 14/14] RUN CXXARCHFLAGS="-march=native -Werror=format-truncation -Warray-bounds -fbounds-check -fno-strict-overflow -fn 0.0s

=> CACHED [cpiface-build 5/7] COPY --from=bess-build /protobuf /protobuf 0.0s

=> CACHED [cpiface-build 6/7] COPY core/utils/gtp_common.h /cpiface 0.0s

=> CACHED [cpiface-build 7/7] RUN cd /cpiface && make -j4 PBDIR=/protobuf && cp zmq-cpiface /bin/ 0.0s

=> CACHED [cpiface 3/5] COPY --from=cpiface-build /opt/grpc/libs/opt /opt/grpc/libs/opt 0.0s

=> CACHED [cpiface 4/5] RUN echo "/opt/grpc/libs/opt" > /etc/ld.so.conf.d/grpc.conf && ldconfig 0.0s

=> CACHED [cpiface 5/5] COPY --from=cpiface-build /bin/zmq-cpiface /bin 0.0s

=> exporting to image 0.1s

=> => exporting layers 0.0s

=> => writing image sha256:b97a3c64e7dce8defd3064a2a5f6c3fdd68751080cf68043fe118b3f89ca5945 0.0s

=> => naming to docker.io/library/cpiface 0.0s

e52838a3aed2dd47c7539e348331a54d7fdf03eaebc045c267f13f30d2e6cd49

docker: Error response from daemon: linux runtime spec devices: error gathering device information while adding custom device "/dev/vfio/48": no such file or directory.```

I'm running it on an Ubuntu VM with 8 GB of RAM and 4 vCPUs.

I checked /dev/vfio/ and the only entry inside it is vfio (again).
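
The error above says Docker was asked to pass through a VFIO group node (/dev/vfio/48) that does not exist on this machine. A minimal sketch for listing which group nodes are actually present, assuming the usual Linux layout where /dev/vfio/vfio is the control node and each IOMMU group with a device bound to vfio-pci shows up as a numeric entry next to it. The function name `list_vfio_groups` is hypothetical and not part of the UPF scripts:

```python
import os


def list_vfio_groups(vfio_dir="/dev/vfio"):
    """Return the numeric VFIO group IDs found under vfio_dir, sorted."""
    try:
        entries = os.listdir(vfio_dir)
    except FileNotFoundError:
        # The vfio module is not loaded, or the path does not exist at all.
        return []
    # Skip the non-numeric "vfio" control node; keep only group numbers.
    return sorted(int(name) for name in entries if name.isdigit())


if __name__ == "__main__":
    groups = list_vfio_groups()
    if groups:
        print("VFIO groups present:", groups)
    else:
        print("No VFIO group nodes found (only the control node, or nothing).")
```

An empty result would be consistent with the report above: no device is bound to vfio-pci, so there is no group node for Docker to add.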

I'm facing an issue when testing the performance of the UPF:

One-way throughput (UL or DL) is about 2M pps,

but two-way throughput (UL and DL together) is still 2M pps, not the expected 4M pps.

(Using two DPDK-bound NICs for N3 and N6.)

I would really appreciate any suggestions.

Thank you in advance!

I tried two approaches to bring two-way throughput up to 4M pps, but neither worked:

(1) Pinning multiple cores

(2) 2 worker threads

(1) Pinning multiple cores:

I isolated 4 cores on the same NUMA node, but only one core is at 100% load.

On the host (/etc/default/grub):

GRUB_CMDLINE_LINUX="isolcpus=2,4,6,8"

In the script (docker_setup.sh):

# Run bessd

docker run --name bess -td --restart unless-stopped \
    --cpuset-cpus="2,4,6,8" --cpus 4 \
    ...

After running docker_setup.sh, with no UL/DL traffic, htop shows only one core at 100% load, not all four.

(2) 2 worker threads:

Executing docker_setup.sh fails with an error if workers=2.

In conf/upf.json :

"": "Number of worker threads. Default: use 1 thread to run both (uplink/downlink) pipelines",

"workers": 2,

Executing docker_setup.sh:

It stops with an error.

Error log (note: enp3s0f0 is the N3 NIC, enp3s0f1 is the N6 NIC):

401 module.cc:224] Mismatch in number of workers for module enp3s0f0Merge min required 1 max allowed 64 attached workers 0

401 module.cc:224] Mismatch in number of workers for module enp3s0f0SrcEther min required 1 max allowed 64 attached workers 0

401 module.cc:224] Mismatch in number of workers for module enp3s0f0_measure min required 1 max allowed 64 attached workers 0

401 module.cc:224] Mismatch in number of workers for module enp3s0f1Merge min required 1 max allowed 64 attached workers 0

401 module.cc:224] Mismatch in number of workers for module enp3s0f1SrcEther min required 1 max allowed 64 attached workers 0

401 module.cc:224] Mismatch in number of workers for module enp3s0f1_measure min required 1 max allowed 64 attached workers 0

401 module.cc:224] Mismatch in number of workers for module postDLQoSCounter min required 1 max allowed 1 attached workers 2

401 module.cc:229] Violates thread safety, returning fatal error

401 bessctl.cc:726] Module postDLQoSCounter failed check 2

401 bessctl.cc:730] --- FATAL CONSTRAINT FAILURE ---

401 module.cc:224] Mismatch in number of workers for module postULQoSCounter min required 1 max allowed 1 attached workers 2

401 module.cc:229] Violates thread safety, returning fatal error

401 bessctl.cc:726] Module postULQoSCounter failed check 2

401 bessctl.cc:730] --- FATAL CONSTRAINT FAILURE ---

401 module.cc:224] Mismatch in number of workers for module preQoSCounter min required 1 max allowed 1 attached workers 2

401 module.cc:229] Violates thread safety, returning fatal error

401 bessctl.cc:726] Module preQoSCounter failed check 2

401 bessctl.cc:730] --- FATAL CONSTRAINT FAILURE ---
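
In the log above, only the modules whose attached worker count exceeds "max allowed" (the three QoS counter modules) are followed by the "Violates thread safety" and fatal lines; the port modules with 0 attached workers are reported but not marked fatal. A small sketch for sorting such lines into the two groups, assuming the message format stays exactly as quoted. The function name `classify_mismatches` is hypothetical and not part of BESS:

```python
import re

# Matches the "Mismatch in number of workers" lines as quoted in the log.
MISMATCH_RE = re.compile(
    r"Mismatch in number of workers for module (?P<module>\S+) "
    r"min required (?P<min>\d+) max allowed (?P<max>\d+) "
    r"attached workers (?P<attached>\d+)"
)


def classify_mismatches(log_text):
    """Return (too_many, too_few): module names above max or below min."""
    too_many, too_few = [], []
    for m in MISMATCH_RE.finditer(log_text):
        lo, hi, attached = int(m["min"]), int(m["max"]), int(m["attached"])
        if attached > hi:
            too_many.append(m["module"])
        elif attached < lo:
            too_few.append(m["module"])
    return too_many, too_few


if __name__ == "__main__":
    sample = (
        "401 module.cc:224] Mismatch in number of workers for module "
        "enp3s0f0Merge min required 1 max allowed 64 attached workers 0\n"
        "401 module.cc:224] Mismatch in number of workers for module "
        "postDLQoSCounter min required 1 max allowed 1 attached workers 2\n"
    )
    too_many, too_few = classify_mismatches(sample)
    print("more workers than allowed:", too_many)
    print("fewer workers than required:", too_few)
```

Run against the full log, the first list would name the modules that block a two-worker setup.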

Describe the bug

I ran some tests with the Spirent packet generator. GTP packets that carry sequence numbers use 4 bytes to store the sequence number (instead of 2 bytes). This needs to be verified. PR #8 has been submitted to fix this issue, but I am not sure whether the patch is correct.

To Reproduce

Run Spirent Landslide gold testcase that was created by the Spirent team for the Intel TSR group.

Expected behavior

See above.

Logs

PCAP files may be uploaded soon.

Additional context

The original ngic-rtc code also reserves a 4-byte field to store sequence numbers. Someone needs to verify the field size against the 3GPP specs as well.
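
For reference while checking the spec: to my understanding of the GTPv1-U header (3GPP TS 29.281), the mandatory part is 8 bytes, and when any of the E, S, or PN flags is set a 4-byte optional block follows, made up of a 2-byte sequence number, a 1-byte N-PDU number, and a 1-byte next-extension-header type. That would account for both the 2-byte and the 4-byte figures mentioned here, but it should be confirmed against the spec. A minimal sketch of that layout; the function name `parse_gtpu_header` and the sample bytes are made up for illustration, and extension headers are not walked:

```python
import struct


def parse_gtpu_header(data):
    """Parse a GTPv1-U header (without extension headers) into a dict."""
    flags, msg_type, length, teid = struct.unpack_from("!BBHI", data, 0)
    hdr = {
        "version": flags >> 5,
        "msg_type": msg_type,
        "length": length,
        "teid": teid,
        "header_len": 8,  # mandatory part only
        "seq": None,
    }
    # E (0x04), S (0x02), PN (0x01): any of them adds the 4-byte optional block.
    if flags & 0x07:
        seq, _npdu, _next_ext = struct.unpack_from("!HBB", data, 8)
        hdr["header_len"] = 12
        if flags & 0x02:  # the sequence number is only meaningful when S is set
            hdr["seq"] = seq
    return hdr


if __name__ == "__main__":
    # Made-up G-PDU header: version 1, PT=1, S=1 (flags 0x32), TEID 1, seq 5.
    sample = bytes([0x32, 0xFF, 0x00, 0x04,
                    0x00, 0x00, 0x00, 0x01,
                    0x00, 0x05, 0x00, 0x00])
    print(parse_gtpu_header(sample))
```

Comparing `header_len` and `seq` from a captured packet against what the UPF code strips would show whether the 4-byte reservation is the optional block as a whole or a mis-sized sequence field.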
