OpenUnReID is an open-source PyTorch-based codebase for both unsupervised learning (USL) and unsupervised domain adaptation (UDA) on object re-ID tasks. It provides strong baselines and multiple state-of-the-art methods, with well-refactored code for both pseudo-label-based and domain-translation-based frameworks. It works with Python >=3.5 and PyTorch >=1.1.

We are actively updating this repo, and more methods will be supported soon. Contributions are welcome.

- Distributed training & testing with multiple GPUs and multiple machines.

- High flexibility in combining datasets, backbones, losses, etc.

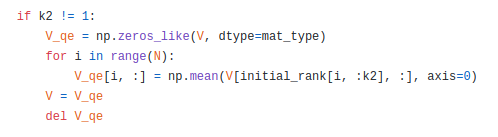

- Fast GPU-based pseudo-label generation and k-reciprocal re-ranking.

- Plug-and-play domain-specific BatchNorms for any backbone; sync BN is also supported.

- Mixed precision training is supported, achieving higher efficiency.

- A strong cluster baseline that is easy to extend when designing new methods.

- State-of-the-art methods and performance for both USL and UDA problems on object re-ID.
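To make the term "k-reciprocal" in the feature list concrete, here is a minimal CPU-only sketch of the k-reciprocal neighbour set that re-ranking builds on: sample `j` is a k-reciprocal neighbour of `i` when each appears in the other's k-nearest list. The function names `k_nearest` and `k_reciprocal` and the toy data are illustrative assumptions, not part of OpenUnReID, whose implementation runs on GPU. The full re-ranking procedure additionally turns these sets into a Jaccard distance, which is omitted here.

```python
def k_nearest(dist, i, k):
    """Return the indices of the k nearest neighbours of sample i (i itself excluded)."""
    others = [j for j in range(len(dist)) if j != i]
    others.sort(key=lambda j: dist[i][j])
    return set(others[:k])


def k_reciprocal(dist, i, k):
    """Return the neighbours j of i such that i is also among the k nearest of j."""
    return {j for j in k_nearest(dist, i, k) if i in k_nearest(dist, j, k)}


# Toy example (hypothetical data): four 1-D "features", the last one is an outlier.
points = [0, 1, 2, 10]
dist = [[abs(a - b) for b in points] for a in points]

close_set = k_reciprocal(dist, 0, k=2)    # samples 1 and 2 rank sample 0 back
outlier_set = k_reciprocal(dist, 3, k=2)  # nobody ranks the outlier back
```

In this toy case the outlier still has two nearest neighbours, but neither of them lists it in return, so its k-reciprocal set is empty. That asymmetry is what makes the set a stricter notion of similarity than plain k-nearest neighbours.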

Please refer to MODEL_ZOO.md for trained models and download links, and please refer to LEADERBOARD.md for the leaderboard on public benchmarks.

| Method | Reference | USL | UDA |
|---|---|---|---|
| UDA_TP | PR'20 (arXiv'18) | ✓ | ✓ |
| SPGAN | CVPR'18 | n/a | ✓ |
| SSG | ICCV'19 | ongoing | ongoing |
| strong_baseline | Sec. 3.1 in ICLR'20 | ✓ | ✓ |
| MMT | ICLR'20 | ✓ | ✓ |
| SpCL | NeurIPS'20 | ✓ | ✓ |
| SDA | arXiv'20 | n/a | ongoing |

[2020-08-02] Add the leaderboard on public benchmarks: LEADERBOARD.md

[2020-07-30] OpenUnReID v0.1.1 is released:

- Support domain-translation-based frameworks, CycleGAN and SPGAN.

- Support mixed precision training (`torch.cuda.amp` in PyTorch>=1.6); use it by adding `TRAIN.amp True` at the end of training commands.
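For readers unfamiliar with `torch.cuda.amp`, the following is a rough sketch of what a mixed precision training step generally looks like in PyTorch>=1.6. It is not OpenUnReID's training loop: the `train_step` helper, the toy linear model, and the random data are assumptions made for illustration. Mixed precision is only enabled when a CUDA device is present, so the snippet also runs on CPU.

```python
import torch
import torch.nn as nn


def train_step(model, optimizer, scaler, inputs, targets, use_amp):
    """Run one optimization step, optionally under mixed precision."""
    optimizer.zero_grad()
    # autocast runs eligible CUDA ops in half precision when enabled.
    with torch.cuda.amp.autocast(enabled=use_amp):
        loss = nn.functional.cross_entropy(model(inputs), targets)
    # The scaler guards against fp16 gradient underflow; when disabled,
    # scale() returns the loss unchanged and step() calls optimizer.step().
    scaler.scale(loss).backward()
    scaler.step(optimizer)
    scaler.update()
    return loss.item()


device = "cuda" if torch.cuda.is_available() else "cpu"
use_amp = device == "cuda"  # fall back to full precision on CPU

model = nn.Linear(16, 4).to(device)  # toy stand-in for a re-ID backbone
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
scaler = torch.cuda.amp.GradScaler(enabled=use_amp)

inputs = torch.randn(8, 16, device=device)
targets = torch.randint(0, 4, (8,), device=device)
loss_value = train_step(model, optimizer, scaler, inputs, targets, use_amp)
```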

[2020-07-01] OpenUnReID v0.1.0 is released.

Please refer to INSTALL.md for installation and dataset preparation.

Please refer to GETTING_STARTED.md for the basic usage of OpenUnReID.

OpenUnReID is released under the Apache 2.0 license.

If you use this toolbox or its models in your research, please consider citing:

    @inproceedings{ge2020mutual,
      title={Mutual Mean-Teaching: Pseudo Label Refinery for Unsupervised Domain Adaptation on Person Re-identification},
      author={Yixiao Ge and Dapeng Chen and Hongsheng Li},
      booktitle={International Conference on Learning Representations},
      year={2020},
      url={https://openreview.net/forum?id=rJlnOhVYPS}
    }

    @inproceedings{ge2020selfpaced,
      title={Self-paced Contrastive Learning with Hybrid Memory for Domain Adaptive Object Re-ID},
      author={Yixiao Ge and Feng Zhu and Dapeng Chen and Rui Zhao and Hongsheng Li},
      booktitle={Advances in Neural Information Processing Systems},
      year={2020}
    }

Some parts of openunreid are learned from torchreid and fastreid. We would like to thank the authors of these projects, which have greatly advanced research on supervised re-ID. We hope that OpenUnReID will likewise benefit the unsupervised re-ID research community by providing strong baselines and state-of-the-art methods.

This project is developed by Yixiao Ge (@yxgeee), Tong Xiao (@Cysu), Zhiwei Zhang (@zwzhang121).