```bash
npm install three @react-three/fiber @react-three/xr@latest
```

A simple scene with a mesh that toggles its material color between "red" and "blue" when clicked, either by touching it or by pointing at it.

```tsx
import { Canvas } from '@react-three/fiber'
import { XR, createXRStore } from '@react-three/xr'
import { useState } from 'react'

// Create the XR store once, outside the component.
const store = createXRStore()

export function App() {
  // Tracks whether the box is currently red (it starts out blue).
  const [red, setRed] = useState(false)
  return (
    <>
      {/* Request an AR session when the button is clicked. */}
      <button onClick={() => store.enterAR()}>Enter AR</button>
      <Canvas>
        {/* Everything inside <XR> is part of the XR scene. */}
        <XR store={store}>
          <mesh pointerEventsType={{ deny: 'grab' }} onClick={() => setRed(!red)} position={[0, 1, -1]}>
            <boxGeometry />
            <meshBasicMaterial color={red ? 'red' : 'blue'} />
          </mesh>
        </XR>
      </Canvas>
    </>
  )
}
```

1. `const store = createXRStore()` creates an XR store.
2. `store.enterAR()` enters AR when the button is clicked.
3. `<XR>...</XR>` wraps your content with the XR component.

... or read this guide for converting a react-three/fiber app to XR.
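The click behavior in the example above comes down to flipping a boolean and deriving the material color from it. As a minimal, framework-free sketch of that logic (the names `colorFor` and `click` are hypothetical helpers for illustration and are not part of @react-three/xr or React):

```typescript
// Hypothetical helper names; only the boolean-to-color mapping mirrors the example above.
type BoxColor = 'red' | 'blue'

// Derive the material color from the boolean state, as the JSX does inline.
function colorFor(red: boolean): BoxColor {
  return red ? 'red' : 'blue'
}

// Each click flips the flag, like `setRed(!red)` in the component.
function click(red: boolean): boolean {
  return !red
}

// Simulate the initial render followed by three clicks.
let red = false
const seen: BoxColor[] = [colorFor(red)]
for (let i = 0; i < 3; i++) {
  red = click(red)
  seen.push(colorFor(red))
}
console.log(seen.join(' -> ')) // prints "blue -> red -> blue -> red"
```

Because the state starts as `false`, the box renders blue first and turns red on the first click.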

- 💾 Store

- 👌 Interactions

- 🔧 Options

- 🧊 Object Detection

- ✴ Origin

- 🪄 Teleport

- 🕹️ Gamepad

- ➕ Secondary Input Sources

- 📺 Layers

- 🎮 Custom Controller/Hands/...

- ⚓️ Anchors

- 📱 Dom Overlays

- 🎯 Hit Test

- ⛨ Guards

- 🤳 XR Gestures

- 🕺 Tracked Body

- ↕ react-three/controls

- Migrating from @react-three/xr v5

- Migrating from natuerlich

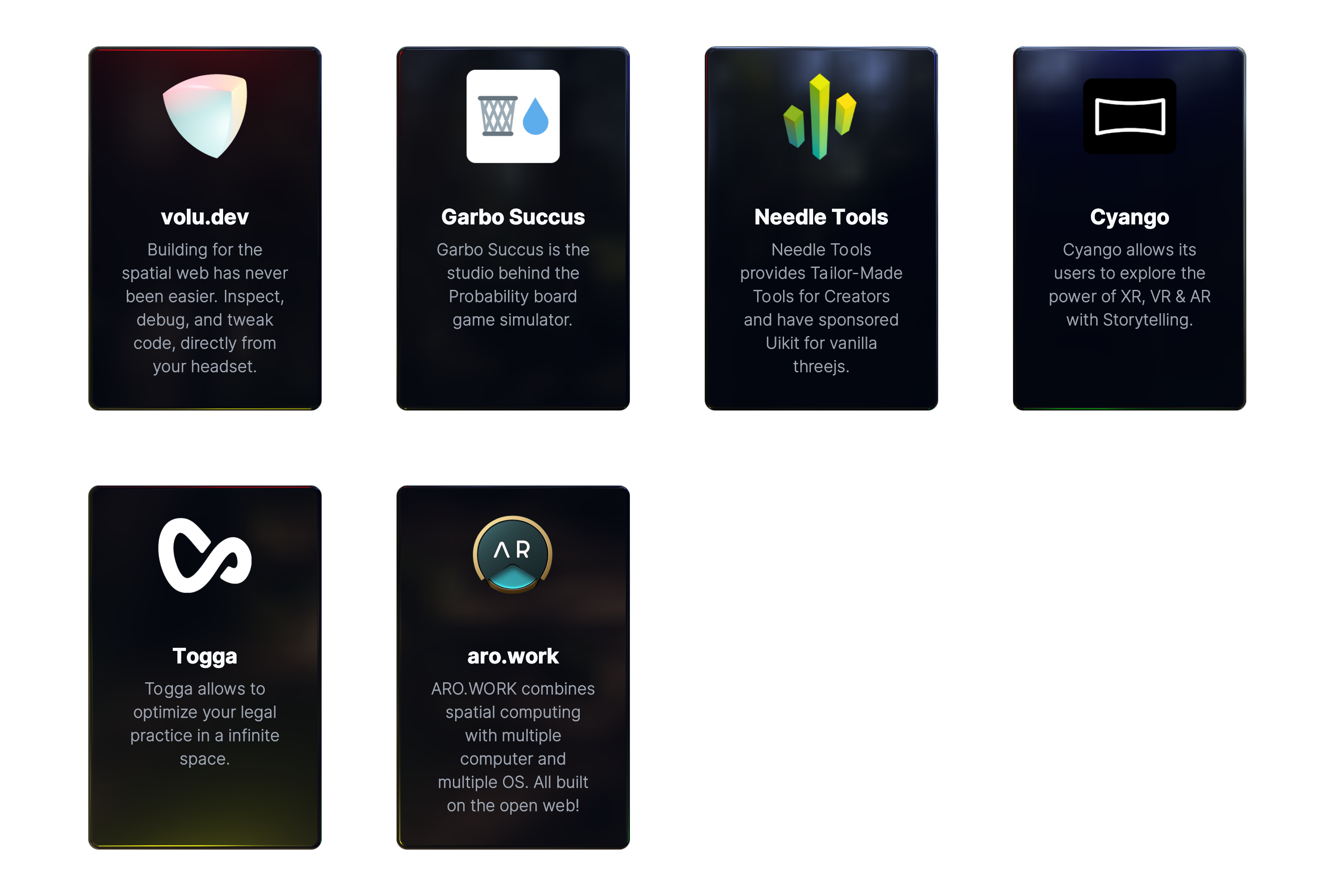

This project is supported by a few companies and individuals building cutting-edge 3D Web & XR experiences. Check them out!