This repository contains feature engineering code written in Python.

# Import the libraries needed to run all sections
import cv2
import numpy as np
import pylab as pl
import pandas as pd
from skimage import color
from scipy import ndimage as ndi
import matplotlib.image as mpimg
from matplotlib import patches
import matplotlib.pyplot as plt
import glob
import sys
import os
import mahotas as mt

def get_fps(src_dir):
    """Print and return the frames per second (fps) of the video at `src_dir`."""
    video = cv2.VideoCapture(src_dir)
    # Find the OpenCV major version; the FPS property was renamed in OpenCV 3
    major_ver = int(cv2.__version__.split('.')[0])
    if major_ver < 3:
        fps = video.get(cv2.cv.CV_CAP_PROP_FPS)
        print("Frames per second using video.get(cv2.cv.CV_CAP_PROP_FPS): {0}".format(fps))
    else:
        fps = video.get(cv2.CAP_PROP_FPS)
        print("Frames per second using video.get(cv2.CAP_PROP_FPS): {0}".format(fps))
    video.release()
    return fps

src_dir = "/media/rezwan/Study/Thesis/Feature_Extraction_Code/Sample_Videos"
sourceDir = src_dir + '/*.mp4'
vList = glob.glob(sourceDir)
dataFrameArr = []

for i in range(len(vList)):
    vDirName = vList[i]
    head, tail = os.path.split(vDirName)
    print(tail)
    # Call `get_fps` to calculate the frames per second (fps) of the video.
    get_fps(vDirName)

2️⃣ Extract images from video. Source

def video_to_frames(video_filename, dst_File, tail):
    """Save every frame of a video as a JPEG and return a few sampled frames."""
    path = os.path.join(dst_File, os.path.splitext(tail)[0])
    print(path)
    os.makedirs(path, exist_ok=True)
    cap = cv2.VideoCapture(video_filename)
    video_length = int(cap.get(cv2.CAP_PROP_FRAME_COUNT)) - 1
    frames = []
    if cap.isOpened() and video_length > 0:
        frame_ids = [0]
        if video_length >= 4:
            frame_ids = [0,
                         round(video_length * 0.25),
                         round(video_length * 0.5),
                         round(video_length * 0.75),
                         video_length - 1]
        count = 0
        success, image = cap.read()
        while success:
            if count in frame_ids:
                frames.append(image)
            # Save the current frame before reading the next one, so that the
            # file name matches the frame index and no empty frame is written
            cv2.imwrite(os.path.join(path, str(count) + '.jpg'), image)
            success, image = cap.read()
            count += 1
    cap.release()
    return frames

dst_File = "/media/rezwan/Study/Thesis/Feature_Extraction_Code/Images"
src_dir = "/media/rezwan/Study/Thesis/Feature_Extraction_Code/Sample_Videos"
sourceDir = src_dir + '/*.mp4'
vList = glob.glob(sourceDir)
dataFrameArr = []

for i in range(len(vList)):
    vDirName = vList[i]
    head, tail = os.path.split(vDirName)
    # Get frames from the video
    video_to_frames(vDirName, dst_File, tail)

- S85_F56_GL=12.9-850LEDFon_F=100

- S85_F56_GL=12.9-850LEDFon_F=105

- S91_F46_GL=7.9-940LEDFon_F=115

- S91_F46_GL=7.9-940LEDFon_F=120

- S140_M75_GL=11.5-850LEDFon_F=120

- S140_M75_GL=11.5-940LEDFon_F=100
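
The frame selection inside `video_to_frames` (first frame, quartiles, and a frame near the end) can be isolated into a small helper, which makes it easier to check without opening a video. The sketch below is only an illustration: `sample_frame_ids` and its `samples` parameter are hypothetical names, and it spaces the indices evenly up to the last frame, which differs slightly from the index list used above.

```python
def sample_frame_ids(frame_count, samples=5):
    """Return up to `samples` evenly spaced frame indices in [0, frame_count - 1].

    Hypothetical helper: it is not part of the repository code.
    """
    if frame_count <= 0 or samples <= 0:
        return []
    if samples == 1:
        return [0]
    if frame_count <= samples:
        # Short video: keep every frame
        return list(range(frame_count))
    last = frame_count - 1
    return [round(k * last / (samples - 1)) for k in range(samples)]


if __name__ == "__main__":
    print(sample_frame_ids(101))
```

A loop over the video would then keep a frame whenever its running index is in the returned list.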

(1) Local Binary Patterns(LBP). Source

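def get_pixel(img, center, x, y):
    """Return 1 if the pixel at (x, y) is >= center, else 0 (0 outside the image)."""
    new_value = 0
    # Check the bounds explicitly: a negative index would silently wrap around
    if 0 <= x < img.shape[0] and 0 <= y < img.shape[1]:
        if img[x][y] >= center:
            new_value = 1
    return new_value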

def lbp_calculated_pixel(img, x, y):
    '''
     64 | 128 |   1
    ----------------
     32 |   0 |   2
    ----------------
     16 |   8 |   4
    '''
    center = img[x][y]
    val_ar = []
    val_ar.append(get_pixel(img, center, x-1, y+1))  # top_right
    val_ar.append(get_pixel(img, center, x, y+1))    # right
    val_ar.append(get_pixel(img, center, x+1, y+1))  # bottom_right
    val_ar.append(get_pixel(img, center, x+1, y))    # bottom
    val_ar.append(get_pixel(img, center, x+1, y-1))  # bottom_left
    val_ar.append(get_pixel(img, center, x, y-1))    # left
    val_ar.append(get_pixel(img, center, x-1, y-1))  # top_left
    val_ar.append(get_pixel(img, center, x-1, y))    # top
    power_val = [1, 2, 4, 8, 16, 32, 64, 128]
    val = 0
    for i in range(len(val_ar)):
        val += val_ar[i] * power_val[i]
    return val

def LBP(img):
    height, width, channel = img.shape
    img_gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    img_lbp = np.zeros((height, width, 3), np.uint8)
    for i in range(0, height):
        for j in range(0, width):
            img_lbp[i, j] = lbp_calculated_pixel(img_gray, i, j)
    return img_lbp

if __name__ == '__main__':
    path = "/media/rezwan/Study/Thesis/Feature_Extraction_Code/dataset/images"
    dst_path = "/media/rezwan/Study/Thesis/Feature_Extraction_Code/dataset/LBP"
    if not os.path.exists(dst_path):
        os.mkdir(dst_path)
    for ff in os.listdir(path):
        # Read the original image
        imgg = cv2.imread(os.path.join(path, ff))
        # cv2.imshow(str(ff), imgg)
        # if cv2.waitKey(0) & 0xff == 27:
        #     cv2.destroyAllWindows()
        # Apply the operation to the image (LBP)
        res1 = LBP(imgg)
        cv2.imwrite(os.path.join(dst_path, str(ff)[:-4] + '_lbp.jpg'), res1)

- Inputs:

- Outputs:
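
The per-pixel LBP loop above can also be written with NumPy array operations, and the resulting codes are usually summarised as a 256-bin histogram that serves as the texture feature vector. The following is a minimal sketch under stated assumptions: `lbp_image` and `lbp_histogram` are hypothetical names, the neighbour order and bit weights follow the diagram in `lbp_calculated_pixel`, and neighbours outside the image are counted as 0.

```python
import numpy as np

# (row offset, column offset) for the weights 1, 2, 4, ..., 128:
# top_right, right, bottom_right, bottom, bottom_left, left, top_left, top
OFFSETS = [(-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1), (-1, -1), (-1, 0)]


def lbp_image(gray):
    """Return the 8-bit LBP code of every pixel of a 2-D grayscale image."""
    gray = np.asarray(gray, dtype=np.int16)
    h, w = gray.shape
    # Pad with -1 so that out-of-image neighbours never satisfy `>= center`
    padded = np.pad(gray, 1, mode="constant", constant_values=-1)
    codes = np.zeros((h, w), dtype=np.uint8)
    for bit, (dr, dc) in enumerate(OFFSETS):
        neighbour = padded[1 + dr:1 + dr + h, 1 + dc:1 + dc + w]
        mask = (neighbour >= gray).astype(np.uint8)
        codes += mask * np.uint8(1 << bit)
    return codes


def lbp_histogram(gray):
    """Return the normalised 256-bin histogram of LBP codes."""
    codes = lbp_image(gray)
    hist = np.bincount(codes.ravel(), minlength=256).astype(np.float64)
    return hist / hist.sum()


if __name__ == "__main__":
    demo = np.array([[5, 5, 5], [5, 1, 5], [5, 5, 5]])
    print(lbp_image(demo))
```

The histogram discards pixel positions, so two images of the same texture at different offsets give similar vectors.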

(2) Scale-Invariant Feature Transform(SIFT). Source

def SIFT(img):
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    # SIFT is in the main module from OpenCV 4.4; older builds expose it
    # through the contrib module `xfeatures2d`
    if hasattr(cv2, 'SIFT_create'):
        sift = cv2.SIFT_create()
    else:
        sift = cv2.xfeatures2d.SIFT_create()
    kp = sift.detect(gray, None)
    # kp, des = sift.detectAndCompute(gray, None)
    img = cv2.drawKeypoints(gray, kp, None, flags=cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS)
    return img

if __name__ == '__main__':
    path = "/media/rezwan/Study/Thesis/Feature_Extraction_Code/dataset/images"
    dst_path = "/media/rezwan/Study/Thesis/Feature_Extraction_Code/dataset/SIFT"
    if not os.path.exists(dst_path):
        os.mkdir(dst_path)
    for ff in os.listdir(path):
        # Read the original image
        imgg = cv2.imread(os.path.join(path, ff))
        # cv2.imshow(str(ff), imgg)
        # if cv2.waitKey(0) & 0xff == 27:
        #     cv2.destroyAllWindows()
        # Apply the operation to the image (SIFT)
        res1 = SIFT(imgg)
        cv2.imwrite(os.path.join(dst_path, str(ff)[:-4] + '_sift.jpg'), res1)

- Inputs:

- Outputs:

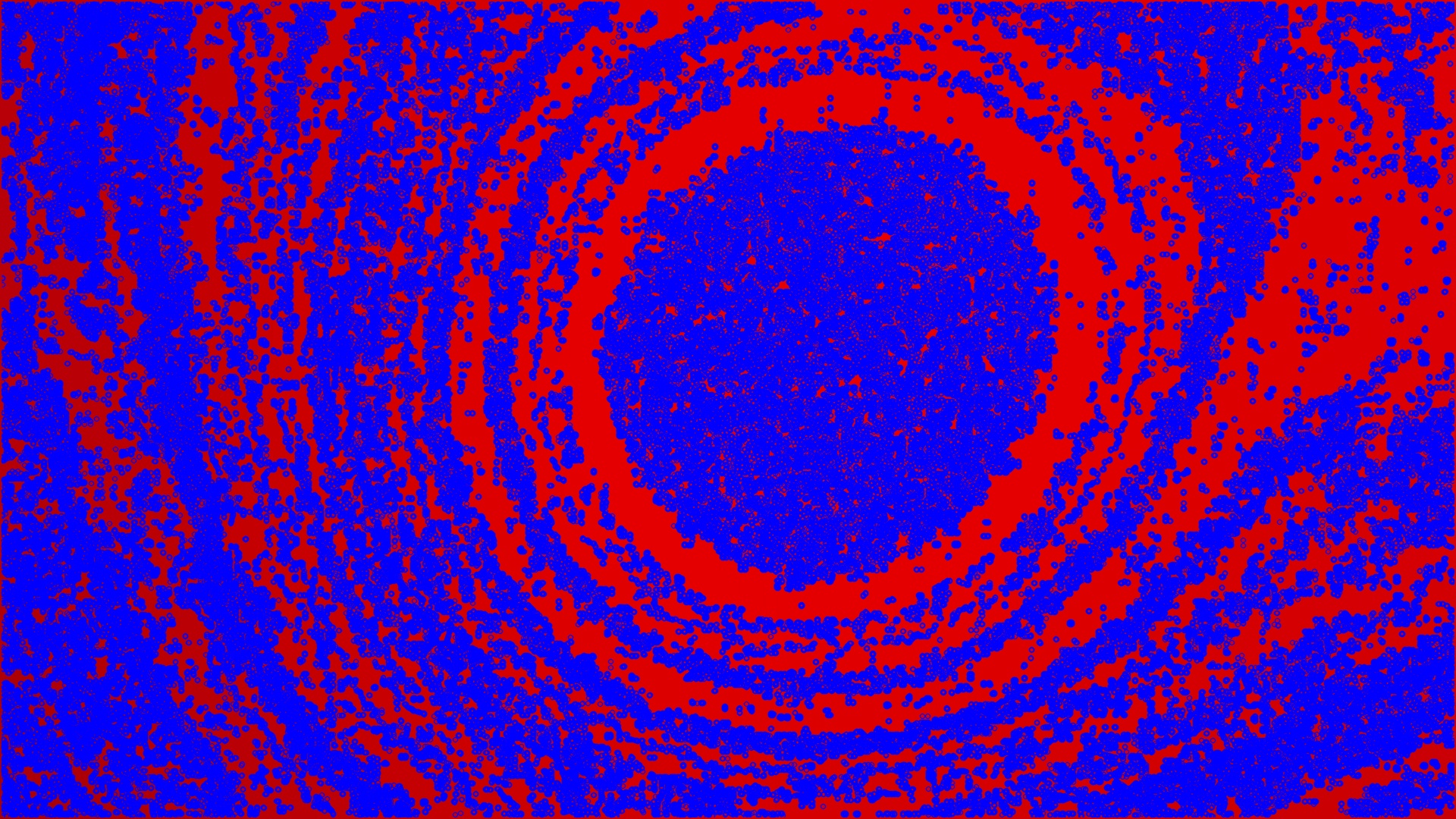

(3) Gabor Filter. Source

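# Define a Gabor filter bank with different orientations and scales
def build_filters():
    filters = []
    ksize = 9
    # Define the range for theta (orientation) and nu (passed as the wavelength)
    for theta in np.arange(0, np.pi, np.pi / 8):
        # Start at pi/4 rather than 0: a wavelength of 0 divides by zero and gives
        # an invalid kernel, and 8 orientations x 5 scales gives the 40 filters
        # used for the feature vector
        for nu in np.pi / 4 * np.arange(1, 6):
            kern = cv2.getGaborKernel((ksize, ksize), 1.0, theta, nu, 0.5, 0, ktype=cv2.CV_32F)
            kern /= 1.5 * kern.sum()
            filters.append(kern)
    return filters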

# Convolve the image with each filter and keep the maximum response per pixel
def process(img, filters):
    accum = np.zeros_like(img)
    for kern in filters:
        fimg = cv2.filter2D(img, cv2.CV_8UC3, kern)
        np.maximum(accum, fimg, accum)
    return accum

if __name__ == '__main__':
    # Instantiate the filters
    filters = build_filters()
    f = np.asarray(filters)
    # # Read the input image
    # imgg = cv2.imread(test, 0)
    path = "/media/rezwan/Study/Thesis/Feature_Extraction_Code/dataset/images"
    dst_path = "/media/rezwan/Study/Thesis/Feature_Extraction_Code/dataset/GaborFilter"
    if not os.path.exists(dst_path):
        os.mkdir(dst_path)
    for ff in os.listdir(path):
        # Read the original image
        imgg = cv2.imread(os.path.join(path, ff))
        cv2.imshow(str(ff), imgg)
        if cv2.waitKey(0) & 0xff == 27:
            cv2.destroyAllWindows()
        # Apply the operation to the image (Gabor filter)
        res1 = process(imgg, f)
        cv2.imwrite(os.path.join(dst_path, str(ff)[:-4] + '_Gabor.jpg'), res1)
        cv2.imshow(str(ff)[:-4] + '_Gabor.jpg', res1)
        if cv2.waitKey(0) & 0xff == 27:
            cv2.destroyAllWindows()
        # Initialize the feature vector
        feat = []
        # Calculate the local energy for each convolved image
        for j in range(len(f)):
            # Wrap the kernel in a list so that `process` applies it as one filter,
            # and cast to float so that squaring does not overflow uint8
            res = process(imgg, [f[j]]).astype(np.float64)
            # Sum over every pixel, so the image need not be 128 x 128
            feat.append(np.sum(res * res))
        # Calculate the mean amplitude for each convolved image
        for j in range(len(f)):
            res = process(imgg, [f[j]]).astype(np.float64)
            feat.append(np.sum(np.abs(res)))
        # `feat` is the feature vector for the image
        print(np.array(feat))
        del feat

- Inputs:

- Outputs:
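
The two Gabor feature groups computed above (local energy and amplitude per filter response) reduce to a couple of array operations once each response is a floating-point array. The sketch below is an illustration only: `gabor_features` is a hypothetical name, the responses are assumed to be already computed (for example with `cv2.filter2D`), and it divides the summed amplitude by the number of pixels, which the loop above does not do.

```python
import numpy as np


def gabor_features(responses):
    """Return [energy_1..energy_n, mean_amplitude_1..mean_amplitude_n].

    `responses` is a sequence of filter responses, one array per filter.
    """
    energies = []
    amplitudes = []
    for response in responses:
        # Work in float64 so that squaring cannot overflow an integer dtype
        r = np.asarray(response, dtype=np.float64)
        energies.append(float(np.sum(r * r)))         # local energy
        amplitudes.append(float(np.mean(np.abs(r))))  # mean amplitude
    return np.array(energies + amplitudes)


if __name__ == "__main__":
    demo = [np.array([[1, -2], [3, 0]]), np.zeros((2, 2))]
    print(gabor_features(demo))
```

With 40 filters this yields an 80-element vector per image, independent of the image size.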

(4) Harris Corner Detection. Source

def HarrisCorner(img):
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    gray = np.float32(gray)
    dst = cv2.cornerHarris(gray, 2, 3, 0.04)
    # Dilate the result to make the corner marks more visible; not essential
    dst = cv2.dilate(dst, None)
    # Threshold for an optimal value; it may vary depending on the image
    img[dst > 0.01 * dst.max()] = [0, 0, 255]
    return img

# cv2.imwrite(dst_file + '/150_HarrisCorner.jpg', img)
# cv2.imshow('HarrisCorner', img)
# if cv2.waitKey(0) & 0xff == 27:
#     cv2.destroyAllWindows()

if __name__ == '__main__':
    path = "/media/rezwan/Study/Thesis/Feature_Extraction_Code/dataset/images"
    dst_path = "/media/rezwan/Study/Thesis/Feature_Extraction_Code/dataset/HarrisCorner"
    if not os.path.exists(dst_path):
        os.mkdir(dst_path)
    for ff in os.listdir(path):
        # Read the original image
        imgg = cv2.imread(os.path.join(path, ff))
        # cv2.imshow(str(ff), imgg)
        # if cv2.waitKey(0) & 0xff == 27:
        #     cv2.destroyAllWindows()
        # Apply the operation to the image (Harris corner detection)
        res1 = HarrisCorner(imgg)
        cv2.imwrite(os.path.join(dst_path, str(ff)[:-4] + '_HCorner.jpg'), res1)

- Inputs:

- Outputs:
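
To make the `0.04` argument of `cv2.cornerHarris` less opaque, the Harris response R = det(M) - k * trace(M)^2 can be sketched in plain NumPy. This is a simplified illustration, not OpenCV's implementation: `harris_response` and `box_sum_3x3` are hypothetical names, gradients come from `np.gradient` rather than a Sobel filter, and the window is a fixed 3 x 3 box.

```python
import numpy as np


def box_sum_3x3(a):
    """Sum each 3 x 3 neighbourhood, treating pixels outside the image as 0."""
    h, w = a.shape
    p = np.pad(a, 1, mode="constant")
    return sum(p[r:r + h, c:c + w] for r in range(3) for c in range(3))


def harris_response(gray, k=0.04):
    """Return the Harris response R for every pixel of a 2-D image."""
    gray = np.asarray(gray, dtype=np.float64)
    iy, ix = np.gradient(gray)   # derivatives along rows and columns
    sxx = box_sum_3x3(ix * ix)   # entries of the structure tensor M
    syy = box_sum_3x3(iy * iy)
    sxy = box_sum_3x3(ix * iy)
    det = sxx * syy - sxy * sxy
    trace = sxx + syy
    return det - k * trace * trace


if __name__ == "__main__":
    corner = np.zeros((8, 8))
    corner[4:, 4:] = 1.0
    print(harris_response(corner)[4, 4])
```

R is positive where the gradient varies in two directions (a corner), negative along a straight edge, and zero in flat regions, which is why thresholding against a fraction of the maximum marks corners only.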

(5) FAST Algorithm for Corner Detection. Source

def FAST(img):
    # Initiate the FAST detector with threshold 0; other parameters keep their defaults
    fast = cv2.FastFeatureDetector_create(threshold=0)
    # Find and draw the keypoints
    kp = fast.detect(img, None)
    img2 = cv2.drawKeypoints(img, kp, None, color=(255, 0, 0))
    print("Threshold: ", fast.getThreshold())
    print("nonmaxSuppression: ", fast.getNonmaxSuppression())
    print("neighborhood: ", fast.getType())
    print("Total Keypoints with nonmaxSuppression: ", len(kp))
    # cv2.imwrite('fast_true.png', img2)
    # cv2.imshow('fast_true', img2)
    # if cv2.waitKey(0) & 0xff == 27:
    #     cv2.destroyAllWindows()
    # Disable nonmaxSuppression
    fast.setNonmaxSuppression(False)
    kp = fast.detect(img, None)
    print("Total Keypoints without nonmaxSuppression: ", len(kp))
    img3 = cv2.drawKeypoints(img, kp, None, color=(255, 0, 0))
    return img3

# cv2.imwrite('fast_false.png', img3)
# cv2.imshow('fast_false', img3)
# if cv2.waitKey(0) & 0xff == 27:
#     cv2.destroyAllWindows()

if __name__ == '__main__':
    path = "/media/rezwan/Study/Thesis/Feature_Extraction_Code/dataset/images"
    dst_path = "/media/rezwan/Study/Thesis/Feature_Extraction_Code/dataset/FAST"
    if not os.path.exists(dst_path):
        os.mkdir(dst_path)
    for ff in os.listdir(path):
        # Read the original image
        imgg = cv2.imread(os.path.join(path, ff))
        # cv2.imshow(str(ff), imgg)
        # if cv2.waitKey(0) & 0xff == 27:
        #     cv2.destroyAllWindows()
        # Apply the operation to the image (FAST)
        res1 = FAST(imgg)
        cv2.imwrite(os.path.join(dst_path, str(ff)[:-4] + '_FAST.jpg'), res1)

- Inputs:

- Outputs:
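
The threshold printed by `FAST` controls the segment test at the heart of the detector: a pixel is a corner candidate if enough contiguous pixels on a 16-pixel circle around it are all brighter than the centre plus the threshold, or all darker than the centre minus the threshold. The sketch below shows that test on a list of 16 ring intensities. `is_fast_corner` and `longest_circular_run` are hypothetical names, the run length of 9 is assumed to correspond to OpenCV's default 9-of-16 variant, and the speed-up tricks of the real detector are omitted.

```python
def longest_circular_run(flags):
    """Length of the longest run of True values on a circular list."""
    if all(flags):
        return len(flags)
    best = run = 0
    # Walk the list twice so that runs crossing the end are counted
    for flag in list(flags) + list(flags):
        run = run + 1 if flag else 0
        best = max(best, run)
    return best


def is_fast_corner(center, ring, threshold, n=9):
    """Segment test: `ring` holds the 16 circle intensities in circular order."""
    brighter = [value > center + threshold for value in ring]
    darker = [value < center - threshold for value in ring]
    return max(longest_circular_run(brighter), longest_circular_run(darker)) >= n


if __name__ == "__main__":
    ring = [200] * 9 + [100] * 7
    print(is_fast_corner(100, ring, threshold=20))
```

With a threshold of 0, as used above, any strict intensity difference counts, so many more pixels pass the test than with a larger threshold.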

(6) Texture Recognition using Haralick Texture. Source

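def harlick_extract_features(image):
    # Haralick features are computed on a single-channel image, so convert to grayscale
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    # Calculate Haralick texture features for 4 types of adjacency
    textures = mt.features.haralick(gray)
    # Average over the 4 adjacency directions to get one feature vector
    ht_mean = textures.mean(axis=0)
    return ht_mean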

if __name__ == '__main__':
    path = "/media/rezwan/Study/Thesis/Feature_Extraction_Code/dataset/images"
    dst_path = "/media/rezwan/Study/Thesis/Feature_Extraction_Code/dataset/Haralick"
    if not os.path.exists(dst_path):
        os.mkdir(dst_path)
    for ff in os.listdir(path):
        # Read the original image
        imgg = cv2.imread(os.path.join(path, ff))
        # cv2.imshow(str(ff), imgg)
        # if cv2.waitKey(0) & 0xff == 27:
        #     cv2.destroyAllWindows()
        # Apply the operation to the image (Haralick texture)
        res1 = harlick_extract_features(imgg)
        # The result is a set of numeric features, not an image, so save it as text
        np.savetxt(os.path.join(dst_path, str(ff)[:-4] + '_Haralick.txt'), res1)

- Inputs:

- Outputs:
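
Haralick features are statistics of a gray-level co-occurrence matrix (GLCM), which counts how often two gray levels appear next to each other in a given direction. The sketch below builds a symmetric, normalised GLCM for one direction and derives two of the classic features, contrast and angular second moment. It is an illustration under stated assumptions: `glcm`, `contrast` and `angular_second_moment` are hypothetical names, the offsets must be non-negative, and it does not reproduce the full feature set returned by `mt.features.haralick`.

```python
import numpy as np


def glcm(gray, levels, dr=0, dc=1):
    """Symmetric, normalised co-occurrence matrix for the offset (dr, dc).

    `gray` must hold integers in [0, levels); `dr` and `dc` must be >= 0.
    """
    gray = np.asarray(gray)
    h, w = gray.shape
    first = gray[:h - dr, :w - dc]   # pixel at (r, c)
    second = gray[dr:, dc:]          # its neighbour at (r + dr, c + dc)
    counts = np.zeros((levels, levels), dtype=np.float64)
    np.add.at(counts, (first.ravel(), second.ravel()), 1)
    counts += counts.T               # count each pair in both orders
    return counts / counts.sum()


def contrast(p):
    """Sum of P[i, j] * (i - j)^2: large when neighbours differ strongly."""
    i, j = np.indices(p.shape)
    return float(np.sum(p * (i - j) ** 2))


def angular_second_moment(p):
    """Sum of P[i, j]^2: large when few gray-level pairs dominate."""
    return float(np.sum(p * p))


if __name__ == "__main__":
    demo = np.array([[0, 1], [0, 1]])
    p = glcm(demo, levels=2)
    print(contrast(p), angular_second_moment(p))
```

Repeating this for several directions and averaging the features is the idea behind taking the mean over the adjacency axis.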

(7) Shi-Tomasi Corner Detector & Good Features to Track. Source

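def goodFeaturesToTrack(img):
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    corners = cv2.goodFeaturesToTrack(gray, 25, 0.01, 10)
    if corners is None:
        # No corners were found; return the image unchanged
        return img
    # `np.int0` was removed in NumPy 2.0; cast to a platform integer instead
    corners = corners.astype(np.intp)
    for i in corners:
        x, y = i.ravel()
        cv2.circle(img, (int(x), int(y)), 3, 255, -1)
    # cv2.imshow('goodFeaturesToTrack', img)
    # if cv2.waitKey(0) & 0xff == 27:
    #     cv2.destroyAllWindows()
    return img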

if __name__ == '__main__':
    path = "/media/rezwan/Study/Thesis/Feature_Extraction_Code/dataset/images"
    dst_path = "/media/rezwan/Study/Thesis/Feature_Extraction_Code/dataset/Shi-Tomasi"
    if not os.path.exists(dst_path):
        os.mkdir(dst_path)
    for ff in os.listdir(path):
        # Read the original image
        imgg = cv2.imread(os.path.join(path, ff))
        # cv2.imshow(str(ff), imgg)
        # if cv2.waitKey(0) & 0xff == 27:
        #     cv2.destroyAllWindows()
        # Apply the operation to the image (Shi-Tomasi)
        res1 = goodFeaturesToTrack(imgg)
        cv2.imwrite(os.path.join(dst_path, str(ff)[:-4] + '_shi.jpg'), res1)

- Inputs:

- Outputs:
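
The Shi-Tomasi detector scores each pixel by the smaller eigenvalue of its 2 x 2 structure tensor, and the `0.01` passed to `cv2.goodFeaturesToTrack` is a quality level relative to the best score in the image. The sketch below shows both pieces in isolation. `min_eigenvalue` and `passes_quality` are hypothetical names, and the tensor entries are assumed to be already summed over the window.

```python
import math


def min_eigenvalue(sxx, sxy, syy):
    """Smaller eigenvalue of the symmetric matrix [[sxx, sxy], [sxy, syy]]."""
    half_trace = (sxx + syy) / 2.0
    root = math.sqrt(((sxx - syy) / 2.0) ** 2 + sxy ** 2)
    return half_trace - root


def passes_quality(score, best_score, quality_level=0.01):
    """Keep a corner only if its score reaches a fraction of the best score."""
    return score >= quality_level * best_score


if __name__ == "__main__":
    print(min_eigenvalue(1.0, 0.25, 1.0))  # corner-like tensor
    print(min_eigenvalue(1.0, 0.0, 0.0))   # edge-like tensor
```

An edge has one large and one zero eigenvalue, so its score is zero, while a corner has two large eigenvalues and keeps a high score.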

(8) Fourier Transform. Source

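def fourier(img):
    # The 2-D FFT expects a single-channel image, so convert to grayscale
    img = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    f = np.fft.fft2(img)
    fshift = np.fft.fftshift(f)
    # Add 1 before taking the log so that zero-magnitude bins do not give -inf
    magnitude_spectrum = 20 * np.log(np.abs(fshift) + 1)
    # Scale to 0-255 so that the spectrum can be saved as an 8-bit image
    return cv2.normalize(magnitude_spectrum, None, 0, 255, cv2.NORM_MINMAX).astype(np.uint8)

# rows, cols = img.shape
# crow, ccol = rows // 2, cols // 2
# fshift[crow-30:crow+30, ccol-30:ccol+30] = 0
# f_ishift = np.fft.ifftshift(fshift)
# img_back = np.fft.ifft2(f_ishift)
# img_back = np.abs(img_back)
# return img_back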

if __name__ == '__main__':
    path = "/media/rezwan/Study/Thesis/Feature_Extraction_Code/dataset/images"
    dst_path = "/media/rezwan/Study/Thesis/Feature_Extraction_Code/dataset/Fourier"
    if not os.path.exists(dst_path):
        os.mkdir(dst_path)
    for ff in os.listdir(path):
        # Read the original image
        imgg = cv2.imread(os.path.join(path, ff))
        # cv2.imshow(str(ff), imgg)
        # if cv2.waitKey(0) & 0xff == 27:
        #     cv2.destroyAllWindows()
        # Apply the operation to the image (Fourier transform)
        res1 = fourier(imgg)
        cv2.imwrite(os.path.join(dst_path, str(ff)[:-4] + '_fourier.jpg'), res1)

- Inputs:

- Outputs:
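
The Fourier step can be tried without OpenCV on a small synthetic array, which makes the effect of `fftshift` easy to see: it moves the zero-frequency (DC) term to the centre of the spectrum. The sketch below is a minimal illustration with hypothetical helper names (`log_magnitude_spectrum`, `to_uint8`); it uses `np.log1p` to avoid taking the log of zero and rescales the result to 8 bits for saving.

```python
import numpy as np


def log_magnitude_spectrum(gray):
    """Return 20 * log(1 + |F|) of a 2-D image with the DC term centred."""
    f = np.fft.fft2(np.asarray(gray, dtype=np.float64))
    fshift = np.fft.fftshift(f)
    return 20 * np.log1p(np.abs(fshift))


def to_uint8(values):
    """Linearly rescale an array to the range 0-255 as uint8."""
    values = np.asarray(values, dtype=np.float64)
    span = values.max() - values.min()
    if span == 0:
        return np.zeros(values.shape, dtype=np.uint8)
    return np.round(255 * (values - values.min()) / span).astype(np.uint8)


if __name__ == "__main__":
    spectrum = log_magnitude_spectrum(np.ones((4, 4)))
    peak = np.unravel_index(spectrum.argmax(), spectrum.shape)
    print(peak)
```

For a constant image all the energy sits in the DC term, so the only bright pixel of the spectrum is the centre one.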