Characteristic Guidance Web UI is an extension for the Stable Diffusion web UI (AUTOMATIC1111). It offers a theory-backed guidance sampling method with improved sample and control quality at high CFG scales (10-30).

This is the official implementation of Characteristic Guidance: Non-linear Correction for Diffusion Model at Large Guidance Scale. We are happy to announce that this work has been accepted by ICML 2024.

Characteristic guidance offers improved sample generation and control at high CFG scale. Try characteristic guidance for:

- Detail refinement

- Fixing quality issues, like

  - Weird colors and styles

  - Bad anatomy (not guaranteed 🤣, works better on Stable Diffusion XL)

  - Strange backgrounds

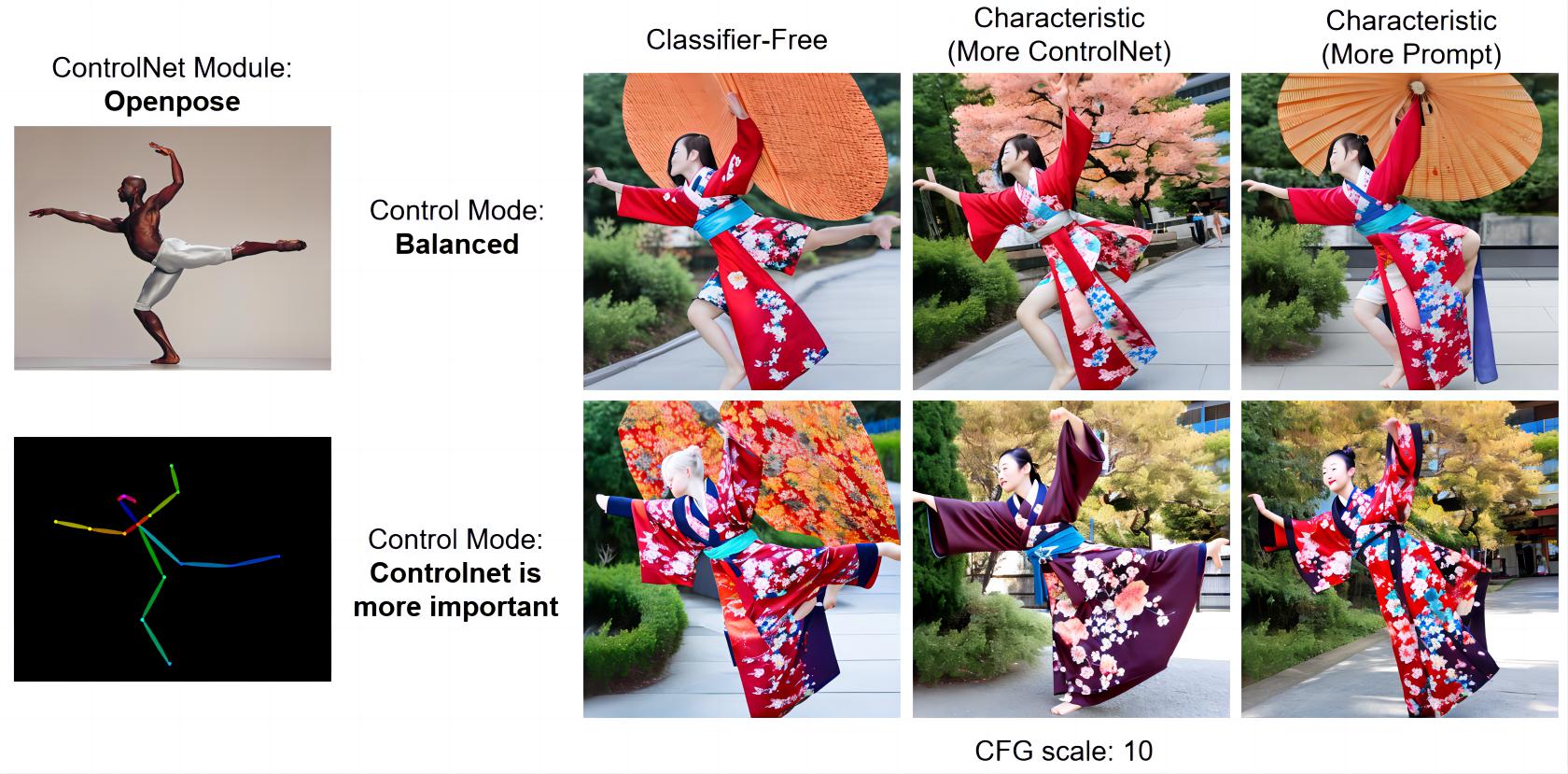

Characteristic guidance is compatible with all existing sampling methods in Stable Diffusion WebUI. It now has preliminary support for ControlNet.

For more information and previews, please visit our project website: Characteristic Guidance Project Website.

Q&A: What's the difference with Dynamical Thresholding?

They are distinct and independent methods that can be used either separately or together.

- Characteristic Guidance: Corrects both context and color, works at the given CFG scale, iteratively corrects input of the U-net according to the Fokker-Planck equation.

- Dynamical Thresholding: Mainly focuses on color, works to mimic lower CFG scales, clips and rescales the output of the U-net.

Using Characteristic Guidance and Dynamical Thresholding simultaneously may further reduce saturation.
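To make the "clips and rescales output of the U-net" description concrete, here is a minimal NumPy sketch of plain classifier-free guidance followed by a percentile-based clip-and-rescale. The function names and the 99.5 percentile are illustrative assumptions, and the actual Dynamical Thresholding extension may work differently. Characteristic guidance itself is not shown, since it corrects the U-net input iteratively rather than post-processing the output.

```python
import numpy as np

def classifier_free_guidance(eps_uncond, eps_cond, cfg_scale):
    """Standard CFG: extrapolate from the unconditional towards the conditional output."""
    return eps_uncond + cfg_scale * (eps_cond - eps_uncond)

def clip_and_rescale(prediction, percentile=99.5):
    """Illustrative thresholding (assumption): clip to [-s, s] and divide by s.

    s is a high percentile of the absolute values, never smaller than 1,
    so predictions that are already in range are left untouched.
    """
    s = max(float(np.percentile(np.abs(prediction), percentile)), 1.0)
    return np.clip(prediction, -s, s) / s

# Example with random stand-ins for the two U-net outputs.
rng = np.random.default_rng(0)
eps_uncond = rng.normal(size=(4, 8, 8))
eps_cond = rng.normal(size=(4, 8, 8))
guided = classifier_free_guidance(eps_uncond, eps_cond, cfg_scale=20.0)
tamed = clip_and_rescale(guided)
```

At a high CFG scale the guided output has a much larger range than either input, which is the saturation that the clip-and-rescale step pulls back.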

Before installing and using the Characteristic Guidance Web UI, ensure that the following prerequisites are met:

- Stable Diffusion WebUI (AUTOMATIC1111): Your system must have the Stable Diffusion WebUI by AUTOMATIC1111 installed. This interface is the foundation on which the Characteristic Guidance Web UI operates.

- Version Requirement: The extension is developed for Stable Diffusion WebUI v1.6.0 or higher. It may work with earlier versions, but this is not guaranteed.

Follow these steps to install the Characteristic Guidance Web UI extension:

- Navigate to the "Extensions" tab in the Stable Diffusion web UI.

- In the "Extensions" tab, select the "Install from URL" option.

- Enter the URL https://github.com/scraed/CharacteristicGuidanceWebUI.git into the "URL for extension's git repository" field.

- Click on the "Install" button.

- After waiting for several seconds, a confirmation message should appear indicating successful installation: "Installed into stable-diffusion-webui\extensions\CharacteristicGuidanceWebUI. Use the Installed tab to restart".

- Proceed to the "Installed" tab. Here, click "Check for updates", followed by "Apply and restart UI" for the changes to take effect. Note: Use these buttons for future updates to the CharacteristicGuidanceWebUI as well.

The Characteristic Guidance Web UI features an interactive interface for both txt2img and img2img modes.

Characteristic guidance is slow compared to classifier-free guidance. We recommend generating an image with classifier-free guidance first, then trying characteristic guidance with the same prompt and seed to enhance the image.

Enable Checkbox: Toggles the activation of the Characteristic Guidance features.

Check Convergence Button: Allows users to test and visualize the convergence of their settings. Adjust the regularization parameters if the convergence is not satisfactory.

In practice, convergence is not always guaranteed. If characteristic guidance fails to converge at a certain time step, classifier-free guidance will be adopted at that time step.
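The fallback behaviour described above can be sketched as a bounded iteration that reports which guidance to use at a time step. This is only an illustration: `update` is a hypothetical callable standing in for one characteristic iteration on a correction term, and the residual norm used as the convergence test is an assumption.

```python
import numpy as np

def solve_or_fall_back(update, shape, max_iter=50, log10_tol=-4):
    """Iterate a correction until it stops changing, or give up.

    Returns ("characteristic", correction, iterations) on convergence and
    ("cfg", None, max_iter) otherwise, so the caller can use plain
    classifier-free guidance for this time step.
    """
    tol = 10.0 ** log10_tol
    correction = np.zeros(shape)
    for iteration in range(1, max_iter + 1):
        new_correction = update(correction)
        residual = np.linalg.norm(new_correction - correction)
        correction = new_correction
        if residual < tol:
            return "characteristic", correction, iteration
    return "cfg", None, max_iter

# A contracting toy update converges; a drifting one triggers the fallback.
mode_ok, corr_ok, n_ok = solve_or_fall_back(lambda c: 0.5 * c + 1.0, shape=(3,))
mode_bad, corr_bad, n_bad = solve_or_fall_back(lambda c: c + 1.0, shape=(3,))
```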

Below are the parameters you can adjust to customize the behavior of the guidance correction:

- Regularization Strength: Range 0.0 to 10.0 (default: 1). Adjusts the strength of regularization at the beginning of sampling. Larger regularization means easier convergence and closer alignment with CFG (Classifier Free Guidance).

- Regularization Range Over Time: Range 0.01 to 10.0 (default: 1). Modifies the range of time being regularized. A larger value means slower decay of the regularization strength and hence more time steps being regularized, which affects convergence difficulty and the extent of correction.

- Max Num. Characteristic Iteration: Range 1 to 50 (default: 50). Determines the maximum number of characteristic iterations per sampling time step.

- Num. Basis for Correction: Range 0 to 10 (default: 0). Sets the number of bases for correction, influencing the amount of correction and convergence behavior. More bases mean better quality but harder convergence. Basis number = 0 means batch-wise correction, > 0 means channel-wise correction.

- CHG Start Step: Range 0 to 0.25 (default: 0). Characteristic guidance begins to influence the process from the specified percentage of steps, indicated by CHG Start Step.

- CHG End Step: Range 0.25 to 1 (default: 0). Characteristic guidance ceases to have an effect from the specified percentage of steps, denoted by CHG End Step. Setting this value to approximately 0.4 can significantly speed up the generation process without substantially altering the outcome.

- ControlNet Compatible Mode

  - More Prompt: ControlNet is turned off when iteratively solving characteristic guidance correction.

  - More ControlNet: ControlNet is turned on when iteratively solving characteristic guidance correction.
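As a rough illustration of how CHG Start Step and CHG End Step restrict characteristic guidance to part of the sampling run, the sketch below maps the two fractions onto step indices. The function name and the half-open interval are assumptions; how the extension treats boundary steps and the default value is not specified here.

```python
def chg_is_active(step, total_steps, start_frac, end_frac):
    """Return True if characteristic guidance would run at this 0-based step.

    Assumption: guidance is active while start_frac <= step / total_steps < end_frac.
    """
    progress = step / total_steps
    return start_frac <= progress < end_frac

# With 20 sampling steps and the window [0.25, 0.5), list the active steps.
active_steps = [s for s in range(20) if chg_is_active(s, 20, 0.25, 0.5)]
```

Every step outside the window uses classifier-free guidance only, which is why a smaller CHG End Step shortens generation time.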

- Reuse Correction of Previous Iteration: Range 0.0 to 1.0 (default: 1.0). Controls the reuse of correction from previous iterations to reduce abrupt changes during generation.

- Log 10 Tolerance for Iteration Convergence: Range -6 to -2 (default: -4). Adjusts the tolerance for iteration convergence, trading off between speed and image quality.

- Iteration Step Size: Range 0 to 1 (default: 1.0). Sets the step size for each iteration, affecting the speed of convergence.

- Regularization Annealing Speed: Range 0.0 to 1.0 (default: 0.4). Sets how fast the regularization strength decays to the desired rate. Smaller values potentially ease convergence.

- Regularization Annealing Strength: Range 0.0 to 5 (default: 0.5). Determines how important regularization annealing is in characteristic guidance iterations. A higher value gives higher priority to bringing the regularization level to the specified regularization strength, affecting the balance between annealing and convergence.

- AA Iteration Memory Size: Range 1 to 10 (default: 2). Specifies the memory size for AA (Anderson Acceleration) iterations, influencing convergence speed and stability.
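For readers unfamiliar with Anderson acceleration, the following is a generic textbook-style sketch of it for a fixed-point problem x = g(x), showing where a memory size, a step size, and a log-10 tolerance enter. It is not the extension's implementation; the function name and the toy map `g` are assumptions made for illustration.

```python
import numpy as np

def anderson_fixed_point(g, x0, memory=2, step_size=1.0, log10_tol=-4, max_iter=50):
    """Solve x = g(x) with Anderson acceleration on a flat vector.

    Returns (x, iterations, converged).
    """
    tol = 10.0 ** log10_tol
    x = np.asarray(x0, dtype=float)
    xs, fs = [], []  # recent iterates and their residuals f = g(x) - x
    for iteration in range(max_iter):
        f = g(x) - x
        if np.linalg.norm(f) < tol:
            return x, iteration, True
        xs.append(x)
        fs.append(f)
        # Keep memory + 1 entries, which gives at most `memory` difference columns.
        xs, fs = xs[-(memory + 1):], fs[-(memory + 1):]
        if len(fs) == 1:
            x = x + step_size * f  # plain damped fixed-point step
        else:
            dX = np.stack([xs[i + 1] - xs[i] for i in range(len(xs) - 1)], axis=1)
            dF = np.stack([fs[i + 1] - fs[i] for i in range(len(fs) - 1)], axis=1)
            # Least-squares mix of past residuals that best cancels the current one.
            gamma, *_ = np.linalg.lstsq(dF, f, rcond=None)
            x = x + step_size * f - (dX + step_size * dF) @ gamma
    return x, max_iter, False

# Toy contraction with fixed point 2 * b.
b = np.array([1.0, 2.0, 3.0])
solution, iterations, converged = anderson_fixed_point(lambda x: 0.5 * x + b, np.zeros(3))
```

A larger memory lets the solver combine more past iterates, which can speed up convergence but may also make the least-squares step less stable; a tighter tolerance costs more iterations per time step.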

Please experiment with different settings, especially regularization strength and time range, to achieve better convergence for your specific use case. (In my experience, a high CFG scale needs a relatively large regularization strength and time range for convergence, while a low CFG scale prefers a lower regularization strength and time range for more guidance correction.)

Here is my recommended approach for parameter setting:

- Start by running characteristic guidance with the default parameters (use Regularization Strength=5 for Stable Diffusion XL).

- Verify convergence by clicking the Check Convergence button.

- If convergence is achieved easily:

  - Decrease the Regularization Strength and Regularization Range Over Time to enhance correction.

  - If the Regularization Strength is already minimal, consider increasing the Num. Basis for Correction for improved performance.

- If convergence is not reached:

  - Increment the Max Num. Characteristic Iteration to allow for additional iterations.

  - Should convergence still not occur, raise the Regularization Strength and Regularization Range Over Time for increased regularization.
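The tuning procedure above can be written down as a small decision helper. This is a hypothetical convenience function, not part of the extension: whether convergence was "easy" is the user's own reading of the Check Convergence plot, and the limits 0.0 and 50 are taken from the parameter ranges listed earlier.

```python
def suggest_adjustment(converged_easily, reg_strength, max_iter, max_iter_limit=50):
    """Return the next tuning action as a human-readable string."""
    if converged_easily:
        if reg_strength <= 0.0:
            # Regularization cannot go lower, so add correction capacity instead.
            return "increase Num. Basis for Correction"
        return "decrease Regularization Strength and Regularization Range Over Time"
    if max_iter < max_iter_limit:
        return "increase Max Num. Characteristic Iteration"
    return "increase Regularization Strength and Regularization Range Over Time"

# Example: the defaults did not converge and the iteration cap is already at its maximum.
advice = suggest_adjustment(converged_easily=False, reg_strength=1.0, max_iter=50)
```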

- Thanks to @v0xie: The UI now supports two more parameters.

  - CHG Start Step: Range 0 to 0.25 (default: 0). Characteristic guidance begins to influence the process from the specified percentage of steps, indicated by CHG Start Step.

  - CHG End Step: Range 0.25 to 1 (default: 0). Characteristic guidance ceases to have an effect from the specified percentage of steps, denoted by CHG End Step. Setting this value to approximately 0.4 can significantly speed up the generation process without substantially altering the outcome.

- Effect: Moved the parameter Reuse Correction of Previous Iteration to the advanced parameters. Its default value is set to 1 to accelerate convergence. It now uses the same update direction as the case Reuse Correction of Previous Iteration = 0, regardless of its value.

- User Action Required: Please delete "ui-config.json" from the stable diffusion WebUI root directory for the update to take effect.

- Issue: Infotext with Reuse Correction of Previous Iteration > 0 may not generate the same image as the previous version.

- Effect: Num. Basis for Correction can now take the value 0, which means batch-wise correction instead of channel-wise correction. It is a more suitable default value since it converges faster.

- User Action Required: Please delete "ui-config.json" from the stable diffusion WebUI root directory for the update to take effect.

- Effect: Now the extension still works if the prompt has more than 75 tokens.

- Effect: Now the extension supports models trained in V-prediction mode.

- Effect: Now the extension supports the 'AND' word in positive prompt.

- Current Limitations: Note that characteristic guidance only gives a correction between the positive and negative prompts. Therefore, positive prompts combined by 'AND' will be averaged when computing the correction.

- Extended Settings Range: Regularization Strength & Regularization Range Over Time can now go up to 10.

- Effect: Reproduce classifier-free guidance results at high values of Regularization Strength & Regularization Range Over Time.

- User Action Required: Please delete "ui-config.json" from the stable diffusion WebUI root directory for the update to take effect.
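Several updates ask you to delete "ui-config.json" from the WebUI root directory. Doing it by hand in a file manager is fine; if you prefer a script, a minimal sketch with the Python standard library could look like this. The function name is hypothetical and `webui_root` must point at your own installation. Keep in mind that this file also stores any UI defaults you customized yourself, so those would be lost as well.

```python
from pathlib import Path

def remove_ui_config(webui_root):
    """Delete ui-config.json from the given WebUI root directory.

    Returns True if a file was removed and False if there was nothing to delete.
    """
    config_path = Path(webui_root) / "ui-config.json"
    if config_path.is_file():
        config_path.unlink()
        return True
    return False
```

Run it while the WebUI is stopped, for example as `remove_ui_config("path/to/stable-diffusion-webui")`, then start the WebUI again.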

- Early Support: We're excited to announce preliminary support for ControlNet.

- Current Limitations: As this is an early stage, expect some developmental issues. The integration of ControlNet and characteristic guidance remains a scientific open problem (which I am investigating). Known issues include:

- Iterations failing to converge when ControlNet is in reference mode.

- Thanks to @w-e-w: The UI now supports infotext reading.

- How to Use: Check out this PR for detailed instructions.

If you utilize characteristic guidance in your research or projects, please consider citing our paper:

@misc{zheng2023characteristic,

title={Characteristic Guidance: Non-linear Correction for DDPM at Large Guidance Scale},

author={Candi Zheng and Yuan Lan},

year={2023},

eprint={2312.07586},

archivePrefix={arXiv},

primaryClass={cs.CV}

}