scrapy-plugins / scrapy-playwright

🎭 Playwright integration for Scrapy

License: BSD 3-Clause "New" or "Revised" License


It would be nice to log all Playwright requests and responses made while a page is rendering, so users can see what is going on when they think the spider is hanging. The setting could be optional and disabled by default. Log messages could look exactly like Scrapy's, but state clearly that they describe a Playwright request/response rather than a Scrapy one:

2021-10-14 13:56:17 [playwright-firefox] DEBUG: browser received response (200) <GET https://www.propertyfinder.ae/en/search> (referer: None)

This could help when debugging. On the other hand, it might add a lot of noise to logs, so it can be optional, not enabled by default.

You can do it in the downloader handler by adding page.on("response") and page.on("request") handlers that invoke the log formatter.
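A minimal sketch of such handlers follows. It assumes only that the request and response objects expose `method`, `url`, `headers`, `status` and `request`, as Playwright's Python objects do; the logger name and the message wording are illustrative choices, not part of scrapy-playwright.

```python
import logging
from types import SimpleNamespace

# Hypothetical logger name, chosen to mirror the log line quoted above.
logger = logging.getLogger("playwright-firefox")


def format_browser_request(request) -> str:
    """Build a Scrapy-like message for a request sent by the browser."""
    return f"browser sent request <{request.method} {request.url}>"


def format_browser_response(response) -> str:
    """Build a Scrapy-like message for a response received by the browser."""
    referer = response.request.headers.get("referer")
    return (
        f"browser received response ({response.status}) "
        f"<{response.request.method} {response.url}> (referer: {referer})"
    )


def log_request(request) -> None:
    logger.debug(format_browser_request(request))


def log_response(response) -> None:
    logger.debug(format_browser_response(response))


# Stand-in objects so the sketch runs without a browser.
fake_request = SimpleNamespace(method="GET", url="https://example.org/", headers={})
fake_response = SimpleNamespace(status=200, url="https://example.org/", request=fake_request)
line = format_browser_response(fake_response)
print(line)
```

With a real page the handlers would be attached with `page.on("request", log_request)` and `page.on("response", log_response)`, ideally behind an opt-in setting so the extra log lines stay off by default.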

Is there a way to use ElementHandle inside coroutines?

From the doc example:

href_element = await page.query_selector("a")

await href_element.click()

How does scrapy-playwright relate to the Python version of Playwright? Which classes and functions of scrapy-playwright correspond to those of Playwright? Thanks!

Hey, setting the timeout in PLAYWRIGHT_DEFAULT_NAVIGATION_TIMEOUT, and also in PLAYWRIGHT_LAUNCH_OPTIONS, does not seem to work: the logs still show the default of 30000.

I have a few concerns with the number of instances available to page when using playwright integration with scrapy.

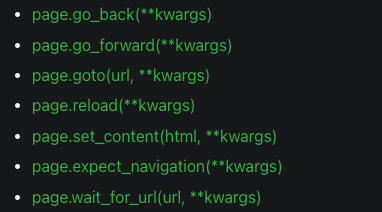

Perhaps I have not yet fully understood the integration (the lack of documentation does not help); however, I have found that the following is not compatible:

AttributeError: 'Page' object has no attribute 'waitForNavigation'

I work a lot with filling in forms and clicking buttons that redirect me to the next page. There does not seem to be a compatible method for waiting until the next page has loaded. I have tried waitForSelector, but it is not effective enough.

Here's what I am working with:

import scrapy
from scrapy_playwright.page import PageCoroutine


class DoorSpider(scrapy.Spider):
    name = 'door'
    start_urls = ['https://nextdoor.co.uk/login/']

    def start_requests(self):
        for url in self.start_urls:
            yield scrapy.Request(
                url=url,
                callback=self.parse,
                meta=dict(
                    playwright=True,
                    playwright_include_page=True,
                    playwright_page_coroutines=[
                        PageCoroutine("click", selector=".onetrust-close-btn-handler.onetrust-close-btn-ui.banner-close-button.onetrust-lg.ot-close-icon"),
                        PageCoroutine("waitForNavigation"),
                        PageCoroutine("fill", "#id_email", 'my_email'),
                        PageCoroutine("fill", "#id_password", 'my_password'),
                        PageCoroutine("waitForNavigation"),
                        PageCoroutine("click", selector="#signin_button"),
                        PageCoroutine("waitForNavigation"),
                        PageCoroutine("screenshot", path="cookies.png", full_page=True)
                    ]
                )
            )

    def parse(self, response):
        yield {
            'data': response.body
        }

The screenshot shows me still on the log-in page. I need to wait until the next page has loaded. I figured waitForUrl would work, since the URL changes after logging in, but scrapy_playwright does not accept it as an argument. What can I use in its place?
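One likely cause of the AttributeError is naming: Playwright's Python API uses snake_case method names, so JavaScript-style names such as waitForNavigation are not attributes of the Python Page object. The sketch below uses a hypothetical `Step` class as a stand-in for `PageCoroutine` so that it runs without Scrapy, and flags any step whose method name is not snake_case. The `wait_for_load_state` replacement is an assumption that should be checked against the installed Playwright version; `wait_for_url` and `wait_for_selector` are other candidates.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Step:
    """Hypothetical stand-in for one page action: method name plus arguments."""
    method: str
    args: tuple = ()
    kwargs: dict = field(default_factory=dict)


_SNAKE_CASE = re.compile(r"^[a-z]+(_[a-z]+)*$")


def non_snake_case(steps):
    """Return the method names that do not follow Python's snake_case style."""
    return [step.method for step in steps if not _SNAKE_CASE.match(step.method)]


# The login sequence from the question, with the wait placed after the click
# that triggers the navigation (assumed replacement for waitForNavigation).
login_steps = [
    Step("fill", ("#id_email", "my_email")),
    Step("fill", ("#id_password", "my_password")),
    Step("click", kwargs={"selector": "#signin_button"}),
    Step("wait_for_load_state", ("networkidle",)),
    Step("screenshot", kwargs={"path": "cookies.png", "full_page": True}),
]

bad = non_snake_case(login_steps + [Step("waitForNavigation")])
print(bad)
```

Note the ordering as well: waiting before the click that submits the form cannot observe the navigation, so the wait step belongs after the click.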

I create a browser context for each proxy, which uses a lot of memory. How can I make a browser context close automatically?

If I use await page.context.close(), it causes a "Target page, context or browser has been closed" error.

Hello,

This script used to respect Scrapy's memory_usage setting.

Now it no longer does…

Enabling it no longer has any effect.

Hello,

I am wondering what the correct way is to catch and handle errors coming from Playwright while crawling.

For example, something like this with Scrapy:

try:
    ……
except (some Playwright error):
    pass
    # do something

Example of a Playwright error:

playwright._impl._api_types.Error: proxy refused
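Scrapy reports exceptions raised while downloading to the request's errback, as a Twisted Failure whose `value` attribute holds the exception, so a try/except inside the callback will not see them. The sketch below shows only the classification step an errback might perform. The function name, the returned labels and the substring matching are illustrative assumptions, and a stand-in object replaces the real Failure so the code runs without Scrapy.

```python
from types import SimpleNamespace


def classify_download_failure(failure) -> str:
    """Map a failure to a coarse label based on the exception message."""
    message = str(failure.value)
    if "proxy refused" in message:
        return "retry_with_other_proxy"
    if "has been closed" in message:
        return "page_closed"
    return "unhandled"


# Stand-ins for the Failure objects Scrapy would pass to an errback.
proxy_failure = SimpleNamespace(value=Exception("proxy refused"))
closed_failure = SimpleNamespace(
    value=Exception("Target page, context or browser has been closed")
)
print(classify_download_failure(proxy_failure))
print(classify_download_failure(closed_failure))
```

In a spider this would be wired up with something like `scrapy.Request(url, meta={"playwright": True}, errback=self.on_error)`, where the hypothetical `on_error` method calls the classifier and decides whether to re-schedule the request.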

Hello I'm trying to use scrapy-playwright with https://github.com/mattes/rotating-proxy

Example without proxies in request

# Settings
DOWNLOAD_HANDLERS = {
    "http": "scrapy_playwright.handler.ScrapyPlaywrightDownloadHandler",
    # "https": "scrapy_playwright.handler.ScrapyPlaywrightDownloadHandler",
}
TWISTED_REACTOR = "twisted.internet.asyncioreactor.AsyncioSelectorReactor"

# PLAYWRIGHT_LAUNCH_OPTIONS = {
#     "headless" : False
# }

# https://github.com/mattes/rotating-proxy
PLAYWRIGHT_CONTEXT_ARGS = {
    'proxy': {
        "server": "http://127.0.0.1:5566"
    }
}
PLAYWRIGHT_MAX_CONTEXTS = 3
PLAYWRIGHT_MAX_PAGES_PER_CONTEXT = 2
CONCURRENT_REQUESTS = 6
CONCURRENT_REQUESTS_PER_IP = 6

# test spider
import scrapy


class TestSpider(scrapy.Spider):
    name = 'test'

    def start_requests(self):
        for url in range(10):
            yield scrapy.Request('https://api.my-ip.io/ip', meta={"playwright": True}, dont_filter=True)

    def parse(self, response):
        print(response.text)

# output

110.93.143.20

2021-07-12 12:31:28 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://api.my-ip.io/ip> (referer: None)

110.93.143.20

110.93.143.20

110.93.143.20

2021-07-12 12:31:28 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://api.my-ip.io/ip> (referer: None)

2021-07-12 12:31:28 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://api.my-ip.io/ip> (referer: None)

2021-07-12 12:31:28 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://api.my-ip.io/ip> (referer: None)

110.93.143.20

110.93.143.20

110.93.143.20

110.93.143.20

110.93.143.20

110.93.143.20

Example with proxies in request

# Spider with proxies in request
import scrapy
import json


class TestSpider(scrapy.Spider):
    name = 'test'

    def start_requests(self):
        for url in range(10):
            yield scrapy.Request('https://api.my-ip.io/ip', meta={"playwright": True, "proxy": "http://127.0.0.1:5566"}, dont_filter=True)

    def parse(self, response):
        print(response.text)

# output with proxies in request

185.191.124.150

2021-07-12 12:36:11 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://api.my-ip.io/ip> (referer: None)

185.191.124.150

2021-07-12 12:36:11 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://api.my-ip.io/ip> (referer: None)

185.191.124.150

2021-07-12 12:36:11 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://api.my-ip.io/ip> (referer: None)

2021-07-12 12:36:11 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://api.my-ip.io/ip> (referer: None)

2021-07-12 12:36:11 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://api.my-ip.io/ip> (referer: None)

185.191.124.150

199.249.230.152

94.140.114.213

2021-07-12 12:36:11 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://api.my-ip.io/ip> (referer: None)

2021-07-12 12:36:11 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://api.my-ip.io/ip> (referer: None)

109.70.100.37

185.191.124.150

2021-07-12 12:36:11 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://api.my-ip.io/ip> (referer: None)

2021-07-12 12:36:11 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://api.my-ip.io/ip> (referer: None)

193.32.126.161

107.189.31.102

Hi,

As mentioned in the README, playwright settings are set inside settings.py.

However, is there a way to set them per page request?

Pass in a different user agent per request. I was hoping to pass something to the meta field.

Cheers

I'm trying to use scrapy-playwright with Firefox and proxies, and it's not easy.

In Playwright for Python (and for Node as well), just passing the proxy configuration is not enough, because the authorization headers are missing from the requests, so I need to set extra headers on the page.

It looks like this in pure Playwright (no Scrapy yet):

import asyncio
import logging
import os
import re
import sys

from playwright.async_api import async_playwright
from w3lib.http import basic_auth_header

logger = logging.getLogger(__name__)
logger.setLevel(logging.DEBUG)
handler = logging.StreamHandler(sys.stdout)
handler.setLevel(logging.DEBUG)
formatter = logging.Formatter('%(asctime)s - %(name)s - %(levelname)s - %(message)s')
handler.setFormatter(formatter)
logger.addHandler(handler)


async def handle_request(request):
    logger.debug(f"Browser request: <"
                 f"{request.method} {request.url}>")


async def handle_response(response):
    # Log responses, just so you know what's going on when Scrapy
    # seems to be inactive
    msg = f"Browser crawled ({response.status}): "
    logger.debug(msg + response.url)
    body = await response.body()
    logger.debug(body)


async def main():
    url = 'https://httpbin.org/headers'
    CRAWLERA_APIKEY = os.environ.get('CRAWLERA_APIKEY')
    CRAWLERA_URL = os.environ.get('CRAWLERA_HOST')
    proxy_auth = basic_auth_header(CRAWLERA_APIKEY, '')
    proxy_settings = {
        "proxy": {
            "server": CRAWLERA_URL,
            "username": CRAWLERA_APIKEY,
            "password": ''
        },
        "ignore_https_errors": True
    }
    DEFAULT_HEADERS = {
        'Proxy-Authorization': proxy_auth.decode(),
        "X-Crawlera-Profile": "pass",
        "X-Crawlera-Cookies": "disable",
    }
    async with async_playwright() as p:
        browser_type = p.firefox
        timeout = 90000
        msg = f"starting rendering page with timeout {timeout}ms"
        logger.info(msg)
        # Launching new browser
        browser = await browser_type.launch()
        context = await browser.new_context(**proxy_settings)
        page = await context.new_page()
        # XXX try to run it with/without this line
        # it gives 407 without it, 200 with
        await page.set_extra_http_headers(DEFAULT_HEADERS)
        page.on('request', handle_request)
        page.on('response', handle_response)
        await page.goto(url, timeout=timeout)


asyncio.run(main())

Without setting extra headers I get this:

python proxies.py

2021-10-15 12:41:19,819 - __main__ - INFO - starting rendering page with timeout 90000ms

2021-10-15 12:41:21,123 - __main__ - DEBUG - Browser request: <GET https://httpbin.org/headers>

2021-10-15 12:41:21,707 - __main__ - DEBUG - Browser crawled (407): https://httpbin.org/headers

2021-10-15 12:41:21,713 - __main__ - DEBUG - b''

With the extra headers set, I get a good response and I can see the traffic in my proxy logs:

python proxies.py

2021-10-15 12:42:28,019 - __main__ - INFO - starting rendering page with timeout 90000ms

2021-10-15 12:42:29,549 - __main__ - DEBUG - Browser request: <GET https://httpbin.org/headers>

2021-10-15 12:42:30,594 - __main__ - DEBUG - Browser crawled (200): https://httpbin.org/headers

2021-10-15 12:42:30,597 - __main__ - DEBUG - b'{\n "headers": {\n "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8", \n "Accept-Encoding": "gzip, deflate, br", \n "Accept-Language": "en-US,en;q=0.5", \n "Host": "httpbin.org", \n "Sec-Fetch-Dest": "document", \n "Sec-Fetch-Mode": "navigate", \n "Sec-Fetch-Site": "cross-site", \n "Upgrade-Insecure-Requests": "1", \n "User-Agent": "Mozilla/5.0 (X11; Linux x86_64; rv:92.0) Gecko/20100101 Firefox/92.0", \n "X-Amzn-Trace-Id": "Root=1-61695b16-097385cb043d01b63d71eb58"\n }\n}\n'

Now I'm trying to do the same thing in scrapy-playwright, and I run into problems.

I cannot easily set extra headers now. I can set event handlers, but according to the docs the request event handler does not allow modifying the request object. Extra headers need to be set after the context is created and before page.goto() is called, and there is currently no easy way to do that from the spider object. If I am missing something, let me know.

To bypass this I subclassed downloader handler.

from scrapy import Request
from scrapy_playwright.handler import ScrapyPlaywrightDownloadHandler

from properties.settings import DEFAULT_PLAYWRIGHT_PROXY_HEADERS


class SetHeadersDownloadHandler(ScrapyPlaywrightDownloadHandler):
    async def _create_page(self, request: Request):
        page = await super()._create_page(request)
        await page.set_extra_http_headers(DEFAULT_PLAYWRIGHT_PROXY_HEADERS)
        return page

I then defined it in the settings. This is a hack, as _create_page is not meant to be overridden, but it works for setting the authorization header. Still, I get 407.

The only way I can make it work is by disabling the scrapy-playwright route handler, so I commented out lines 151 to 161 here: https://github.com/scrapy-plugins/scrapy-playwright/blob/master/scrapy_playwright/handler.py#L151

Now I get the proper result: the spider gets 200 responses, there is no 407 in the logs, and the traffic goes via the proxy.

Scrapy spider code:

import logging
import os

import scrapy
from playwright.async_api import Response, Request

logger = logging.getLogger(__name__)


async def handle_response(response: Response):
    logger.info(response.url + " " + str(response.status))
    logger.info(response.headers)
    return


async def handle_request(request: Request):
    logger.info(request.headers)


CRAWLERA_APIKEY = os.environ.get('CRAWLERA_APIKEY')
CRAWLERA_URL = os.environ.get('CRAWLERA_HOST')


class SomeSpider(scrapy.Spider):
    name = 'example'
    start_urls = [
        "http://httpbin.org/headers"
    ]
    custom_settings = {
        "DOWNLOAD_HANDLERS": {
            "http": "some_project.downloader.SetHeadersDownloadHandler",
            "https": "some_project.downloader.SetHeadersDownloadHandler"
        },
        "PLAYWRIGHT_CONTEXTS": {
            1: {
                "ignore_https_errors": True,
                "proxy": {
                    "server": CRAWLERA_URL,
                    "username": CRAWLERA_APIKEY,
                    "password": "",
                }
            }
        }
    }
    default_meta = {
        "playwright": True,
        "playwright_context": 1,
        "playwright_page_event_handlers": {
            "response": handle_response,
            "request": handle_request
        }
    }

    def start_requests(self):
        for x in self.start_urls:
            yield scrapy.Request(
                x, meta=self.default_meta
            )

    def parse(self, response):
        for url in ["http://httpbin.org/get", "http://httpbin.org/ip"]:
            yield scrapy.Request(url, callback=self.hello,
                                 meta=self.default_meta)

    def hello(self, response):
        logger.debug(response.body)

I get this error with the basic usage: [ "ProactorEventLoop is not supported, got: " ]

Can you help me?

Hi,

This issue is related to #18.

The error still occurs with scrapy-playwright 0.0.4. The Scrapy script crawled about 2,500 of 10k domains from Majestic and then crashed with the error "JavaScript heap out of memory". So I think this is a bug.

My main code:

domain = self.get_domain(url=url)
context_name = domain.replace('.', '_')
yield scrapy.Request(
    url=url,
    meta={
        "playwright": True,
        "playwright_page_coroutines": {
            "screenshot": PageCoroutine("screenshot", domain + ".png"),
        },
        # Create new context
        "playwright_context": context_name,
    },
)

My env:

Python 3.8.10

Scrapy 2.5.0

playwright 1.12.1

scrapy-playwright 0.0.4

The detail of error:

2021-07-17 14:47:48 [scrapy.spidermiddlewares.httperror] INFO: Ignoring response <403 https://www.costco.com/>: HTTP status code is not handled or not allowed

FATAL ERROR: CALL_AND_RETRY_LAST Allocation failed - JavaScript heap out of memory

1: 0xa18150 node::Abort() [/home/ubuntu/.local/lib/python3.8/site-packages/playwright/driver/node]

2: 0xa1855c node::OnFatalError(char const*, char const*) [/home/ubuntu/.local/lib/python3.8/site-packages/playwright/driver/node]

3: 0xb9715e v8::Utils::ReportOOMFailure(v8::internal::Isolate*, char const*, bool) [/home/ubuntu/.local/lib/python3.8/site-packages/playwright/driver/node]

4: 0xb974d9 v8::internal::V8::FatalProcessOutOfMemory(v8::internal::Isolate*, char const*, bool) [/home/ubuntu/.local/lib/python3.8/site-packages/playwright/driver/node]

5: 0xd54755 [/home/ubuntu/.local/lib/python3.8/site-packages/playwright/driver/node]

6: 0xd650a8 v8::internal::Heap::AllocateRawWithRetryOrFail(int, v8::internal::AllocationType, v8::internal::AllocationOrigin, v8::internal::AllocationAlignment) [/home/ubuntu/.local/lib/python3.8/site-packages/playwright/driver/node]

7: 0xd2bd9d v8::internal::Factory::NewFixedArrayWithFiller(v8::internal::RootIndex, int, v8::internal::Object, v8::internal::AllocationType) [/home/ubuntu/.local/lib/python3.8/site-packages/playwright/driver/node]

8: 0xd2be90 v8::internal::Handle<v8::internal::FixedArray> v8::internal::Factory::NewFixedArrayWithMap<v8::internal::FixedArray>(v8::internal::RootIndex, int, v8::internal::AllocationType) [/home/ubuntu/.local/lib/python3.8/site-packages/playwright/driver/node]

9: 0xf5abd0 v8::internal::OrderedHashTable<v8::internal::OrderedHashMap, 2>::Allocate(v8::internal::Isolate*, int, v8::internal::AllocationType) [/home/ubuntu/.local/lib/python3.8/site-packages/playwright/driver/node]

10: 0xf5ac81 v8::internal::OrderedHashTable<v8::internal::OrderedHashMap, 2>::Rehash(v8::internal::Isolate*, v8::internal::Handle<v8::internal::OrderedHashMap>, int) [/home/ubuntu/.local/lib/python3.8/site-packages/playwright/driver/node]

11: 0xf5b2cb v8::internal::OrderedHashTable<v8::internal::OrderedHashMap, 2>::EnsureGrowable(v8::internal::Isolate*, v8::internal::Handle<v8::internal::OrderedHashMap>) [/home/ubuntu/.local/lib/python3.8/site-packages/playwright/driver/node]

12: 0x1051b38 v8::internal::Runtime_MapGrow(int, unsigned long*, v8::internal::Isolate*) [/home/ubuntu/.local/lib/python3.8/site-packages/playwright/driver/node]

13: 0x140a8f9 [/home/ubuntu/.local/lib/python3.8/site-packages/playwright/driver/node]

Aborted (core dumped)

2021-07-17 14:48:34 [scrapy.extensions.logstats] INFO: Crawled 2533 pages (at 15 pages/min), scraped 2362 items (at 12 items/min)

Temporary fix: because my script uses one context per domain, I replaced line 166 of handler.py with await page.context.close() to close the current context. This fixes the "Allocation failed - JavaScript heap out of memory" error, and the Scrapy script crawled all 10k domains, but the success rate dropped to about 72%, compared with about 85% without the added code. With the new code I also got these new errors:

2021-07-17 15:04:59 [scrapy.core.scraper] ERROR: Error downloading <GET http://usatoday.com>

Traceback (most recent call last):

File "/usr/lib/python3/dist-packages/twisted/internet/defer.py", line 1416, in _inlineCallbacks

result = result.throwExceptionIntoGenerator(g)

File "/usr/lib/python3/dist-packages/twisted/python/failure.py", line 491, in throwExceptionIntoGenerator

return g.throw(self.type, self.value, self.tb)

File "/home/ubuntu/.local/lib/python3.8/site-packages/scrapy/core/downloader/middleware.py", line 44, in process_request

return (yield download_func(request=request, spider=spider))

File "/usr/lib/python3/dist-packages/twisted/internet/defer.py", line 824, in adapt

extracted = result.result()

File "/home/ubuntu/python/scrapy-playwright/scrapy_playwright/handler.py", line 138, in _download_request

result = await self._download_request_with_page(request, page)

File "/home/ubuntu/python/scrapy-playwright/scrapy_playwright/handler.py", line 149, in _download_request_with_page

response = await page.goto(request.url)

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/async_api/_generated.py", line 6006, in goto

await self._async(

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_page.py", line 429, in goto

return await self._main_frame.goto(**locals_to_params(locals()))

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_frame.py", line 117, in goto

await self._channel.send("goto", locals_to_params(locals()))

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_connection.py", line 36, in send

return await self.inner_send(method, params, False)

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_connection.py", line 54, in inner_send

result = next(iter(done)).result()

playwright._impl._api_types.Error: Navigation failed because page was closed!

...

2021-07-17 19:31:15 [asyncio] ERROR: Task exception was never retrieved

future: <Task finished name='Task-38926' coro=<Route.continue_() done, defined at /home/ubuntu/.local/lib/python3.8/site-packages/playwright/async_api/_generated.py:544> exception=Error('Target page, context or browser has been closed')>

Traceback (most recent call last):

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/async_api/_generated.py", line 582, in continue_

await self._async(

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_network.py", line 207, in continue_

await self._channel.send("continue", cast(Any, overrides))

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_connection.py", line 36, in send

return await self.inner_send(method, params, False)

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_connection.py", line 54, in inner_send

result = next(iter(done)).result()

playwright._impl._api_types.Error: Target page, context or browser has been closed

....

2021-07-18 03:51:34 [scrapy.core.scraper] ERROR: Error downloading <GET http://bbc.co.uk>

Traceback (most recent call last):

File "/usr/lib/python3/dist-packages/twisted/internet/defer.py", line 1416, in _inlineCallbacks

result = result.throwExceptionIntoGenerator(g)

File "/usr/lib/python3/dist-packages/twisted/python/failure.py", line 491, in throwExceptionIntoGenerator

return g.throw(self.type, self.value, self.tb)

File "/home/ubuntu/.local/lib/python3.8/site-packages/scrapy/core/downloader/middleware.py", line 44, in process_request

return (yield download_func(request=request, spider=spider))

File "/usr/lib/python3/dist-packages/twisted/internet/defer.py", line 824, in adapt

extracted = result.result()

File "/home/ubuntu/python/scrapy-playwright/scrapy_playwright/handler.py", line 138, in _download_request

result = await self._download_request_with_page(request, page)

File "/home/ubuntu/python/scrapy-playwright/scrapy_playwright/handler.py", line 165, in _download_request_with_page

body = (await page.content()).encode("utf8")

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/async_api/_generated.py", line 5914, in content

await self._async("page.content", self._impl_obj.content())

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_page.py", line 412, in content

return await self._main_frame.content()

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_frame.py", line 325, in content

return await self._channel.send("content")

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_connection.py", line 36, in send

return await self.inner_send(method, params, False)

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_connection.py", line 54, in inner_send

result = next(iter(done)).result()

playwright._impl._api_types.Error: Execution context was destroyed, most likely because of a navigation.

By default, Playwright applies a timeout of 30 seconds to many of its operations.

According to the Playwright API reference, you can pass 0 to disable the timeout for these actions.

The current code (line 68 of scrapy_playwright/handler.py) doesn't work when the value is 0:

self.default_navigation_timeout: Optional[int] = ( crawler.settings.getint("PLAYWRIGHT_DEFAULT_NAVIGATION_TIMEOUT") or None )

As you can see, when PLAYWRIGHT_DEFAULT_NAVIGATION_TIMEOUT is 0, the value of this expression is still None, because

0 or None

evaluates to None in Python.

Right now, I just subclass ScrapyPlaywrightDownloadHandler to work around this issue.

Thank you.
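The falsy-zero behaviour described above can be reproduced without Scrapy. In this sketch a plain dict stands in for the crawler settings, and the two helper functions are hypothetical names used only for illustration. The fixed variant treats "setting absent" and "setting is 0" as different cases by checking for None explicitly.

```python
from typing import Optional

KEY = "PLAYWRIGHT_DEFAULT_NAVIGATION_TIMEOUT"


def read_timeout_buggy(settings: dict) -> Optional[int]:
    """Mirror the reported expression: any falsy value, including 0, becomes None."""
    return int(settings.get(KEY, 0)) or None


def read_timeout_fixed(settings: dict) -> Optional[int]:
    """Return None only when the setting is absent; keep an explicit 0."""
    raw = settings.get(KEY)
    return None if raw is None else int(raw)


print(read_timeout_buggy({KEY: 0}))   # the explicit 0 is lost
print(read_timeout_fixed({KEY: 0}))   # the explicit 0 survives
print(read_timeout_fixed({}))         # unset still maps to None
```

The same idea applies inside the handler: read the raw setting first, compare it with None, and only then convert it to an integer.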

I'm practicing with the Playwright and Scrapy integration, trying to click a select element whose options are hidden. The aim is to click the select, wait for the two hidden options to load, click one of them, and then move on. However, I'm getting the following error:

waiting for selector "option[value='type-2']"

selector resolved to hidden <option value="type-2" defaultvalue="">Type 2 (I started uni on or after 2012)</option>

attempting click action

waiting for element to be visible, enabled and stable

element is not visible - waiting...

I think the issue is when the selector is clicked, it disappears for some reason. I have implemented a wait on the selector, but the issue still persists.

from scrapy.crawler import CrawlerProcess
import scrapy
from scrapy_playwright.page import PageCoroutine


class JobSpider(scrapy.Spider):
    name = 'job_play'
    custom_settings = {
        'USER_AGENT': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/15.2 Safari/605.1.15',
    }

    def start_requests(self):
        yield scrapy.Request(
            url='https://www.student-loan-calculator.co.uk/',
            callback=self.parse,
            meta=dict(
                playwright=True,
                playwright_include_page=True,
                playwright_page_coroutines=[
                    PageCoroutine("fill", "#salary", '28000'),
                    PageCoroutine("fill", "#debt", '25000'),
                    PageCoroutine("click", selector="//select[@id='loan-type']"),
                    PageCoroutine('wait_for_selector', "//select[@id='loan-type']"),
                    PageCoroutine('click', selector="//select[@id='loan-type']/option[2]"),
                    PageCoroutine('wait_for_selector', "//div[@class='form-row calculate-button-row']"),
                    PageCoroutine('click', selector="//button[@class='btn btn-primary calculate-button']"),
                    PageCoroutine('wait_for_selector', "//div[@class='container results-table-container']"),
                    PageCoroutine("wait_for_timeout", 5000),
                ]
            ),
        )

    def parse(self, response):
        container = response.xpath("//div[@class='container results-table-container']")
        for something in container:
            yield {
                'some': something
            }


if __name__ == "__main__":
    process = CrawlerProcess(
        settings={
            "TWISTED_REACTOR": "twisted.internet.asyncioreactor.AsyncioSelectorReactor",
            "DOWNLOAD_HANDLERS": {
                "https": "scrapy_playwright.handler.ScrapyPlaywrightDownloadHandler",
                "http": "scrapy_playwright.handler.ScrapyPlaywrightDownloadHandler",
            },
            "CONCURRENT_REQUESTS": 32,
            "FEED_URI": 'loans.jl',
            "FEED_FORMAT": 'jsonlines',
        }
    )
    process.crawl(JobSpider)
    process.start()

By contrast, this works fine with Selenium using the same XPaths, so I wonder whether Playwright can handle invisible elements. Furthermore, is there a Playwright equivalent of Selenium's clear() function for clearing the text of an input?

Something that can implement this with Playwright:

import pandas as pd
from selenium import webdriver
from selenium.webdriver.common.by import By

debt = ['29900', '28900', '27900', '26900']
salary = ['39900', '34900', '27900', '26900']

for sal, deb in zip(salary, debt):
    driver = webdriver.Chrome()
    driver.get("https://www.student-loan-calculator.co.uk/")
    # Clear each input before typing the new value
    driver.find_element(By.XPATH, "//input[@id='salary']").clear()
    driver.find_element(By.XPATH, "//input[@id='salary']").send_keys(sal)
    driver.find_element(By.XPATH, "//input[@id='debt']").clear()
    driver.find_element(By.XPATH, "//input[@id='debt']").send_keys(deb)
    driver.find_element(By.XPATH, "//select[@id='loan-type']").click()
    driver.find_element(By.XPATH, "//select[@id='loan-type']/option[2]").click()
    driver.find_element(By.XPATH, "//button[@class='btn btn-primary calculate-button']").click()
    # Keep the page source in its own variable instead of overwriting the driver
    html = driver.page_source
    table = pd.read_html(html)
    #soup = BeautifulSoup(html, 'lxml')
    print(table)
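As far as I know, Playwright's `fill` replaces whatever value an input already holds, so no separate clear() call is needed, and `select_option` chooses an entry of a `<select>` without clicking the hidden `<option>` element. The sketch below only builds the per-pair action plan as plain tuples so it runs without a browser. The `build_steps` helper is hypothetical, the value "type-2" comes from the error log above, and the CSS selectors are assumptions derived from the XPaths in the Selenium code.

```python
debts = ["29900", "28900", "27900", "26900"]
salaries = ["39900", "34900", "27900", "26900"]


def build_steps(salary: str, debt: str) -> list:
    """Return (method, selector, value) tuples for one salary/debt pair."""
    return [
        ("fill", "#salary", salary),                 # fill overwrites the current value
        ("fill", "#debt", debt),
        ("select_option", "#loan-type", "type-2"),   # no click on the hidden <option>
        ("click", "button.calculate-button", None),
    ]


plans = [build_steps(sal, deb) for sal, deb in zip(salaries, debts)]
for plan in plans:
    print(plan)
```

Each tuple could then be turned into a `PageCoroutine(method, selector, value)` entry (omitting the value for `click`), one request per salary/debt pair.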

I am scraping a page with 500 items, but I can't get the URLs of all the images because most of them have not loaded before the browser is closed. Some src attributes have their correct URL, but for the images that have not loaded I get data:image/gif;base64,R0lGODlhAQABAAAAACH5BAEKAAEALAAAAAABAAEAAAICTAEAOw==

Here is part of my code. I get AttributeError: 'ScrapyPlaywrightDownloadHandler' object has no attribute 'contexts' when I add PageCoroutine("wait_for_timeout", 120000):

def start_requests(self):
    yield scrapy.Request(
        url=self.casami_url_list_product,
        # meta={"playwright": True},
        meta=dict(playwright=True,
                  playwright_include_page=True,
                  playwright_page_coroutines=[
                      PageCoroutine(
                          'wait_for_selector', "//div[contains(@class, 'products-list')]"),
                      PageCoroutine("evaluate", "window.scrollBy(0, document.body.scrollHeight)"),
                      PageCoroutine("wait_for_timeout", 120000),  # got AttributeError: 'ScrapyPlaywrightDownloadHandler' object has no attribute 'contexts' when declared
                      PageCoroutine("screenshot", path=Path(__file__).parent / "recovered.png", full_page=True)
                  ])
    )

# async def parse(self, response):
def parse(self, response):
    all_product_items = response.xpath("//div[contains(@class, 'products-list')]"
                                       "//div[contains(@class, 'product-layout product-grid')]")
    product_name_xpath = "//div[contains(@class, 'product-image-container')]/a/@title"
    product_image_url_xpath = "//div[contains(@class, 'product-image-container')]//img/@src"
    product_price_xpath = "//div[contains(@class, 'price')]/span[contains(@class,'price-new')]/text()"
    product_detail_url_xpath = "//div[contains(@class, 'product-image-container')]/a/@href"
    for product_item in all_product_items:
        yield {"name": product_item.xpath("." + product_name_xpath).get(),
               "price": product_item.xpath("." + product_price_xpath).get(),
               "image_url": product_item.xpath("." + product_image_url_xpath).get(),
               "product_detail_url": product_item.xpath("." + product_detail_url_xpath).get()}

Thanks for reply

I have been trying to figure out how to scrape all the pages reachable from the URL in my script. Unfortunately, Playwright is tremendously slow when integrated with Python, and scraping the pages takes a very long time. My main issue, however, is that the crawl stops before scraping all the pages. For example, my script below should run through all 55 pages, but several pages are missing from the output, especially pages 24 to 30.

I have double-checked that the links themselves are not the problem by scraping only those pages, and that works. So it is probably an issue with the Playwright-to-Scrapy integration, which will likely need to be looked into.

What might be causing this issue?

from scrapy.crawler import CrawlerProcess

import scrapy

from scrapy_playwright.page import PageCoroutine

headers1 = {'authority': 'www.jobsite.co.uk',

'method': 'POST',

'path': '/Account/AccountDetails/GetUsersEmailAddress',

'scheme': 'https',

'accept': '*/*',

'accept-encoding': 'gzip, deflate, br',

'accept-language': 'en-GB,en-US;q=0.9,en;q=0.8',

'content-length': '0',

'cookie': 'VISITOR_ID=3c553849d1dc612f60515f04d9316813; INEU=1; PJBJOBSEEKER=1; LOCATIONJOBTYPEID=3079; AnonymousUser=MemberId=c3e94bc3-3fcf-423d-bc25-e5a5818cd2b9&IsAnonymous=True; visitorid=46315422-d835-4a38-b428-4b9c5d6243d3; s_fid=6468EA5E39AF374B-2F7C971BB196D965; sc_vid=7c12948068d2cb92c1f1622aeaabc62d; bm_sz=040B815C79F42322C6F55B9A84059612~YAAQkjMHYIT0S/19AQAAdntxMA5K8hYQ/xEiGMe5kfWsY2+92eOVpwnewEY3vnLuUAjKAoVn2uSc2MzFaqBHPm0WC+sc06cnqNlX38HRuIOFGmX9bGf1X19+IWQ9fmRcCpTgdEgVQXn5I+JxiWbVQQ/b+BDWc/7sO4SSp4dohaK4HtUBBn9dM816ldpcebE6wARHqJ1/rU2D7CQWDlwZKElVWpckfSqbkRAGZU2LcR236v7y7VvEgQXDbLZt1+hK1XQdwGOk/IqCUJ/S8tN5+bv61EoN48XNoa59wW1nXXJ/Nt7dJ6k=~4408632~4277301; listing_page__qualtrics=run; SsaSessionCookie=ab1f18f8-ec5d-4009-a914-95d2f882a97f; s_cc=true; SessionCookie=e6f10e4e-d580-4080-b16e-4a0005e1250b; bm_mi=02373D7983A008BAA61DFB006C1C8A0E~QKtA+MDyql6ENWQY8PRxd7Pqtaufphkw420ior2myH3DZ6Ae19lIXWpswSDF1rxxpCOMw1A/Xj42mDE45UQBoYGNtM/WLe8kQUicaFQ14Ins6++/b8PJ4xOJdMAzc/JImoYfkrrTk4S32JJvvPvneHe14i0EboPJo0IfpWuVuAPK4z0pY8kP2jAi0AkWvLF1G0QNYSU48wpAXe3WmnZOTGnP4zqowbVMiZiFt4sf5yR3CZSS9wV2TDNedylo0bieo1FUG7zqZiEbO/mFsWcRBI1180TWS6BGAAbBEZvxyw0HnlqrsPIp5quduoSS8Ehd; gpv_pn=%2FJobSearch%2FResults.aspx; s_sq=%5B%5BB%5D%5D; bm_sv=F6E62687D7A0F5C67D3AF1CABD6B9744~fDgputVUji95V4kU8PeHKlB8aj5CZ3qqhrtnsQRccZT2TKTMz/FUh5DwGwGO5oL7Y3wv0jsshnBru8dpe3/nUrtBcQ+14jM0dn5AqDN6LrxRfmTkiwnAcczsZZH2/Xj2fVroEyjGwc6FRdbCXZYL8Kvie1so2TY11EOz62OtcLw=; ak_bmsc=23E489A2DC2C230BB1D33B05DBA9BFEB~000000000000000000000000000000~YAAQ1DMHYDj7Z+19AQAAuhiDMA5vZLtn2bkBpZKSIYdvX1kjNcbAGcvQkRrju3gAjN4luOn2/AXFtXR4Vf23GoBabEa0H6ti6nj7K5wYDnaRL7IxtdNQiXNeh9Y4JSPFC8qoJ28QZu4CgEuYtITCS31GhjHOkORGy4//xNOE9SgwfqJ6OYLOV7ynQ4xrMGzo9Z62kCq48cIOkex/jCf1acEm56q1c6iY0qV/ide37GOWm+iAE/Y6irsdg6u+h3ZnF4r6fSFLhVmAL8jRN4j4m4QcY8O6E+L88V5r9cWDxHqviyBpuq2MIVT/UUmJmveSusPdJaHSykqozx1n8MciFQDT4YVoFv26ZzzLP6UPHHmGQVUAMBejmVp4Z4Rku5uzWpmJqunufp0oXBbj8VO90NzhAjxrVbkcmUN4fsdCBPi1a/E3GY4WHoVy1IFAOq1JIFReOfrK9oodOkAlNLvEmw==; 
FreshUserTemp=https://www.jobsite.co.uk/jobs/degree-mathematics?s=header; TJG-Engage=1; CONSENTMGR=c1:0%7Cc2:0%7Cc3:0%7Cc4:0%7Cc5:0%7Cc6:0%7Cc7:0%7Cc8:0%7Cc9:1%7Cc10:0%7Cc11:0%7Cc12:0%7Cc13:0%7Cc14:0%7Cc15:0%7Cts:1641492245529%7Cconsent:true; utag_main=v_id:017e24e57d970023786b817ac51005079001e071009e2$_sn:19$_se:21$_ss:0$_st:1641494045530$ses_id:1641490250874%3Bexp-session$_pn:11%3Bexp-session$PersistedFreshUserValue:0.1%3Bexp-session$PersistedClusterId:OTHER--9999%3Bexp-session; s_ppvl=%2FJobSearch%2FResults.aspx%2C13%2C13%2C743%2C1600%2C741%2C1600%2C900%2C2%2CP; s_ppv=%2FJobSearch%2FResults.aspx%2C43%2C13%2C2545%2C1105%2C741%2C1600%2C900%2C2%2CP; EntryUrl=/jobs/Degree-Accounting-and-Finance?s=header; SearchResults="96078524,96081944,96081940,96081932,96028790,96023400,95997230,95988474,95911593,95886582,95822047,95794932,95717150,96049263,96104930,96103354,96097166,96094806,96103713,96104313,96092943,96078775,96091168,96097009,96106218"; _abck=508823E0A454CEF8D6A48101DB66BDB8~-1~YAAQZIlJF/Qx/rR9AQAA6CaQMAcuV5W+q0rR81SHANopxLna+xREkvs/PgVa+4GQrKei8nvkruKjFI1ij6jDgOcLUe+NwnbBvvnhhAf3QYmHwP9plWN11rY4azCfJX98LZ8WTUvDj3qai57Y/xVKGQtvm8/rDrZODEUq4HM1iieSnIopTWGQv5UQYUbPr/IIyi3ajN+BCywOEhCk/1TqDAdQpEmfdwAyyMm2dae0UrPYPL9TtQhJR5md0vudL6ViRDANKpm3XhiWQ/gQzNEVhzvTufprBqOfvqWYImvZtByld5i8Szqhffs78xg2LE4FE4dXeZDEz5/DlV0S3KUIZdTU/tpwqDzTMECOKiG6EgR5s2YQxgwpbbzFRFJ0N8tjHO2VcMzmyfHH7pOfoW6acVh+Ww5skZbN1kB8fA==~-1~||1-IIljDRDehm-1-10-1000-2||~-1',

'origin': 'https://www.jobsite.co.uk',

'referer': 'https://www.jobsite.co.uk/jobs/Degree-Accounting-and-Finance?s=header',

'sec-ch-ua': '" Not A;Brand";v="99", "Chromium";v="96", "Google Chrome";v="96"',

'sec-ch-ua-mobile': '?0',

'sec-ch-ua-platform': '"macOS"',

'sec-fetch-dest': 'empty',

'sec-fetch-mode': 'cors',

'sec-fetch-site': 'same-origin',

'user-agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.110 Safari/537.36',

'x-requested-with': 'XMLHttpRequest'

}

class JobSpider(scrapy.Spider):
    name = 'job_play'
    start_urls = []
    #html = f'https://www.jobsite.co.uk/jobs/{}' We implement this if it works
    custom_settings = {
        'USER_AGENT':'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/15.2 Safari/605.1.15',
    }

    def start_requests(self):
        url = 'https://www.jobsite.co.uk/jobs/degree-mathematics'
        import requests
        from bs4 import BeautifulSoup
        r = requests.get(url, headers = headers1)
        soup = BeautifulSoup(r.content, 'lxml')
        pages = soup.select('.PageLink-sc-1v4g7my-0.gwcKwa[data-at]')
        data = []
        for i in pages:
            data = pages[-1].text.strip()
        for i in range(1, int(data)+1):
            self.start_urls.append(f'https://www.jobsite.co.uk/jobs/degree-mathematics?page={i}')
        for urls in self.start_urls:
            yield scrapy.FormRequest(
                url = urls,
                callback = self.parse,
                meta= dict(
                    playwright = True,
                    playwright_include_page = True,
                    playwright_page_coroutines = [
                        PageCoroutine('wait_for_selector', 'div.row.job-results-row')
                    ]
                ),
                cb_kwargs = {
                }
            )

    def parse(self, response):
        for jobs in response.xpath("//a[contains(@class, 'sc-fzqBkg')][last()]"):
            yield response.follow(
                jobs,
                callback=self.parse_jobs,
                meta= dict(
                    playwright = True,
                    playwright_include_page = True,
                    playwright_page_coroutines = [
                        PageCoroutine('wait_for_selector', '.container.job-content')
                    ]
                )
            )

    async def parse_jobs(self, response):
        yield {
            "url": response.url,
            "title": response.xpath("//h1[@class='brand-font']//text()").get(),
            "price": response.xpath("//li[@class='salary icon']//div//text()").get(),
            "organisation": response.xpath("//a[@id='companyJobsLink']//text()").get()
        }

if __name__ == "__main__":
    process = CrawlerProcess(
        settings={
            "TWISTED_REACTOR": "twisted.internet.asyncioreactor.AsyncioSelectorReactor",
            "DOWNLOAD_HANDLERS": {
                "https": "scrapy_playwright.handler.ScrapyPlaywrightDownloadHandler",
                "http": "scrapy_playwright.handler.ScrapyPlaywrightDownloadHandler",
            },
            "FEED_URI":'jobs_test2.jl',
            "FEED_FORMAT":'jsonlines',
        }
    )
    process.crawl(JobSpider)
    process.start()

It also seems to open multiple Chrome browsers, which eat up a lot of my RAM during processing. Scraping the links above seems to require a lot of memory.

The scraper's ability to output items seems unaffected, but at the end of the playwright request's response callback I get the following.

2021-01-26 11:58:58 machinename asyncio[21762] ERROR Task exception was never retrieved

future: <Task finished coro=<Route.continue_() done, defined at /Users/jessica/.local/share/virtualenvs/upwork-scrapers-2AkzeUYG/lib/python3.7/site-packages/playwright/async_api.py:481> exception=Error('Target page, context or browser has been closed')>

Traceback (most recent call last):

File "/Users/jessica/.local/share/virtualenvs/upwork-scrapers-2AkzeUYG/lib/python3.7/site-packages/playwright/async_api.py", line 508, in continue_

postData=postData,

File "/Users/jessica/.local/share/virtualenvs/upwork-scrapers-2AkzeUYG/lib/python3.7/site-packages/playwright/_network.py", line 195, in continue_

await self._channel.send("continue", cast(Any, overrides))

File "/Users/jessica/.local/share/virtualenvs/upwork-scrapers-2AkzeUYG/lib/python3.7/site-packages/playwright/_connection.py", line 36, in send

return await self.inner_send(method, params, False)

File "/Users/jessica/.local/share/virtualenvs/upwork-scrapers-2AkzeUYG/lib/python3.7/site-packages/playwright/_connection.py", line 47, in inner_send

result = await callback.future

playwright._types.Error: Target page, context or browser has been closed

issue: Hi, I have a problem. When I press Ctrl+C to stop Scrapy, it gets stuck at await self._proc.wait(), so the process does not exit normally.

device: MacBook Pro (13-inch, M1, 2020)

env: Python 3.7 with rosetta

code:

in /usr/local/lib/python3.7/site-packages/playwright/_impl/_transport.py

async def wait_until_stopped(self) -> None:
    await self._stopped_future
    print(type(self._proc))
    print("-------")
    # await self._proc.communicate(input=None)
    await self._proc.wait()
    print("xxxxxxx")

log:

2022-01-27 19:10:58 [scrapy_playwright] INFO: Closing context

2022-01-27 19:10:58 [scrapy_playwright] INFO: Closing browser

2022-01-27 19:10:58 [asyncio] DEBUG: poll took 2.941 ms: 1 events

2022-01-27 19:10:58 [asyncio] DEBUG: poll took 11.594 ms: 1 events

2022-01-27 19:10:58 [asyncio] DEBUG: poll took 3.039 ms: 1 events

2022-01-27 19:10:58 [scrapy_playwright] INFO: Closed browser

2022-01-27 19:10:58 [scrapy_playwright] INFO: 8633280000

2022-01-27 19:10:58 [scrapy_playwright] INFO: MainThread

111

222

<class 'playwright._impl._transport.PipeTransport'>

2022-01-27 19:10:58 [asyncio] DEBUG: poll took 1.659 ms: 2 events

2022-01-27 19:10:58 [asyncio] INFO: <_UnixReadPipeTransport fd=17 polling> was closed by peer

2022-01-27 19:10:58 [asyncio] INFO: <_UnixWritePipeTransport fd=16 idle bufsize=0> was closed by peer

2022-01-27 19:10:58 [asyncio] DEBUG: poll took 3.045 ms: 1 events

2022-01-27 19:10:58 [asyncio] INFO: <_UnixReadPipeTransport fd=15 polling> was closed by peer

<class 'asyncio.subprocess.Process'>

-------

Hi there.

I'm new to scrapy-playwright. After reading the whole documentation and the issues, I can't find an option for launch_persistent_context. Is there anything I missed, or is it not supported yet?

Thanks
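For readers landing here later: more recent scrapy-playwright releases document persistent contexts through the PLAYWRIGHT_CONTEXTS setting, where a context whose arguments include user_data_dir is launched with launch_persistent_context. The fragment below is a minimal settings sketch under that assumption; the context name and the directory path are placeholders, and whether it applies depends on the installed version.

```python
# settings.py (sketch; assumes a scrapy-playwright release that supports
# persistent contexts through the user_data_dir keyword)
PLAYWRIGHT_CONTEXTS = {
    "persistent": {  # placeholder context name
        "user_data_dir": "/path/to/profile",  # placeholder directory
    },
}

# A request would then select this context through its meta, for example:
REQUEST_META = {"playwright": True, "playwright_context": "persistent"}
```
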

Hi,

I ran many tests scraping thousands of URLs and confirmed the error Ineffective mark-compacts near heap limit Allocation failed - JavaScript heap out of memory. The error occurred after I had crawled about 2500 URLs from https://majestic.com/reports/majestic-million, and the Scrapy script crashed. I tested on:

multiple-contexts branch from #13, creating a new context per domain, thanks to the example in context example

So, I guess this is a bug.

The detail of error:

FATAL ERROR: Ineffective mark-compacts near heap limit Allocation failed - JavaScript heap out of memory

1: 0xa18150 node::Abort() [/home/ubuntu/.local/lib/python3.8/site-packages/playwright/driver/node]

2: 0xa1855c node::OnFatalError(char const*, char const*) [/home/ubuntu/.local/lib/python3.8/site-packages/playwright/driver/node]

3: 0xb9715e v8::Utils::ReportOOMFailure(v8::internal::Isolate*, char const*, bool) [/home/ubuntu/.local/lib/python3.8/site-packages/playwright/driver/node]

4: 0xb974d9 v8::internal::V8::FatalProcessOutOfMemory(v8::internal::Isolate*, char const*, bool) [/home/ubuntu/.local/lib/python3.8/site-packages/playwright/driver/node]

5: 0xd54755 [/home/ubuntu/.local/lib/python3.8/site-packages/playwright/driver/node]

6: 0xd54de6 v8::internal::Heap::RecomputeLimits(v8::internal::GarbageCollector) [/home/ubuntu/.local/lib/python3.8/site-packages/playwright/driver/node]

7: 0xd616a5 v8::internal::Heap::PerformGarbageCollection(v8::internal::GarbageCollector, v8::GCCallbackFlags) [/home/ubuntu/.local/lib/python3.8/site-packages/playwright/driver/node]

8: 0xd62555 v8::internal::Heap::CollectGarbage(v8::internal::AllocationSpace, v8::internal::GarbageCollectionReason, v8::GCCallbackFlags) [/home/ubuntu/.local/lib/python3.8/site-packages/playwright/driver/node]

9: 0xd6500c v8::internal::Heap::AllocateRawWithRetryOrFail(int, v8::internal::AllocationType, v8::internal::AllocationOrigin, v8::internal::AllocationAlignment) [/home/ubuntu/.local/lib/python3.8/site-packages/playwright/driver/node]

10: 0xd32eac v8::internal::Factory::NewRawOneByteString(int, v8::internal::AllocationType) [/home/ubuntu/.local/lib/python3.8/site-packages/playwright/driver/node]

11: 0xd3366d v8::internal::Factory::NewStringFromUtf8(v8::internal::Vector<char const> const&, v8::internal::AllocationType) [/home/ubuntu/.local/lib/python3.8/site-packages/playwright/driver/node]

12: 0xbaf47f v8::String::NewFromUtf8(v8::Isolate*, char const*, v8::NewStringType, int) [/home/ubuntu/.local/lib/python3.8/site-packages/playwright/driver/node]

13: 0xaf5340 node::StringBytes::Encode(v8::Isolate*, char const*, unsigned long, node::encoding, v8::Local<v8::Value>*) [/home/ubuntu/.local/lib/python3.8/site-packages/playwright/driver/node]

14: 0x9f21e6 [/home/ubuntu/.local/lib/python3.8/site-packages/playwright/driver/node]

15: 0x1390d8d [/home/ubuntu/.local/lib/python3.8/site-packages/playwright/driver/node]

Note:

Ineffective mark-compacts near heap limit Allocation failed - JavaScript heap out of memory and he recommends using a new context for each new domain, more detail in playwright

Hi!

https://github.com/microsoft/playwright/releases/tag/v1.7.0 added the browser storage API. How would this be used with this package? Could it be described somewhere in the docs, or is it not possible?

The code:

scrapy.Request(
    url=url,
    cookies=cookies,
    ...
)

The cookies passed this way are not available in the browser.

However, when I call await page.context.add_cookies(cookies=c) in parse, they are available, but this approach is clumsy.
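If the add_cookies workaround is kept, the conversion step can at least be isolated. The sketch below assumes the cookies start out as a plain name-to-value dict, as accepted by scrapy.Request, and turns them into the list of dicts that Playwright's BrowserContext.add_cookies expects (each entry needs name, value, and either url or domain plus path). The helper name to_playwright_cookies is hypothetical.

```python
from typing import Dict, List


def to_playwright_cookies(cookies: Dict[str, str], url: str) -> List[dict]:
    """Convert a {name: value} dict into Playwright-style cookie dicts.

    Hypothetical helper: every cookie is scoped to `url`, which is the
    simplest form that add_cookies accepts.
    """
    return [
        {"name": name, "value": value, "url": url}
        for name, value in cookies.items()
    ]


# Inside an async callback whose request set playwright_include_page=True:
#     page = response.meta["playwright_page"]
#     await page.context.add_cookies(to_playwright_cookies(cookies, response.url))
converted = to_playwright_cookies({"session": "abc"}, "https://example.com")
print(converted)
```
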

I'm trying to extract the last page number from a dynamic site. I've made my XPaths general so that they work with dynamically loaded content. However, it returns 3 when I yield the number. I know that my XPath is correct and that it should be getting the page number.

My question is whether this happens because the browser window is not full-size, so it somehow grabs the wrong number.

Here's what I have tried:

import scrapy
from scrapy_playwright.page import PageCoroutine

class JobSpider(scrapy.Spider):
    name = 'test_play'
    start_urls = ['https://jobsite.co.uk/jobs/Degree-Accounting-and-Finance']
    custom_settings = {
        'USER_AGENT':'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/15.2 Safari/605.1.15'
    }

    def start_requests(self):
        for url in self.start_urls:
            yield scrapy.Request(
                url = url,
                callback = self.parse,
                meta = dict(
                    playwright=True,
                    playwright_include_page = True,
                    playwright_page_coroutines = [
                        PageCoroutine('wait_for_selector', 'div.row.job-results-row')
                    ]
                ))

    def parse(self, response):
        last_page = response.xpath('((//div)[position() mod 5=2]//div[@class]//a)[position() mod 65=53][last()]//text()').get()
        #last_page=int(last_page)
        #for page in range(2, last_page + 1):
        yield {
            'last_page':last_page
        }

Returns:

{'last_page': 3}
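One possible explanation, offered only as a guess, is that a positional XPath (position() mod ...) lands on a different element once the browser has rendered the page, since the rendered DOM may contain more or fewer nodes than expected. A sketch that avoids positions entirely is to collect the text of all pagination links and take the largest number. The helper name and the selector in the comment are hypothetical.

```python
from typing import Iterable, Optional


def last_page_number(link_texts: Iterable[str]) -> Optional[int]:
    """Return the largest purely numeric label, or None if there is none."""
    numbers = [
        int(text.strip())
        for text in link_texts
        if text and text.strip().isdecimal()
    ]
    return max(numbers) if numbers else None


# In the spider, with a placeholder selector for the pagination links:
#     texts = response.css("a.page-link::text").getall()
#     yield {"last_page": last_page_number(texts)}
print(last_page_number(["1", "2", "3", "...", "25", "Next"]))  # prints 25
```
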

When I set TWISTED_REACTOR in spider.custom_settings and send a request, it raises an error:

scrapy.exceptions.NotSupported: Unsupported URL scheme 'https': The installed reactor (twisted.internet.selectreactor.SelectReactor) does not match the requested one (twisted.internet.asyncioreactor.AsyncioSelectorReactor)

I've got a problem where my Request objects are not getting garbage collected but pile up until memory runs out. I've checked this with trackref and objgraph and it looks to me like something in the ScrapyPlaywrightDownloadHandler is keeping a reference to all the Requests? Attached is the objgraph output for the first Request after a few have been handled.

It would be good to have a way to manage multiple browser contexts, either defined at the start of the crawl (in settings, for instance) or created/destroyed during the crawl.

/cc @kalessin

Hey there,

I wanted to try and get started with playwright, and what I did to start off was simply add the meta = {'playwright':True} arguments to the scrapy.Request in an already working spider.

However, now the spider immediately stops working and gives the error:

AttributeError: 'ScrapyPlaywrightDownloadHandler' object has no attribute 'contexts'

I already added the following lines to the settings.py of my script:

DOWNLOAD_HANDLERS = {
    "http": "scrapy_playwright.handler.ScrapyPlaywrightDownloadHandler",
    "https": "scrapy_playwright.handler.ScrapyPlaywrightDownloadHandler",
}

TWISTED_REACTOR = "twisted.internet.asyncioreactor.AsyncioSelectorReactor"

Full log:

2022-01-21 19:12:47 [scrapy.utils.signal] ERROR: Error caught on signal handler: <bound method DownloadHandlers._close of <scrapy.core.downloader.handlers.DownloadHandlers object at 0x7fcddf904220>>

Traceback (most recent call last):

File "/home/jeroen/anaconda3/envs/scratch/lib/python3.8/site-packages/twisted/internet/defer.py", line 1657, in _inlineCallbacks

result = current_context.run(

File "/home/jeroen/anaconda3/envs/scratch/lib/python3.8/site-packages/twisted/python/failure.py", line 500, in throwExceptionIntoGenerator

return g.throw(self.type, self.value, self.tb)

File "/home/jeroen/anaconda3/envs/scratch/lib/python3.8/site-packages/scrapy/core/downloader/handlers/__init__.py", line 81, in _close

yield dh.close()

File "/home/jeroen/anaconda3/envs/scratch/lib/python3.8/site-packages/twisted/internet/defer.py", line 1657, in _inlineCallbacks

result = current_context.run(

File "/home/jeroen/anaconda3/envs/scratch/lib/python3.8/site-packages/twisted/python/failure.py", line 500, in throwExceptionIntoGenerator

return g.throw(self.type, self.value, self.tb)

File "/home/jeroen/anaconda3/envs/scratch/lib/python3.8/site-packages/scrapy_playwright/handler.py", line 146, in close

yield deferred_from_coro(self._close())

File "/home/jeroen/anaconda3/envs/scratch/lib/python3.8/site-packages/twisted/internet/defer.py", line 1031, in adapt

extracted = result.result()

File "/home/jeroen/anaconda3/envs/scratch/lib/python3.8/site-packages/scrapy_playwright/handler.py", line 149, in _close

self.contexts.clear()

AttributeError: 'ScrapyPlaywrightDownloadHandler' object has no attribute 'contexts'

Seems something is wrong with my setup, anyone got an idea where the issue lies?

I am new to scrapy-playwright, so there is a chance I have missed the option, but I did my best to make page.fill work through PageCoroutine without success. Basically, I want to run page.fill('#to_date', '2021-12-17') in the browser before the response is collected. If this is possible with the existing API, please help me with an example that logs in to the Quotes to Scrape site, using page.fill to enter the user name and password. It would help other newbies too.
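A minimal sketch of what such a request could look like, assuming the PageCoroutine(method, *args) form used elsewhere on this page (later releases renamed it to PageMethod). The spider name, the credentials, and the selectors (#username, #password, the submit input, the logout link) are assumptions about the login form rather than something confirmed here.

```python
import scrapy
from scrapy_playwright.page import PageCoroutine


class QuotesLoginSpider(scrapy.Spider):
    name = "quotes_login"  # hypothetical spider name

    def start_requests(self):
        yield scrapy.Request(
            "https://quotes.toscrape.com/login",
            meta={
                "playwright": True,
                "playwright_page_coroutines": [
                    # Each entry names a Page method and its arguments;
                    # they run in order before the response is built.
                    PageCoroutine("fill", "#username", "user"),      # assumed selector
                    PageCoroutine("fill", "#password", "secret"),    # assumed selector
                    PageCoroutine("click", "input[type=submit]"),    # assumed selector
                    PageCoroutine("wait_for_selector", "a[href='/logout']"),
                ],
            },
        )

    def parse(self, response):
        # The body reflects the page after the coroutines above have run.
        yield {"logged_in": bool(response.css("a[href='/logout']"))}
```

The same pattern would cover the date field from the question: PageCoroutine("fill", "#to_date", "2021-12-17").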

Hello,

I'm using scrapy-playwright package to capture screenshot and get html content of 2000 websites, my main code looks simple:

def start_requests(self):
    ....
    yield scrapy.Request(
        url=url,
        meta={"playwright": True, "playwright_include_page": True},
    )
    ....

async def parse(self, response):
    page = response.meta["playwright_page"]
    ...
    await page.screenshot(path=screenshot_file_full_path)
    html = await page.content()
    await page.close()
    ...

There were many errors when I ran the script. I changed CONCURRENT_REQUESTS from 30 to 1, but the results were no different.

My test included 2000 websites, but the Scrapy script scraped only 511 results (about a 25% success rate), and the script keeps running without producing more results or error logs.

Please guide me to fix this. Thanks in advance.

My error logs:

2021-06-22 11:49:53 [scrapy.core.scraper] ERROR: Error downloading <GET https://www.ask.com>

Traceback (most recent call last):

File "/usr/lib/python3/dist-packages/twisted/internet/defer.py", line 1416, in _inlineCallbacks

result = result.throwExceptionIntoGenerator(g)

File "/usr/lib/python3/dist-packages/twisted/python/failure.py", line 491, in throwExceptionIntoGenerator

return g.throw(self.type, self.value, self.tb)

File "/home/ubuntu/.local/lib/python3.8/site-packages/scrapy/core/downloader/middleware.py", line 44, in process_request

return (yield download_func(request=request, spider=spider))

File "/usr/lib/python3/dist-packages/twisted/internet/defer.py", line 824, in adapt

extracted = result.result()

File "/home/ubuntu/.local/lib/python3.8/site-packages/scrapy_playwright/handler.py", line 140, in _download_request

result = await self._download_request_with_page(request, spider, page)

File "/home/ubuntu/.local/lib/python3.8/site-packages/scrapy_playwright/handler.py", line 160, in _download_request_with_page

response = await page.goto(request.url)

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/async_api/_generated.py", line 6006, in goto

await self._async(

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_page.py", line 429, in goto

return await self._main_frame.goto(**locals_to_params(locals()))

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_frame.py", line 117, in goto

await self._channel.send("goto", locals_to_params(locals()))

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_connection.py", line 36, in send

return await self.inner_send(method, params, False)

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_connection.py", line 54, in inner_send

result = next(iter(done)).result()

playwright._impl._api_types.TimeoutError: Timeout 30000ms exceeded.

=========================== logs ===========================

navigating to "https://www.ask.com", waiting until "load"

============================================================

Note: use DEBUG=pw:api environment variable to capture Playwright logs.

2021-06-22 11:51:24 [scrapy.core.scraper] ERROR: Error downloading <GET https://www.tvzavr.ru>

Traceback (most recent call last):

File "/usr/lib/python3/dist-packages/twisted/internet/defer.py", line 1416, in _inlineCallbacks

result = result.throwExceptionIntoGenerator(g)

File "/usr/lib/python3/dist-packages/twisted/python/failure.py", line 491, in throwExceptionIntoGenerator

return g.throw(self.type, self.value, self.tb)

File "/home/ubuntu/.local/lib/python3.8/site-packages/scrapy/core/downloader/middleware.py", line 44, in process_request

return (yield download_func(request=request, spider=spider))

File "/usr/lib/python3/dist-packages/twisted/internet/defer.py", line 824, in adapt

extracted = result.result()

File "/home/ubuntu/.local/lib/python3.8/site-packages/scrapy_playwright/handler.py", line 140, in _download_request

result = await self._download_request_with_page(request, spider, page)

File "/home/ubuntu/.local/lib/python3.8/site-packages/scrapy_playwright/handler.py", line 160, in _download_request_with_page

response = await page.goto(request.url)

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/async_api/_generated.py", line 6006, in goto

await self._async(

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_page.py", line 429, in goto

return await self._main_frame.goto(**locals_to_params(locals()))

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_frame.py", line 117, in goto

await self._channel.send("goto", locals_to_params(locals()))

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_connection.py", line 36, in send

return await self.inner_send(method, params, False)

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_connection.py", line 54, in inner_send

result = next(iter(done)).result()

playwright._impl._api_types.TimeoutError: Timeout 30000ms exceeded.

=========================== logs ===========================

navigating to "https://www.tvzavr.ru", waiting until "load"

============================================================

Note: use DEBUG=pw:api environment variable to capture Playwright logs.

.....

2021-06-22 11:51:06 [asyncio] ERROR: Task exception was never retrieved

future: <Task finished name='Task-11634' coro=<Route.continue_() done, defined at /home/ubuntu/.local/lib/python3.8/site-packages/playwright/async_api/_generated.py:544> exception=Error('Target page, context or browser has been closed')>

Traceback (most recent call last):

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/async_api/_generated.py", line 582, in continue_

await self._async(

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_network.py", line 207, in continue_

await self._channel.send("continue", cast(Any, overrides))

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_connection.py", line 36, in send

return await self.inner_send(method, params, False)

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_connection.py", line 54, in inner_send

result = next(iter(done)).result()

playwright._impl._api_types.Error: Target page, context or browser has been closed

2021-06-22 11:51:06 [asyncio] ERROR: Task exception was never retrieved

future: <Task finished name='Task-11640' coro=<Route.continue_() done, defined at /home/ubuntu/.local/lib/python3.8/site-packages/playwright/async_api/_generated.py:544> exception=Error('Target page, context or browser has been closed')>

Traceback (most recent call last):

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/async_api/_generated.py", line 582, in continue_

await self._async(

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_network.py", line 207, in continue_

await self._channel.send("continue", cast(Any, overrides))

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_connection.py", line 36, in send

return await self.inner_send(method, params, False)

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_connection.py", line 54, in inner_send

result = next(iter(done)).result()

playwright._impl._api_types.Error: Target page, context or browser has been closed

2021-06-22 11:51:06 [asyncio] ERROR: Task exception was never retrieved

future: <Task finished name='Task-11641' coro=<Route.continue_() done, defined at /home/ubuntu/.local/lib/python3.8/site-packages/playwright/async_api/_generated.py:544> exception=Error('Target page, context or browser has been closed')>

Traceback (most recent call last):

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/async_api/_generated.py", line 582, in continue_

await self._async(

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_network.py", line 207, in continue_

await self._channel.send("continue", cast(Any, overrides))

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_connection.py", line 36, in send

return await self.inner_send(method, params, False)

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_connection.py", line 54, in inner_send

result = next(iter(done)).result()

playwright._impl._api_types.Error: Target page, context or browser has been closed

2021-06-22 11:51:06 [asyncio] ERROR: Task exception was never retrieved

future: <Task finished name='Task-11652' coro=<Route.continue_() done, defined at /home/ubuntu/.local/lib/python3.8/site-packages/playwright/async_api/_generated.py:544> exception=Error('Target page, context or browser has been closed')>

Traceback (most recent call last):

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/async_api/_generated.py", line 582, in continue_

await self._async(

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_network.py", line 207, in continue_

await self._channel.send("continue", cast(Any, overrides))

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_connection.py", line 36, in send

return await self.inner_send(method, params, False)

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_connection.py", line 54, in inner_send

result = next(iter(done)).result()

playwright._impl._api_types.Error: Target page, context or browser has been closed

2021-06-22 11:51:06 [asyncio] ERROR: Task exception was never retrieved

future: <Task finished name='Task-11657' coro=<Route.continue_() done, defined at /home/ubuntu/.local/lib/python3.8/site-packages/playwright/async_api/_generated.py:544> exception=Error('Target page, context or browser has been closed')>

Traceback (most recent call last):

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/async_api/_generated.py", line 582, in continue_

await self._async(

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_network.py", line 207, in continue_

await self._channel.send("continue", cast(Any, overrides))

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_connection.py", line 36, in send

return await self.inner_send(method, params, False)

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_connection.py", line 54, in inner_send

result = next(iter(done)).result()

playwright._impl._api_types.Error: Target page, context or browser has been closed

2021-06-22 11:51:06 [asyncio] ERROR: Task exception was never retrieved

future: <Task finished name='Task-11669' coro=<Route.continue_() done, defined at /home/ubuntu/.local/lib/python3.8/site-packages/playwright/async_api/_generated.py:544> exception=Error('Target page, context or browser has been closed')>

Traceback (most recent call last):

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/async_api/_generated.py", line 582, in continue_

await self._async(

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_network.py", line 207, in continue_

await self._channel.send("continue", cast(Any, overrides))

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_connection.py", line 36, in send

return await self.inner_send(method, params, False)

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_connection.py", line 54, in inner_send

result = next(iter(done)).result()

playwright._impl._api_types.Error: Target page, context or browser has been closed

2021-06-22 11:51:06 [asyncio] ERROR: Task exception was never retrieved

future: <Task finished name='Task-11670' coro=<Route.continue_() done, defined at /home/ubuntu/.local/lib/python3.8/site-packages/playwright/async_api/_generated.py:544> exception=Error('Target page, context or browser has been closed')>

Traceback (most recent call last):

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/async_api/_generated.py", line 582, in continue_

await self._async(

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_network.py", line 207, in continue_

await self._channel.send("continue", cast(Any, overrides))

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_connection.py", line 36, in send

return await self.inner_send(method, params, False)

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_connection.py", line 54, in inner_send

result = next(iter(done)).result()

playwright._impl._api_types.Error: Target page, context or browser has been closed

2021-06-22 11:51:06 [asyncio] ERROR: Task exception was never retrieved

future: <Task finished name='Task-11671' coro=<Route.continue_() done, defined at /home/ubuntu/.local/lib/python3.8/site-packages/playwright/async_api/_generated.py:544> exception=Error('Target page, context or browser has been closed')>

Traceback (most recent call last):

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/async_api/_generated.py", line 582, in continue_

await self._async(

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_network.py", line 207, in continue_

await self._channel.send("continue", cast(Any, overrides))

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_connection.py", line 36, in send

return await self.inner_send(method, params, False)

File "/home/ubuntu/.local/lib/python3.8/site-packages/playwright/_impl/_connection.py", line 54, in inner_send

result = next(iter(done)).result()

playwright._impl._api_types.Error: Target page, context or browser has been closed

.....

My Scrapy settings look like:

CONCURRENT_REQUESTS = 30

...

# Playwright settings

PLAYWRIGHT_CONTEXT_ARGS = {'ignore_https_errors':True}

PLAYWRIGHT_DEFAULT_NAVIGATION_TIMEOUT = 30000

...

RETRY_ENABLED = True

RETRY_TIMES = 3

My env:

Ubuntu 20.04 and MacOS 11.2.3

Python 3.8.5

Scrapy 2.5.0

playwright 1.12.1

scrapy-playwright 0.0.3

I'd like to open the webpage with Playwright, but through one of the working proxies from a rotating proxy library. The main benefit of the rotating proxy lib is that non-working proxies get filtered out over time.

A really bad workaround, which doesn't handle non-working proxies, is to take a random proxy from a list and set it in the kwargs like:

...
"playwright_context_kwargs": {
    "proxy": {
        "server": get_random_proxy(),
        ...
    }
}

But is there any solution for using both libraries together?
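Short of integrating the two libraries, the random-proxy workaround can be extended so that failed proxies stop being handed out. The sketch below is a hand-rolled stand-in, not the rotating proxy library itself: the ProxyPool class, its mark_dead method, and the proxy addresses are all hypothetical, and it assumes something (for example an errback) reports failures back to the pool.

```python
import random
from typing import Iterable, Optional, Set


class ProxyPool:
    """Hand out random proxies and stop using ones reported as dead."""

    def __init__(self, proxies: Iterable[str]) -> None:
        self._alive: Set[str] = set(proxies)

    def get_random_proxy(self) -> Optional[str]:
        # sorted() turns the set into a sequence that random.choice accepts.
        return random.choice(sorted(self._alive)) if self._alive else None

    def mark_dead(self, proxy: str) -> None:
        # discard() is a no-op when the proxy was already removed.
        self._alive.discard(proxy)


pool = ProxyPool(["http://p1:8080", "http://p2:8080"])  # placeholder proxies
pool.mark_dead("http://p1:8080")  # e.g. called from an errback after a failure
print(pool.get_random_proxy())    # prints http://p2:8080
```

The value returned by pool.get_random_proxy() would take the place of get_random_proxy() in the "server" entry above.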

I am having a problem where the spider creates a lot of pages, which causes memory issues. It would be nice if we could control the maximum number of pages, so that a new _download_request waits before creating another one. This happens a lot when I start using the page from 'playwright_page' in a parse method, because that bypasses the Scrapy request workflow. Can this be done using signals?

Hello! Thank you for your hard work on this scrapy-plugin - it has been really valuable!

I am having some issues running scrapy-playwright in an AWS lambda docker container. Running chromium and playwright in lambda is a pretty well understood problem, but for some reason the combination of playwright + scrapy in a docker container does not seem to work well.

I am getting the following error:

playwright._impl._api_types.Error: Target page, context or browser has been closed

2021-10-14 22:24:57 [scrapy.core.scraper] ERROR: Error downloading <GET https://example.com>

I have recreated a simple example here: https://github.com/maxneuvians/scrapy-playwright-lambda-debug

In working on this example I have found that chromium crashes if I pass the '--single-process' flag. If I comment it out, scrapy runs fine. If you want to test it you can do the following:

docker build -t sp-test -f Dockerfile .

docker run -p 9000:8080 sp-test

curl -XPOST "http://localhost:9000/2015-03-31/functions/function/invocations" -d '{}'

The docker container will build it in a way that AWS lambda expects it to be invoked. If you post the curl you will see scrapy executing successfully. Uncomment the flag, re-build and run, and it throws the error.

Unfortunately, conventional wisdom on the internet seems to imply that '--single-process' is a mandatory flag for headless chromium on AWS Lambda (Looking at puppeteer and other implementations, ex: https://github.com/JupiterOne/playwright-aws-lambda/blob/main/src/chromium.ts#L63).

Let me know if you have any ideas that could help!

Hi, elacuesta,

I use your handler in my Scrapy project; it runs well and crawls the information I need. However, some errors occur before the items reach the pipeline. Here is an example:

2021-12-27 16:50:26 [asyncio] ERROR: Task exception was never retrieved

future: <Task finished name='Task-184' coro=<Route.continue_() done, defined at /home/ethanz/.local/lib/python3.8/site-packages/playwright/async_api/_generated.py:710> exception=Error('Target page, context or browser has been closed')>

Traceback (most recent call last):

File "/home/ethanz/.local/lib/python3.8/site-packages/playwright/async_api/_generated.py", line 748, in continue_

await self._async(

File "/home/ethanz/.local/lib/python3.8/site-packages/playwright/_impl/_network.py", line 239, in continue_

await self._channel.send("continue", cast(Any, overrides))

File "/home/ethanz/.local/lib/python3.8/site-packages/playwright/_impl/_connection.py", line 39, in send

return await self.inner_send(method, params, False)

File "/home/ethanz/.local/lib/python3.8/site-packages/playwright/_impl/_connection.py", line 63, in inner_send

result = next(iter(done)).result()

playwright._impl._api_types.Error: Target page, context or browser has been closed

Sometimes this error occurs 5 or 6 times per run, but I have also had runs with no errors at all. The only difference among these errors is the task number, e.g. Task-180, Task-181, Task-182, etc.

I guess the error is related to coroutines or asyncio, but I am not familiar with them. Do you know what is going on? Do I need to change any settings? Thanks! BTW, I am using a VM running Ubuntu 20.04 on Windows 10.

Regards,

Ethan
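For readers unfamiliar with the "Task exception was never retrieved" message: asyncio logs it when a task fails and nothing ever awaits the task or reads its exception. The sketch below reproduces the pattern with the standard library only. `continue_route` is a hypothetical stand-in for a call that fails because the page is already closed; it is not the plugin's code, and this is an illustration of the asyncio mechanism rather than a diagnosis of the report above.

```python
import asyncio


async def continue_route():
    # Hypothetical stand-in for a call that fails after the page was closed.
    raise RuntimeError("Target page, context or browser has been closed")


def collect_exception(task, sink):
    # Reading task.exception() marks the exception as retrieved,
    # so asyncio does not log "Task exception was never retrieved".
    if not task.cancelled() and task.exception() is not None:
        sink.append(repr(task.exception()))


async def main():
    seen = []
    task = asyncio.ensure_future(continue_route())
    task.add_done_callback(lambda t: collect_exception(t, seen))
    # Wait for the task to finish without re-raising its exception here.
    await asyncio.wait([task])
    return seen


if __name__ == "__main__":
    print(asyncio.run(main()))
```

Without the done callback (or an `await task` wrapped in try/except), the same failure would only surface as the log message shown in the report.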

I am running a scraper that opens a new page in Playwright for certain types of links, and I would like to throttle these requests, say by setting a maximum number of pages that can be open at any one time and queueing the rest. How should I go about that? Currently it seems to open too many instances and overwhelms the CPU. Thanks in advance.
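The idea of capping how many pages are open at once can be sketched with a plain `asyncio.Semaphore`. This is a generic, standard-library illustration, not scrapy-playwright's API: `open_page`, `crawl`, and `max_pages` are hypothetical names, and the sleep is a placeholder for real browser work. Inside Scrapy, lowering the `CONCURRENT_REQUESTS` setting is the usual first step to try.

```python
import asyncio


async def open_page(url, limit, stats):
    # Hypothetical stand-in for rendering one page in the browser.
    async with limit:  # waits here while `max_pages` pages are already open
        stats["open"] += 1
        stats["peak"] = max(stats["peak"], stats["open"])
        await asyncio.sleep(0.01)  # placeholder for the real page work
        stats["open"] -= 1
        return url


async def crawl(urls, max_pages=3):
    limit = asyncio.Semaphore(max_pages)
    stats = {"open": 0, "peak": 0}
    # All tasks are created up front; the semaphore acts as the queue.
    results = await asyncio.gather(*(open_page(u, limit, stats) for u in urls))
    return results, stats["peak"]


if __name__ == "__main__":
    urls = [f"https://example.com/{i}" for i in range(10)]
    pages, peak = asyncio.run(crawl(urls, max_pages=3))
    print(len(pages), "pages rendered, peak concurrency:", peak)
```

Requests beyond the limit simply wait at the `async with` line, which gives the queueing behaviour described in the question without a separate queue object.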

Hi elacuesta,

I am new to Playwright and Scrapy. I ran into a problem: after setting the configuration (download handler and Twisted reactor), my main code is just the 'Basic usage' example from Readme.md. When I run the code on Windows, it shows "TypeError: ProactorEventLoop is not supported, got: ". I think it is related to the Twisted part, but I don't know what exactly happened. Did I make any mistakes? Thanks!

Regards,

Ethan

Hello,

I found this example very useful for my case, but I am wondering if there is a way to use a session token (which we get from a GET request in start_requests) inside the parse function to make a POST request with headers and a body.

In my case the problem is that I have a for loop inside start_requests, and when I get the session storage token and start making another Request inside the parse function, memory usage increases hugely. If I close the context and page, some products are missing from the crawl.

Could you please give an example of how to use scrapy-playwright to make a GET request in start_requests and then make a POST request inside parse using the session token?

Thank you in advance.

Mostly to confirm that I'm out of options, other than migrating to Linux (or using WSL2).

Windows 10, Python 3.8.5, Scrapy 2.4.1, playwright-1.9.2, scrapy-playwright 0.0.3

TL;DR: AsyncioSelectorReactor is built on top of SelectorEventLoop and by design needs add_reader from it (called via the reactor's addReader), or maybe something else, so it won't work with ProactorEventLoop. But on Windows, subprocesses are supported only in ProactorEventLoop and are not implemented in SelectorEventLoop.

The reasons are mostly described here: https://docs.python.org/3/library/asyncio-platforms.html#asyncio-windows-subprocess
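To check which side of this conflict a given loop falls on, a small standard-library probe can test whether the loop implements add_reader(). This is a minimal sketch: `supports_add_reader` is a hypothetical helper name, and it checks only the add_reader half of the problem, not subprocess support.

```python
import asyncio
import socket


def supports_add_reader(loop):
    """Return True if `loop` implements add_reader()."""
    left, right = socket.socketpair()
    try:
        loop.add_reader(left.fileno(), lambda: None)
    except NotImplementedError:
        # Matches the NotImplementedError in the tracebacks below.
        return False
    else:
        loop.remove_reader(left.fileno())
        return True
    finally:
        left.close()
        right.close()


if __name__ == "__main__":
    loop = asyncio.new_event_loop()  # whatever the platform's default policy gives
    print(type(loop).__name__, "add_reader supported:", supports_add_reader(loop))
    loop.close()
```

On Windows with the default policy of recent Python versions the loop is a ProactorEventLoop, which is the case the tracebacks below show failing.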

With process = CrawlerProcess(get_project_settings()) in starter.py:

from scrapy.utils.reactor import install_reactor

install_reactor('twisted.internet.asyncioreactor.AsyncioSelectorReactor')

In settings.py:

DOWNLOAD_HANDLERS = {
    "http": "scrapy_playwright.handler.ScrapyPlaywrightDownloadHandler",
    "https": "scrapy_playwright.handler.ScrapyPlaywrightDownloadHandler",
}

TWISTED_REACTOR = "twisted.internet.asyncioreactor.AsyncioSelectorReactor"

For Twisted == 20.3.0:

starter.py", line 8, in <module>
    install_reactor('twisted.internet.asyncioreactor.AsyncioSelectorReactor')
  File "C:\Users\i\miniconda3\envs\yu\lib\site-packages\scrapy\utils\reactor.py", line 66, in install_reactor
    asyncioreactor.install(eventloop=event_loop)
  File "C:\Users\i\miniconda3\envs\yu\lib\site-packages\twisted\internet\asyncioreactor.py", line 320, in install
    reactor = AsyncioSelectorReactor(eventloop)
  File "C:\Users\i\miniconda3\envs\yu\lib\site-packages\twisted\internet\asyncioreactor.py", line 69, in __init__
    super().__init__()
  File "C:\Users\i\miniconda3\envs\yu\lib\site-packages\twisted\internet\base.py", line 571, in __init__
    self.installWaker()
  File "C:\Users\i\miniconda3\envs\yu\lib\site-packages\twisted\internet\posixbase.py", line 286, in installWaker
    self.addReader(self.waker)
  File "C:\Users\i\miniconda3\envs\yu\lib\site-packages\twisted\internet\asyncioreactor.py", line 151, in addReader
    self._asyncioEventloop.add_reader(fd, callWithLogger, reader,
  File "C:\Users\i\miniconda3\envs\yu\lib\asyncio\events.py", line 501, in add_reader
    raise NotImplementedError
NotImplementedError

For Twisted-21.2.0:

starter.py", line 8, in <module>
    install_reactor('twisted.internet.asyncioreactor.AsyncioSelectorReactor')
  File "C:\Users\i\miniconda3\envs\yu\lib\site-packages\scrapy\utils\reactor.py", line 66, in install_reactor
    asyncioreactor.install(eventloop=event_loop)
  File "C:\Users\i\miniconda3\envs\yu\lib\site-packages\twisted\internet\asyncioreactor.py", line 307, in install
    reactor = AsyncioSelectorReactor(eventloop)
  File "C:\Users\i\miniconda3\envs\yu\lib\site-packages\twisted\internet\asyncioreactor.py", line 60, in __init__
    raise TypeError(
TypeError: SelectorEventLoop required, instead got: <ProactorEventLoop running=False closed=False debug=False>

(I'm writing the things below just to make these errors easier to google, because of course these actions will not help):

Also, if we try to set, for CrawlerProcess in starter.py:

asyncio.set_event_loop_policy(asyncio.WindowsSelectorEventLoopPolicy()) before installing the reactor, or just set SelectorEventLoop here:

install_reactor('twisted.internet.asyncioreactor.AsyncioSelectorReactor', event_loop_path='asyncio.SelectorEventLoop') - we will get NotImplementedError.

The same happens even if we do not use a starter script and start the spider from the terminal with scrapy crawl spider_name, with

ASYNCIO_EVENT_LOOP = "asyncio.SelectorEventLoop" in settings.py:

future: <Task finished name='Task-4' coro=<Connection.run() done, defined at c:\users\i\miniconda3\envs\yu\lib\site-packages\playwright\_impl\_connection.py:163> exception=NotImplementedError()>
Traceback (most recent call last):
  File "c:\users\i\miniconda3\envs\yu\lib\site-packages\playwright\_impl\_connection.py", line 166, in run
    await self._transport.run()
  File "c:\users\i\miniconda3\envs\yu\lib\site-packages\playwright\_impl\_transport.py", line 60, in run
    proc = await asyncio.create_subprocess_exec(
  File "c:\users\i\miniconda3\envs\yu\lib\asyncio\subprocess.py", line 236, in create_subprocess_exec
    transport, protocol = await loop.subprocess_exec(
  File "c:\users\i\miniconda3\envs\yu\lib\asyncio\base_events.py", line 1630, in subprocess_exec
    transport = await self._make_subprocess_transport(
  File "c:\users\i\miniconda3\envs\yu\lib\asyncio\base_events.py", line 491, in _make_subprocess_transport
    raise NotImplementedError
NotImplementedError

I use Playwright + bs4 to scrape pages with lazy loading; using mouse.wheel and sleeping works for scraping the content. How do I use "mouse.wheel" in the scrapy-playwright plugin? I tried a page coroutine but it still does not work. For the content to be displayed, I need about 40 seconds before the engine parses it.

import scrapy


class AwesomeSpider(scrapy.Spider):
    name = "awesome"

    def start_requests(self):
        # GET request
        yield scrapy.Request("https://httpbin.org/get", meta={"playwright": True})
        # POST request
        yield scrapy.FormRequest(
            url="https://httpbin.org/post",
            formdata={"foo": "bar"},
            meta={"playwright": True},
        )

    def parse(self, response):
        # 'response' contains the page as seen by the browser
        yield {"url": response.url}
        # finished, loop back

Hey, I'm unfamiliar with how to loop back with this feature.

In your example, say, how would you loop back to def parse? I'm familiar with Scrapy's features, but the usual way is not working.

Hi, I noticed that Playwright offers two APIs - one synchronous and one asynchronous.

It seems the handler you wrote is tied to the asynchronous API.

For my project, I plan to write an equivalent handler that uses the synchronous API. Do you think writing this synchronous handler would be possible? (I plan to not use the AsyncioSelectorReactor reactor)

Thank you!

I'm trying to catch various exceptions (timeout, dns error etc) in middleware to retry the download (possibly in a new context) but it looks like the exception never gets there?

If I'm reading this right then the exception gets caught in the _download_request() function in handler.py, which in turn is called from start_crawler() in scrapy via the asyncioreactor. It looks like it never enters the regular scrapy error flow, or am I missing something?

I'm testing right now by telling it to index a URL that doesn't exist, which returns playwright._impl._api_types.Error: net::ERR_NAME_NOT_RESOLVED, but the error otherwise looks the same as a timeout. Maybe it doesn't enter the regular flow just because it is the first URL it tries?

The error occurs at arbitrary times: maybe after viewing 500 pages, or on the very first one.

Example to reproduce

import re

from scrapy import Spider, Request
from scrapy.crawler import CrawlerProcess
from scrapy.selector import Selector
from scrapy.utils.project import get_project_settings


class Spider1(Spider):
    name = "spider1"

    custom_settings = {
        "FEEDS": {
            "members.json": {
                "format": "jsonlines",
                "encoding": "utf-8",
                "store_empty": False,
            },
        }
    }

    URL_MEMBERS_POSTFIX = "?act=members&offset=0"