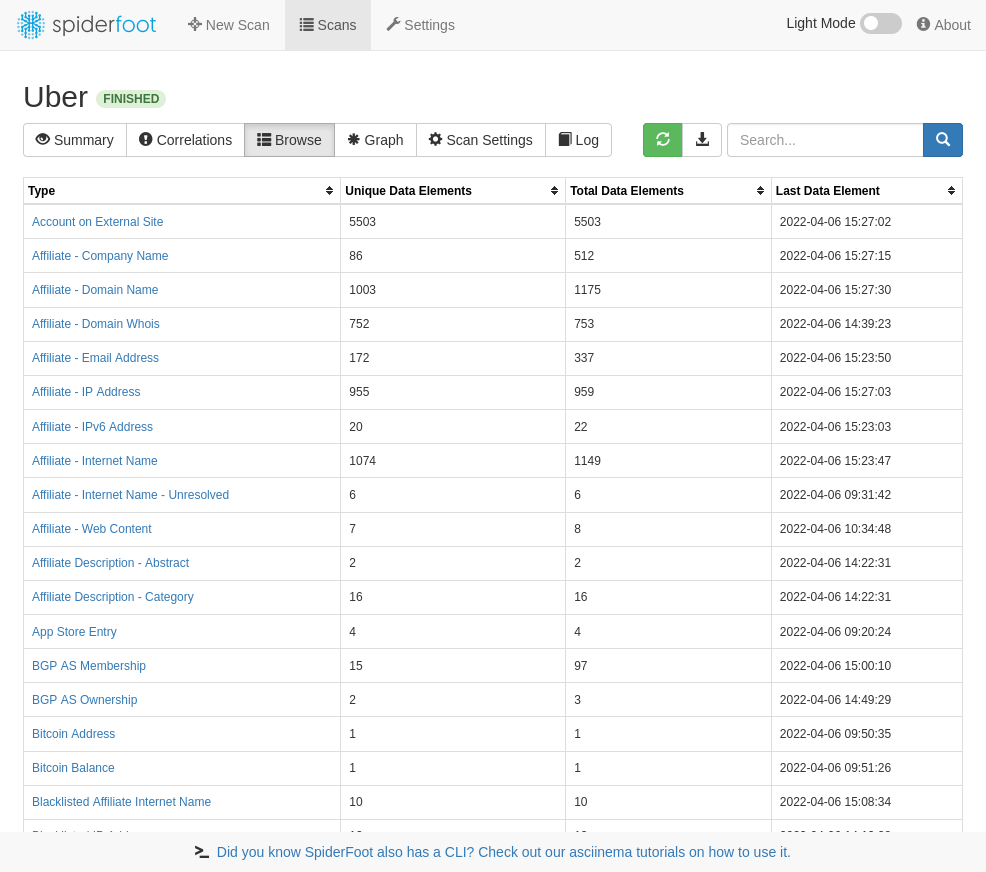

SpiderFoot is an open source intelligence (OSINT) automation tool. It integrates with just about every data source available and utilises a range of methods for data analysis, making that data easy to navigate.

SpiderFoot has an embedded web-server for providing a clean and intuitive web-based interface but can also be used completely via the command-line. It's written in Python 3 and MIT-licensed.

- Web based UI or CLI

- Over 200 modules (see below)

- Python 3.7+

- YAML-configurable correlation engine with 37 pre-defined rules

- CSV/JSON/GEXF export

- API key export/import

- SQLite back-end for custom querying

- Highly configurable

- Fully documented

- Visualisations

- TOR integration for dark web searching

- Dockerfile for Docker-based deployments

- Can call other tools like DNSTwist, Whatweb, Nmap and CMSeeK

- Actively developed since 2012!
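Because scan data lives in SQLite, it can be explored with Python's standard `sqlite3` module. The sketch below makes no assumption about SpiderFoot's schema: it only lists the tables and columns it finds, so you can inspect the layout before writing queries. The function name `describe_database` is hypothetical, and the path to the database file is something you supply.

```python
import sqlite3
from pathlib import Path
from typing import Dict, List


def describe_database(path: str) -> Dict[str, List[str]]:
    """Return {table_name: [column names]} for an SQLite file.

    The file is opened read-only so that scan data cannot be modified
    by accident.
    """
    uri = Path(path).resolve().as_uri() + "?mode=ro"
    conn = sqlite3.connect(uri, uri=True)
    try:
        tables = [
            row[0]
            for row in conn.execute(
                "SELECT name FROM sqlite_master "
                "WHERE type = 'table' ORDER BY name"
            )
        ]
        # PRAGMA table_info yields one row per column; index 1 is the name.
        return {
            table: [col[1] for col in conn.execute(f'PRAGMA table_info("{table}")')]
            for table in tables
        }
    finally:
        conn.close()


# Example usage (the file name is an assumption; use your own database path):
#   for table, columns in describe_database("spiderfoot.db").items():
#       print(table, columns)
```

Opening the file read-only is a deliberate choice here: ad hoc queries then cannot interfere with a database that SpiderFoot may still be writing to.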

Need more from SpiderFoot? Check out SpiderFoot HX for:

- 100% Cloud-based and managed for you

- Attack Surface Monitoring with change notifications by email, REST and Slack

- Multiple targets per scan

- Multi-user collaboration

- Authenticated and 2FA

- Investigations

- Customer support

- Third party tools pre-installed & configured

- Drive it with a fully RESTful API

- TOR integration built-in

- Screenshotting

- Bring your own Python SpiderFoot modules

- Feed scan data to Splunk, ElasticSearch and REST endpoints

See the full set of differences between SpiderFoot HX and the open source version here.

SpiderFoot can be used offensively (e.g. in a red team exercise or penetration test) for reconnaissance of your target or defensively to gather information about what you or your organisation might have exposed over the Internet.

You can target the following entities in a SpiderFoot scan:

- IP address

- Domain/sub-domain name

- Hostname

- Network subnet (CIDR)

- ASN

- E-mail address

- Phone number

- Username

- Person's name

- Bitcoin address
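To make the entity types above concrete, here is a rough sketch of how a seed string could be sorted into one of those categories. It is an illustration only, not SpiderFoot's own detection logic: the function name `guess_target_type` and every regular expression in it are assumptions, and the patterns are deliberately simplistic.

```python
import ipaddress
import re

# Simplified, illustrative patterns (assumptions, not SpiderFoot's rules).
ASN_RE = re.compile(r"^AS\d+$", re.IGNORECASE)
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
PHONE_RE = re.compile(r"^\+\d{7,15}$")
BITCOIN_RE = re.compile(r"^[13][1-9A-HJ-NP-Za-km-z]{25,34}$")
DOMAIN_RE = re.compile(r"^(?:[a-z0-9-]+\.)+[a-z]{2,}$", re.IGNORECASE)


def guess_target_type(target: str) -> str:
    """Return a best-guess label for a scan target string."""
    target = target.strip()

    # A slash suggests CIDR notation; let the ipaddress module validate it.
    if "/" in target:
        try:
            ipaddress.ip_network(target, strict=False)
            return "Network subnet (CIDR)"
        except ValueError:
            pass

    try:
        ipaddress.ip_address(target)
        return "IP address"
    except ValueError:
        pass

    if ASN_RE.match(target):
        return "ASN"
    if EMAIL_RE.match(target):
        return "E-mail address"
    if PHONE_RE.match(target):
        return "Phone number"
    if BITCOIN_RE.match(target):
        return "Bitcoin address"
    if DOMAIN_RE.match(target):
        # Hostnames and domain names look the same syntactically.
        return "Domain name or hostname"
    if " " in target:
        return "Person's name"
    return "Username"
```

The checks run from the strictest format to the loosest, so an ambiguous string falls through to "Username" only when nothing more specific matches.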

SpiderFoot's 200+ modules feed each other in a publisher/subscriber model to maximise data extraction, enabling things like:

- Host/sub-domain/TLD enumeration/extraction

- Email address, phone number and human name extraction

- Bitcoin and Ethereum address extraction

- Check for susceptibility to sub-domain hijacking

- DNS zone transfers

- Threat intelligence and Blacklist queries

- API integration with SHODAN, HaveIBeenPwned, GreyNoise, AlienVault, SecurityTrails, etc.

- Social media account enumeration

- S3/Azure/DigitalOcean bucket enumeration/scraping

- IP geo-location

- Web scraping, web content analysis

- Image, document and binary file metadata analysis

- Dark web searches

- Port scanning and banner grabbing

- Data breach searches

- So much more...
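The publisher/subscriber idea behind the modules can be sketched in a few lines of Python. This is a toy model under stated assumptions, not SpiderFoot's module API: the class names, the event kinds (`DOMAIN`, `IP`, `COUNTRY`) and the lookup tables are all hypothetical, and no network access takes place.

```python
from collections import deque
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Event:
    kind: str   # what sort of data this is, e.g. "DOMAIN"
    data: str   # the data itself


class Module:
    """Base class: a module subscribes to event kinds and may publish more."""
    watches: frozenset = frozenset()

    def handle(self, event: Event) -> Iterable[Event]:
        return []


class ResolverStub(Module):
    """Pretends to resolve domains using a fixed table."""
    watches = frozenset({"DOMAIN"})
    TABLE = {"example.com": "192.0.2.10"}

    def handle(self, event: Event) -> Iterable[Event]:
        ip = self.TABLE.get(event.data)
        return [Event("IP", ip)] if ip else []


class GeoStub(Module):
    """Pretends to geo-locate IP addresses using a fixed table."""
    watches = frozenset({"IP"})
    TABLE = {"192.0.2.10": "Exampleland"}

    def handle(self, event: Event) -> Iterable[Event]:
        country = self.TABLE.get(event.data)
        return [Event("COUNTRY", country)] if country else []


def run_scan(seed: Event, modules: List[Module]) -> List[Event]:
    """Deliver each event to every subscribed module until no new events appear."""
    queue = deque([seed])
    seen = set()
    ordered: List[Event] = []
    while queue:
        event = queue.popleft()
        if event in seen:
            continue  # never process the same event twice
        seen.add(event)
        ordered.append(event)
        for module in modules:
            if event.kind in module.watches:
                queue.extend(module.handle(event))
    return ordered


events = run_scan(Event("DOMAIN", "example.com"), [GeoStub(), ResolverStub()])
for event in events:
    print(event.kind, event.data)
```

Note that the order in which modules are registered does not matter: `GeoStub` is listed first but only runs once `ResolverStub` has published an `IP` event, which is the property that lets many modules chain together.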

To install and run SpiderFoot, you need at least Python 3.7 and a number of Python libraries which you can install with pip. We recommend you install a packaged release since master will often have bleeding edge features and modules that aren't fully tested.

Stable build (packaged release):

```
wget https://github.com/smicallef/spiderfoot/archive/v4.0.tar.gz
tar zxvf v4.0.tar.gz
cd spiderfoot-4.0
pip3 install -r requirements.txt
python3 ./sf.py -l 127.0.0.1:5001
```

Development build (cloning the git master branch):

```
git clone https://github.com/smicallef/spiderfoot.git
cd spiderfoot
pip3 install -r requirements.txt
python3 ./sf.py -l 127.0.0.1:5001
```
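Both command sequences end by starting the web server on `127.0.0.1:5001`. If the page does not load in your browser, a quick way to tell whether anything is listening on that address is a plain TCP connection attempt. The helper below is a generic sketch using only the standard library; `is_listening` is a hypothetical name and is not part of SpiderFoot.

```python
import socket


def is_listening(host: str = "127.0.0.1", port: int = 5001, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts and unreachable hosts.
        return False


# Example usage:
#   print(is_listening())  # checks 127.0.0.1:5001, the address passed to -l
```

A successful connection only shows that some process accepts connections on that port; it does not confirm that the process is SpiderFoot.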

Check out the documentation and our asciinema videos for more tutorials.

Whether you're a contributor, user or just curious about SpiderFoot and OSINT in general, we'd love to have you join our community! SpiderFoot now has a Discord server for seeking help from the community, requesting features or just general OSINT chit-chat.

We have a comprehensive write-up and reference of the correlation rule-set introduced in SpiderFoot 4.0 here.

Also take a look at the template.yaml file for a walk-through. The existing 37 rules are also quite readable and make good starting points for additional rules.

SpiderFoot has over 200 modules, most of which don't require API keys, and many of those that do require API keys have a free tier.

| Name | Description | Type |

|---|---|---|

| AbstractAPI | Look up domain, phone and IP address information from AbstractAPI. | Tiered API |

| abuse.ch | Check if a host/domain, IP address or netblock is malicious according to Abuse.ch. | Free API |

| AbuseIPDB | Check if an IP address is malicious according to AbuseIPDB.com blacklist. | Tiered API |

| Abusix Mail Intelligence | Check if a netblock or IP address is in the Abusix Mail Intelligence blacklist. | Tiered API |

| Account Finder | Look for possible associated accounts on over 500 social and other websites such as Instagram, Reddit, etc. | Internal |

| AdBlock Check | Check if linked pages would be blocked by AdBlock Plus. | Tiered API |

| AdGuard DNS | Check if a host would be blocked by AdGuard DNS. | Free API |

| Ahmia | Search Tor 'Ahmia' search engine for mentions of the target. | Free API |

| AlienVault IP Reputation | Check if an IP or netblock is malicious according to the AlienVault IP Reputation database. | Free API |

| AlienVault OTX | Obtain information from AlienVault Open Threat Exchange (OTX) | Tiered API |

| Amazon S3 Bucket Finder | Search for potential Amazon S3 buckets associated with the target and attempt to list their contents. | Free API |

| Apple iTunes | Search Apple iTunes for mobile apps. | Free API |

| Archive.org | Identifies historic versions of interesting files/pages from the Wayback Machine. | Free API |

| ARIN | Queries ARIN registry for contact information. | Free API |

| Azure Blob Finder | Search for potential Azure blobs associated with the target and attempt to list their contents. | Free API |

| Base64 Decoder | Identify Base64-encoded strings in URLs, often revealing interesting hidden information. | Internal |

| BGPView | Obtain network information from BGPView API. | Free API |

| Binary String Extractor | Attempt to identify strings in binary content. | Internal |

| BinaryEdge | Obtain information from BinaryEdge.io Internet scanning systems, including breaches, vulnerabilities, torrents and passive DNS. | Tiered API |

| Bing (Shared IPs) | Search Bing for hosts sharing the same IP. | Tiered API |

| Bing | Obtain information from Bing to identify sub-domains and links. | Tiered API |

| Bitcoin Finder | Identify bitcoin addresses in scraped webpages. | Internal |

| Bitcoin Who's Who | Check for Bitcoin addresses against the Bitcoin Who's Who database of suspect/malicious addresses. | Tiered API |

| BitcoinAbuse | Check Bitcoin addresses against the bitcoinabuse.com database of suspect/malicious addresses. | Free API |

| Blockchain | Queries blockchain.info to find the balance of identified bitcoin wallet addresses. | Free API |

| blocklist.de | Check if a netblock or IP is malicious according to blocklist.de. | Free API |

| BotScout | Searches BotScout.com's database of spam-bot IP addresses and e-mail addresses. | Tiered API |

| botvrij.eu | Check if a domain is malicious according to botvrij.eu. | Free API |

| BuiltWith | Query BuiltWith.com's Domain API for information about your target's web technology stack, e-mail addresses and more. | Tiered API |

| C99 | Queries the C99 API which offers various data (geo location, proxy detection, phone lookup, etc). | Commercial API |

| CallerName | Look up US phone number location and reputation information. | Free API |

| Censys | Obtain host information from Censys.io. | Tiered API |

| Certificate Transparency | Gather hostnames from historical certificates in crt.sh. | Free API |

| CertSpotter | Gather information about SSL certificates from SSLMate CertSpotter API. | Tiered API |

| CINS Army List | Check if a netblock or IP address is malicious according to Collective Intelligence Network Security (CINS) Army list. | Free API |

| CIRCL.LU | Obtain information from CIRCL.LU's Passive DNS and Passive SSL databases. | Free API |

| CleanBrowsing.org | Check if a host would be blocked by CleanBrowsing.org DNS content filters. | Free API |

| CleanTalk Spam List | Check if a netblock or IP address is on CleanTalk.org's spam IP list. | Free API |

| Clearbit | Check for names, addresses, domains and more based on lookups of e-mail addresses on clearbit.com. | Tiered API |

| CloudFlare DNS | Check if a host would be blocked by CloudFlare DNS. | Free API |

| CoinBlocker Lists | Check if a domain appears on CoinBlocker lists. | Free API |

| CommonCrawl | Searches for URLs found through CommonCrawl.org. | Free API |

| Comodo Secure DNS | Check if a host would be blocked by Comodo Secure DNS. | Tiered API |

| Company Name Extractor | Identify company names in any obtained data. | Internal |

| Cookie Extractor | Extract Cookies from HTTP headers. | Internal |

| Country Name Extractor | Identify country names in any obtained data. | Internal |

| Credit Card Number Extractor | Identify Credit Card Numbers in any data | Internal |

| Crobat API | Search Crobat API for subdomains. | Free API |

| Cross-Referencer | Identify whether other domains are associated ('Affiliates') of the target by looking for links back to the target site(s). | Internal |

| CRXcavator | Search CRXcavator for Chrome extensions. | Free API |

| Custom Threat Feed | Check if a host/domain, netblock, ASN or IP is malicious according to your custom feed. | Internal |

| CyberCrime-Tracker.net | Check if a host/domain or IP address is malicious according to CyberCrime-Tracker.net. | Free API |

| Debounce | Check whether an email is disposable | Free API |

| Dehashed | Gather breach data from Dehashed API. | Commercial API |

| Digital Ocean Space Finder | Search for potential Digital Ocean Spaces associated with the target and attempt to list their contents. | Free API |

| DNS Brute-forcer | Attempts to identify hostnames through brute-forcing common names and iterations. | Internal |

| DNS Common SRV | Attempts to identify hostnames through brute-forcing common DNS SRV records. | Internal |

| DNS for Family | Check if a host would be blocked by DNS for Family. | Free API |

| DNS Look-aside | Attempt to reverse-resolve the IP addresses next to your target to see if they are related. | Internal |

| DNS Raw Records | Retrieves raw DNS records such as MX, TXT and others. | Internal |

| DNS Resolver | Resolves hosts and IP addresses identified, also extracted from raw content. | Internal |

| DNS Zone Transfer | Attempts to perform a full DNS zone transfer. | Internal |

| DNSDB | Query FarSight's DNSDB for historical and passive DNS data. | Tiered API |

| DNSDumpster | Passive subdomain enumeration using HackerTarget's DNSDumpster | Free API |

| DNSGrep | Obtain Passive DNS information from Rapid7 Sonar Project using DNSGrep API. | Free API |

| DroneBL | Query the DroneBL database for open relays, open proxies, vulnerable servers, etc. | Free API |

| DuckDuckGo | Query DuckDuckGo's API for descriptive information about your target. | Free API |

| E-Mail Address Extractor | Identify e-mail addresses in any obtained data. | Internal |

| EmailCrawlr | Search EmailCrawlr for email addresses and phone numbers associated with a domain. | Tiered API |

| EmailFormat | Look up e-mail addresses on email-format.com. | Free API |

| EmailRep | Search EmailRep.io for email address reputation. | Tiered API |

| Emerging Threats | Check if a netblock or IP address is malicious according to EmergingThreats.net. | Free API |

| Error String Extractor | Identify common error messages in content like SQL errors, etc. | Internal |

| Ethereum Address Extractor | Identify ethereum addresses in scraped webpages. | Internal |

| Etherscan | Queries etherscan.io to find the balance of identified ethereum wallet addresses. | Free API |

| File Metadata Extractor | Extracts metadata from documents and images. | Internal |

| Flickr | Search Flickr for domains, URLs and emails related to the specified domain. | Free API |

| Focsec | Look up IP address information from Focsec. | Tiered API |

| FortiGuard Antispam | Check if an IP address is malicious according to FortiGuard Antispam. | Free API |

| Fraudguard | Obtain threat information from Fraudguard.io | Tiered API |

| F-Secure Riddler.io | Obtain network information from F-Secure Riddler.io API. | Commercial API |

| FullContact | Gather domain and e-mail information from FullContact.com API. | Tiered API |

| FullHunt | Identify domain attack surface using FullHunt API. | Tiered API |

| Github | Identify associated public code repositories on Github. | Free API |

| GLEIF | Look up company information from Global Legal Entity Identifier Foundation (GLEIF). | Tiered API |

| Google Maps | Identifies potential physical addresses and latitude/longitude coordinates. | Tiered API |

| Google Object Storage Finder | Search for potential Google Object Storage buckets associated with the target and attempt to list their contents. | Free API |

| Google SafeBrowsing | Check if the URL is included on any of the Safe Browsing lists. | Free API |

| Google | Obtain information from the Google Custom Search API to identify sub-domains and links. | Tiered API |

| Gravatar | Retrieve user information from Gravatar API. | Free API |

| Grayhat Warfare | Find bucket names matching the keyword extracted from a domain from Grayhat API. | Tiered API |

| Greensnow | Check if a netblock or IP address is malicious according to greensnow.co. | Free API |

| grep.app | Search grep.app API for links and emails related to the specified domain. | Free API |

| GreyNoise Community | Obtain IP enrichment data from GreyNoise Community API | Tiered API |

| GreyNoise | Obtain IP enrichment data from GreyNoise | Tiered API |

| HackerOne (Unofficial) | Check external vulnerability scanning/reporting service h1.nobbd.de to see if the target is listed. | Free API |

| HackerTarget | Search HackerTarget.com for hosts sharing the same IP. | Free API |

| Hash Extractor | Identify MD5 and SHA hashes in web content, files and more. | Internal |

| HaveIBeenPwned | Check HaveIBeenPwned.com for hacked e-mail addresses identified in breaches. | Commercial API |

| Hosting Provider Identifier | Find out if any IP addresses identified fall within known 3rd party hosting ranges, e.g. Amazon, Azure, etc. | Internal |

| Host.io | Obtain information about domain names from host.io. | Tiered API |

| Human Name Extractor | Attempt to identify human names in fetched content. | Internal |

| Hunter.io | Check for e-mail addresses and names on hunter.io. | Tiered API |

| Hybrid Analysis | Search Hybrid Analysis for domains and URLs related to the target. | Free API |

| IBAN Number Extractor | Identify International Bank Account Numbers (IBANs) in any data. | Internal |

| Iknowwhatyoudownload.com | Check iknowwhatyoudownload.com for IP addresses that have been using torrents. | Tiered API |

| IntelligenceX | Obtain information from IntelligenceX about identified IP addresses, domains, e-mail addresses and phone numbers. | Tiered API |

| Interesting File Finder | Identifies potential files of interest, e.g. office documents, zip files. | Internal |

| Internet Storm Center | Check if an IP address is malicious according to SANS ISC. | Free API |

| ipapi.co | Queries ipapi.co to identify geolocation of IP Addresses using ipapi.co API | Tiered API |

| ipapi.com | Queries ipapi.com to identify geolocation of IP Addresses using ipapi.com API | Tiered API |

| IPInfo.io | Identifies the physical location of IP addresses identified using ipinfo.io. | Tiered API |

| IPQualityScore | Determine if target is malicious using IPQualityScore API | Tiered API |

| ipregistry | Query the ipregistry.co database for reputation and geo-location. | Tiered API |

| ipstack | Identifies the physical location of IP addresses identified using ipstack.com. | Tiered API |

| JsonWHOIS.com | Search JsonWHOIS.com for WHOIS records associated with a domain. | Tiered API |

| Junk File Finder | Looks for old/temporary and other similar files. | Internal |

| Keybase | Obtain additional information about domain names and identified usernames. | Free API |

| Koodous | Search Koodous for mobile apps. | Tiered API |

| LeakIX | Search LeakIX for host data leaks, open ports, software and geoip. | Free API |

| Leak-Lookup | Searches Leak-Lookup.com's database of breaches. | Free API |

| Maltiverse | Obtain information about any malicious activities involving IP addresses | Free API |

| MalwarePatrol | Searches malwarepatrol.net's database of malicious URLs/IPs. | Tiered API |

| MetaDefender | Search MetaDefender API for IP address and domain IP reputation. | Tiered API |

| Mnemonic PassiveDNS | Obtain Passive DNS information from PassiveDNS.mnemonic.no. | Free API |

| multiproxy.org Open Proxies | Check if an IP address is an open proxy according to multiproxy.org open proxy list. | Free API |

| MySpace | Gather username and location from MySpace.com profiles. | Free API |

| NameAPI | Check whether an email is disposable | Tiered API |

| NetworksDB | Search NetworksDB.io API for IP address and domain information. | Tiered API |

| NeutrinoAPI | Search NeutrinoAPI for phone location information, IP address information, and host reputation. | Tiered API |

| numverify | Look up phone number location and carrier information from numverify.com. | Tiered API |

| Onion.link | Search Tor 'Onion City' search engine for mentions of the target domain using Google Custom Search. | Free API |

| Onionsearchengine.com | Search Tor onionsearchengine.com for mentions of the target domain. | Free API |

| Onyphe | Check Onyphe data (threat list, geo-location, pastries, vulnerabilities) about a given IP. | Tiered API |

| Open Bug Bounty | Check external vulnerability scanning/reporting service openbugbounty.org to see if the target is listed. | Free API |

| OpenCorporates | Look up company information from OpenCorporates. | Tiered API |

| OpenDNS | Check if a host would be blocked by OpenDNS. | Free API |

| OpenNIC DNS | Resolves host names in the OpenNIC alternative DNS system. | Free API |

| OpenPhish | Check if a host/domain is malicious according to OpenPhish.com. | Free API |

| OpenStreetMap | Retrieves latitude/longitude coordinates for physical addresses from OpenStreetMap API. | Free API |

| Page Information | Obtain information about web pages (do they take passwords, do they contain forms, etc.) | Internal |

| PasteBin | PasteBin search (via Google Search API) to identify related content. | Tiered API |

| PGP Key Servers | Look up domains and e-mail addresses in PGP public key servers. | Internal |

| PhishStats | Check if a netblock or IP address is malicious according to PhishStats. | Free API |

| PhishTank | Check if a host/domain is malicious according to PhishTank. | Free API |

| Phone Number Extractor | Identify phone numbers in scraped webpages. | Internal |

| Port Scanner - TCP | Scans for commonly open TCP ports on Internet-facing systems. | Internal |

| Project Honey Pot | Query the Project Honey Pot database for IP addresses. | Free API |

| ProjectDiscovery Chaos | Search for hosts/subdomains using chaos.projectdiscovery.io | Commercial API |

| Psbdmp | Check psbdmp.cc (PasteBin Dump) for potentially hacked e-mails and domains. | Free API |

| Pulsedive | Obtain information from Pulsedive's API. | Tiered API |

| PunkSpider | Check the QOMPLX punkspider.io service to see if the target is listed as vulnerable. | Free API |

| Quad9 | Check if a host would be blocked by Quad9 DNS. | Free API |

| ReverseWhois | Reverse Whois lookups using reversewhois.io. | Free API |

| RIPE | Queries the RIPE registry (includes ARIN data) to identify netblocks and other info. | Free API |

| RiskIQ | Obtain information from RiskIQ's (formerly PassiveTotal) Passive DNS and Passive SSL databases. | Tiered API |

| Robtex | Search Robtex.com for hosts sharing the same IP. | Free API |

| searchcode | Search searchcode for code repositories mentioning the target domain. | Free API |

| SecurityTrails | Obtain Passive DNS and other information from SecurityTrails | Tiered API |

| Seon | Queries seon.io to gather intelligence about IP Addresses, email addresses, and phone numbers | Commercial API |

| SHODAN | Obtain information from SHODAN about identified IP addresses. | Tiered API |

| Similar Domain Finder | Search various sources to identify similar looking domain names, for instance squatted domains. | Internal |

| Skymem | Look up e-mail addresses on Skymem. | Free API |

| SlideShare | Gather name and location from SlideShare profiles. | Free API |

| Snov | Gather available email IDs from identified domains | Tiered API |

| Social Links | Queries SocialLinks.io to gather intelligence from social media platforms and dark web. | Commercial API |

| Social Media Profile Finder | Tries to discover the social media profiles for human names identified. | Tiered API |

| Social Network Identifier | Identify presence on social media networks such as LinkedIn, Twitter and others. | Internal |

| SORBS | Query the SORBS database for open relays, open proxies, vulnerable servers, etc. | Free API |

| SpamCop | Check if a netblock or IP address is in the SpamCop database. | Free API |

| Spamhaus Zen | Check if a netblock or IP address is in the Spamhaus Zen database. | Free API |

| spur.us | Obtain information about any malicious activities involving IP addresses found | Commercial API |

| SpyOnWeb | Search SpyOnWeb for hosts sharing the same IP address, Google Analytics code, or Google Adsense code. | Tiered API |

| SSL Certificate Analyzer | Gather information about SSL certificates used by the target's HTTPS sites. | Internal |

| StackOverflow | Search StackOverflow for any mentions of a target domain. Returns potentially related information. | Tiered API |

| Steven Black Hosts | Check if a domain is malicious (malware or adware) according to Steven Black Hosts list. | Free API |

| Strange Header Identifier | Obtain non-standard HTTP headers returned by web servers. | Internal |

| Subdomain Takeover Checker | Check if affiliated subdomains are vulnerable to takeover. | Internal |

| Sublist3r PassiveDNS | Passive subdomain enumeration using Sublist3r's API | Free API |

| SURBL | Check if a netblock, IP address or domain is in the SURBL blacklist. | Free API |

| Talos Intelligence | Check if a netblock or IP address is malicious according to TalosIntelligence. | Free API |

| TextMagic | Obtain phone number type from TextMagic API | Tiered API |

| Threat Jammer | Check if an IP address is malicious according to ThreatJammer.com | Tiered API |

| ThreatCrowd | Obtain information from ThreatCrowd about identified IP addresses, domains and e-mail addresses. | Free API |

| ThreatFox | Check if an IP address is malicious according to ThreatFox. | Free API |

| ThreatMiner | Obtain information from ThreatMiner's database for passive DNS and threat intelligence. | Free API |

| TLD Searcher | Search all Internet TLDs for domains with the same name as the target (this can be very slow.) | Internal |

| Tool - CMSeeK | Identify what Content Management System (CMS) might be used. | Tool |

| Tool - DNSTwist | Identify bit-squatting, typo and other similar domains to the target using a local DNSTwist installation. | Tool |

| Tool - nbtscan | Scans for open NETBIOS nameservers on your target's network. | Tool |

| Tool - Nmap | Identify what Operating System might be used. | Tool |

| Tool - Nuclei | Fast and customisable vulnerability scanner. | Tool |

| Tool - onesixtyone | Fast scanner to find publicly exposed SNMP services. | Tool |

| Tool - Retire.js | Scanner detecting the use of JavaScript libraries with known vulnerabilities | Tool |

| Tool - snallygaster | Finds file leaks and other security problems on HTTP servers. | Tool |

| Tool - testssl.sh | Identify various TLS/SSL weaknesses, including Heartbleed, CRIME and ROBOT. | Tool |

| Tool - TruffleHog | Searches through git repositories for high entropy strings and secrets, digging deep into commit history. | Tool |

| Tool - WAFW00F | Identify what web application firewall (WAF) is in use on the specified website. | Tool |

| Tool - Wappalyzer | Wappalyzer identifies technologies on websites. | Tool |

| Tool - WhatWeb | Identify what software is in use on the specified website. | Tool |

| TOR Exit Nodes | Check if an IP address or netblock appears on the Tor Metrics exit node list. | Free API |

| TORCH | Search Tor 'TORCH' search engine for mentions of the target domain. | Free API |

| Trashpanda | Queries Trashpanda to gather intelligence about mentions of target in pastesites | Tiered API |

| Trumail | Check whether an email is disposable | Free API |

| Twilio | Obtain information from Twilio about phone numbers. Ensure you have the Caller Name add-on installed in Twilio. | Tiered API |

| Twitter | Gather name and location from Twitter profiles. | Free API |

| UCEPROTECT | Check if a netblock or IP address is in the UCEPROTECT database. | Free API |

| URLScan.io | Search URLScan.io cache for domain information. | Free API |

| Venmo | Gather user information from Venmo API. | Free API |

| ViewDNS.info | Identify co-hosted websites and perform reverse Whois lookups using ViewDNS.info. | Tiered API |

| VirusTotal | Obtain information from VirusTotal about identified IP addresses. | Tiered API |

| VoIP Blacklist (VoIPBL) | Check if an IP address or netblock is malicious according to VoIP Blacklist (VoIPBL). | Free API |

| VXVault.net | Check if a domain or IP address is malicious according to VXVault.net. | Free API |

| Web Analytics Extractor | Identify web analytics IDs in scraped webpages and DNS TXT records. | Internal |

| Web Framework Identifier | Identify the usage of popular web frameworks like jQuery, YUI and others. | Internal |

| Web Server Identifier | Obtain web server banners to identify versions of web servers being used. | Internal |

| Web Spider | Spidering of web-pages to extract content for searching. | Internal |

| WhatCMS | Check web technology using WhatCMS.org API. | Tiered API |

| Whoisology | Reverse Whois lookups using Whoisology.com. | Commercial API |

| Whois | Perform a WHOIS look-up on domain names and owned netblocks. | Internal |

| Whoxy | Reverse Whois lookups using Whoxy.com. | Commercial API |

| WiGLE | Query WiGLE to identify nearby WiFi access points. | Free API |

| Wikileaks | Search Wikileaks for mentions of domain names and e-mail addresses. | Free API |

| Wikipedia Edits | Identify edits to Wikipedia articles made from a given IP address or username. | Free API |

| XForce Exchange | Obtain IP reputation and passive DNS information from IBM X-Force Exchange. | Tiered API |

| Yandex DNS | Check if a host would be blocked by Yandex DNS. | Free API |

| Zetalytics | Query the Zetalytics database for hosts on your target domain(s). | Tiered API |

| ZoneFile.io | Search ZoneFiles.io Domain query API for domain information. | Tiered API |

| Zone-H Defacement Check | Check if a hostname/domain appears on the zone-h.org 'special defacements' RSS feed. | Free API |

Read more at the project website, including more complete documentation, blog posts with tutorials/guides, plus information about SpiderFoot HX.

Latest updates announced on Twitter.