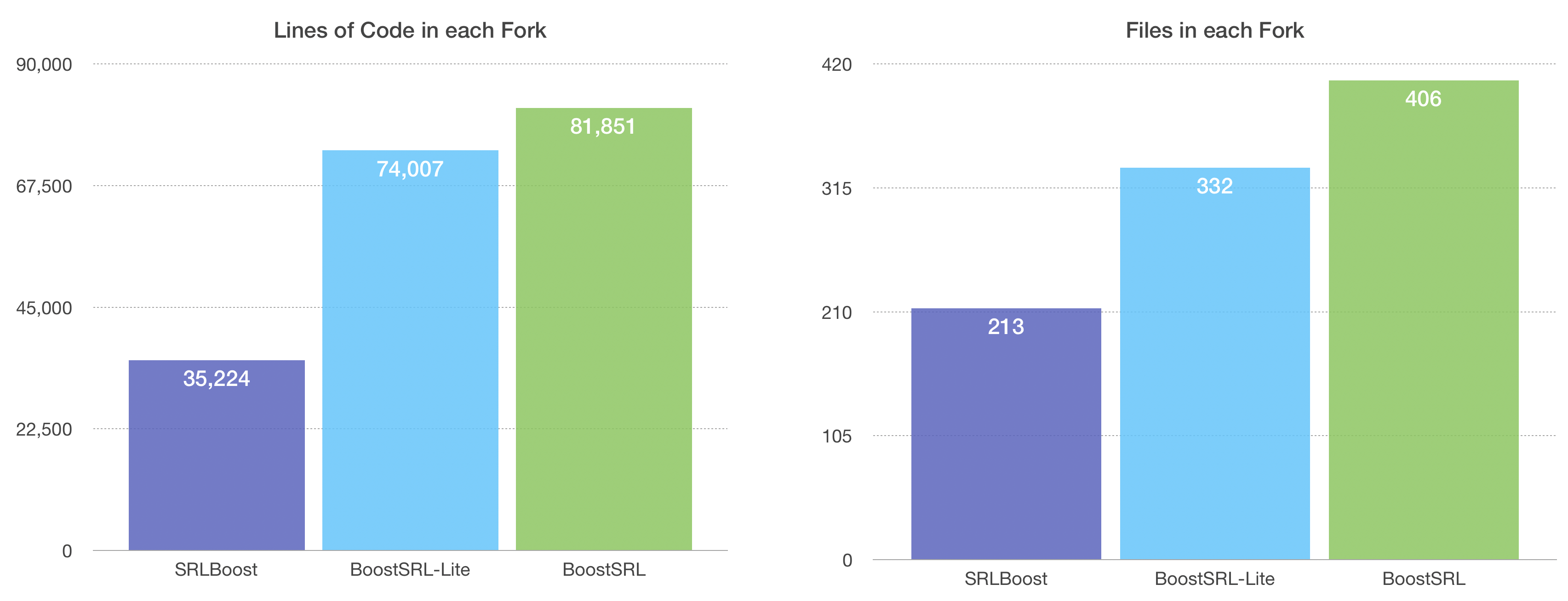

A package for learning Statistical Relational Models with Gradient Boosting, forked for use as srlearn's core.

It's basically BoostSRL but half the size and significantly faster.

Graphs at commit cb952a4, measured with cloc-1.84.

(Smaller numbers are better.)

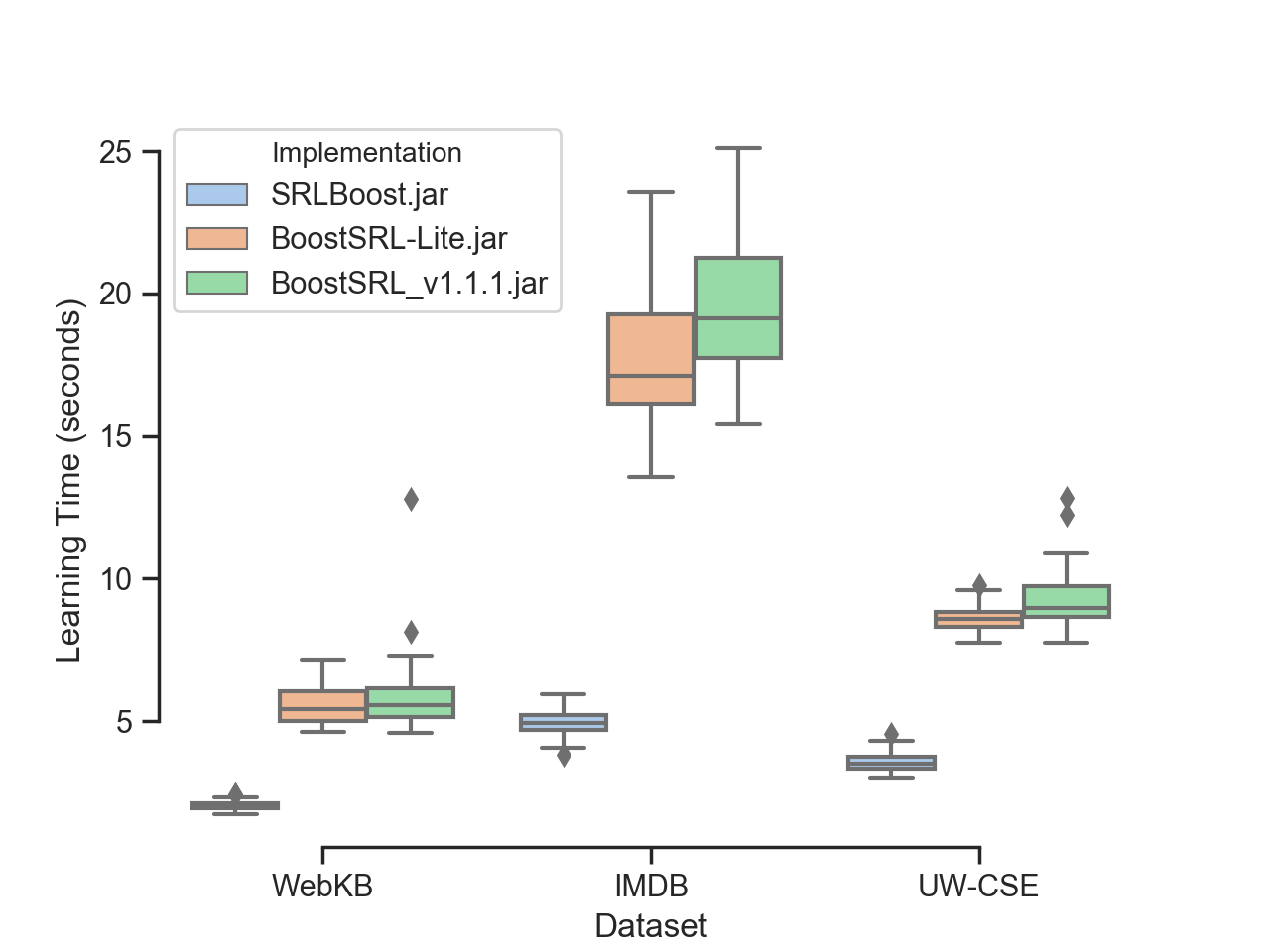

This box plot compares the learning time (in seconds) for three data sets and three implementations of learning relational dependency networks. BoostSRL-Lite was built from the repository on GitHub, and BoostSRL_v1.1.1 is the latest official release.

Each data set included 4-5 cross-validation folds, and these results were averaged over 10 runs. This suggests that SRLBoost is at least twice as fast as the other implementations.

With some parameter tuning we have sped this up even further.

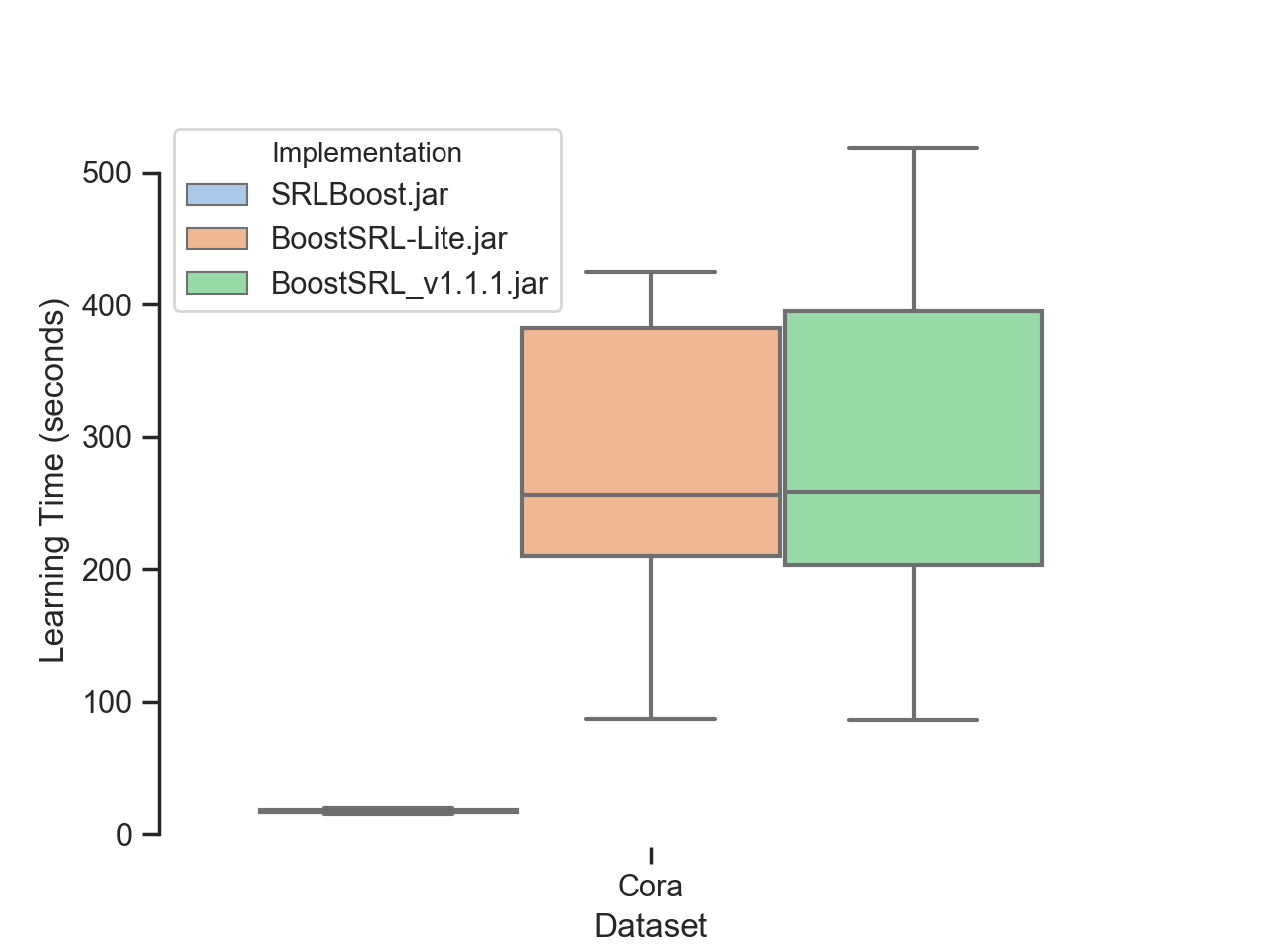

The tiny bar on the left shows that the average SRLBoost time for Cora is around 17 seconds, compared to around 4.5 minutes (270 seconds) for BoostSRL-Lite and BoostSRL (that's more like 15x faster).

However, on Cora this does lead to slightly degraded performance in AUC ROC, AUC PR, and conditional log likelihood (CLL), as shown in the table below.

| Implementation | mean AUC ROC | mean AUC PR | mean CLL | mean F1 |
|---|---|---|---|---|
| SRLBoost | 0.61 | 0.93 | -0.27 | 0.96 |
| BoostSRL-Lite | 0.65 | 0.94 | -0.29 | 0.96 |
| BoostSRLv1.1.1 | 0.65 | 0.94 | -0.29 | 0.78 |

Measurements used to produce this table are available online (`three_jar_comparison.csv`).

A main aim of this project is a faster library. The faster parameters are now the defaults, and we intend to expose them as options that users can tune in cases where slower, more effective learning is critical.

The SRLBoost project structure still closely mirrors other implementations.

We're using Gradle to help with building and testing, targeting Java 8.
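Since the build targets Java 8, it can help to confirm which JDK is on your `PATH` before running Gradle. The following is a minimal sketch for macOS/Linux shells, not part of SRLBoost: `java_major_version` is a hypothetical helper name, and the sketch assumes `java -version` prints a quoted version string such as `"1.8.0_292"` or `"17.0.1"`.

```shell
# Hypothetical helper (not part of SRLBoost): print the major version
# for a Java version string, e.g. "1.8.0_292" or "17.0.1".
java_major_version() {
    # Legacy scheme: strip a leading "1." so "1.8.0_292" becomes "8.0_292".
    jmv_rest="${1#1.}"
    # Keep only the leading run of digits.
    printf '%s\n' "${jmv_rest%%[!0-9]*}"
}

# Only query the JDK when one is installed.
if command -v java >/dev/null 2>&1; then
    # `java -version` writes to stderr; the version is the first quoted field.
    version_string="$(java -version 2>&1 | awk -F'"' '/version/ {print $2; exit}')"
    echo "Detected Java major version: $(java_major_version "$version_string")"
else
    echo "No java executable found on PATH" >&2
fi
```

`./gradlew --version` also reports the JVM that Gradle itself will run on.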

- Open Windows Terminal in Administrator mode, and use Chocolatey (or your preferred package manager) to install a Java Development Kit.

  `choco install openjdk`

- Clone and build the package.

  `git clone https://github.com/srlearn/SRLBoost.git`

  `cd .\SRLBoost\`

  `.\gradlew build`

- Learn with a basic data set (switching the `X.Y.Z` for the version you built):

  `java -jar .\build\libs\srlboost-X.Y.Z.jar -l -train .\data\Toy-Cancer\train\ -target cancer`

- Query the model on the test set (again, switching the `X.Y.Z`):

  `java -jar .\build\libs\srlboost-X.Y.Z.jar -i -model .\data\Toy-Cancer\train\models\ -test .\data\Toy-Cancer\test\ -target cancer`

- Open your terminal (macOS: ⌘ + spacebar + "Terminal"), and use Homebrew to install a Java Development Kit. (On Linux: `apt`, `dnf`, or `yum` depending on your Linux flavor.)

  `brew install openjdk`

- Clone and build the package.

  `git clone https://github.com/srlearn/SRLBoost.git`

  `cd SRLBoost/`

  `./gradlew build`

- Run a basic example (switching the `X.Y.Z` for the version you built):

  `java -jar build/libs/srlboost-X.Y.Z.jar -l -train data/Toy-Cancer/train/ -target cancer`

- Query the model on the test set (again, switching the `X.Y.Z`):

  `java -jar build/libs/srlboost-X.Y.Z.jar -i -model data/Toy-Cancer/train/models/ -test data/Toy-Cancer/test/ -target cancer`
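On macOS/Linux, the `X.Y.Z` substitution in the steps above can be avoided by letting the shell find the built jar. This is a rough sketch rather than anything shipped with SRLBoost: `find_srlboost_jar` is a hypothetical helper name, and it assumes the build leaves a jar matching `srlboost-*.jar` under `build/libs/`.

```shell
# Hypothetical helper (not part of SRLBoost): print the path of the first
# srlboost jar found in the given directory (default: build/libs).
# Returns non-zero if no matching jar exists.
find_srlboost_jar() {
    libs_dir="${1:-build/libs}"
    for jar in "$libs_dir"/srlboost-*.jar; do
        # An unmatched glob is left as a literal string, so check for a real file.
        if [ -f "$jar" ]; then
            printf '%s\n' "$jar"
            return 0
        fi
    done
    return 1
}

# Usage from the repository root, after ./gradlew build:
#   JAR="$(find_srlboost_jar)" || { echo "No jar found; run ./gradlew build" >&2; exit 1; }
#   java -jar "$JAR" -l -train data/Toy-Cancer/train/ -target cancer
#   java -jar "$JAR" -i -model data/Toy-Cancer/train/models/ -test data/Toy-Cancer/test/ -target cancer
```

If several versions have been built, the helper returns whichever the glob lists first, so clearing old jars from `build/libs/` first keeps the choice unambiguous.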