rightmove.co.uk is one of the UK's largest property listings websites, hosting thousands of listings of properties for sale and to rent.

rightmove_webscraper.py is a simple Python interface to scrape property listings from the website and prepare them in a Pandas dataframe for analysis.

Version 1.1 is available to install via Pip:

```bash
pip install -U rightmove-webscraper
```

- Go to rightmove.co.uk and search for whatever region, postcode, city, etc. you are interested in. You can also add any additional filters, e.g. property type, price, number of bedrooms, etc.
- Run the search on the rightmove website and copy the URL of the first results page.
- Create an instance of the class with the URL as the init argument.

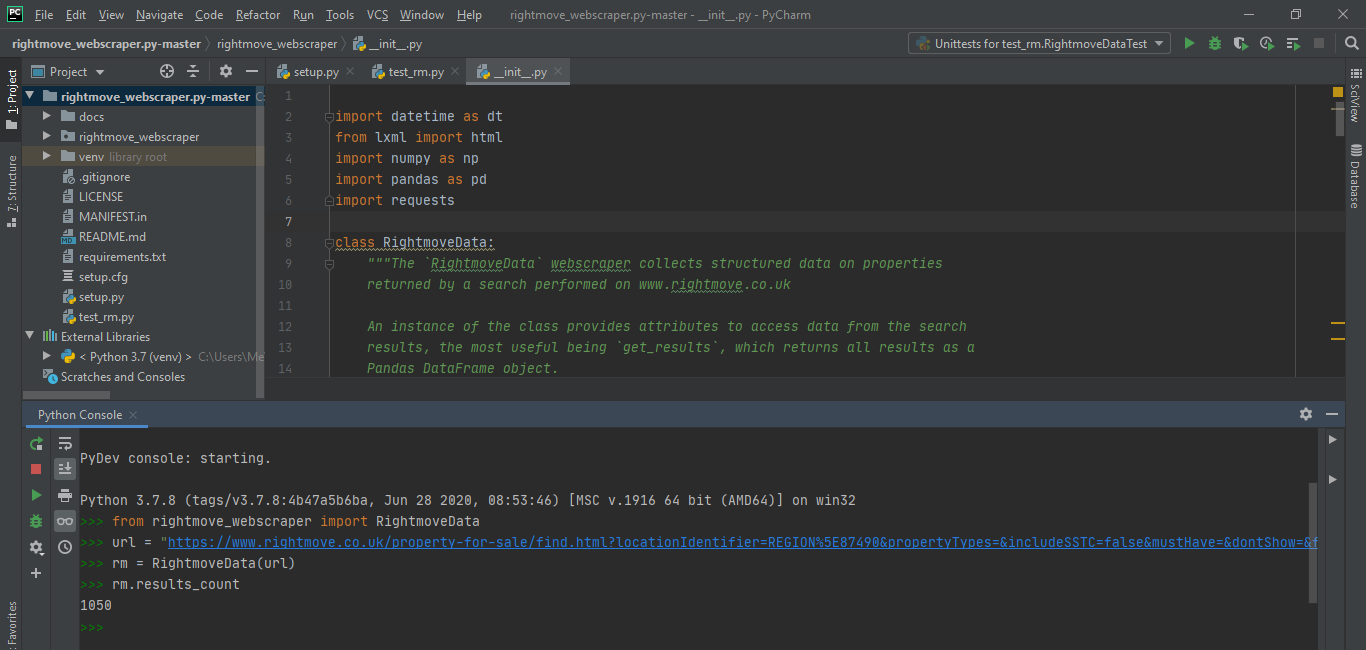

```python
from rightmove_webscraper import RightmoveData

url = "https://www.rightmove.co.uk/property-for-sale/find.html?searchType=SALE&locationIdentifier=REGION%5E94346"
rm = RightmoveData(url)
```

When a `RightmoveData` instance is created, it automatically scrapes every page of results available from the search URL. However, please note that rightmove restricts the total number of results pages to 42. Therefore, if you perform a search which could theoretically return many thousands of results (e.g. "all rental properties in London"), in practice you are limited to scraping only the first 1050 results (42 pages × 25 listings per page = 1050 listings). A couple of suggested workarounds for this limitation are:

- Reduce the search area and perform multiple scrapes, e.g. perform a search for each London borough instead of one search for all of London.

- Add a search filter to shorten the timeframe in which listings were posted, e.g. search for all listings posted in the past 24 hours, and schedule the scrape to run daily.
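The cap arithmetic and the split-and-merge workaround can be sketched in plain pandas. Both helpers below are hypothetical and not part of the package: `scrape_coverage` simply reuses the 42-page and 25-listings-per-page figures quoted above (rightmove may change them), and `scrape_many` accepts any function that turns a search URL into a DataFrame, then drops repeated listings, assuming a `url` column identifies each listing.

```python
import math
from typing import Callable, Iterable

import pandas as pd

# Limits quoted in this README; rightmove may change them.
MAX_PAGES = 42
LISTINGS_PER_PAGE = 25


def scrape_coverage(total_results: int) -> dict:
    """Estimate how much of a search falls within the page cap."""
    reachable = min(total_results, MAX_PAGES * LISTINGS_PER_PAGE)
    return {
        "pages_needed": math.ceil(total_results / LISTINGS_PER_PAGE),
        "listings_reachable": reachable,
        "listings_missed": total_results - reachable,
    }


def scrape_many(urls: Iterable[str],
                scrape: Callable[[str], pd.DataFrame],
                key: str = "url") -> pd.DataFrame:
    """Run one scrape per search URL and merge the results.

    Overlapping areas, or daily runs that see the same listing twice,
    can repeat a listing, so only its first occurrence is kept.
    """
    frames = [scrape(url) for url in urls]
    if not frames:
        return pd.DataFrame()
    combined = pd.concat(frames, ignore_index=True)
    return combined.drop_duplicates(subset=key).reset_index(drop=True)


# Demo with canned DataFrames standing in for real scrapes.
canned = {
    "search-a": pd.DataFrame({"url": ["/p/1", "/p/2"], "price": [500000, 650000]}),
    "search-b": pd.DataFrame({"url": ["/p/2", "/p/3"], "price": [650000, 400000]}),
}
print(scrape_coverage(20000))
merged = scrape_many(canned, lambda u: canned[u])
print(merged["url"].tolist())
```

With the package installed, something like `scrape_many(borough_urls, lambda u: RightmoveData(u).get_results)` could merge per-borough searches, where `borough_urls` is a hypothetical list of search URLs you have copied yourself. Check `rm.get_results.columns` first to confirm which column uniquely identifies a listing.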

Finally, note that not every piece of data listed on the rightmove website is scraped; instead, only a subset of the most useful features is collected, such as price, address, number of bedrooms and listing agent. If there are additional data items you think should be scraped, please submit an issue or, even better, find the XPath for the item and submit a pull request with the changes.
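Before opening a pull request, it can help to check that a candidate path actually picks out the field you want. The sketch below uses the standard library's `xml.etree.ElementTree`, which supports only a subset of XPath and needs well-formed markup; it is a stand-in for testing a path, not how the package parses pages. The markup, the `tenure` field and the `extract_field` helper are all invented for illustration.

```python
import xml.etree.ElementTree as ET
from typing import Optional

# A made-up, well-formed fragment standing in for one listing's markup;
# real rightmove tags and class names will differ.
LISTING = """<div class="listing">
  <span class="price">£650,000</span>
  <address>Example Road, London SW11</address>
  <span class="tenure">Leasehold</span>
</div>"""


def extract_field(fragment: str, path: str) -> Optional[str]:
    """Return the stripped text of the first node matching `path`, or None."""
    root = ET.fromstring(fragment)
    node = root.find(path)
    if node is None or node.text is None:
        return None
    return node.text.strip()


print(extract_field(LISTING, ".//span[@class='tenure']"))
print(extract_field(LISTING, ".//span[@class='floor-area']"))
```

A path that returns `None` on a listing where the field is visibly present is a sign the path (or the markup it assumes) needs another look.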

The following instance methods and properties are available to access the scraped data.

Full results as a pandas DataFrame

```python
rm.get_results.head()
```

Average price of all listings scraped

```python
rm.average_price
```

```
1650065.841025641
```

Total number of listings scraped

```python
rm.results_count
```

```
195
```
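These properties describe the whole scrape. For a filtered subset you can compute comparable figures from the DataFrame yourself. A minimal sketch, assuming `price` and `number_bedrooms` columns; pandas' `mean()` skips listings with no price, which may not match exactly how `rm.average_price` treats them.

```python
import pandas as pd

# Stand-in for rm.get_results; the column names are assumptions.
df = pd.DataFrame({
    "price": [450000, 700000, None, 1200000],
    "number_bedrooms": [1, 2, 2, 3],
})

listing_count = len(df)            # every row, with or without a price
mean_price = df["price"].mean()    # pandas skips missing prices

# The same figures for a filtered subset, e.g. two-bedroom listings only.
two_beds = df[df["number_bedrooms"] == 2]
print(listing_count, mean_price, len(two_beds), two_beds["price"].mean())
```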

Summary statistics

By default, this shows the number of listings and average price grouped by the number of bedrooms:

```python
rm.summary()
```

| | number_bedrooms | count | mean |
|---|---|---|---|
| 0 | 0 | 39 | 9.119231e+05 |
| 1 | 1 | 46 | 1.012935e+06 |
| 2 | 2 | 88 | 1.654237e+06 |
| 3 | 3 | 15 | 3.870867e+06 |
| 4 | 4 | 2 | 2.968500e+06 |
| 5 | 5 | 1 | 9.950000e+06 |
| 6 | 6 | 1 | 9.000000e+06 |

Alternatively, group the results by any other column of the `.get_results` DataFrame, for example by postcode:

```python
rm.summary(by="postcode")
```

| | postcode | count | mean |
|---|---|---|---|
| 0 | SW11 | 76 | 1.598841e+06 |
| 1 | SW8 | 28 | 2.171357e+06 |
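For readers curious what such a summary involves, here is a rough pandas equivalent: drop listings without a price, group by a column, and take the count and mean of `price`. It is a sketch, not the package's own code; `summarise` is a hypothetical name and the `price`, `number_bedrooms` and `postcode` columns are assumed.

```python
import pandas as pd


def summarise(df: pd.DataFrame, by: str = "number_bedrooms") -> pd.DataFrame:
    """Count and mean price per group, returned as a flat table."""
    priced = df.dropna(subset=["price"])          # ignore unpriced listings
    table = priced.groupby(by)["price"].agg(["count", "mean"])
    return table.reset_index()                    # group key becomes a column


# Small stand-in for rm.get_results.
df = pd.DataFrame({
    "price": [400000, 500000, 900000, None, 1500000],
    "number_bedrooms": [1, 1, 2, 2, 3],
    "postcode": ["SW8", "SW11", "SW11", "SW8", "SW11"],
})
print(summarise(df))
print(summarise(df, by="postcode"))
```

Groups come back sorted by their key, which is why `SW11` sorts before `SW8` (string order, not numeric order).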

As @toddy86 has pointed out, per the terms and conditions here, the use of web scrapers is unauthorised by rightmove. So please don't use this package!