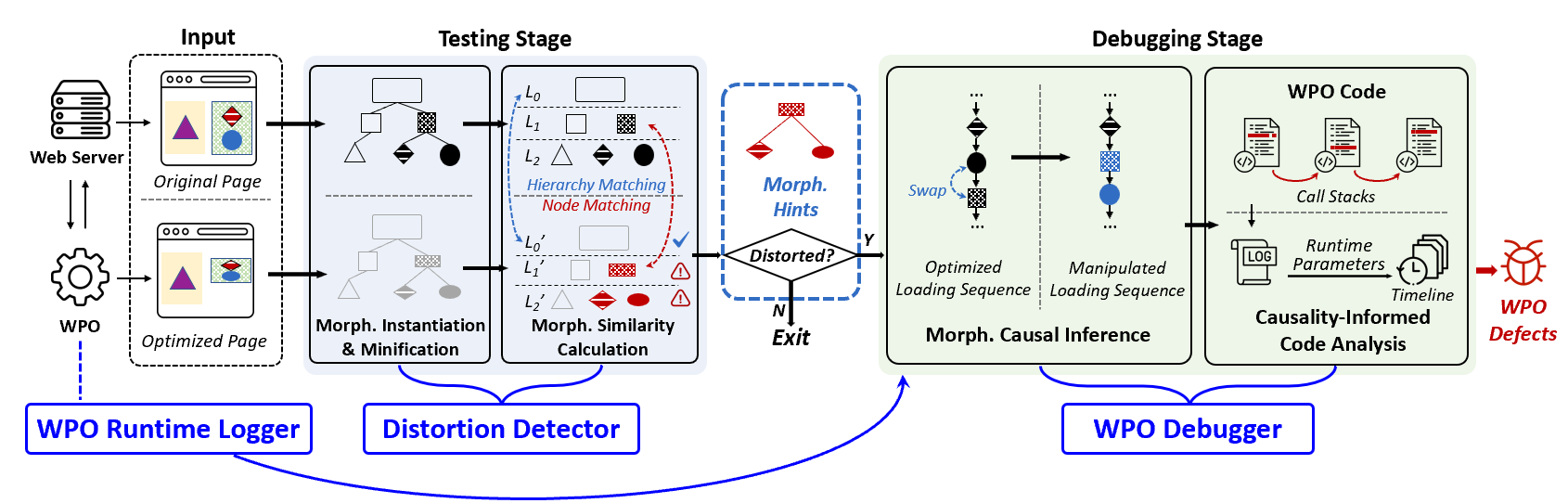

This is the artifact README for the paper "Visual-Aware Testing and Debugging for Web Performance Optimization" published in the proceedings of the International World Wide Web Conference (WWW), 2023. In this paper, we present Vetter, a novel and effective system for automatically testing and debugging visual distortions from the perspective of how modern web pages are generated.

The above figure shows the architecture overview of Vetter, which contains three major components: WPO Runtime Logger, Distortion Detector, and WPO Debugger. WPO Runtime Logger records the WPO’s function call stacks and runtime logs. This component is built upon gdb, Go Execution Tracer, and OpenTelemetry. Distortion Detector records the page’s resources and their loading sequence using Mahimahi. It also records the SkPaint API invocations with the Skia web_to_skp tool during page loading to construct and compare the morphological segment trees (MSTs) of two web pages. Finally, WPO Debugger uses the puppeteer library to monitor and manipulate the page loading process for debugging visual distortions. The source code and detailed documentation of the three components are placed in three folders: WPO_runtime_logger/, Distortion_detector/, and WPO_debugger/, respectively.

To use Vetter for testing and debugging WPO-incurred visual distortions, we highly recommend that you match the following hardware and software environments to avoid unexpected bugs.

- Hardware Setups. You should prepare at least two servers and one client for using Vetter: one server (S1 for short) acts as the web page server that holds the web contents; the other server (S2) is the WPO server that runs a specific WPO. The client should have access to both servers.

- Software Environments.

S1 is a typical web page server, which should meet the following prerequisites.

- Ubuntu 16.04 (recommended)
- Nginx 1.10 or later

S2 is a WPO server, where we should also deploy the WPO runtime logger. To run the four WPOs and their corresponding runtime loggers, S2 should provide the following environment.

- Ubuntu 16.04 (recommended)
- Python 3.7
- Java 1.8 or later
- Go 1.19.1 or later
- Node.js 17.0
- OpenSSL 1.0 or later

For the client, the recommended environment is listed below.

- Ubuntu 16.04 (recommended)
- Google Chrome v95
- Mahimahi 0.98
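Before deploying, it can help to confirm that the installed tools meet the minimum versions listed above. The following is a minimal sketch in Python, assuming that each tool reports a dotted numeric version somewhere in its version string. The helpers `parse_version` and `meets_minimum` are hypothetical and not part of Vetter.

```python
import re


def parse_version(text):
    """Extract the first dotted numeric version (e.g. '1.19.1') as a tuple of ints."""
    match = re.search(r"\d+(?:\.\d+)*", text)
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def meets_minimum(installed, required):
    """Return True if the installed version is at least the required one."""
    a, b = parse_version(installed), parse_version(required)
    # Pad the shorter tuple with zeros so that '1.10' compares equal to '1.10.0'.
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


# Minimums taken from the S2 list above.
REQUIRED = {"go": "1.19.1", "java": "1.8", "openssl": "1.0"}
```

For example, `meets_minimum("go version go1.19.1 linux/amd64", REQUIRED["go"])` checks the kind of string that a version command typically prints against the Go minimum.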

The WPO runtime logger sits side by side with the WPO on the WPO server (i.e., S2), and it should fit the specific implementation of the WPO. For example, Compy is implemented in Go, and thus its WPO runtime logger can be built directly on the Go Execution Tracer (an off-the-shelf tracer in Go that tracks OS execution events such as goroutine creation/blocking/unblocking and syscall enter/exit/block). For other WPOs including Ziproxy, Fawkes, and SipLoader, we take a similar approach and build the WPO runtime logger on existing tools including gdb and OpenTelemetry. The detailed instructions are coming soon.

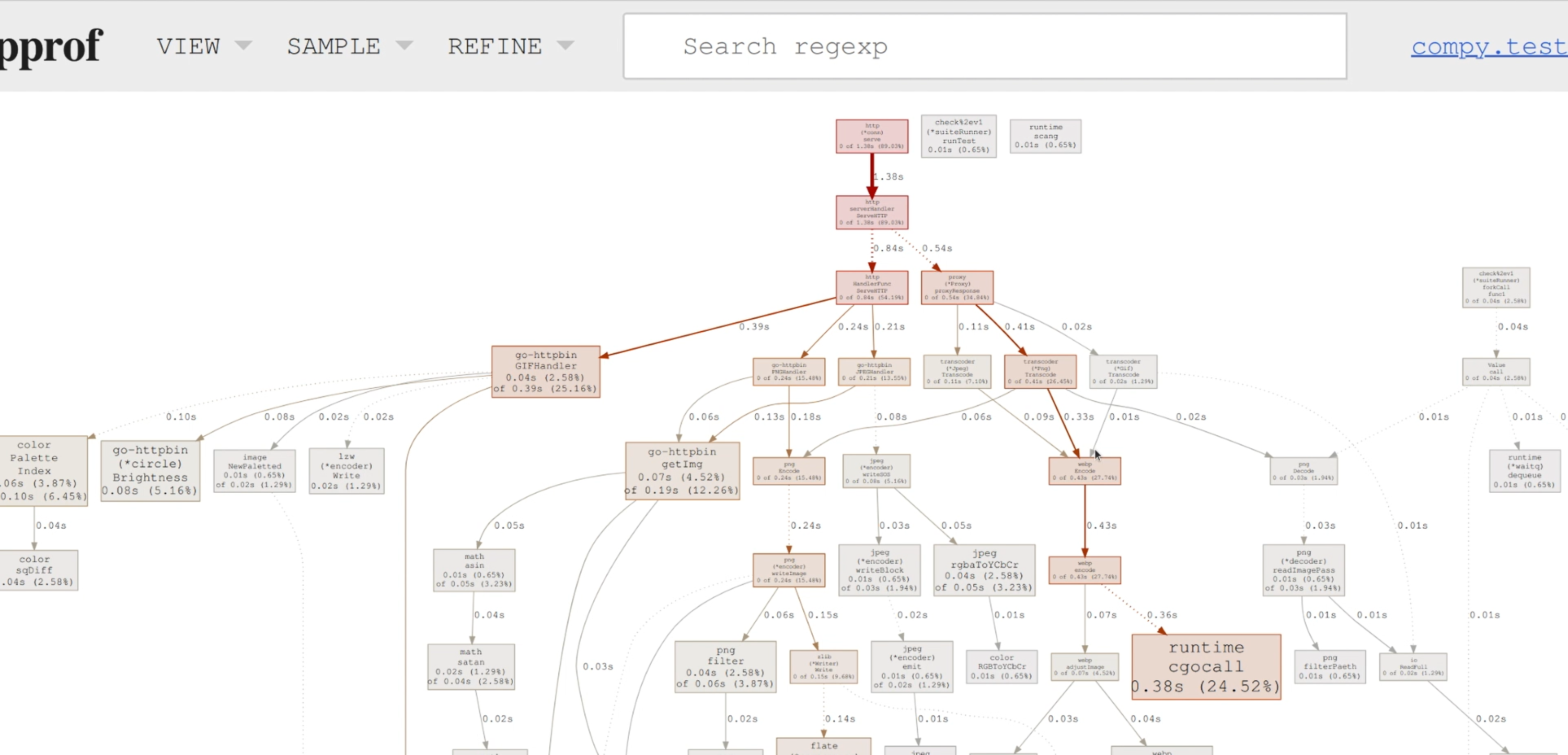

Below we take building the WPO runtime logger for Compy as a typical example.

- Download and install Compy.

      apt-get install -y libjpeg8-dev openssl ssl-cert  # install the dependencies of Compy
      go get github.com/barnacs/compy
      cd go/src/github.com/barnacs/compy/
      go install

  To use Compy over HTTPS, generate a certificate for your host using OpenSSL.

      openssl req -x509 -newkey rsa:2048 -nodes -keyout cert.key -out cert.crt -days 3650 -subj '/CN=<your-domain>'

  Start Compy as the web optimization proxy.

      cd go/src/github.com/barnacs/compy/
      compy -cert cert.crt -key cert.key -ca ca.crt -cakey ca.key

- Install `pprof`, the core module of Go Execution Tracer, by executing the following command.

      go install github.com/google/pprof@latest

  The binary will be installed in `$GOPATH/bin` (`$HOME/go/bin` by default).

- (Optional) Install `graphviz` to generate graphic visualizations of the results output by `pprof`.

      brew install graphviz || apt-get install graphviz

- Start `pprof` to monitor the function call stacks and runtime logs of Compy.

      cd go/src/github.com/barnacs/compy/
      go test -bench=. -cpuprofile=cpu.prof
      go tool pprof -http=:9980 cpu.prof

- Visit a web page, and the WPO call stacks together with the runtime logs can be found at `http://localhost:9980/ui/`. The function call stacks and runtime logs of the WPO are shown in the figure below.

The distortion detector mainly contains two parts. First, it records and replays web pages for testing visual distortions. This logic resides on the web page server (i.e., S1). Second, the distortion detector collects the SkPaint logs of the original and optimized web pages, constructs morphological segment trees (MSTs), and calculates the morphological similarity between the two pages. This part works on the client side.

Use the mm-webrecord tool of Mahimahi to record the loading process of a certain web page that needs optimization. For example, we can record http://www.example.com by:

    # Record http://www.example.com
    OUTPUT_DIR=output
    WEB_PAGE_URL=http://www.example.com
    mm-webrecord ${OUTPUT_DIR} /usr/bin/google-chrome --disable-fre --no-default-browser-check --no-first-run --window-size=1920,1080 --ignore-certificate-errors --user-data-dir=./nonexistent/$(date +%s%N) ${WEB_PAGE_URL}

The above shell script will launch a Chrome browser instance, automatically navigate to the target web page, and record the loading process of the original web page. The captured resources are saved in the `OUTPUT_DIR` directory for replay.
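When many pages need to be recorded, the same command line can be assembled programmatically. The sketch below only builds the argument list using the flags from the shell script above. The function names `chrome_args` and `record_command` are hypothetical, and the fresh profile directory is derived from a nanosecond timestamp as an assumed stand-in for `$(date +%s%N)`.

```python
import time

CHROME = "/usr/bin/google-chrome"


def chrome_args(url, profile_root="./nonexistent"):
    """Build the Chrome command line used above, with a fresh profile directory."""
    profile_dir = f"{profile_root}/{time.time_ns()}"
    return [
        CHROME,
        "--disable-fre",
        "--no-default-browser-check",
        "--no-first-run",
        "--window-size=1920,1080",
        "--ignore-certificate-errors",
        f"--user-data-dir={profile_dir}",
        url,
    ]


def record_command(output_dir, url):
    """Prefix the Chrome command with mm-webrecord and its output directory."""
    return ["mm-webrecord", output_dir] + chrome_args(url)
```

The resulting list can be passed to `subprocess.run(record_command("output", "http://www.example.com"), check=True)` on a machine where Mahimahi and Chrome are installed.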

Then, we replay the web page so that the WPO can perform optimization before/during the page loading:

    # Replay the recorded http://www.example.com
    mm-webreplay ${OUTPUT_DIR}
    /usr/bin/google-chrome --disable-fre --no-default-browser-check --no-first-run --window-size=1920,1080 --ignore-certificate-errors --user-data-dir=./nonexistent/$(date +%s%N) ${WEB_PAGE_URL}

Before using Skia, make sure that you have successfully installed Chrome on the client.

- Download the latest version of Skia.

      git clone https://skia.googlesource.com/skia.git

- Use the `web_to_skp` tool to capture the SkPaint logs of a certain web page. The output logs are placed in the folder `./skia/skia_data/`.

      cd ./skia/skia/skia/experimental/tools/
      # collecting the SkPaint logs of www.example.com
      ./web_to_skp "/usr/bin/google-chrome" http://www.example.com/ "./skia/skia_data/example.skp"

- Convert the SkPaint logs to a JSON file for further processing.

      cd ./skia/skia/skia/out/Debug
      ./skp_parser ./skia/skia_data/example.skp >> ./skia/skia_data/example.json

- Put the collected SkPaint logs of both the original and the optimized web pages in the folder `Distortion_detector/raw_data/`. Then, build the MSTs of the two web pages by running the following command. Note that the two MSTs are output in the same file `MST_output.txt`.

      cd Distortion_detector
      python3 MST_construction.py original_skpaint.data optimized_skpaint.data MST_output.txt

- Conduct hierarchy matching and node matching between the two MSTs.

      python3 MST_comparison.py ./raw_data/ result.txt

- Calculate the similarity between two MSTs and generate the morphological hints.

      g++ MorphSIM_cal.cpp -o run
      ./run result.txt
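To give a rough intuition for comparing two trees level by level, here is a toy sketch in Python. It is not the metric implemented in `MorphSIM_cal.cpp`, and it does not reproduce the matching done by `MST_comparison.py`. The tree layout (nested dicts with `label` and `children`) and the function names are assumptions made purely for illustration.

```python
from collections import Counter


def level_labels(tree, depth=0, acc=None):
    """Count (depth, label) pairs over every node of a nested-dict tree."""
    if acc is None:
        acc = Counter()
    acc[(depth, tree["label"])] += 1
    for child in tree.get("children", []):
        level_labels(child, depth + 1, acc)
    return acc


def toy_similarity(tree_a, tree_b):
    """Share of nodes that have a same-label counterpart on the same level."""
    a, b = level_labels(tree_a), level_labels(tree_b)
    matched = sum((a & b).values())  # multiset intersection of the two trees
    total = max(sum(a.values()), sum(b.values()))
    return matched / total


# Hypothetical trees: the optimized page has lost its image segment.
original = {"label": "root", "children": [{"label": "image"}, {"label": "text"}]}
optimized = {"label": "root", "children": [{"label": "text"}]}
```

With these two trees, `toy_similarity(original, optimized)` is lower than `toy_similarity(original, original)`, because the image segment has no counterpart in the optimized page.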

The WPO Debugger mainly works on the client side. It also cooperates with the distortion detector (which works on the web page server S1 and the client) to repeatedly modify and replay the web pages for debugging the visual distortions. Specifically, the WPO debugger gradually restores the modified resources/loading sequences to the original ones to see whether the distortion is resolved. If so, the “real culprits” of the distortion are among the most recently restored resources/sequences.
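The gradual restoration described above can be sketched as a simple loop. This is a minimal illustration, assuming that the page state is a mapping from a resource to its setting and that a caller-supplied `is_distorted` check is available (in practice it would rebuild and replay the page and compare the MSTs). Both `find_culprits` and `is_distorted` are hypothetical names, not part of Vetter's code.

```python
def find_culprits(original, modified, is_distorted, batch_size=1):
    """Restore modified entries batch by batch until the distortion disappears.

    Returns the keys of the batch whose restoration resolved the distortion,
    or an empty list if the page was never distorted or was never fixed.
    """
    current = dict(modified)
    if not is_distorted(current):
        return []
    # Only entries that the WPO actually changed are candidates.
    changed = [k for k in modified if k in original and modified[k] != original[k]]
    for start in range(0, len(changed), batch_size):
        batch = changed[start:start + batch_size]
        for key in batch:
            current[key] = original[key]
        if not is_distorted(current):
            return batch  # the culprits are among the most recently restored
    return []
```

A larger `batch_size` needs fewer replays but narrows the culprits down less precisely.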

This part details the basic workflow of Vetter's WPO debugger. We use SipLoader coupled with Amazon's landing page as a typical example to illustrate how we can gradually restore the resource loading sequences.

- Dependencies

      pip3 install beautifulsoup4
      npm install -g delay puppeteer  # May need root privilege, or you can install them locally without the "-g" flag

SipLoader directly modifies the recorded web pages offline to perform optimizations. As a result, Vetter debugs SipLoader based on the modified pages in an offline manner as well. Of course, Vetter's morphological causal inference is generalizable to other WPOs that operate on the resources during actual page loading. This depends on how the WPO performs optimization routines.

We take the landing page of amazon.com as an example. To record amazon.com, simply run the shell script collect.sh, which will automatically record the loading process of amazon.com using Mahimahi:

    bash collect.sh

When the page has been fully loaded, you will see a directory named `amazon.com` with all the resource files recorded in it during page loading.

- Performing Optimization Routines on the Page. To apply the optimizations of SipLoader on recorded web pages, you need to first `cd` to the `SipLoader_mod` directory, where we provide a modified version of SipLoader that enables you to change the loading sequences after the page is optimized. Then, just run the following commands to install SipLoader to the recorded page:

      # Extracting the resources in HTML files
      python3 prepare_html.py
      # Injecting SipLoader's resource load scheduling logic
      python3 install_siploader.py

  If everything works fine, you will see a new directory named `amazon.com_reordered`. This directory contains the optimized version of the page.

- Changing Loading Orders for the Resources. The installation process of SipLoader outputs additional directories, including `chunked_html`, `inline_js`, and `url_ids`. Pay special attention to `url_ids`, where you can change the loading sequences for the optimized pages.

  For each optimized web page, there are two corresponding JSON files in the `url_ids` directory. Taking amazon.com as an example, the file `amazon.com_tag_id.json` specifies the resources' parent elements in the DOM tree, while `amazon.com_res_id.json` specifies all the resource URLs and their IDs. In particular, this ID is also the loading order of the resource during the page load scheduling of SipLoader. A smaller ID indicates that the corresponding resource will be loaded earlier. You can manually change the resource loading order to restore the original loading sequence:

      // amazon.com_res_id.json
      {
        "https://www.amazon.com/": {
          // ...
          "https://m.media-amazon.com/images/W/WEBP_402378-T2/images/I/61cYZXdazOL._SX1500_.jpg": 8,
          "https://images-na.ssl-images-amazon.com/images/W/WEBP_402378-T2/images/G/01/AmazonExports/Fuji/2020/May/Dashboard/Fuji_Dash_Returns_1x._SY304_CB432774714_.jpg": 9999, // Set this resource to be loaded last
          "https://images-na.ssl-images-amazon.com/images/W/WEBP_402378-T2/images/G/01/AmazonExports/Fuji/2022/February/DashboardCards/GW_CONS_AUS_HPC_HPCEssentials_CatCard_Desktop1x._SY304_CB627424361_.jpg": 10,
          // ...
          "https://images-na.ssl-images-amazon.com/images/W/WEBP_402378-T2/images/G/01/AmazonExports/Fuji/2021/June/Fuji_Quad_Keyboard_1x._SY116_CB667159063_.jpg": 20,
          "https://images-na.ssl-images-amazon.com/images/W/WEBP_402378-T2/images/G/01/AmazonExports/Fuji/2021/June/Fuji_Quad_Mouse_1x._SY116_CB667159063_.jpg": -1, // Set this resource to be loaded first
          "https://images-na.ssl-images-amazon.com/images/W/WEBP_402378-T2/images/G/01/AmazonExports/Fuji/2021/June/Fuji_Quad_Chair_1x._SY116_CB667159060_.jpg": 22,
          // ...
        }
      }
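Editing the IDs by hand becomes tedious for pages with many resources, so the change can also be scripted. The following Python sketch assumes the layout shown in the excerpt above (page URL mapped to a dictionary of resource URL and integer ID) and assumes that the file on disk is plain JSON without the `//` comments used here for explanation. The helper names are hypothetical.

```python
import json


def set_load_order(res_ids, page_url, resource_url, new_id):
    """Assign a new loading-order ID to one resource of one page, in place."""
    resources = res_ids[page_url]
    if resource_url not in resources:
        raise KeyError(f"{resource_url} is not listed for {page_url}")
    resources[resource_url] = new_id
    return res_ids


def load_order(res_ids, page_url):
    """Return the resource URLs sorted by ID (smaller ID means loaded earlier)."""
    resources = res_ids[page_url]
    return sorted(resources, key=resources.get)


def update_file(path, page_url, resource_url, new_id):
    """Apply set_load_order to a res_id JSON file and write it back."""
    with open(path) as f:
        res_ids = json.load(f)
    set_load_order(res_ids, page_url, resource_url, new_id)
    with open(path, "w") as f:
        json.dump(res_ids, f, indent=2)
```

For instance, `update_file` could be called with the path of `amazon.com_res_id.json`, the page URL `https://www.amazon.com/`, one resource URL from that file, and `-1` to move that resource to the front.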

  Note that the above process only changes the network fetching order of the resources. To further change the actual execution order of JavaScript files, you need to modify the JSON file in the `inline_js` directory so that the execution dependency between JavaScript code pieces can be changed. Specifically, you need to change the `prev_js_id` field of each JavaScript code segment, which specifies the preceding code segment that must be executed before the given one.

      // inline_js/amazon.com.json
      {
        "https://www.amazon.com/": {
          "0": {
            "code": "var aPageStart = (new Date()).getTime();",
            "prev_js_id": -1
          },
          "1": {
            "code": "var ue_t0=ue_t0||+new Date();",
            "prev_js_id": 0
          },
          "5": {
            "code": "\nwindow.ue_ihb ...",
            "prev_js_id": 1
          },
          "6": {
            // ...
          },
          // ...
        }
      }
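After editing the `prev_js_id` fields, it may be worth checking that the dependencies still form a valid order. The Python sketch below derives an execution order from the `prev_js_id` links and reports loops or dangling references. It assumes the layout shown in the excerpt above and assumes that `-1` means "no predecessor", as the first segment suggests. The function name `execution_order` is hypothetical.

```python
def execution_order(segments):
    """Return segment IDs in an order where each one follows its prev_js_id.

    Raises ValueError if the links form a cycle or point to a missing segment.
    """
    prev = {int(sid): int(seg["prev_js_id"]) for sid, seg in segments.items()}
    order, placed = [], set()

    def place(sid, trail):
        if sid in placed:
            return
        if sid in trail:
            raise ValueError(f"dependency cycle through segment {sid}")
        parent = prev[sid]
        if parent != -1:
            if parent not in prev:
                raise ValueError(f"segment {sid} depends on unknown segment {parent}")
            place(parent, trail | {sid})  # the predecessor must run first
        placed.add(sid)
        order.append(sid)

    for sid in sorted(prev):
        place(sid, frozenset())
    return order
```

Passing the per-page dictionary (for example, the value stored under `"https://www.amazon.com/"`) to `execution_order` gives one order that respects every `prev_js_id` link.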

  After you have changed the resource loading order in `amazon.com_res_id.json` (and the related JavaScript execution order if needed), you should delete the old `amazon.com_reordered` and run `python3 install_siploader.py` again to generate the new optimized page with the restored loading sequence.

We also use Mahimahi to replay the modified web page. In this way, developers can analyze the causal relation between the visual distortion and the restored resource loading sequence for SipLoader. To replay the page, try:

    mm-webreplay amazon.com_reordered/
    node replay.js amazon.com https://www.amazon.com

The script will output the CSS selectors and the geometry properties of all web page elements in `element_pos.json` for developers' further investigation.

Apart from the example amazon.com, you can debug SipLoader with other web pages by yourself. To this end, you should first record the web page by:

    DOMAIN_NAME='DOMAIN NAME OF THE PAGE'
    URL='URL OF THE PAGE'
    mm-webrecord ${DOMAIN_NAME} /usr/bin/google-chrome --disable-fre --no-default-browser-check --no-first-run --window-size=1920,1080 --ignore-certificate-errors --user-data-dir=./nonexistent/$(date +%s%N) ${URL}

Then, you need to modify `prepare_html.py` and `install_siploader.py` to replace the line `sites = [{'domain': 'amazon.com', 'url': 'https://www.amazon.com/'}]` with `sites = [{'domain': DOMAIN_NAME, 'url': URL}]`.

Afterwards, manipulate the web page again following the above steps and replay the page by:

    mm-webreplay ${DOMAIN_NAME}_reordered/
    node replay.js ${DOMAIN_NAME} ${URL}

Below, we list all the defects we have found for four representative WPOs: Compy, Ziproxy, Fawkes, and SipLoader. Note that for Ziproxy, we have emailed the developers with the defects we found (together with our suggested fixes) through their official channels, but have not received a reply yet.

| Index | Description | Issue/PR No. | Current State |
|---|---|---|---|
| 1 | Compy goes wrong when compressing some JPG/PNG images, which makes the images unable to load. | Issue-63 & PR-70 | Confirmed & Fixed |
| 2 | Compy fails to parse the compressed images. | Issue-64 | Reported |
| 3 | Compy can't deal with WebSocket, which breaks some interactive tasks like chatrooms and online services. | Issue-65 | Reported |
| 4 | Compy may block the redirecting process of some websites. | Issue-66 & PR-68 | Confirmed & Fixed |
| 5 | Compy can't support GIF images. | PR-70 | Confirmed & Fixed |

| Index | Description | Issue/PR No. | Current State |
|---|---|---|---|
| 1 | Ziproxy goes wrong when compressing some contents, which turns the original contents into garbled text. | - | Waiting For Reply |
| 2 | Ziproxy disturbs the loading sequence of JS files, leading to loading failure of web pages. | - | Waiting For Reply |
| 3 | Ziproxy cannot handle GIF files, leading to image display errors during transcoding. | - | Waiting For Reply |
| 4 | Ziproxy causes conflicting fields in the response header. | - | Waiting For Reply |

| Index | Description | Issue/PR No. | Current State |
|---|---|---|---|
| 1 | Fawkes can't handle some elements whose innerText has multiple lines. | Issue-14 | Reported |
| 2 | Fawkes mistakenly selects elements in template HTML. | Issue-13 | Reported |

| Index | Description | Issue/PR No. | Current State |
|---|---|---|---|
| 1 | SipLoader cannot track dependencies triggered by CSS files. | Issue-1 | Confirmed |
| 2 | SipLoader cannot handle dependency loops among resources. | Issue-2 | Confirmed |
| 3 | SipLoader cannot request cross-origin resources. | Issue-3 | Confirmed |
| 4 | Disordered page loading of websites with multiple HTML files. | Issue-4 | Confirmed |
| 5 | "404 Not Found" error when loading websites with multiple HTML files. | Issue-5 | Confirmed |
| 6 | SipLoader cannot handle some dynamic resources. | Issue-6 | Confirmed |
| 7 | A problem related to Chromium CDP used by SipLoader. | Issue-7 | Confirmed |
| 8 | CSS abnormality of some websites. | Issue-8 & PR-9 | Confirmed & Fixed |
| 9 | SipLoader fails to rewrite web page objects compressed by brotli. | Issue-10 & PR-12 | Confirmed & Fixed |
| 10 | SipLoader cannot distinguish between data URIs and real URLs in CSS files. | Issue-11 & PR-9 | Confirmed & Fixed |

We collected the web page snapshots as well as the invocation logs of SkPaint APIs when visiting the Alexa top and bottom 2,500 websites on Dec. 9th, 2021. They are available in snapshot.zip and skpdata.tar.gz, respectively, on Google Drive. Besides, we have made part of our dataset available in results_of_user_study.zip on Google Drive.

    @inproceedings{xinlei2023vetter,
      author = {Xinlei Yang and Wei Liu and Hao Lin and Zhenhua Li and Feng Qian and Xianlong Wang and Yunhao Liu and Tianyin Xu},
      title = {Visual-Aware Testing and Debugging for Web Performance Optimization},
      booktitle = {Proceedings of the International World Wide Web Conference (WWW)},
      year = {2023},
      publisher = {ACM}
    }