| Network normal, linkis, DSS normal |

Sign in |

1. Enter the website and enter the correct account and password 2. Click the login button |

Login succeeds |

V1.0.3 |

Functional test |

Manual test |

Normal |

|

Pass |

| Network normal, linkis, DSS normal |

Rule query |

Query rules with fuzzy matching on data source / database name / table name |

|

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Rule query |

Click the table name to view the table record information |

|

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Rule template |

Add Base Template |

|

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Rule template |

Delete the base template |

|

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Rule template |

Edit Base Rule Template |

|

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Rule template |

View template details |

|

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Rule template |

Add a cross-table template |

|

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Rule template |

Delete Cross-Table Template |

|

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Rule template |

Edit Cross-Table Rule Template |

|

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Rule template |

View Cross-Table Template Details |

|

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Rule template |

Batch delete templates |

|

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Task query |

Query by Task Status/Project/Data Source/Task No./Exception Notes |

The task list is returned |

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Task query |

Advanced filtering |

Query according to advanced filter criteria / Re-execute |

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Task query |

Data quality analysis exception |

|

V1.0.3 |

Functional test |

Manual test |

Abnormal |

|

|

| Network normal, linkis, DSS normal |

Task query |

Batch re-execute and stop |

Tasks are re-executed and stopped in batch |

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

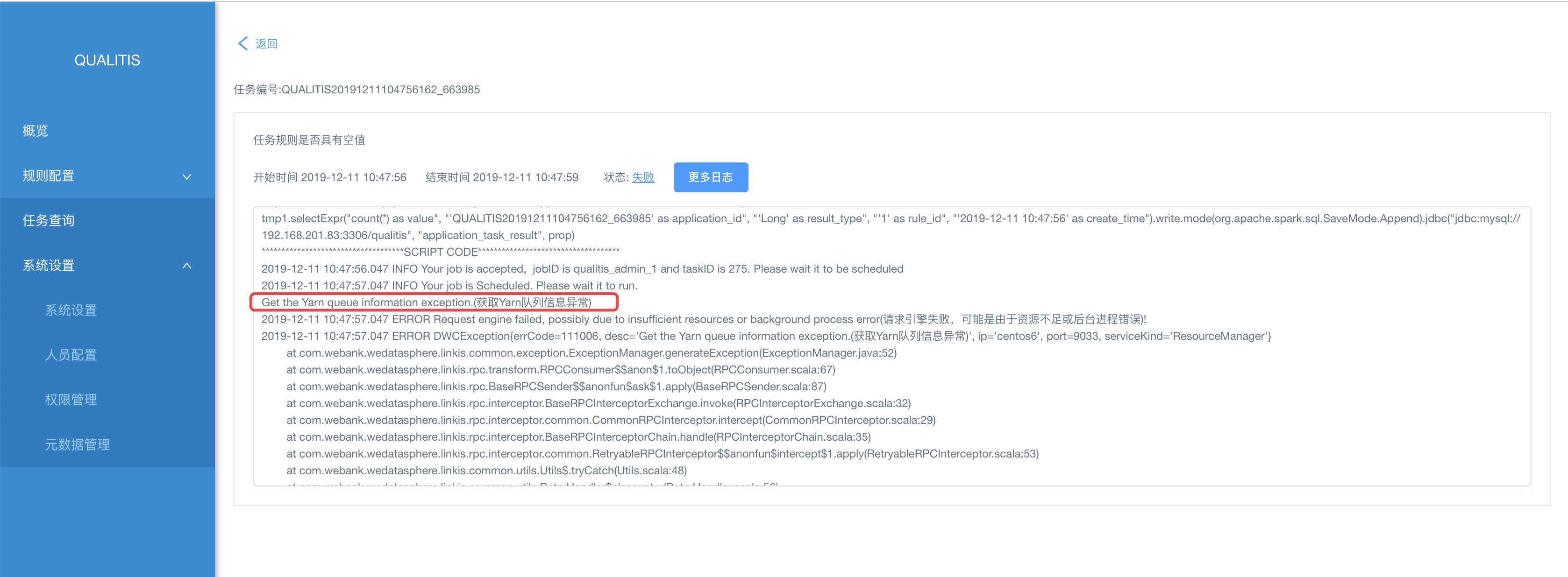

| Network normal, linkis, DSS normal |

Task query |

View failed task status details |

|

V1.0.3 |

Functional test |

Manual test |

Abnormal (fetching the log shows a red error) |

Use the hdfs user |

|

| Network normal, linkis, DSS normal |

System settings |

Add / Edit / Delete Cluster Configuration |

|

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Staffing |

Add/Edit |

|

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Staffing |

Delete |

|

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

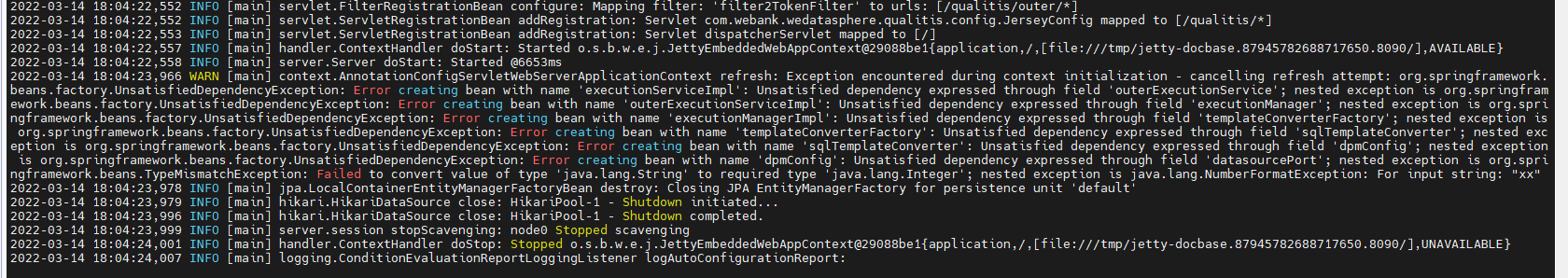

| Network normal, linkis, DSS normal |

Staffing |

New Agent User -> New |

|

V1.0.3 |

Functional test |

Manual test |

Abnormal (red error shown when a string is entered) |

(Exception caused by an input format problem) |

|

| Network normal, linkis, DSS normal |

Permission settings |

Add Special Permission |

|

V1.0.3 |

Functional test |

Manual test |

Abnormal (red error shown when a string is entered) |

(Exception caused by an input format problem) |

|

| Network normal, linkis, DSS normal |

Permission settings |

New Role Permission Management |

|

V1.0.3 |

Functional test |

Manual test |

Abnormal (red error shown when a string is entered) |

(Exception caused by an input format problem) |

|

| Network normal, linkis, DSS normal |

Staffing |

Add user role management |

|

V1.0.3 |

Functional test |

Manual test |

Abnormal (red error shown when a string is entered) |

(Exception caused by an input format problem) |

|

| Network normal, linkis, DSS normal |

Engine configuration |

Modify the engine configuration |

Modification succeeded |

V1.0.3 |

|

|

Normal |

|

|

| Network normal, linkis, DSS normal |

Add an indicator |

Fill in indicator data and add indicator |

|

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Indicator query |

Query by indicator name / indicator classification / whether the indicator is available / English code / subsystem |

|

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Indicator import/export function |

Click Indicator Import / Export |

Indicators are imported and exported |

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

View an indicator |

Click to view indicator details |

Indicator details are displayed |

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Edit the indicator |

Click Edit Indicator |

The indicator is modified and saved successfully |

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Delete an indicator |

Click Delete Indicator |

Indicator deleted successfully |

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Associated indicators |

Click Associate Indicators |

|

V1.0.3 |

Functional test |

Manual test |

To be tested |

|

|

| Network normal, linkis, DSS normal |

Historical value |

Click the historical value |

|

V1.0.3 |

Functional test |

Manual test |

To be tested |

|

|

| Network normal, linkis, DSS normal |

The management drop-down box in the indicator list displays abnormally |

|

|

V1.0.3 |

Functional test |

Manual test |

Abnormal |

Front-end joint debugging |

|

| Network normal, linkis, DSS normal |

Add an ordinary project |

Project is added normally |

Successfully added |

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Delete an ordinary project |

Project is deleted normally |

Deleted successfully |

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Project Import/Export |

Import and export normal |

Import and export succeeded |

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Project execution |

Click Task Execution |

The task was executed successfully |

V1.0.3 |

Functional test |

Manual test |

Task execution failed (the task timed out and was killed) |

|

|

| Network normal, linkis, DSS normal |

Project label function |

Add a task label |

The task is labeled |

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Project editing function |

|

|

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Edit History / Execution History |

|

|

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Project permission management |

Grant the hdfs user view permission |

The user can only view the project and cannot perform other operations |

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Project permission management |

Click to display Permission Management |

Permission management is displayed |

V1.0.3 |

Functional test |

Manual test |

Abnormal (the permission management list displays incorrectly) |

Front-end joint debugging |

|

| Network normal, linkis, DSS normal |

Add Verification Rule-Single Table Verification |

Single table verification rule added successfully |

|

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Add Verification Rule-Cross-table Comparison |

Cross-table comparison check rule added successfully |

|

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Add Verification Rule-File Verification |

File validation rule added successfully |

|

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Add Verification Rule-Cross-database Comparison |

Failed to add cross-database comparison verification rule |

More than one database is required, but only one is available |

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Add Verification Rule-Single Indicator Verification |

Single-indicator verification rule is added successfully |

|

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Add Verification Rule-Multiple Indicator Verification |

Multi-indicator verification rule is added successfully |

|

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Rule execution |

Function is normal |

|

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Import and export rules |

Successfully imported and exported |

|

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Rule details |

View rule details succeeded |

|

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Rule editing |

Rule editing function is normal |

|

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Rule Rename |

Rule Rename OK |

|

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Rule deletion |

Rule deletion is normal |

|

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Number of Checks Passed/Failed/Failed Today |

Retrieved normally |

Displays the number of passed/failed/failed verification |

V1.0.3 |

Functional test |

Manual test |

Normal |

|

|

| Network normal, linkis, DSS normal |

Get Execution Task Log |

Error when getting the log of a "failed task" |

Displays the number of passed/failed/failed verification |

V1.0.3 |

Functional test |

Manual test |

Log retrieval exception |

Use the hdfs user |

|

| Network normal, linkis, DSS normal |

Logout exception |

The user toolbar in the upper right corner logs the user out repeatedly. |

|

V1.0.3 |

Functional test |

Manual test |

User Toolbar Exception |

Front-end joint debugging |

|

| Network normal, linkis, DSS normal |

Login page exception |

The user login page appears after an unexplained redirect |

|

V1.0.3 |

Functional test |

Manual test |

Login home page exception |

Front-end joint debugging |

|

| Network normal, linkis, DSS normal |

DSS-> Project Management-> Workflow-> Data Quality |

Data quality node: when getting the redirect address, projectID is null |

|

V1.0.3 |

Functional test |

Manual test |

Abnormal (red error shown) |

The hdfs user is not registered with LDAP |

|