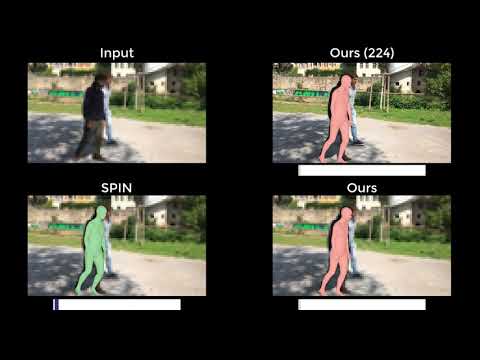

Implementation of "3D Human Pose, Shape and Texture from Low-Resolution Images and Videos", TPAMI 2021

Conference version: "3D Human Shape and Pose from a Single Low-Resolution Image with Self-Supervised Learning", ECCV 2020

- RSC-Net:
  - Resolution-aware structure
  - Self-supervised learning
  - Contrastive learning
- Temporal post-processing for video input
- TexGlo: Global module for 3D texture reconstruction

Make sure gcc 5.x is installed before installing the packages, then run:

```bash
bash install_environment.sh
```
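Before running the install script, you may want to confirm which gcc is on your `PATH`. The sketch below is a hypothetical helper (not part of this repository); it only assumes that `gcc -dumpversion` prints a version string such as `5.4.0`.

```python
import re
import shutil
import subprocess


def gcc_major_version(version_string: str) -> int:
    """Parse the major version from a string such as '5.4.0' or '5'."""
    match = re.match(r"\s*(\d+)", version_string)
    if match is None:
        raise ValueError(f"unrecognized gcc version: {version_string!r}")
    return int(match.group(1))


def check_gcc(required_major: int = 5) -> bool:
    """Return True if the gcc found on PATH reports the required major version."""
    if shutil.which("gcc") is None:
        return False
    # `gcc -dumpversion` prints e.g. '5.4.0' (some builds print only '5').
    result = subprocess.run(
        ["gcc", "-dumpversion"], capture_output=True, text=True, check=True
    )
    return gcc_major_version(result.stdout) == required_major
```

Calling `check_gcc()` returns `False` both when gcc is missing and when a different major version is installed; in either case, switch to gcc 5.x before running `install_environment.sh`.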

If you are running the code on a headless machine (no display), install OSMesa and a compatible PyOpenGL build, then uncomment the second line of `utils/renderer.py`.
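Pyrender selects its OpenGL backend from the `PYOPENGL_PLATFORM` environment variable, which must be set before `pyrender` (or `OpenGL`) is imported. We assume, without having checked, that the commented-out second line of `utils/renderer.py` does something equivalent. The helper below, `configure_headless_rendering`, is a hypothetical sketch; its check on `DISPLAY` is a heuristic for Linux servers.

```python
import os


def configure_headless_rendering(force: bool = False) -> str:
    """Select the OSMesa backend when no display is available (or when forced).

    Must run before pyrender / OpenGL is imported, because PyOpenGL reads
    PYOPENGL_PLATFORM at import time.
    """
    if force or not os.environ.get("DISPLAY"):
        os.environ["PYOPENGL_PLATFORM"] = "osmesa"
    return os.environ.get("PYOPENGL_PLATFORM", "")


configure_headless_rendering()
# import pyrender  # import only after the backend has been chosen
```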

All paths are configured in `config.py`.

- Download the pretrained RSC-Net model and put it in `./pretrained`.

- Run:

  ```bash
  python demo.py --checkpoint=./pretrained/RSC-Net.pt --img_path=./examples/im1.png
  ```

- Note: if you have trouble using Pyrender, try `demo_nr.py` instead:

  ```bash
  python demo_nr.py --checkpoint=./pretrained/RSC-Net.pt --img_path=./examples/im1.png
  ```

  If neural-renderer raises errors, reinstall the package from source.
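To run the demo on every image in `./examples` rather than one at a time, a small wrapper can reuse the demo commands above. This is a sketch under assumptions: `demo_commands` and `run_examples` are hypothetical helpers, it uses only the `--checkpoint` and `--img_path` flags shown above, and it expects to be launched from the repository root.

```python
import subprocess
import sys
from pathlib import Path

CHECKPOINT = "./pretrained/RSC-Net.pt"


def demo_commands(image_paths, script="demo.py", checkpoint=CHECKPOINT):
    """Build one demo command per image, using only the documented flags."""
    return [
        [sys.executable, script, f"--checkpoint={checkpoint}", f"--img_path={path}"]
        for path in image_paths
    ]


def run_examples(example_dir="./examples", use_demo_nr=False):
    """Run the demo on every PNG in example_dir and return the exit codes."""
    script = "demo_nr.py" if use_demo_nr else "demo.py"
    images = sorted(Path(example_dir).glob("*.png"))
    return [subprocess.run(cmd).returncode for cmd in demo_commands(images, script=script)]
```

Passing `use_demo_nr=True` switches every run to `demo_nr.py`, which mirrors the Pyrender fallback described above.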

To evaluate the pretrained model, run:

```bash
python eval.py --checkpoint=./pretrained/RSC-Net.pt
```

To train RSC-Net, run:

```bash
python train.py --name=RSC-Net
```

If you find this work helpful in your research, please cite our papers:

```bibtex
@article{xu20213d,
  title={3D Human Pose, Shape and Texture from Low-Resolution Images and Videos},
  author={Xu, Xiangyu and Chen, Hao and Moreno-Noguer, Francesc and Jeni, Laszlo A and De la Torre, Fernando},
  journal={TPAMI},
  year={2021}
}

@inproceedings{xu20203d,
  title={3D Human Shape and Pose from a Single Low-Resolution Image with Self-Supervised Learning},
  author={Xu, Xiangyu and Chen, Hao and Moreno-Noguer, Francesc and Jeni, Laszlo A and De la Torre, Fernando},
  booktitle={ECCV},
  year={2020}
}
```