by Yi Wang, Xin Tao, Xiaoyong Shen, Jiaya Jia.

This repository provides the TensorFlow implementation of the method in the CVPR 2019 paper 'Wide-Context Semantic Image Extrapolation'. The method can extend semantically sensitive objects (faces, bodies) and scenes beyond the image boundary.

- Small-to-large scheme

- Context normalization

- Relative spatial variant loss
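Context normalization can be sketched as follows, assuming it works like an AdaIN-style statistics transfer: features in the region to be extrapolated are re-standardized to the per-channel mean and standard deviation of the known region, then blended with the original features by a weight `rho`. The function name `context_normalization`, the mask convention (1 = unknown), and the fixed `rho` argument are assumptions for illustration; this is not the repository's TensorFlow code.

```python
import numpy as np


def context_normalization(feat, mask, rho=0.5, eps=1e-5):
    """Hypothetical sketch of context normalization on a single feature map.

    feat: (H, W, C) float array of features.
    mask: (H, W) array, 1 where content must be extrapolated, 0 where it is known.
    rho:  blending weight between transferred and original unknown features.
    """
    unknown = mask[..., None].astype(feat.dtype)
    known = 1.0 - unknown

    def masked_stats(weight):
        # per-channel mean and standard deviation over the selected pixels
        count = weight.sum(axis=(0, 1)) + eps
        mean = (feat * weight).sum(axis=(0, 1)) / count
        var = (((feat - mean) ** 2) * weight).sum(axis=(0, 1)) / count
        return mean, np.sqrt(var + eps)

    mu_k, sd_k = masked_stats(known)
    mu_u, sd_u = masked_stats(unknown)
    # re-standardize unknown-region features to the known-region statistics
    transferred = (feat - mu_u) / sd_u * sd_k + mu_k
    blended = rho * transferred + (1.0 - rho) * feat
    # known pixels pass through unchanged; only the unknown region is modified
    return feat * known + blended * unknown
```

With `rho=1.0` the unknown region takes on the known region's per-channel statistics exactly; smaller values keep more of the original unknown-region features.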

- Python 3.5 (or higher)

- TensorFlow 1.6 (or later 1.x versions; 2.x is not supported) with an NVIDIA GPU or CPU

- OpenCV

- numpy

- scipy

- easydict
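The TensorFlow constraint above (1.6 or later, but not 2.x) can be checked before running the scripts. `is_supported_tf_version` is a hypothetical helper, not part of the repository; it only parses the leading major and minor numbers of a version string.

```python
import re


def is_supported_tf_version(version):
    """Return True for TensorFlow 1.x releases from 1.6 on; 2.x and later are excluded."""
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        return False  # unrecognized version string
    major, minor = int(match.group(1)), int(match.group(2))
    return major == 1 and minor >= 6
```

In your environment, `import tensorflow as tf` followed by `assert is_supported_tf_version(tf.__version__)` would flag an incompatible install early.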

git clone https://github.com/shepnerd/outpainting_srn.git

cd outpainting_srn/

Download the pretrained models through the following links (CelebA-HQ_256, Paris StreetView, Cityscapes), then unzip them and put them into checkpoints/.
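After unzipping, a quick check can confirm that each model folder looks like a TensorFlow 1.x checkpoint directory. This sketch assumes the archives contain the standard `checkpoint` state file that `tf.train.Saver` writes next to the weight files; `find_checkpoint_dirs` is a hypothetical helper.

```python
from pathlib import Path


def find_checkpoint_dirs(root="checkpoints"):
    """List directories under root that contain a TensorFlow 'checkpoint' state file."""
    root = Path(root)
    if not root.is_dir():
        return []
    # each match is <model_dir>/checkpoint; report the model directory itself
    return sorted(str(p.parent) for p in root.rglob("checkpoint") if p.is_file())
```

The directory you later pass to --load_model_dir (for example ./checkpoints/celebahq-srn-subpixel) should appear in the returned list.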

To test images in a folder, we call

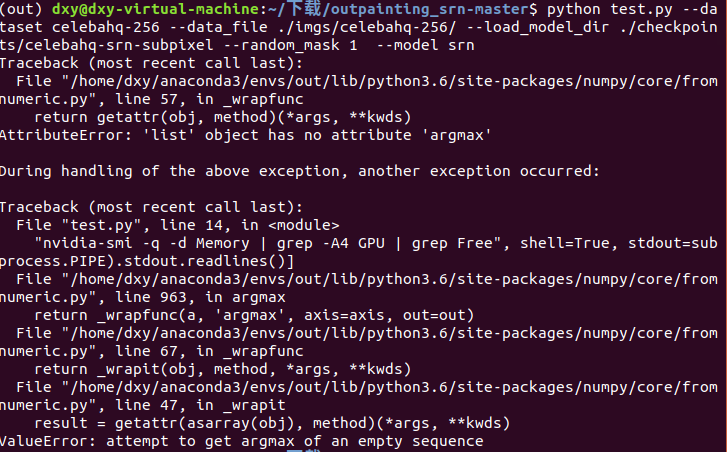

test.pywith the opinion--dataset_pathand--load_model_dir. We give some CelebA-HQ_256 examples in theimgs/celebahq-256/. For example:python test.py --dataset celebahq-256 --data_file ./imgs/celebahq-256/ --load_model_dir ./checkpoints/celebahq-srn-subpixel --random_mask 1

Alternatively, write or modify test.sh according to your own needs, then execute the script (on Linux) as:

sh ./test.sh

The visual results will be saved in the folder ./test_results/.
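If you prefer driving several test runs from Python instead of editing test.sh, the example test.py invocation can be assembled as an argument list. This is a hypothetical helper that uses only the flags appearing in that example command, and it assumes it is run from the repository root where test.py lives.

```python
import subprocess
import sys


def build_test_command(dataset, data_file, load_model_dir, random_mask=1):
    """Return the test.py command as a list suitable for subprocess."""
    return [
        sys.executable, "test.py",
        "--dataset", dataset,
        "--data_file", data_file,
        "--load_model_dir", load_model_dir,
        "--random_mask", str(random_mask),
    ]


def run_tests(jobs):
    """Run test.py once per job dict; stop at the first failing run."""
    for job in jobs:
        subprocess.run(build_test_command(**job), check=True)
```

For example, `run_tests([{"dataset": "celebahq-256", "data_file": "./imgs/celebahq-256/", "load_model_dir": "./checkpoints/celebahq-srn-subpixel"}])` reproduces the single command above. Passing a list avoids shell quoting issues with paths that contain spaces.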


First, train the model with only the reconstruction loss (relative spatial variant loss) by setting the option --pretrain_network 1. After this first stage converges, fine-tune the model with all losses (--pretrain_network 0 and --load_model_dir [Your model path]). To pretrain the network:

python train.py --dataset [DATASET_NAME] --data_file [DATASET_TRAININGFILE] --gpu_ids [NUM] --pretrain_network 1 --batch_size 16

where [DATASET_TRAININGFILE] is a file storing the full paths of the training images. A simple example:

python train.py --dataset celebahq-256 --data_file ../celebahq-256_train.txt --gpu_ids 0 --img_shapes 256,256 --pretrain_network 1 --batch_size 8
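The training list file can be produced with a short script. This sketch assumes the expected format is plain text with one absolute image path per line and that images use common extensions; `write_file_list` and the extension set are hypothetical, so check the repository's data loader before relying on it.

```python
from pathlib import Path

IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".bmp"}


def write_file_list(image_dir, out_file, extensions=IMAGE_EXTENSIONS):
    """Write the absolute path of every image under image_dir to out_file, one per line.

    Returns the number of paths written.
    """
    paths = sorted(
        str(p.resolve())
        for p in Path(image_dir).rglob("*")
        if p.is_file() and p.suffix.lower() in extensions
    )
    with open(out_file, "w") as f:
        for path in paths:
            f.write(path + "\n")
    return len(paths)
```

For example, `write_file_list("/path/to/celebahq-256/train", "../celebahq-256_train.txt")` would create the file used by the command above (the image folder path is a placeholder). Sorting keeps the list reproducible across runs.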


Then fine-tune the network:

python train.py --dataset [DATASET_NAME] --data_file [DATASET_TRAININGFILE] --gpu_ids [NUM] --pretrain_network 0 --load_model_dir [PRETRAINED_MODEL_PATH] --batch_size 8
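The first training stage optimizes only the relative spatial variant reconstruction loss. The sketch below shows one way to build such spatially varying weights, assuming a scheme similar to the confidence-driven weighting of the multi-column inpainting work cited below: the known-region mask is repeatedly Gaussian-blurred into the unknown region, so pixels near known content receive larger weights than pixels far from it. The helper names, `sigma`, `iterations`, and the final max-normalization are hypothetical choices; the repository's actual loss may differ.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def spatial_variant_weights(mask, iterations=5, sigma=2.0):
    """Hypothetical per-pixel weights for a spatially variant reconstruction loss.

    mask: (H, W) array, 1 for pixels to be generated, 0 for known pixels.
    Returns weights in [0, 1] that are zero on known pixels and decay with
    distance from the known region.
    """
    mask = mask.astype(np.float64)
    weights = np.zeros_like(mask)
    for _ in range(iterations):
        # known pixels count as fully confident; generated pixels carry the
        # confidence propagated so far
        confidence = (1.0 - mask) + weights
        weights = gaussian_filter(confidence, sigma=sigma, mode="nearest") * mask
    # relative scaling (assumption): the largest weight becomes 1
    return weights / max(weights.max(), 1e-8)


def weighted_l1(pred, target, weights):
    """Mean absolute error over the generated region, weighted per pixel."""
    diff = np.abs(pred - target)
    w = np.broadcast_to(weights[..., None] if diff.ndim == 3 else weights, diff.shape)
    return float((w * diff).sum() / (w.sum() + 1e-8))
```

Down-weighting far-away pixels reflects that content far from the known region is the least constrained, so a plain L1 loss there would push the generator toward blurry averages.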

The datasets used (CelebA-HQ, CUB200, Dog, DeepFashion, Paris StreetView, Cityscapes, and Places2) and their train/test splits are described in the paper.

- Other pretrained models

- ...

- Training and evaluation on large-scale datasets (with a variety of categories) can be unstable due to possible mode collapse in adversarial training.

- The ID-MRF used here is a simplified version based on the contextual loss. The step of excluding s is omitted for computational efficiency.
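For reference, the contextual loss that this simplified ID-MRF builds on can be sketched in NumPy, following its common formulation: cosine distances between feature vectors, normalized by each generated vector's nearest-target distance, turned into row-normalized similarities, and scored by the best match for each target. Inputs are assumed to be `(N, C)` arrays of feature vectors (for example flattened VGG activations); the function name and the bandwidth default `h` are illustrative, and this is not the repository's implementation.

```python
import numpy as np


def contextual_loss(x, y, h=0.5, eps=1e-5):
    """Contextual loss between two sets of feature vectors.

    x: (N, C) generated features; y: (M, C) target features.
    """
    mu = y.mean(axis=0, keepdims=True)  # center both sets on the target mean
    xc, yc = x - mu, y - mu
    xn = xc / np.maximum(np.linalg.norm(xc, axis=1, keepdims=True), 1e-12)
    yn = yc / np.maximum(np.linalg.norm(yc, axis=1, keepdims=True), 1e-12)
    dist = np.clip(1.0 - xn @ yn.T, 0.0, None)  # cosine distance, shape (N, M)
    # relative distance: divide by the distance to each x_i's nearest target
    rel = dist / (dist.min(axis=1, keepdims=True) + eps)
    sim = np.exp((1.0 - rel) / h)
    cx = sim / sim.sum(axis=1, keepdims=True)  # normalize similarities per x_i
    # for every target y_j, take its best-matching x_i, then average over targets
    score = cx.max(axis=0).mean()
    return float(-np.log(score + eps))
```

Identical feature sets give a loss near zero, while unrelated sets give a larger value. The full ID-MRF additionally normalizes each similarity by the best competing target with s excluded, which is the step this repository omits.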

If our method is useful for your research, please consider citing:

@inproceedings{wang2019srn,
  title={Wide-Context Semantic Image Extrapolation},
  author={Wang, Yi and Tao, Xin and Shen, Xiaoyong and Jia, Jiaya},
  booktitle={IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  pages={1399--1408},
  year={2019}
}

@inproceedings{wang2018image,
  title={Image Inpainting via Generative Multi-column Convolutional Neural Networks},
  author={Wang, Yi and Tao, Xin and Qi, Xiaojuan and Shen, Xiaoyong and Jia, Jiaya},
  booktitle={Advances in Neural Information Processing Systems},
  pages={331--340},
  year={2018}
}

Our code is built upon Image Inpainting via Generative Multi-column Convolutional Neural Networks and pix2pixHD.

Please send email to [email protected].