The latest version of the project is available at mdsinabox.com. The website embraces the notion of "Serverless BI" - the pages are built asynchronously with open source software on commodity hardware and then pushed to a static site. The github action that automatically deploys the site upon PR can be found here.

This project serves as end to end example of running the "Modern Data Stack" on a single node. The components are designed to be "hot swappable", using makefile to create clearly defined interfaces between discrete components in the stack. It runs in many enviroments with many visualization options. In addition, the data transformation documentation is self hosted on github pages.

It runs practically anywhere, and has been tested in the environments below.

| Operating System | Local | Docker | Devcontainer | Docker in Devcontainer |

|---|---|---|---|---|

| Windows (w/WSL) | n/a | ✅ | ✅ | ✅ |

| Mac (Ventura) | ✅ | ✅ | ✅ | ✅ |

| Linux (Ubuntu 20.04) | ✅ | ✅ | ✅ | ✅ |

| 1 | 2 | 3 |

|---|---|---|

|

|

|

It can also be explored live at mdsinabox.com.

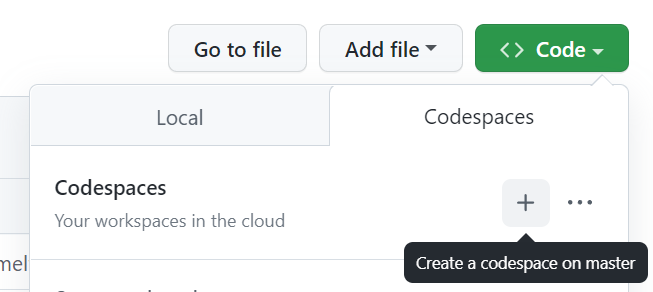

Want to try MDS-in-a-box right away? Create a Codespace:

You can run in the Codespace by running the following command:

make build run

You will need to wait for the pipeline to run and Evidence configuration to complete. The 4-core codespace performs signifcantly better in testing, and is recommended for a better experience.

Once the build completes, you can access the Evidence dashboard by clicking on the Open in Browser button on the Ports tab:

and log in with the username and password: "admin" and "password".

and log in with the username and password: "admin" and "password".

Codespaces also supports "Docker-in-docker", so you can run docker inside the codespace with the following command:

make docker-build docker-run-evidence

- Create your WSL environment. Open a PowerShell terminal running as an administrator and execute:

wsl --install

- If this was the first time WSL has been installed, restart your machine.

- Open Ubuntu in your terminal and update your packages.

sudo apt-get update

- Install python3.

sudo apt-get install python3.9 python3-pip python3.9-venv

- clone the this repo.

mkdir my_projects

cd my_projects

git clone https://github.com/matsonj/nba-monte-carlo.git

# Go one folder level down into the folder that git just created

cd nba-monte-carlo

- build your project

make build run

Make sure to open up evidence when prompted (default location is 127.0.0.1:8088). The username and password is "admin" and "password".

You can build a docker container by running:

make docker-build

Then run the container using

make docker-run-evidence

These are both aliases defined in the Makefile:

docker-build:

docker build -t mdsbox .

docker-run-evidence:

docker run \

--publish 8088:8088 \

--env MDS_SCENARIOS=10000 \

--env MDS_INCLUDE_ACTUALS=true \

--env MDS_LATEST_RATINGS=true \

--env MDS_ENABLE_EXPORT=true \

--env ENVIRONMENT=docker \

mdsbox make run serve

Using DuckDB keeps install and config very simple - its a single command and runs everywhere. It also frankly covers for the sin of building a monte carlo simulation in SQL - it would be quite slow without the kind of computing that DuckDB can do.

Postgres was also considered in this project, but it is not a great pattern to run postgres on the same node as the rest of the data stack.

This project leverages parquet in addition to the DuckDB database for file storage. This is experimental and implementation will evolve over time - especially as both the DuckDB format continues to evolve and Iceberg/Delta support is added to DuckDB.

dbt-duckdb supports external tables, which are parquet files exported to the data_catalog folder. This allows easier integration with Rill, for example, which can read the parquet files and transform them directly with its own DuckDB implementation.

- clean up env vars + implement incremental builds

- submit your PR or open an issue!

The data contained within this project comes from pro football reference, sports reference (cfb), basketball reference, and draft kings.