
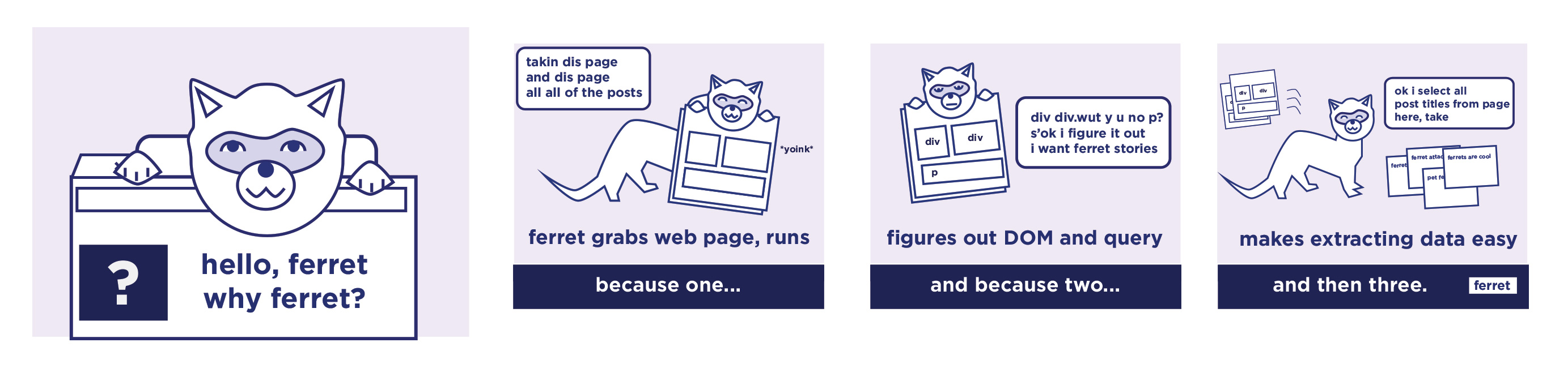

ferret is a web scraping system. It aims to simplify data extraction from the web for UI testing, machine learning, analytics and more.

ferret allows users to focus on the data. It abstracts away the technical details and complexity of underlying technologies using its own declarative language.

It is extremely portable, extensible, and fast.

Read the introductory blog post about Ferret.

Features:

- Declarative language

- Support for both static and dynamic web pages

- Embeddable

- Extensible

Documentation is available on our website.

Bindings:

- Ferret for Python: Pyfer