Ever wanted to train a NeRF model of a fox in under 5 seconds? Or fly around a scene captured from photos of a factory robot? Of course you have!

Here you will find an implementation of four neural graphics primitives: neural radiance fields (NeRF), signed distance functions (SDFs), neural images, and neural volumes. In each case, we train and render an MLP with multiresolution hash input encoding using the tiny-cuda-nn framework.

Instant Neural Graphics Primitives with a Multiresolution Hash Encoding

Thomas Müller, Alex Evans, Christoph Schied, Alexander Keller

ACM Transactions on Graphics (SIGGRAPH), July 2022

Project page / Paper / Video / Presentation / Real-Time Live / BibTeX

For business inquiries, please submit the NVIDIA research licensing form.

If you have Windows, download one of the following releases corresponding to your graphics card and extract it. Then, start instant-ngp.exe.

- RTX 3000 & 4000 series, RTX A4000–A6000, and other Ampere & Ada cards

- RTX 2000 series, Titan RTX, Quadro RTX 4000–8000, and other Turing cards

- GTX 1000 series, Titan Xp, Quadro P1000–P6000, and other Pascal cards

Keep reading for a guided tour of the application or, if you are interested in creating your own NeRF, watch the video tutorial or read the written instructions.

If you use Linux, or want the developer Python bindings, or if your GPU is not listed above (e.g. Hopper, Volta, or Maxwell generations), you need to build instant-ngp yourself.

instant-ngp comes with an interactive GUI that includes many features:

- comprehensive controls for interactively exploring neural graphics primitives,

- VR mode for viewing neural graphics primitives through a virtual-reality headset,

- saving and loading "snapshots" so you can share your graphics primitives on the internet,

- a camera path editor to create videos,

- NeRF->Mesh and SDF->Mesh conversion,

- camera pose and lens optimization,

- and many more.

Simply start instant-ngp and drag the data/nerf/fox folder into the window. Or, alternatively, use the command line:

instant-ngp$ ./instant-ngp data/nerf/fox

You can use any NeRF-compatible dataset, e.g. from original NeRF, the SILVR dataset, or the DroneDeploy dataset. To create your own NeRF, watch the video tutorial or read the written instructions.

Drag data/sdf/armadillo.obj into the window or use the command:

instant-ngp$ ./instant-ngp data/sdf/armadillo.obj

Drag data/image/albert.exr into the window or use the command:

instant-ngp$ ./instant-ngp data/image/albert.exr

To reproduce the gigapixel results, download, for example, the Tokyo image and convert it to .bin using the scripts/convert_image.py script. This custom format improves compatibility and loading speed when resolution is high. Now you can run:

instant-ngp$ ./instant-ngp data/image/tokyo.bin

Download the nanovdb volume for the Disney cloud, which is derived from here (CC BY-SA 3.0). Then drag wdas_cloud_quarter.nvdb into the window or use the command:

instant-ngp$ ./instant-ngp wdas_cloud_quarter.nvdb

Here are the main keyboard controls for the instant-ngp application.

| Key | Meaning |

|---|---|

| WASD | Forward / pan left / backward / pan right. |

| Spacebar / C | Move up / down. |

| = or + / - or _ | Increase / decrease camera velocity (first person mode) or zoom in / out (third person mode). |

| E / Shift+E | Increase / decrease exposure. |

| Tab | Toggle menu visibility. |

| T | Toggle training. After around two minutes, training tends to settle down, so it can be toggled off. |

| { } | Go to the first/last training image camera view. |

| [ ] | Go to the previous/next training image camera view. |

| R | Reload network from file. |

| Shift+R | Reset camera. |

| O | Toggle visualization of the accumulated error map. |

| G | Toggle visualization of the ground truth. |

| M | Toggle multi-view visualization of layers of the neural model. See the paper's video for a little more explanation. |

| , / . | Shows the previous / next visualized layer; hit M to escape. |

| 1-8 | Switches among various render modes, with 2 being the standard one. You can see the list of render mode names in the control interface. |

There are many controls in the instant-ngp GUI. First, note that this GUI can be moved and resized, as can the "Camera path" GUI (which first must be expanded to be used).

Recommended user controls in instant-ngp are:

- Snapshot: use "Save" to save the trained NeRF, "Load" to reload.

- Rendering -> DLSS: toggling this on and setting "DLSS sharpening" to 1.0 can often improve rendering quality.

- Rendering -> Crop size: trim back the surrounding environment to focus on the model. "Crop aabb" lets you move the center of the volume of interest and fine tune. See more about this feature in our NeRF training & dataset tips.

The "Camera path" GUI lets you create a camera path for rendering a video.

The button "Add from cam" inserts keyframes from the current perspective.

Then, you can render a video .mp4 of your camera path or export the keyframes to a .json file.

There is a bit more information about the GUI in this post and in this video guide to creating your own video.

To view the neural graphics primitive in VR, first start your VR runtime. This will most likely be either

- OculusVR if you have an Oculus Rift or Meta Quest (with link cable) headset, or

- SteamVR if you have another headset.

- Any OpenXR-compatible runtime will work.

Then, press the Connect to VR/AR headset button in the instant-ngp GUI and put on your headset. Before entering VR, we strongly recommend that you first finish training (press "Stop training") or load a pre-trained snapshot for maximum performance.

In VR, you have the following controls.

| Control | Meaning |

|---|---|

| Left stick / trackpad | Move |

| Right stick / trackpad | Turn camera |

| Press stick / trackpad | Erase NeRF around the hand |

| Grab (one-handed) | Drag neural graphics primitive |

| Grab (two-handed) | Rotate and zoom (like pinch-to-zoom on a smartphone) |

- An NVIDIA GPU; tensor cores increase performance when available. All shown results come from an RTX 3090.

- A C++14 capable compiler. The following choices are recommended and have been tested:

  - Windows: Visual Studio 2019 or 2022

  - Linux: GCC/G++ 8 or higher

- A recent version of CUDA. The following choices are recommended and have been tested:

  - Windows: CUDA 11.5 or higher

  - Linux: CUDA 10.2 or higher

- CMake v3.21 or higher.

- (optional) Python 3.7 or higher for interactive bindings. Also, run pip install -r requirements.txt.

- (optional) OptiX 7.6 or higher for faster mesh SDF training.

- (optional) Vulkan SDK for DLSS support.

If you are using a Debian-based Linux distribution, install the following packages:

sudo apt-get install build-essential git python3-dev python3-pip libopenexr-dev libxi-dev \
    libglfw3-dev libglew-dev libomp-dev libxinerama-dev libxcursor-dev

Alternatively, if you are using Arch or Arch derivatives, install the following packages:

sudo pacman -S cuda base-devel cmake openexr libxi glfw openmp libxinerama libxcursor

We also recommend installing CUDA and OptiX in /usr/local/ and adding the CUDA installation to your PATH.

For example, if you have CUDA 11.4, add the following to your ~/.bashrc

export PATH="/usr/local/cuda-11.4/bin:$PATH"

export LD_LIBRARY_PATH="/usr/local/cuda-11.4/lib64:$LD_LIBRARY_PATH"

Begin by cloning this repository and all its submodules using the following command:

$ git clone --recursive https://github.com/nvlabs/instant-ngp

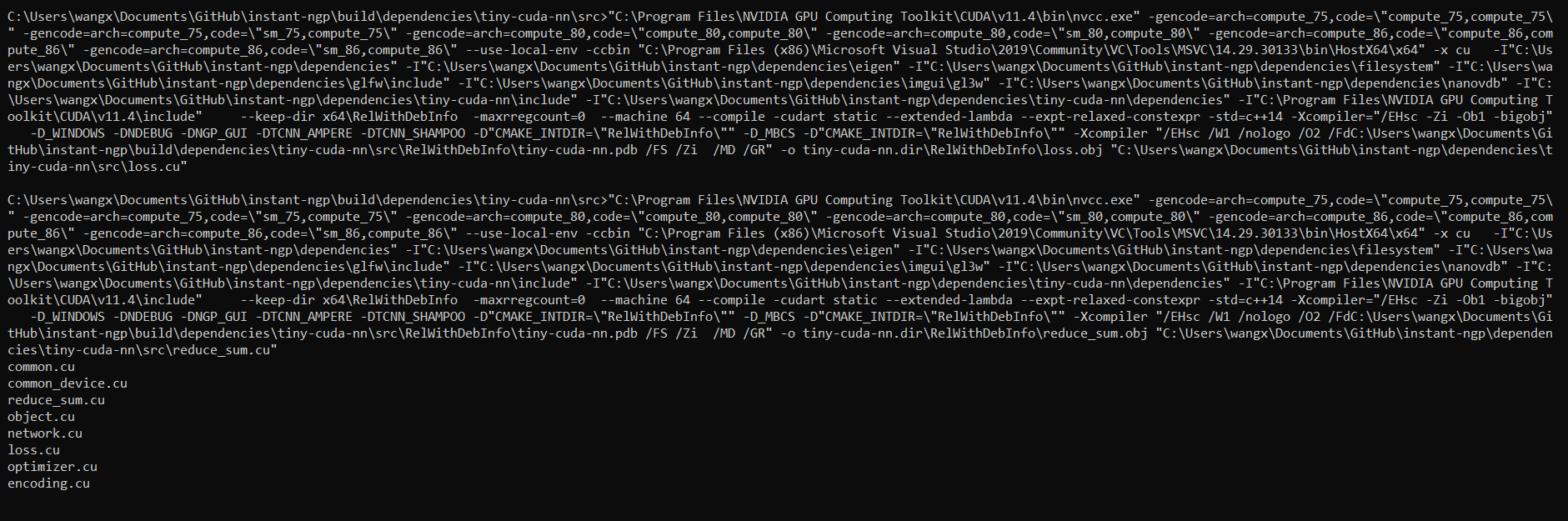

$ cd instant-ngp

Then, use CMake to build the project (on Windows, this must be done in a developer command prompt):

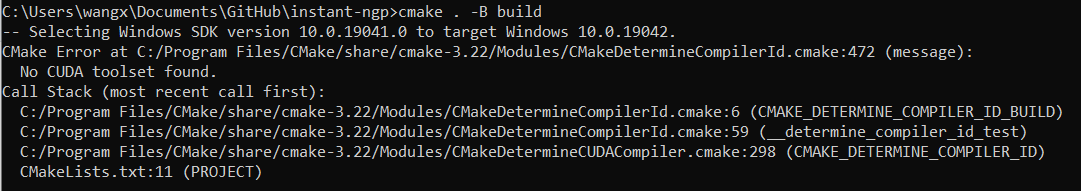

instant-ngp$ cmake . -B build -DCMAKE_BUILD_TYPE=RelWithDebInfo

instant-ngp$ cmake --build build --config RelWithDebInfo -j

If compilation fails inexplicably or takes longer than an hour, you might be running out of memory. Try running the above command without -j in that case.

If this does not help, please consult this list of possible fixes before opening an issue.

If the build succeeds, you can now run the code via the ./instant-ngp executable or the scripts/run.py script described below.

If automatic GPU architecture detection fails (as can happen if you have multiple GPUs installed), set the TCNN_CUDA_ARCHITECTURES environment variable for the GPU you would like to use. The following table lists the values for common GPUs. If your GPU is not listed, consult this exhaustive list.

| H100 | 40X0 | 30X0 | A100 | 20X0 | TITAN V / V100 | 10X0 / TITAN Xp | 9X0 | K80 |

|---|---|---|---|---|---|---|---|---|

| 90 | 89 | 86 | 80 | 75 | 70 | 61 | 52 | 37 |
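If you script your builds, the table above can be kept as a small lookup. The following is a minimal sketch and not part of instant-ngp: the function name `cuda_architecture` and the dictionary keys are illustrative labels; only the `TCNN_CUDA_ARCHITECTURES` variable name and the numeric values come from the table.

```python
# Values copied from the table above. The keys are illustrative labels only;
# instant-ngp itself does not parse these names.
CUDA_ARCHITECTURES = {
    "H100": 90,
    "40X0": 89,
    "30X0": 86,
    "A100": 80,
    "20X0": 75,
    "TITAN V": 70,
    "V100": 70,
    "10X0": 61,
    "TITAN Xp": 61,
    "9X0": 52,
    "K80": 37,
}


def cuda_architecture(gpu):
    """Look up the TCNN_CUDA_ARCHITECTURES value for a GPU label from the table."""
    try:
        return CUDA_ARCHITECTURES[gpu]
    except KeyError:
        raise KeyError(f"{gpu!r} is not in the table; consult the exhaustive list")


if __name__ == "__main__":
    # Prints a line that can be pasted in front of the cmake commands.
    print(f"TCNN_CUDA_ARCHITECTURES={cuda_architecture('30X0')}")
```

The value must be set in the environment before CMake configures the project, so delete the build folder if you change it.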

After you have built instant-ngp, you can use its Python bindings to conduct controlled experiments in an automated fashion.

All features from the interactive GUI (and more!) have Python bindings that can be easily instrumented.

For an example of how the ./instant-ngp application can be implemented and extended from within Python, see ./scripts/run.py, which supports a superset of the command line arguments that ./instant-ngp does.

If you would rather build new models from the hash encoding and fast neural networks, consider tiny-cuda-nn's PyTorch extension.

Happy hacking!

- Getting started with NVIDIA Instant NeRF blog post

- SIGGRAPH tutorial for advanced NeRF dataset creation.

Q: The NeRF reconstruction of my custom dataset looks bad; what can I do?

A: There could be multiple issues:

- COLMAP might have been unable to reconstruct camera poses.

- There might have been movement or blur during capture. Don't treat capture as an artistic task; treat it as photogrammetry. You want *as little blur as possible* in your dataset (motion, defocus, or otherwise) and all objects must be *static* during the entire capture. Bonus points if you are using a wide-angle lens (iPhone wide angle works well), because it covers more space than narrow lenses.

- The dataset parameters (in particular aabb_scale) might have been tuned suboptimally. We recommend starting with aabb_scale=128 and then increasing or decreasing it by factors of two until you get optimal quality.

- Carefully read our NeRF training & dataset tips.
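The aabb_scale search described above can be sketched as a small sweep. This is an illustration under stated assumptions, not a tool shipped with instant-ngp: it assumes aabb_scale is a top-level key of the dataset's transforms.json, and the helper names `candidate_scales` and `with_aabb_scale` are hypothetical.

```python
import json


def candidate_scales(start=128, steps=2):
    """List aabb_scale values reachable from `start` by halving or doubling."""
    lower = [start // (2 ** i) for i in range(steps, 0, -1) if start // (2 ** i) >= 1]
    upper = [start * (2 ** i) for i in range(1, steps + 1)]
    return lower + [start] + upper


def with_aabb_scale(transforms, scale):
    """Return a copy of a parsed transforms dictionary with aabb_scale replaced."""
    updated = dict(transforms)
    updated["aabb_scale"] = scale
    return updated


if __name__ == "__main__":
    # Stand-in for the parsed contents of a dataset's transforms.json (assumed layout).
    transforms = {"aabb_scale": 128, "frames": []}
    for scale in candidate_scales(start=128, steps=1):
        print(json.dumps(with_aabb_scale(transforms, scale)))
```

Train once per candidate and compare the results visually. Check the NeRF training & dataset tips for the range of values your version accepts before writing any of them back to disk.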

Q: How can I save the trained model and load it again later?

A: Two options:

- Use the GUI's "Snapshot" section.

- Use the Python bindings load_snapshot / save_snapshot (see scripts/run.py for example usage).
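For scripted use, one option is to drive scripts/run.py from another Python program. The sketch below only builds the argument list. The flag names (`--scene`, `--load_snapshot`, `--save_snapshot`, `--n_steps`) are assumptions based on our reading of scripts/run.py, so confirm them with `python scripts/run.py --help`; the snapshot file name is made up for illustration.

```python
import shlex
import sys


def snapshot_command(scene=None, load=None, save=None, n_steps=None):
    """Build an argument list for scripts/run.py (flag names are assumptions)."""
    cmd = [sys.executable, "scripts/run.py"]
    if scene is not None:
        cmd += ["--scene", scene]
    if load is not None:
        cmd += ["--load_snapshot", load]
    if save is not None:
        cmd += ["--save_snapshot", save]
    if n_steps is not None:
        cmd += ["--n_steps", str(n_steps)]
    return cmd


if __name__ == "__main__":
    # Train the fox scene for a fixed number of steps, then save a snapshot.
    # "fox_snapshot.ingp" is a hypothetical file name.
    train = snapshot_command(scene="data/nerf/fox", save="fox_snapshot.ingp", n_steps=2000)
    print(" ".join(shlex.quote(arg) for arg in train))
```

Pass the returned list to `subprocess.run(cmd, check=True)` from the repository root to execute it.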

Q: Can this codebase use multiple GPUs at the same time?

A: Only for VR rendering, in which case one GPU is used per eye. Otherwise, no. To select a specific GPU to run on, use the CUDA_VISIBLE_DEVICES environment variable. To optimize the compilation for that specific GPU use the TCNN_CUDA_ARCHITECTURES environment variable.
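As a sketch of the GPU selection described above, the snippet below prepares a command and an environment that restrict instant-ngp to a single device. The helper name `launch_spec` is hypothetical; `CUDA_VISIBLE_DEVICES` and the `./instant-ngp <scene>` invocation are taken from this README.

```python
import os


def launch_spec(gpu_index, scene, base_env=None):
    """Return (command, environment) for running instant-ngp on one GPU."""
    env = dict(os.environ if base_env is None else base_env)
    # CUDA only enumerates the devices listed in this variable.
    env["CUDA_VISIBLE_DEVICES"] = str(gpu_index)
    cmd = ["./instant-ngp", scene]
    return cmd, env


if __name__ == "__main__":
    cmd, env = launch_spec(1, "data/nerf/fox")
    print(cmd, "CUDA_VISIBLE_DEVICES=" + env["CUDA_VISIBLE_DEVICES"])
```

To launch, call `subprocess.run(cmd, env=env, check=True)` from the repository root.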

Q: How can I run instant-ngp in headless mode?

A: Use ./instant-ngp --no-gui or python scripts/run.py. You can also compile without GUI via cmake -DNGP_BUILD_WITH_GUI=off ...

Q: Does this codebase run on Google Colab?

A: Yes. See this example inspired by the notebook created by user @myagues. Caveat: this codebase requires large amounts of GPU RAM and might not fit on your assigned GPU. It will also run slower on older GPUs.

Q: Is there a Docker container?

A: Yes. We bundle a Visual Studio Code development container, the .devcontainer/Dockerfile of which you can also use stand-alone.

If you want to run the container without using VSCode:

docker-compose -f .devcontainer/docker-compose.yml build instant-ngp

xhost local:root

docker-compose -f .devcontainer/docker-compose.yml run instant-ngp /bin/bash

Then run the build commands above as normal.

Q: How can I edit and train the underlying hash encoding or neural network on a new task?

A: Use tiny-cuda-nn's PyTorch extension.

Q: What is the coordinate system convention?

A: See this helpful diagram by user @jc211.

Q: Why are background colors randomized during NeRF training?

A: Transparency in the training data indicates a desire for transparency in the learned model. Using a solid background color, the model can minimize its loss by simply predicting that background color, rather than transparency (zero density). By randomizing the background colors, the model is forced to learn zero density to let the randomized colors "shine through".
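The argument can be checked on a single pixel. The following toy sketch is not instant-ngp's training loop: the "model" is just one RGB color plus an opacity, and the function names are made up for illustration. It compares the loss of an opaque prediction that matches a fixed background against a fully transparent prediction.

```python
import random


def composite(rgb, alpha, background):
    """Alpha-composite a straight-alpha color over a background color."""
    return tuple(alpha * c + (1.0 - alpha) * b for c, b in zip(rgb, background))


def squared_error(a, b):
    """Sum of squared per-channel differences."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean_loss(pred_rgb, pred_alpha, backgrounds):
    """Average loss against a fully transparent training pixel."""
    total = 0.0
    for bg in backgrounds:
        target = composite((0.0, 0.0, 0.0), 0.0, bg)  # transparent ground truth
        output = composite(pred_rgb, pred_alpha, bg)  # prediction over the same background
        total += squared_error(output, target)
    return total / len(backgrounds)


if __name__ == "__main__":
    white = (1.0, 1.0, 1.0)
    rng = random.Random(0)
    random_bgs = [tuple(rng.random() for _ in range(3)) for _ in range(256)]

    # An opaque white prediction is indistinguishable from transparency on a white background ...
    print("fixed white background, opaque white:", mean_loss(white, 1.0, [white]))
    # ... but is penalized once the background changes from sample to sample.
    print("random backgrounds, opaque white:", mean_loss(white, 1.0, random_bgs))
    # Only zero opacity matches every background.
    print("random backgrounds, transparent:", mean_loss(white, 0.0, random_bgs))
```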

Q: How can I mask away NeRF training pixels (e.g. for dynamic object removal)?

A: For any training image xyz.* with dynamic objects, you can provide a dynamic_mask_xyz.png in the same folder. This file must be in PNG format, where non-zero pixel values indicate masked-away regions.
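The naming rule can be written down as a small helper. This is a sketch with hypothetical function names and a made-up example path; it only encodes the convention stated above (same folder, `dynamic_mask_` prefix, PNG format, non-zero means masked).

```python
from pathlib import Path


def dynamic_mask_path(image_path):
    """Return the mask path expected next to a training image."""
    image_path = Path(image_path)
    return image_path.with_name(f"dynamic_mask_{image_path.stem}.png")


def is_masked(pixel_value):
    """Non-zero mask pixels mark regions that are excluded from training."""
    return pixel_value != 0


if __name__ == "__main__":
    # "images/0001.jpg" is an illustrative path, not a file from this repository.
    print(dynamic_mask_path("images/0001.jpg").as_posix())
```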

Before investigating further, make sure all submodules are up-to-date and try compiling again.

instant-ngp$ git submodule sync --recursive

instant-ngp$ git submodule update --init --recursive

If instant-ngp still fails to compile, update CUDA as well as your compiler to the latest versions you can install on your system. It is crucial that you update both, as newer CUDA versions are not always compatible with earlier compilers and vice versa. If your problem persists, consult the following table of known issues.

*After each step, delete the build folder and let CMake regenerate it before trying again.*

| Problem | Resolution |

|---|---|

| CMake error: No CUDA toolset found / CUDA_ARCHITECTURES is empty for target "cmTC_0c70f" | Windows: the Visual Studio CUDA integration was not installed correctly. Follow these instructions to fix the problem without re-installing CUDA. (#18) |

| | Linux: Environment variables for your CUDA installation are probably incorrectly set. You may work around the issue using cmake . -B build -DCMAKE_CUDA_COMPILER=/usr/local/cuda-<your cuda version>/bin/nvcc (#28) |

| CMake error: No known features for CXX compiler "MSVC" | Reinstall Visual Studio & make sure you run CMake from a developer shell. Make sure you delete the build folder before building again. (#21) |

| Compile error: A single input file is required for a non-link phase when an outputfile is specified | Ensure there are no spaces in the path to instant-ngp. Some build systems seem to have trouble with those. (#39 #198) |

| Compile error: undefined references to "cudaGraphExecUpdate" / identifier "cublasSetWorkspace" is undefined | Update your CUDA installation (which is likely 11.0) to 11.3 or higher. (#34 #41 #42) |

| Compile error: too few arguments in function call | Update submodules with the above two git commands. (#37 #52) |

| Python error: No module named 'pyngp' | It is likely that CMake did not detect your Python installation and therefore did not build pyngp. Check CMake logs to verify this. If pyngp was built in a different folder than build, Python will be unable to detect it and you have to supply the full path to the import statement. (#43) |
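If pyngp was built outside the default build folder, one way to make it importable is to add that folder to Python's module search path before the import. The helper below is a minimal sketch with a hypothetical name; the folder you pass in is whatever directory your CMake run placed the pyngp module in.

```python
import importlib.util
import sys
from pathlib import Path


def add_pyngp_search_path(build_dir):
    """Prepend build_dir to sys.path and report whether pyngp can be found."""
    build_dir = str(Path(build_dir).resolve())
    if build_dir not in sys.path:
        sys.path.insert(0, build_dir)
    # Make sure directories created after interpreter start are picked up.
    importlib.invalidate_caches()
    return importlib.util.find_spec("pyngp") is not None


if __name__ == "__main__":
    if add_pyngp_search_path("build"):
        print("pyngp found; 'import pyngp' should now work")
    else:
        print("pyngp not found; check the CMake logs")
```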

If you cannot find your problem in the table, try searching the discussions board and the issues area for help. If you are still stuck, please open an issue and ask for help.

Many thanks to Jonathan Tremblay and Andrew Tao for testing early versions of this codebase and to Arman Toorians and Saurabh Jain for the factory robot dataset. We also thank Andrew Webb for noticing that one of the prime numbers in the spatial hash was not actually prime; this has been fixed since.

This project makes use of a number of awesome open source libraries, including:

- tiny-cuda-nn for fast CUDA networks and input encodings

- tinyexr for EXR format support

- tinyobjloader for OBJ format support

- stb_image for PNG and JPEG support

- Dear ImGui an excellent immediate mode GUI library

- Eigen a C++ template library for linear algebra

- pybind11 for seamless C++ / Python interop

- and others! See the dependencies folder.

Many thanks to the authors of these brilliant projects!

@article{mueller2022instant,

author = {Thomas M\"uller and Alex Evans and Christoph Schied and Alexander Keller},

title = {Instant Neural Graphics Primitives with a Multiresolution Hash Encoding},

journal = {ACM Trans. Graph.},

issue_date = {July 2022},

volume = {41},

number = {4},

month = jul,

year = {2022},

pages = {102:1--102:15},

articleno = {102},

numpages = {15},

url = {https://doi.org/10.1145/3528223.3530127},

doi = {10.1145/3528223.3530127},

publisher = {ACM},

address = {New York, NY, USA},

}

Copyright © 2022, NVIDIA Corporation. All rights reserved.

This work is made available under the Nvidia Source Code License-NC. Click here to view a copy of this license.