It seems that there are multiple threads running at the same time, since I got this override question many times:

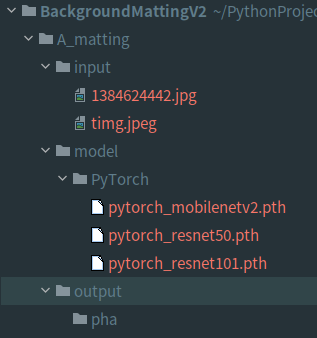

(bgm2) C:\ZeroBox\src\BackgroundMattingV2> python inference_images.py --model-type mattingrefine --model-backbone mobilenetv2 --model-checkpoint PyTorch\pytorch_mobilenetv2.pth --images-src Group15BOriginals --images-bgr Group15BBackground --output-dir output-images --output-type com fgr pha

0%| | 0/1 [00:00<?, ?it/s]Directory output-images already exists. Override? [Y/N]: Directory output-images already exists. Override? [Y/N]: Directory output-images already exists. Override? [Y/N]: Directory output-images already exists. Override? [Y/N]: Directory output-images already exists. Override? [Y/N]: Directory output-images already exists. Override? [Y/N]: Directory output-images already exists. Override? [Y/N]: Directory output-images already exists. Override? [Y/N]: n

0%| | 0/1 [00:40<?, ?it/s]

Traceback (most recent call last):

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\site-packages\torch\utils\data\dataloader.py", line 872, in _try_get_data

data = self._data_queue.get(timeout=timeout)

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\queue.py", line 178, in get

raise Empty

_queue.Empty

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "inference_images.py", line 123, in <module>

for i, (src, bgr) in enumerate(tqdm(dataloader)):

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\site-packages\tqdm\std.py", line 1171, in __iter__

for obj in iterable:

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\site-packages\torch\utils\data\dataloader.py", line 435, in __next__

data = self._next_data()

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\site-packages\torch\utils\data\dataloader.py", line 1068, in _next_data

idx, data = self._get_data()

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\site-packages\torch\utils\data\dataloader.py", line 1024, in _get_data

success, data = self._try_get_data()

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\site-packages\torch\utils\data\dataloader.py", line 885, in _try_get_data

raise RuntimeError('DataLoader worker (pid(s) {}) exited unexpectedly'.format(pids_str)) from e

RuntimeError: DataLoader worker (pid(s) 54132) exited unexpectedly

(bgm2) C:\ZeroBox\src\BackgroundMattingV2> python inference_images.py --model-type mattingrefine --model-backbone mobilenetv2 --model-checkpoint PyTorch\pytorch_mobilenetv2.pth --images-src Group15BOriginals --images-bgr Group15BBackground --output-dir output-images --output-type com fgr pha

Directory output-images already exists. Override? [Y/N]: y

0%| | 0/1 [00:00<?, ?it/s]Directory output-images already exists. Override? [Y/N]: Directory output-images already exists. Override? [Y/N]: Directory output-images already exists. Override? [Y/N]: Directory output-images already exists. Override? [Y/N]: Directory output-images already exists. Override? [Y/N]: Directory output-images already exists. Override? [Y/N]: Directory output-images already exists. Override? [Y/N]: Directory output-images already exists. Override? [Y/N]: y

0%| | 0/1 [00:00<?, ?it/s]

Traceback (most recent call last):

File "<string>", line 1, in <module>

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\multiprocessing\spawn.py", line 116, in spawn_main

exitcode = _main(fd, parent_sentinel)

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\multiprocessing\spawn.py", line 125, in _main

prepare(preparation_data)

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\multiprocessing\spawn.py", line 236, in prepare

_fixup_main_from_path(data['init_main_from_path'])

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\multiprocessing\spawn.py", line 287, in _fixup_main_from_path

main_content = runpy.run_path(main_path,

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\runpy.py", line 265, in run_path

return _run_module_code(code, init_globals, run_name,

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\runpy.py", line 97, in _run_module_code

_run_code(code, mod_globals, init_globals,

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\runpy.py", line 87, in _run_code

exec(code, run_globals)

File "C:\ZeroBox\src\BackgroundMattingV2\inference_images.py", line 123, in <module>

for i, (src, bgr) in enumerate(tqdm(dataloader)):

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\site-packages\tqdm\std.py", line 1171, in __iter__

for obj in iterable:

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\site-packages\torch\utils\data\dataloader.py", line 352, in __iter__

return self._get_iterator()

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\site-packages\torch\utils\data\dataloader.py", line 294, in _get_iterator

return _MultiProcessingDataLoaderIter(self)

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\site-packages\torch\utils\data\dataloader.py", line 801, in __init__

w.start()

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\multiprocessing\process.py", line 121, in start

self._popen = self._Popen(self)

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\multiprocessing\context.py", line 224, in _Popen

return _default_context.get_context().Process._Popen(process_obj)

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\multiprocessing\context.py", line 327, in _Popen

return Popen(process_obj)

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\multiprocessing\popen_spawn_win32.py", line 45, in __init__

prep_data = spawn.get_preparation_data(process_obj._name)

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\multiprocessing\spawn.py", line 154, in get_preparation_data

_check_not_importing_main()

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\multiprocessing\spawn.py", line 134, in _check_not_importing_main

raise RuntimeError('''

RuntimeError:

An attempt has been made to start a new process before the

current process has finished its bootstrapping phase.

This probably means that you are not using fork to start your

child processes and you have forgotten to use the proper idiom

in the main module:

if __name__ == '__main__':

freeze_support()

...

The "freeze_support()" line can be omitted if the program

is not going to be frozen to produce an executable.

0%| | 0/1 [00:25<?, ?it/s]

Traceback (most recent call last):

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\site-packages\torch\utils\data\dataloader.py", line 872, in _try_get_data

data = self._data_queue.get(timeout=timeout)

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\queue.py", line 178, in get

raise Empty

_queue.Empty

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "inference_images.py", line 123, in <module>

for i, (src, bgr) in enumerate(tqdm(dataloader)):

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\site-packages\tqdm\std.py", line 1171, in __iter__

for obj in iterable:

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\site-packages\torch\utils\data\dataloader.py", line 435, in __next__

data = self._next_data()

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\site-packages\torch\utils\data\dataloader.py", line 1068, in _next_data

idx, data = self._get_data()

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\site-packages\torch\utils\data\dataloader.py", line 1024, in _get_data

success, data = self._try_get_data()

File "C:\Users\jinzi\miniconda3\envs\bgm2\lib\site-packages\torch\utils\data\dataloader.py", line 885, in _try_get_data

raise RuntimeError('DataLoader worker (pid(s) {}) exited unexpectedly'.format(pids_str)) from e

RuntimeError: DataLoader worker (pid(s) 45488) exited unexpectedly

I do have a GPU, and I was able to run the other two inference scripts without any issue.
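Going by the RuntimeError message above, my guess is that the top-level code in the script (the override prompt and the DataLoader loop) needs to sit under an `if __name__ == '__main__':` guard, because on Windows the worker processes are started with spawn and re-import the main module, which would explain the repeated prompt. Here is a minimal sketch of that idiom as I understand it. It uses only the standard library and is not the actual `inference_images.py` code; `should_overwrite`, `load_item`, and `main` are hypothetical names made up for illustration.

```python
import multiprocessing as mp
import os


def should_overwrite(output_dir, ask=input):
    """Return True if output_dir may be written to; prompt only if it exists."""
    if not os.path.exists(output_dir):
        return True
    answer = ask(f"Directory {output_dir} already exists. Override? [Y/N]: ")
    return answer.strip().lower() == "y"


def load_item(index):
    # Hypothetical stand-in for the per-item work a worker process would do.
    return index * 2


def main(output_dir="output-images"):
    # The prompt lives inside main(), so it runs once in the parent process
    # and not again each time a worker re-imports this module.
    if not should_overwrite(output_dir):
        return
    # Platform default start method; on Windows this is "spawn".
    ctx = mp.get_context()
    with ctx.Pool(processes=2) as pool:
        print(pool.map(load_item, range(4)))


if __name__ == "__main__":
    # freeze_support() only matters for frozen executables, as the error says.
    mp.freeze_support()
    main()
```

If this reading is right, setting the DataLoader's `num_workers` to 0 might also avoid the problem as a workaround, since no worker processes would be started, but I have not verified that with this script.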