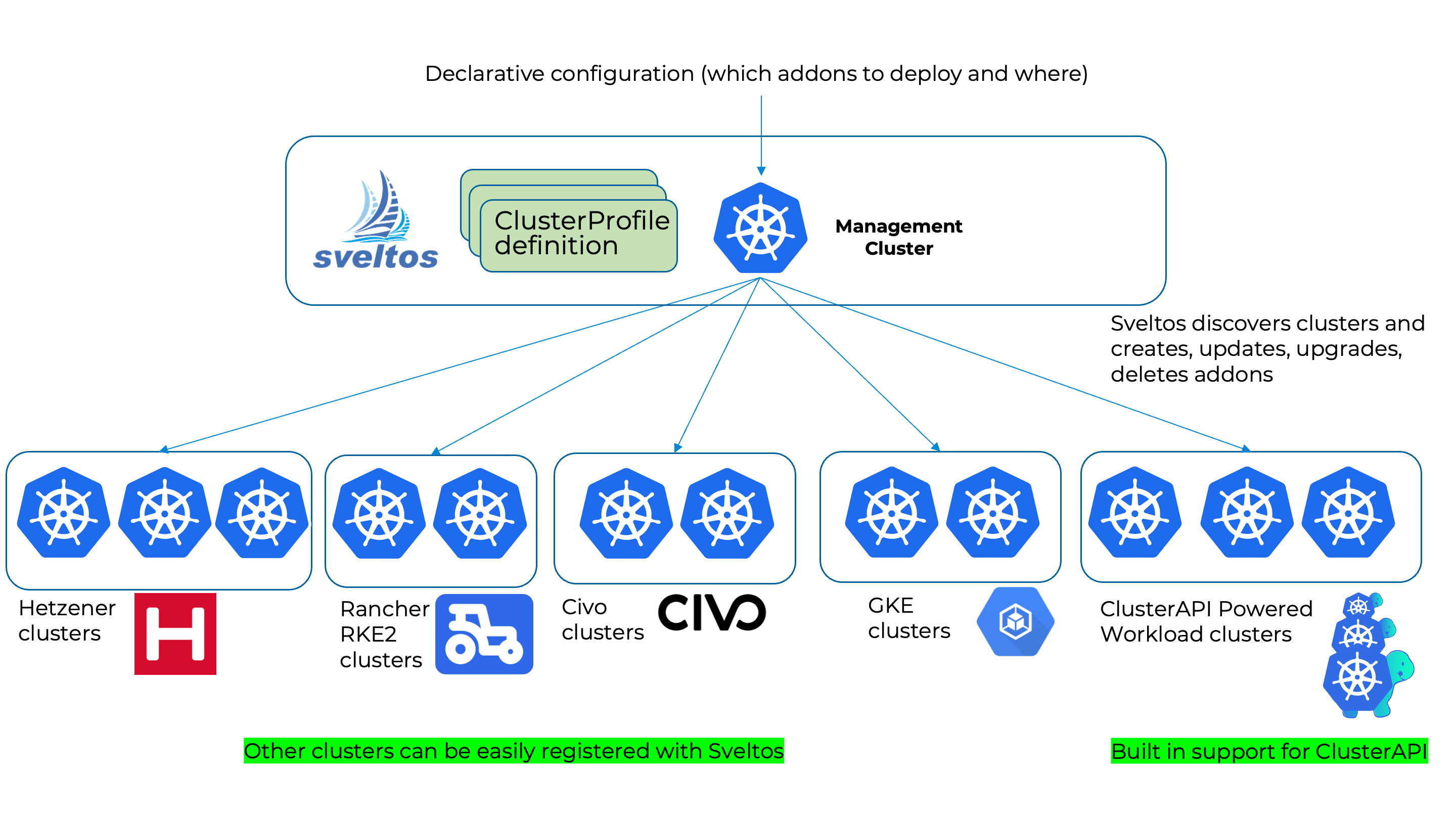

Sveltos is a Kubernetes add-on controller that simplifies the deployment and management of add-ons and applications across multiple clusters. It runs in the management cluster and can programmatically deploy and manage add-ons and applications on any cluster in the fleet, including the management cluster itself. Sveltos supports a variety of add-on formats, including Helm charts, raw YAML, Kustomize, Carvel ytt, and Jsonnet.

Sveltos allows you to represent add-ons and applications as templates. Before deploying to managed clusters, Sveltos instantiates these templates, gathering the required information from either the management cluster or the managed clusters themselves. This lets you define add-ons and applications once, in a reusable way, and deploy the same definition to many clusters with per-cluster variations such as different add-on configuration values. The larger your fleet, the more time and effort this saves.
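As a minimal sketch of what a templated add-on could look like, the fragment below sets a Helm value from the name of the cluster being deployed to. It assumes that the matching cluster object is exposed to templates as `.Cluster`, and that the chart accepts a `customLabels` value; both should be checked against the Sveltos and chart documentation for your versions. The profile name is hypothetical.

```yaml
apiVersion: config.projectsveltos.io/v1alpha1
kind: ClusterProfile
metadata:
  name: deploy-kyverno-templated   # hypothetical name
spec:
  clusterSelector: env=prod
  helmCharts:
  - repositoryURL:    https://kyverno.github.io/kyverno/
    repositoryName:   kyverno
    chartName:        kyverno/kyverno
    chartVersion:     v3.0.1
    releaseName:      kyverno-latest
    releaseNamespace: kyverno
    helmChartAction:  Install
    values: |
      # Assumption: .Cluster refers to the cluster this profile is being
      # instantiated for, so each cluster gets its own label value.
      customLabels:
        cluster: "{{ .Cluster.metadata.name }}"
```

The same ClusterProfile then yields a slightly different Helm release in each matching cluster, without one profile per cluster.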

Sveltos provides precise control over add-on deployment order. Add-ons within a Profile/ClusterProfile are deployed in the exact order they appear, ensuring a predictable and controlled rollout. Furthermore, a ClusterProfile can depend on other ClusterProfiles, guaranteeing that dependent add-ons deploy only after their dependencies are fully operational. Finally, Sveltos' event-driven framework allows add-ons and applications to be deployed in response to specific events, enabling dynamic deployments that adapt to your needs.
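To illustrate the dependency mechanism, here is a hedged sketch of a ClusterProfile that should only be deployed once another one is in place. It assumes the dependency is declared through a `dependsOn` list of ClusterProfile names; confirm the field name against the API reference for your Sveltos version. The profile and ConfigMap names are hypothetical, and `deploy-kyverno` stands for a ClusterProfile that installs Kyverno.

```yaml
apiVersion: config.projectsveltos.io/v1alpha1
kind: ClusterProfile
metadata:
  name: deploy-kyverno-policies    # hypothetical name
spec:
  clusterSelector: env=prod
  # Assumed field: deploy this profile only after the add-ons of the
  # listed ClusterProfile(s) are deployed in the matching cluster.
  dependsOn:
  - deploy-kyverno
  policyRefs:
  - name: kyverno-policies         # hypothetical ConfigMap holding policies
    namespace: default
    kind: ConfigMap
```

Splitting the chart and the policies that rely on it into two profiles keeps each one small, while the dependency preserves the required ordering.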

👉 To get updates ⭐️ star this repository.

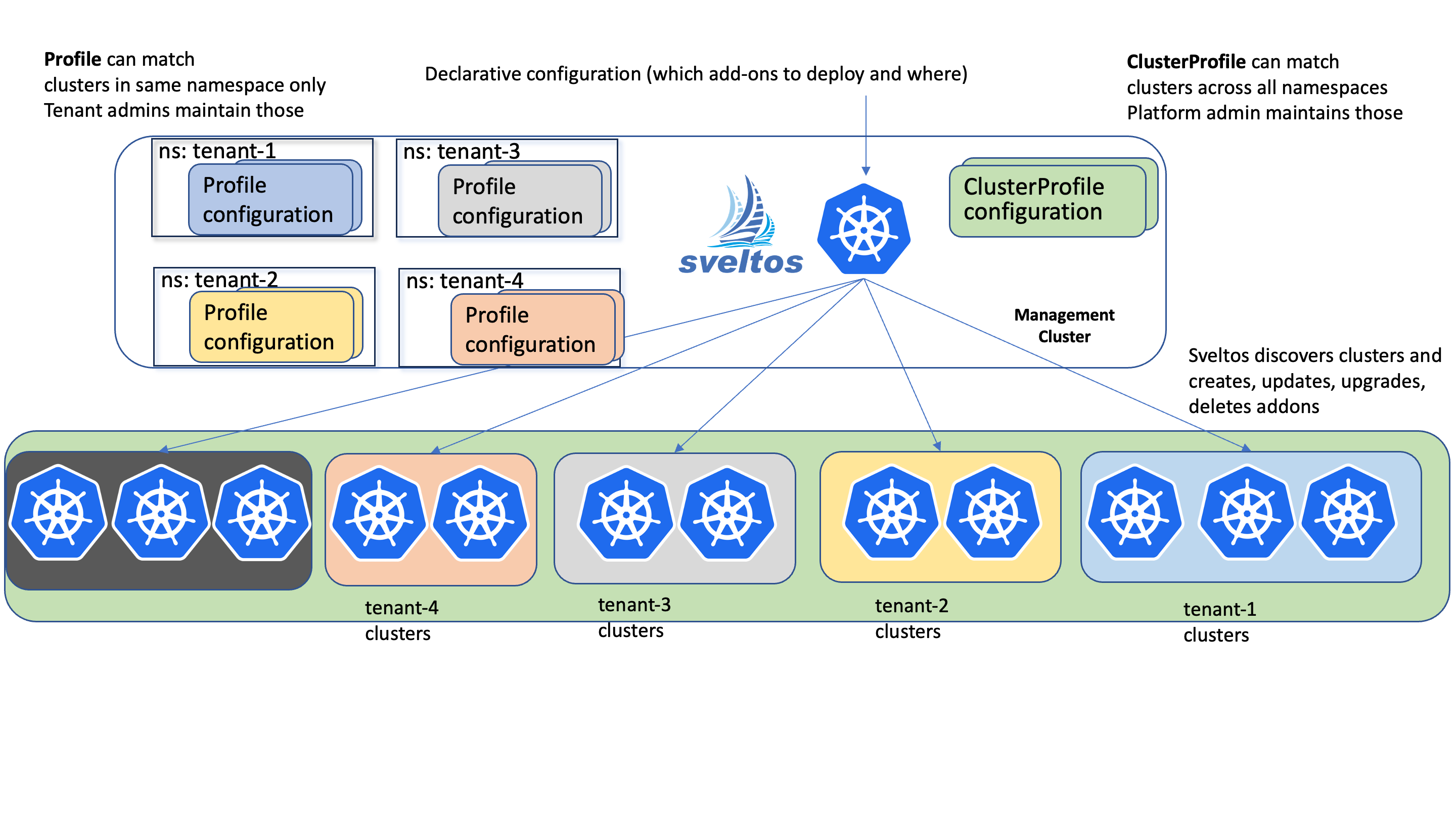

Projectsveltos offers two powerful tools for managing cluster configurations: Profiles and ClusterProfiles. Understanding their distinctions is crucial for efficient setup and administration.

- ClusterProfiles: Apply across all clusters in any namespace. Ideal for platform admins maintaining global consistency and managing settings like networking, security, and resource allocation.

- Profiles: Limited to a specific namespace, granting granular control to tenant admins. This isolation lets each team manage its own clusters from the management cluster, independently and without impacting other teams.
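As a rough sketch of the namespaced variant, a Profile could look like the fragment below. It assumes a Profile accepts the same `spec` as a ClusterProfile and differs only in being created inside a namespace; the namespace and profile names are hypothetical.

```yaml
apiVersion: config.projectsveltos.io/v1alpha1
kind: Profile
metadata:
  name: deploy-kyverno
  namespace: eng          # hypothetical tenant namespace
spec:
  # Only clusters in the "eng" namespace are considered for a match.
  clusterSelector: env=prod
  syncMode: Continuous
  helmCharts:
  - repositoryURL:    https://kyverno.github.io/kyverno/
    repositoryName:   kyverno
    chartName:        kyverno/kyverno
    chartVersion:     v3.0.1
    releaseName:      kyverno-latest
    releaseNamespace: kyverno
    helmChartAction:  Install
```

A tenant admin with access to the `eng` namespace only can create this Profile without being able to affect clusters that belong to other teams.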

The idea is simple:

- from the management cluster, select one or more `clusters` with a Kubernetes label selector;

- list which `addons` need to be deployed on such clusters.

where the terms mean:

- `clusters` represents either a CAPI cluster or any other Kubernetes cluster registered with Sveltos;

- `addons` represents a Helm release, Kubernetes resource YAMLs, or Kustomize resources.

Here is an example of how to require that any CAPI Cluster with the label `env: prod` has the following features deployed:

- Kyverno Helm chart (version v3.0.1)

- Kubernetes resource(s) contained in the referenced Secret: default/storage-class

- Kubernetes resource(s) contained in the referenced ConfigMap: default/contour-gateway.

```yaml
apiVersion: config.projectsveltos.io/v1alpha1
kind: ClusterProfile
metadata:
  name: deploy-kyverno
spec:
  # Select every cluster carrying the label env=prod
  clusterSelector: env=prod
  syncMode: Continuous
  # Helm charts to deploy in each matching cluster
  helmCharts:
  - repositoryURL:    https://kyverno.github.io/kyverno/
    repositoryName:   kyverno
    chartName:        kyverno/kyverno
    chartVersion:     v3.0.1
    releaseName:      kyverno-latest
    releaseNamespace: kyverno
    helmChartAction:  Install
    values: |
      admissionController:
        replicas: 3
  # Secrets/ConfigMaps whose content is deployed in each matching cluster
  policyRefs:
  - name: storage-class
    namespace: default
    kind: Secret
  - name: contour-gateway
    namespace: default
    kind: ConfigMap
```

As soon as a cluster matches the ClusterProfile above, all referenced features are automatically deployed to that cluster.
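For illustration, a CAPI Cluster that the `env=prod` selector would match might carry its label as in the fragment below. The cluster name and namespace are hypothetical, and the `spec` is omitted because it depends on your infrastructure provider.

```yaml
apiVersion: cluster.x-k8s.io/v1beta1
kind: Cluster
metadata:
  name: workload-1        # hypothetical cluster name
  namespace: default      # hypothetical namespace
  labels:
    env: prod             # matches clusterSelector: env=prod
# spec omitted: provider-specific
```

Adding or removing the label on an existing cluster is what makes it start or stop matching the ClusterProfile.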

Here is an example using Kustomize:

```yaml
apiVersion: config.projectsveltos.io/v1alpha1
kind: ClusterProfile
metadata:
  name: flux-system
spec:
  clusterSelector: env=fv
  syncMode: Continuous
  # Kustomize sources to build and deploy in each matching cluster
  kustomizationRefs:
  - namespace: flux-system
    name: flux-system
    kind: GitRepository
    path: ./helloWorld/
    targetNamespace: eng
```

where the GitRepository synced with Flux contains the following resources:

```
├── deployment.yaml
├── kustomization.yaml
├── service.yaml
└── configmap.yaml
```

Refer to examples for more complex use cases.

The `syncMode` field accepts one of the following modes:

- OneTime: This mode is designed for bootstrapping critical components during the initial cluster setup. Think of it as a one-shot configuration injection. It:

  - deploys essential infrastructure components such as CNI plugins, cloud controllers, or the workload cluster's package manager itself;

  - simplifies initial cluster setup;

  - hands over management to the workload cluster's own tools, promoting modularity and potentially simplifying ongoing maintenance.

- Continuous: This mode continuously monitors ClusterProfiles or Profiles for changes and automatically applies them to matching clusters, keeping the deployed configuration consistent with the desired one. It:

  - provides centralized control over deployments across multiple clusters, for consistency and compliance;

  - simplifies management of configurations across multiple clusters.

- ContinuousWithDriftDetection: Detects and automatically corrects configuration drift in managed clusters, ensuring they remain aligned with the desired state defined in the management cluster.

Sveltos can automatically detect drift between the desired state, defined in the management cluster, and the actual state of your clusters, and then recover from it.
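As a minimal sketch, opting in to drift detection should only require changing the sync mode on a profile. The fragment below reuses the Kyverno chart settings from this README and is illustrative rather than a recommended configuration.

```yaml
apiVersion: config.projectsveltos.io/v1alpha1
kind: ClusterProfile
metadata:
  name: deploy-kyverno
spec:
  clusterSelector: env=prod
  # Besides reacting to changes of this profile, also watch the deployed
  # resources in managed clusters and restore them if they are modified.
  syncMode: ContinuousWithDriftDetection
  helmCharts:
  - repositoryURL:    https://kyverno.github.io/kyverno/
    repositoryName:   kyverno
    chartName:        kyverno/kyverno
    chartVersion:     v3.0.1
    releaseName:      kyverno-latest
    releaseNamespace: kyverno
    helmChartAction:  Install
```

With `Continuous`, a manual change made directly in a managed cluster would persist until the profile itself changes; with this mode it is expected to be reverted.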

If you want to try projectsveltos with a test cluster:

- `git clone https://github.com/projectsveltos/addon-controller`

- `make quickstart`

This creates a management cluster using Kind, deploys Cluster API and projectsveltos, and creates a workload cluster powered by Cluster API.

To see the full demo, have a look at this YouTube video.

- Projectsveltos documentation

- Quick Start

❤️ Your contributions are always welcome! If you want to contribute, have questions, have noticed a bug, or want to get the latest project news, you can connect with us in the following ways:

- Read contributing guidelines

- Open a bug report or feature enhancement request on GitHub

- Chat with us on Slack in the #projectsveltos channel

- Contact Us

Copyright 2022.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License.