Dear Author,

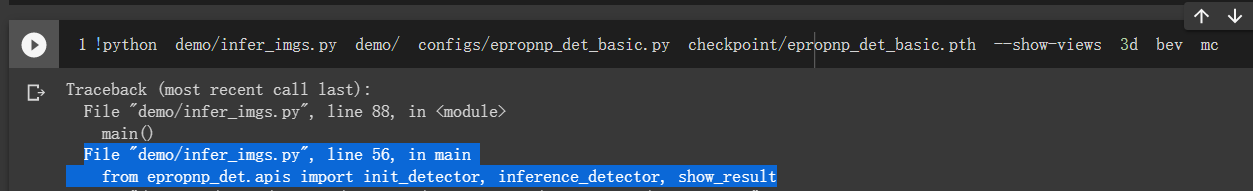

Thank you very much for your great work. I get the following error when I run the command `pip install -v -e .`; could you please help me with this?

Thanks in advance.

My environment is as follows:

- Ubuntu 20.04 LTS
- CUDA 11.3
- torch 1.10.1+cu113
- torchvision 0.11.2+cu113
- torchaudio 0.10.1+rocm4.1
- pytorch3d 0.6.1
- MMCV 1.4.1
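The versions above can be collected, together with what PyTorch itself detects when the build starts, using a short script like the one below. It is a minimal sketch: `collect_env` is a hypothetical helper name, and the script only reads standard `torch` attributes and the `CUDA_HOME` / `TORCH_CUDA_ARCH_LIST` environment variables. It still runs if `torch` cannot be imported.

```python
import importlib
import os
import platform


def collect_env():
    """Gather the details most relevant to building the CUDA extensions (hypothetical helper)."""
    info = {
        "python": platform.python_version(),
        "CUDA_HOME": os.environ.get("CUDA_HOME"),
        "TORCH_CUDA_ARCH_LIST": os.environ.get("TORCH_CUDA_ARCH_LIST"),
    }
    try:
        torch = importlib.import_module("torch")
    except ImportError:
        # torch is not importable in this interpreter; report what we have so far.
        info["torch"] = None
        return info
    info["torch"] = torch.__version__
    info["torch_cuda"] = torch.version.cuda  # CUDA version torch was built against
    info["cuda_available"] = torch.cuda.is_available()
    info["device_count"] = torch.cuda.device_count()
    # Compute capability (major, minor) of every GPU visible to this process.
    info["capabilities"] = [
        torch.cuda.get_device_capability(i) for i in range(info["device_count"])
    ]
    return info


if __name__ == "__main__":
    for key, value in collect_env().items():
        print(f"{key}: {value}")
```

Running it in the same shell and conda environment as the failing `pip install` is what matters: a `device_count` of 0 there would match the `No CUDA runtime is found` line in the log.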

```
Installing collected packages: epropnp-det
Running setup.py develop for epropnp-det
Running command python setup.py develop
No CUDA runtime is found, using CUDA_HOME='/usr/local/cuda-11.3'
running develop
running egg_info
writing epropnp_det.egg-info/PKG-INFO
writing dependency_links to epropnp_det.egg-info/dependency_links.txt
writing requirements to epropnp_det.egg-info/requires.txt
writing top-level names to epropnp_det.egg-info/top_level.txt
reading manifest file 'epropnp_det.egg-info/SOURCES.txt'
writing manifest file 'epropnp_det.egg-info/SOURCES.txt'
running build_ext
building 'epropnp_det.ops.iou3d.iou3d_cuda' extension
gcc -pthread -B /home/koosha/anaconda3/envs/epropnp_det/compiler_compat -Wl,--sysroot=/ -Wsign-compare -DNDEBUG -g -fwrapv -O3 -Wall -Wstrict-prototypes -fPIC -DWITH_CUDA -I/home/koosha/anaconda3/envs/epropnp_det/lib/python3.7/site-packages/torch/include -I/home/koosha/anaconda3/envs/epropnp_det/lib/python3.7/site-packages/torch/include/torch/csrc/api/include -I/home/koosha/anaconda3/envs/epropnp_det/lib/python3.7/site-packages/torch/include/TH -I/home/koosha/anaconda3/envs/epropnp_det/lib/python3.7/site-packages/torch/include/THC -I/usr/local/cuda-11.3/include -I/home/koosha/anaconda3/envs/epropnp_det/include/python3.7m -c epropnp_det/ops/iou3d/src/iou3d.cpp -o build/temp.linux-x86_64-cpython-37/epropnp_det/ops/iou3d/src/iou3d.o -DTORCH_API_INCLUDE_EXTENSION_H -DPYBIND11_COMPILER_TYPE="_gcc" -DPYBIND11_STDLIB="_libstdcpp" -DPYBIND11_BUILD_ABI="_cxxabi1011" -DTORCH_EXTENSION_NAME=iou3d_cuda -D_GLIBCXX_USE_CXX11_ABI=0 -std=c++14
cc1plus: warning: command line option ‘-Wstrict-prototypes’ is valid for C/ObjC but not for C++
/home/koosha/anaconda3/envs/epropnp_det/lib/python3.7/site-packages/setuptools/command/easy_install.py:147: EasyInstallDeprecationWarning: easy_install command is deprecated. Use build and pip and other standards-based tools.
  EasyInstallDeprecationWarning,
/home/koosha/anaconda3/envs/epropnp_det/lib/python3.7/site-packages/setuptools/command/install.py:37: SetuptoolsDeprecationWarning: setup.py install is deprecated. Use build and pip and other standards-based tools.
  setuptools.SetuptoolsDeprecationWarning,
/home/koosha/anaconda3/envs/epropnp_det/lib/python3.7/site-packages/torch/utils/cpp_extension.py:381: UserWarning: Attempted to use ninja as the BuildExtension backend but we could not find ninja.. Falling back to using the slow distutils backend.
  warnings.warn(msg.format('we could not find ninja.'))
Traceback (most recent call last):
  File "", line 36, in
  File "", line 34, in
  File "/home/koosha/anaconda3/EPro-PnP/EPro-PnP-Det/setup.py", line 143, in
    zip_safe=False)
  File "/home/koosha/anaconda3/envs/epropnp_det/lib/python3.7/site-packages/setuptools/__init__.py", line 87, in setup
    return distutils.core.setup(**attrs)
  File "/home/koosha/anaconda3/envs/epropnp_det/lib/python3.7/site-packages/setuptools/_distutils/core.py", line 185, in setup
    return run_commands(dist)
  File "/home/koosha/anaconda3/envs/epropnp_det/lib/python3.7/site-packages/setuptools/_distutils/core.py", line 201, in run_commands
    dist.run_commands()
  File "/home/koosha/anaconda3/envs/epropnp_det/lib/python3.7/site-packages/setuptools/_distutils/dist.py", line 968, in run_commands
    self.run_command(cmd)
  File "/home/koosha/anaconda3/envs/epropnp_det/lib/python3.7/site-packages/setuptools/dist.py", line 1217, in run_command
    super().run_command(command)
  File "/home/koosha/anaconda3/envs/epropnp_det/lib/python3.7/site-packages/setuptools/_distutils/dist.py", line 987, in run_command
    cmd_obj.run()
  File "/home/koosha/anaconda3/envs/epropnp_det/lib/python3.7/site-packages/setuptools/command/develop.py", line 34, in run
    self.install_for_development()
  File "/home/koosha/anaconda3/envs/epropnp_det/lib/python3.7/site-packages/setuptools/command/develop.py", line 114, in install_for_development
    self.run_command('build_ext')
  File "/home/koosha/anaconda3/envs/epropnp_det/lib/python3.7/site-packages/setuptools/_distutils/cmd.py", line 319, in run_command
    self.distribution.run_command(command)
  File "/home/koosha/anaconda3/envs/epropnp_det/lib/python3.7/site-packages/setuptools/dist.py", line 1217, in run_command
    super().run_command(command)
  File "/home/koosha/anaconda3/envs/epropnp_det/lib/python3.7/site-packages/setuptools/_distutils/dist.py", line 987, in run_command
    cmd_obj.run()
  File "/home/koosha/anaconda3/envs/epropnp_det/lib/python3.7/site-packages/setuptools/command/build_ext.py", line 84, in run
    _build_ext.run(self)
  File "/home/koosha/anaconda3/envs/epropnp_det/lib/python3.7/site-packages/setuptools/_distutils/command/build_ext.py", line 346, in run
    self.build_extensions()
  File "/home/koosha/anaconda3/envs/epropnp_det/lib/python3.7/site-packages/torch/utils/cpp_extension.py", line 735, in build_extensions
    build_ext.build_extensions(self)
  File "/home/koosha/anaconda3/envs/epropnp_det/lib/python3.7/site-packages/setuptools/_distutils/command/build_ext.py", line 466, in build_extensions
    self._build_extensions_serial()
  File "/home/koosha/anaconda3/envs/epropnp_det/lib/python3.7/site-packages/setuptools/_distutils/command/build_ext.py", line 492, in _build_extensions_serial
    self.build_extension(ext)
  File "/home/koosha/anaconda3/envs/epropnp_det/lib/python3.7/site-packages/setuptools/command/build_ext.py", line 246, in build_extension
    _build_ext.build_extension(self, ext)
  File "/home/koosha/anaconda3/envs/epropnp_det/lib/python3.7/site-packages/setuptools/_distutils/command/build_ext.py", line 554, in build_extension
    depends=ext.depends,
  File "/home/koosha/anaconda3/envs/epropnp_det/lib/python3.7/site-packages/setuptools/_distutils/ccompiler.py", line 599, in compile
    self._compile(obj, src, ext, cc_args, extra_postargs, pp_opts)
  File "/home/koosha/anaconda3/envs/epropnp_det/lib/python3.7/site-packages/torch/utils/cpp_extension.py", line 483, in unix_wrap_single_compile
    cflags = unix_cuda_flags(cflags)
  File "/home/koosha/anaconda3/envs/epropnp_det/lib/python3.7/site-packages/torch/utils/cpp_extension.py", line 450, in unix_cuda_flags
    cflags + _get_cuda_arch_flags(cflags))
  File "/home/koosha/anaconda3/envs/epropnp_det/lib/python3.7/site-packages/torch/utils/cpp_extension.py", line 1606, in _get_cuda_arch_flags
    arch_list[-1] += '+PTX'
IndexError: list index out of range
error: subprocess-exited-with-error

python setup.py develop did not run successfully.
│ exit code: 1
╰─> See above for output.

note: This error originates from a subprocess, and is likely not a problem with pip.
full command: /home/koosha/anaconda3/envs/epropnp_det/bin/python -c '
exec(compile('"'"''"'"''"'"'
# This is <pip-setuptools-caller> -- a caller that pip uses to run setup.py
#
# - It imports setuptools before invoking setup.py, to enable projects that directly
# import from `distutils.core` to work with newer packaging standards.
# - It provides a clear error message when setuptools is not installed.
# - It sets `sys.argv[0]` to the underlying `setup.py`, when invoking `setup.py` so
# setuptools doesn'"'"'t think the script is `-c`. This avoids the following warning:
# manifest_maker: standard file '"'"'-c'"'"' not found".
# - It generates a shim setup.py, for handling setup.cfg-only projects.
import os, sys, tokenize
try:
    import setuptools
except ImportError as error:
    print(
        "ERROR: Can not execute `setup.py` since setuptools is not available in "
        "the build environment.",
        file=sys.stderr,
    )
    sys.exit(1)
__file__ = %r
sys.argv[0] = __file__
if os.path.exists(__file__):
    filename = __file__
    with tokenize.open(__file__) as f:
        setup_py_code = f.read()
else:
    filename = "<auto-generated setuptools caller>"
    setup_py_code = "from setuptools import setup; setup()"
exec(compile(setup_py_code, filename, "exec"))
'"'"''"'"''"'"' % ('"'"'/home/koosha/anaconda3/EPro-PnP/EPro-PnP-Det/setup.py'"'"',), "<pip-setuptools-caller>", "exec"))' develop --no-deps
cwd: /home/koosha/anaconda3/EPro-PnP/EPro-PnP-Det/
error: subprocess-exited-with-error

python setup.py develop did not run successfully.
│ exit code: 1
╰─> See above for output.

note: This error originates from a subprocess, and is likely not a problem with pip.
```
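From the traceback, the build stops in `torch/utils/cpp_extension.py` at `arch_list[-1] += '+PTX'`, shortly after the message `No CUDA runtime is found`. My unconfirmed guess is that the list of target GPU architectures ends up empty because no GPU is visible during the build and `TORCH_CUDA_ARCH_LIST` is not set. The snippet below is a simplified, hypothetical re-creation of that step, not PyTorch's actual code; `resolve_arch_list` is a name made up for illustration.

```python
def resolve_arch_list(env_value, device_capabilities):
    """Hypothetical, simplified version of choosing CUDA target architectures.

    env_value: contents of TORCH_CUDA_ARCH_LIST, or None if it is unset.
    device_capabilities: (major, minor) tuples, one per GPU visible at build time.
    """
    if env_value:
        # Accept both "7.5;8.6" and "7.5 8.6" style lists.
        arch_list = [a for a in env_value.replace(" ", ";").split(";") if a]
    else:
        # Without the variable, fall back to the GPUs that are visible right now.
        arch_list = [f"{major}.{minor}" for major, minor in sorted(set(device_capabilities))]
    if not arch_list:
        # An empty list here is what would surface as
        # `arch_list[-1] += '+PTX'` -> IndexError in the log above.
        raise RuntimeError(
            "No CUDA architectures to compile for: no GPU was detected "
            "and TORCH_CUDA_ARCH_LIST is not set."
        )
    # Also embed PTX for the newest architecture so newer GPUs can JIT-compile it.
    arch_list[-1] += "+PTX"
    return arch_list


print(resolve_arch_list("7.5;8.6", []))
print(resolve_arch_list(None, [(8, 6), (7, 5), (8, 6)]))
```

If this reading is right, setting the variable explicitly before building (for example `TORCH_CUDA_ARCH_LIST="8.6" pip install -v -e .`, with `8.6` replaced by the actual compute capability of the target GPU) should let the build proceed without querying a GPU.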