Ahmed Rida Sekkat¹, Yohan Dupuis², Pascal Vasseur¹ and Paul Honeine¹.

¹Normandie Univ, UNIROUEN, LITIS, Rouen, France

²Normandie Univ, UNIROUEN, ESIGELEC, IRSEEM, Rouen, France


IEEE International Conference on Robotics and Automation (ICRA), 2020.

If you find our dataset useful for your research, please cite our paper:

    @INPROCEEDINGS{9197144,
      author={A. R. {Sekkat} and Y. {Dupuis} and P. {Vasseur} and P. {Honeine}},
      booktitle={2020 IEEE International Conference on Robotics and Automation (ICRA)},
      title={The OmniScape Dataset},
      year={2020},
      pages={1603-1608},
      doi={10.1109/ICRA40945.2020.9197144}
    }

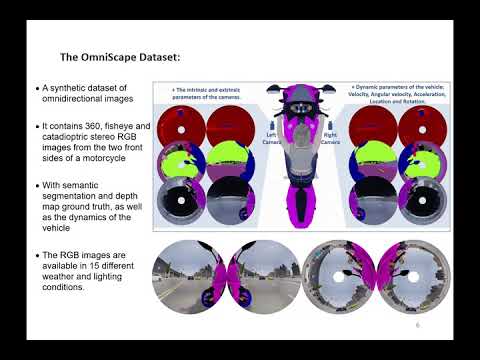

Despite the utility and benefits of omnidirectional images in robotics and automotive applications, no dataset of omnidirectional images is available with semantic segmentation, depth maps, and dynamic properties. This is due to the time and human effort required to annotate ground truth images. This paper presents a framework for generating omnidirectional images from images acquired in a virtual environment. We demonstrate the relevance of the proposed framework on two well-known simulators: CARLA, an open-source simulator for autonomous driving research, and Grand Theft Auto V (GTA V), a video game with very high visual quality. We describe in detail the generated OmniScape dataset, which includes stereo fisheye and catadioptric images acquired from the two front sides of a motorcycle, together with semantic segmentation, depth maps, the intrinsic parameters of the cameras, and the dynamic parameters of the motorcycle. Notably, two-wheeled vehicles are more challenging than cars because of their specific dynamics.

- The Vehicle class is divided into two classes: four-wheeled and two-wheeled.

- Optical flow.

- Instance semantic segmentation.

- 3D bounding boxes.

The dataset and tools will be released in stages as soon as the article is published.

If you are interested in our dataset, fill out this form to receive the first release.

Instance segmentation:

For each capture, stereo 360° cubemap and equirectangular images are also provided:

The images are provided under different weather and lighting conditions:

This work was supported by a RIN grant, Région Normandie, France.