Official implementation of "Walk in the Cloud: Learning Curves for Point Clouds Shape Analysis", ICCV 2021

Paper: https://arxiv.org/abs/2105.01288

- Python>=3.7

- PyTorch>=1.2

- Packages: glob, h5py, sklearn

- Point Cloud Classification

- Point Cloud Part Segmentation

- Point Cloud Normal Estimation

- Point Cloud Classification Under Corruptions

NOTE: Please change your current directory to core/ before executing the following commands.

The ModelNet40 dataset is primarily used for the classification experiments. On the first run, the program automatically downloads the data if it is not already in data/. You can also download the official data manually and unzip it into data/.

Alternatively, you can place the downloaded data anywhere you like and set DATA_DIR in core/data.py to that path. If you do not, the automatic download will still be triggered.
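The reuse-or-download decision described above could be sketched as follows. This is a minimal illustration, not the code in core/data.py: the helper names `dataset_is_present` and `resolve_data_dir` and the folder name passed to them are hypothetical, and only the idea of a configurable data directory comes from the text.

```python
import os


def dataset_is_present(data_dir, dataset_folder):
    """Return True if `dataset_folder` already exists inside `data_dir`.

    Hypothetical helper: a loader could call this before deciding
    whether a download needs to be triggered.
    """
    return os.path.isdir(os.path.join(data_dir, dataset_folder))


def resolve_data_dir(custom_dir=None, default_dir="data"):
    """Use a custom data location if one is given, else the default data/."""
    return os.path.abspath(custom_dir if custom_dir else default_dir)
```

With a layout like this, pointing the loader at an existing copy of the data is enough to skip the download step.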

Train with our default settings (same as in the paper):

python3 main_cls.py --exp_name=curvenet_cls_1

Train with customized settings using the flags: --lr, --scheduler, --batch_size.

Alternatively, you can directly modify core/start_cls.sh and simply run:

./start_cls.sh
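To make the flag syntax in the commands above concrete, here is a minimal sketch of a command-line parser that accepts the same flag names. It is an assumption for illustration, not the parser in main_cls.py: the defaults are placeholders, and `str2bool` is a hypothetical helper added so that `--eval=False` is parsed as false.

```python
import argparse


def str2bool(value):
    """Parse 'True'/'False'-style strings so --eval=False is really False."""
    lowered = value.lower()
    if lowered in ("true", "1", "yes"):
        return True
    if lowered in ("false", "0", "no"):
        return False
    raise argparse.ArgumentTypeError(f"expected a boolean, got {value!r}")


def build_parser():
    # Flag names come from the commands in this README; the defaults are
    # placeholders for illustration, not the repository's actual values.
    parser = argparse.ArgumentParser(description="Illustrative training CLI")
    parser.add_argument("--exp_name", type=str, default="exp")
    parser.add_argument("--lr", type=float, default=0.1)
    parser.add_argument("--scheduler", type=str, default="cos")
    parser.add_argument("--batch_size", type=int, default=32)
    parser.add_argument("--eval", type=str2bool, default=False)
    parser.add_argument("--model_path", type=str, default="")
    return parser
```

Calling `build_parser().parse_args(["--exp_name=curvenet_cls_1", "--lr=0.05"])` returns a namespace in which `lr` is the float 0.05 and the remaining flags keep their defaults.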

NOTE: Our reported model achieves 93.8%/94.2% accuracy (see sections below). Because training involves randomness, reproducing the best result may take several training runs. We therefore also provide a second benchmark here: the average over 5 runs with different random seeds, which is 93.65% accuracy.

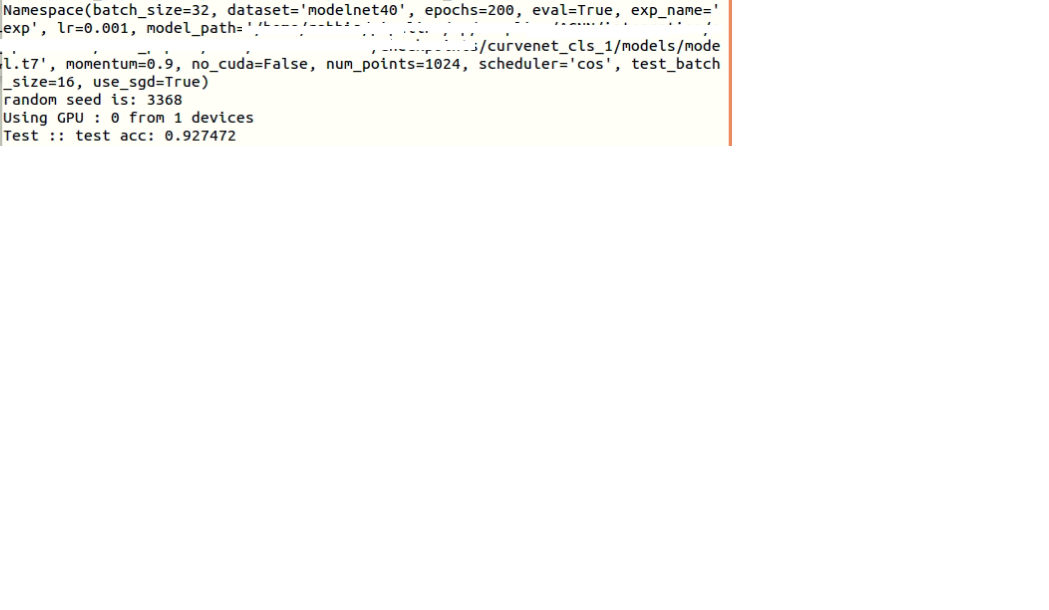
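Averaging over several seeded runs, as in the note above, can be reproduced with a few lines of standard-library Python. The function name `summarize_runs` is hypothetical, and the input is whatever per-run accuracies you collect from your own training logs.

```python
from statistics import mean, stdev


def summarize_runs(accuracies):
    """Return (mean, sample standard deviation) of per-run accuracies."""
    if not accuracies:
        raise ValueError("need at least one run")
    # The sample standard deviation is undefined for a single run.
    spread = stdev(accuracies) if len(accuracies) > 1 else 0.0
    return mean(accuracies), spread
```

Reporting the spread next to the mean makes it easier to judge whether a single run falls within the expected variation.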

Evaluate without voting:

python3 main_cls.py --exp_name=curvenet_cls_1 --eval=True --model_path=PATH_TO_YOUR_MODEL

Alternatively, you can directly modify core/test_cls.sh and simply run:

./test_cls.sh

For voting, we used the voting_evaluate_cls.py script provided in RSCNN. Please refer to their license for usage.
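For readers unfamiliar with test-time voting, the general idea can be sketched as below. This is not the RSCNN script: it assumes that voting means accumulating class scores over several randomly scaled copies of the input, and `vote_predict`, `num_votes`, and `scale_range` are illustrative names and values.

```python
import random


def vote_predict(predict_scores, points, num_votes=10,
                 scale_range=(0.8, 1.2), seed=0):
    """Sum class scores over randomly scaled copies and return the argmax.

    predict_scores: callable mapping a list of (x, y, z) tuples to a list
    of per-class scores. It stands in for a trained model.
    """
    if num_votes < 1:
        raise ValueError("num_votes must be at least 1")
    rng = random.Random(seed)
    totals = None
    for _ in range(num_votes):
        scale = rng.uniform(*scale_range)
        scaled = [(x * scale, y * scale, z * scale) for x, y, z in points]
        scores = predict_scores(scaled)
        if totals is None:
            totals = list(scores)
        else:
            totals = [t + v for t, v in zip(totals, scores)]
    # Index of the class with the highest accumulated score.
    return max(range(len(totals)), key=totals.__getitem__)
```

Summing scores rather than counting hard votes lets confident predictions weigh more than uncertain ones.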

Please download our pretrained model cls/ from Google Drive.

And then run:

python3 main_cls.py --exp_name=curvenet_cls_pretrained --eval=True --model_path=PATH_TO_PRETRAINED/cls/models/model.t7

The ShapeNet Part dataset is primarily used for the part segmentation experiments. On the first run, the program automatically downloads the data if it is not already in data/. You can also download the official data manually and unzip it into data/.

Alternatively, you can place the downloaded data anywhere you like and set DATA_DIR in core/data.py to that path. If you do not, the automatic download will still be triggered.

Train with our default settings (same as in the paper):

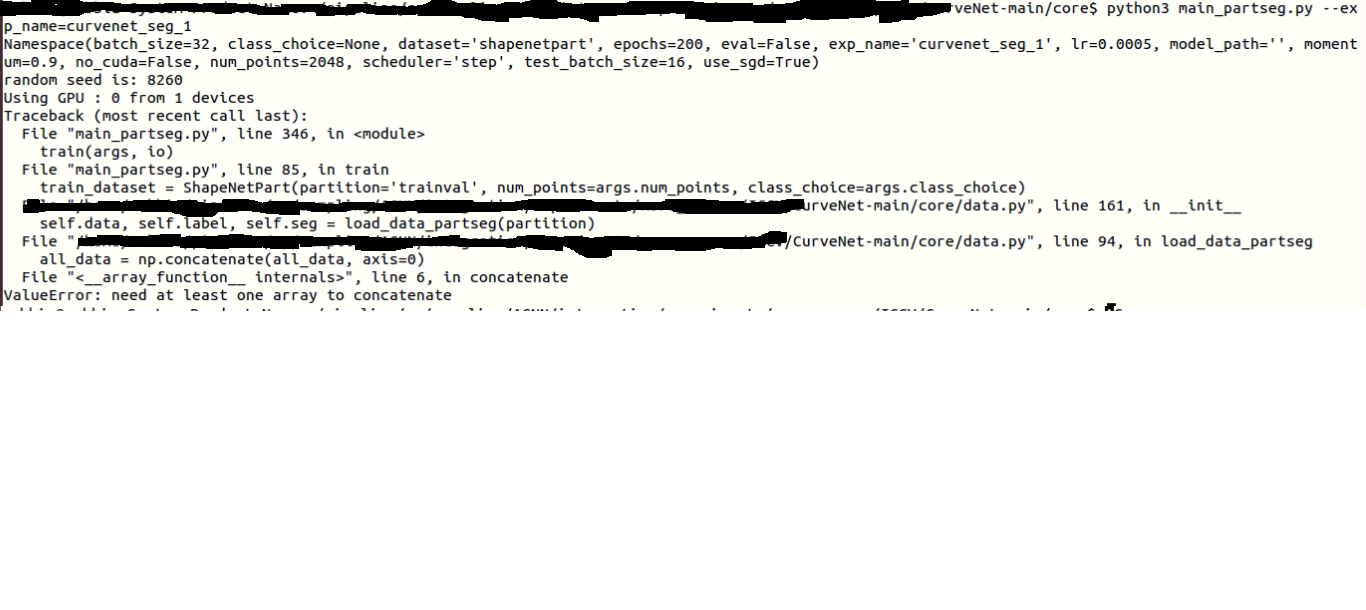

python3 main_partseg.py --exp_name=curvenet_seg_1

Train with customized settings using the flags: --lr, --scheduler, --batch_size.

Alternatively, you can directly modify core/start_part.sh and simply run:

./start_part.sh

NOTE: Our reported model achieves 86.6%/86.8% mIoU (see sections below). Because training involves randomness, reproducing the best result may take several training runs. We therefore also provide a second benchmark here: the average over 5 runs with different random seeds, which is 86.46% mIoU.
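As a reminder of what the mIoU figure measures, here is a minimal pure-Python sketch of a per-shape part IoU and its average over shapes. It is an illustration, not the repository's evaluation code: the function names are hypothetical, and it assumes the common convention that a part absent from both prediction and ground truth counts as IoU 1.

```python
def shape_iou(pred_labels, true_labels, part_ids):
    """Mean IoU over `part_ids` for a single shape."""
    pairs = list(zip(pred_labels, true_labels))
    ious = []
    for part in part_ids:
        inter = sum(1 for p, t in pairs if p == part and t == part)
        union = sum(1 for p, t in pairs if p == part or t == part)
        # Assumed convention: a part missing on both sides scores 1.
        ious.append(1.0 if union == 0 else inter / union)
    return sum(ious) / len(ious)


def instance_miou(shapes):
    """Average shape_iou over an iterable of (pred, true, part_ids) triples."""
    shapes = list(shapes)
    return sum(shape_iou(*s) for s in shapes) / len(shapes)
```

Whether the reported number averages over shapes or over categories is not stated here, so treat `instance_miou` as one possible reading.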

Evaluate without voting:

python3 main_partseg.py --exp_name=curvenet_seg_1 --eval=True --model_path=PATH_TO_YOUR_MODEL

Alternatively, you can directly modify core/test_part.sh and simply run:

./test_part.sh

For voting, we used the voting_evaluate_partseg.py script provided in RSCNN. Please refer to their license for usage.

Please download our pretrained model partseg/ from Google Drive.

And then run:

python3 main_partseg.py --exp_name=curvenet_seg_pretrained --eval=True --model_path=PATH_TO_PRETRAINED/partseg/models/model.t7

The ModelNet40 dataset is used for the normal estimation experiments. We have preprocessed the raw ModelNet40 dataset into .h5 files. Each point cloud instance contains 2048 randomly sampled points, each with a ground-truth normal.

Please download our processed data here and place it in data/, or specify the data root path in core/data.py.

Train with our default settings (same as in the paper):

python3 main_normal.py --exp_name=curvenet_normal_1

Train with customized settings using the flags: --multiplier, --lr, --scheduler, --batch_size.

Alternatively, you can directly modify core/start_normal.sh and simply run:

./start_normal.sh

Evaluate without voting:

python3 main_normal.py --exp_name=curvenet_normal_1 --eval=True --model_path=PATH_TO_YOUR_MODEL

Alternatively, you can directly modify core/test_normal.sh and simply run:

./test_normal.sh

Please download our pretrained model normal/ from Google Drive.

And then run:

python3 main_normal.py --exp_name=curvenet_normal_pretrained --eval=True --model_path=PATH_TO_PRETRAINED/normal/models/model.t7

In a recent work, Sun et al. studied the robustness of state-of-the-art point cloud processing architectures under common corruptions. They verified CurveNet to be the best-performing architecture under common corruptions. Please refer to their official repo for details.

If you find this repo useful in your work or research, please cite:

@InProceedings{Xiang_2021_ICCV,
  author = {Xiang, Tiange and Zhang, Chaoyi and Song, Yang and Yu, Jianhui and Cai, Weidong},
  title = {Walk in the Cloud: Learning Curves for Point Clouds Shape Analysis},
  booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
  month = {October},
  year = {2021},
  pages = {915-924}
}

Our code borrows a lot from: