VAME is a framework for clustering behavioral signals obtained from pose-estimation tools. It is a PyTorch-based deep learning framework that leverages recurrent neural networks (RNNs) to model sequential data. To learn the underlying complex data distribution, we use the RNN in a variational autoencoder setting to extract the latent state of the animal at every step of the input time series.
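To make the "RNN in a variational autoencoder setting" idea concrete, here is a minimal, dependency-free sketch that rests on several assumptions. A single-layer tanh (Elman) RNN with random, untrained weights stands in for the trained PyTorch encoder that VAME uses. A fixed-length window slides over the time series one frame at a time, each window is encoded into a Gaussian latent distribution (mean `mu`, log-variance `logvar`), and one latent vector is sampled per window with the reparameterization trick from Kingma & Welling. All function names, sizes, and the toy input are hypothetical; this is not VAME's model code.

```python
import math
import random


def rnn_encode(window, w_in, w_hh, b_h):
    """Run a single-layer tanh RNN over a window of frames; return the final hidden state."""
    hidden_size = len(b_h)
    h = [0.0] * hidden_size
    for x_t in window:  # x_t is one frame of pose features
        h = [
            math.tanh(
                sum(w * x for w, x in zip(w_in[i], x_t))
                + sum(w * hj for w, hj in zip(w_hh[i], h))
                + b_h[i]
            )
            for i in range(hidden_size)
        ]
    return h


def linear(h, w, b):
    """Affine map: w @ h + b."""
    return [sum(wij * hj for wij, hj in zip(row, h)) + bi for row, bi in zip(w, b)]


def reparameterize(mu, logvar, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1) and sigma = exp(0.5 * logvar)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]


def kl_divergence(mu, logvar):
    """Closed-form KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, logvar))


def embed_time_series(series, window_len, hidden_size=8, latent_size=2, seed=0):
    """Slide a window one frame at a time and sample one latent vector per window."""
    rng = random.Random(seed)
    n_features = len(series[0])

    def rand_matrix(rows, cols):
        # Untrained random weights, for illustration only.
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

    w_in, w_hh = rand_matrix(hidden_size, n_features), rand_matrix(hidden_size, hidden_size)
    b_h = [0.0] * hidden_size
    w_mu, w_lv = rand_matrix(latent_size, hidden_size), rand_matrix(latent_size, hidden_size)
    b_mu, b_lv = [0.0] * latent_size, [0.0] * latent_size

    latents = []
    for start in range(len(series) - window_len + 1):
        h = rnn_encode(series[start:start + window_len], w_in, w_hh, b_h)
        mu, logvar = linear(h, w_mu, b_mu), linear(h, w_lv, b_lv)
        latents.append(reparameterize(mu, logvar, rng))
    return latents


if __name__ == "__main__":
    # Toy "pose" series: 100 frames, 4 features (e.g. x/y of two hypothetical keypoints).
    series = [[math.sin(0.1 * t), math.cos(0.1 * t), 0.5, -0.5] for t in range(100)]
    z = embed_time_series(series, window_len=30)
    print(len(z), len(z[0]))  # 71 windows, 2 latent dimensions each
```

In a trained VAE, the KL term is added to a reconstruction loss so that the latent space stays close to the standard normal prior; `kl_divergence` is included here only to show that term, not to train anything.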

The workflow of VAME consists of 5 steps, which we explain in detail here.

To get started, we recommend using Anaconda with Python 3.6 or higher.

Here, you can create a virtual environment to store all the dependencies necessary for VAME. (You can also use the VAME.yaml file supplied here by simply opening a terminal, running `git clone https://github.com/LINCellularNeuroscience/VAME.git`, then `cd VAME`, and finally `conda env create -f VAME.yaml`.)

- Go to the locally cloned VAME directory and run `python setup.py install` to install VAME in your active conda environment.
- Install the current stable PyTorch release using the OS-dependent instructions from the PyTorch website. Currently, VAME is tested on PyTorch 1.5. (Note: if you use the conda file we supply, PyTorch is already installed and you don't need to do this step.)

First, make sure that you have a GPU powerful enough to train deep learning networks. In our paper, we used a single NVIDIA GTX 1080 Ti GPU to train our network. A hardware guide can be found here. Once your hardware is ready, try VAME by following the workflow guide.
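Before training, it can help to confirm that Python, PyTorch, and the GPU are visible from the active conda environment. The helper below, `check_environment`, is a hypothetical sketch and not part of VAME. It relies only on `torch.__version__`, `torch.cuda.is_available()`, and `torch.cuda.get_device_name()` from PyTorch, and reports `None`/`False` when PyTorch is not installed. It cannot tell whether a GPU is powerful enough; compare the reported device name against the hardware guide.

```python
import importlib.util
import sys


def check_environment(min_python=(3, 6)):
    """Report whether the Python version and the PyTorch/GPU setup look usable."""
    report = {
        "python_version": "{}.{}.{}".format(*sys.version_info[:3]),
        "python_ok": sys.version_info[:2] >= min_python,
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "torch_version": None,
        "cuda_available": False,
        "gpu_name": None,
    }
    if report["torch_installed"]:
        import torch  # imported lazily so the check also works without PyTorch

        report["torch_version"] = torch.__version__
        report["cuda_available"] = torch.cuda.is_available()
        if report["cuda_available"]:
            report["gpu_name"] = torch.cuda.get_device_name(0)  # first visible GPU
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```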

If you want to follow an example first, you can download video-1 here and find the .csv file in our example folder.
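To get a first look at a pose file like the example .csv, you can parse it with the standard library. This sketch assumes the file uses DeepLabCut's CSV layout: three header rows labelled `scorer`, `bodyparts`, and `coords`, followed by one row per frame with `x`, `y`, and `likelihood` columns for each body part. The function `read_pose_csv` and its `min_likelihood` threshold are hypothetical and not part of VAME.

```python
import csv
import io


def read_pose_csv(handle, min_likelihood=0.0):
    """Parse a DeepLabCut-style pose CSV into {bodypart: [(x, y) or None, ...]}."""
    reader = csv.reader(handle)
    next(reader)  # "scorer" row: network name, not needed here
    bodyparts = next(reader)[1:]  # skip the leading label column
    coords = next(reader)[1:]
    columns = list(zip(bodyparts, coords))
    poses = {bp: [] for bp in bodyparts}  # dict keeps first-seen order, drops repeats

    for row in reader:
        if not row:
            continue  # tolerate a trailing blank line
        frame = {}
        for (bodypart, coord), value in zip(columns, row[1:]):  # row[0] is the frame index
            frame.setdefault(bodypart, {})[coord] = float(value)
        for bodypart, c in frame.items():
            # Mask low-confidence detections instead of trusting them.
            if c["likelihood"] < min_likelihood:
                poses[bodypart].append(None)
            else:
                poses[bodypart].append((c["x"], c["y"]))
    return poses


if __name__ == "__main__":
    example = (
        "scorer,net,net,net,net,net,net\n"
        "bodyparts,nose,nose,nose,tailbase,tailbase,tailbase\n"
        "coords,x,y,likelihood,x,y,likelihood\n"
        "0,10.0,20.0,0.99,30.0,40.0,0.95\n"
        "1,11.0,21.0,0.10,31.0,41.0,0.97\n"
    )
    poses = read_pose_csv(io.StringIO(example), min_likelihood=0.5)
    print(poses["nose"])      # [(10.0, 20.0), None]
    print(poses["tailbase"])  # [(30.0, 40.0), (31.0, 41.0)]
```

For the real file, pass an open handle instead, e.g. `open(path, newline="")`, where `path` points to the .csv in the example folder.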

- November 2022: Finally, the VAME paper is published! Check it out on the publisher's website. Compared to the preprint version, it also includes a practical workflow guide with many useful instructions on how to use VAME.

- March 2021: We are happy to release VAME 1.0 with a bunch of improvements and new features! These include the community analysis script, a model that can generate unseen data points, new visualization functions, and the much-requested function to generate GIF sequences containing UMAP embeddings and trajectories together with the video of the behaving animal. Big thanks also to @MMathisLab for contributing to the OS compatibility and usability of our code.

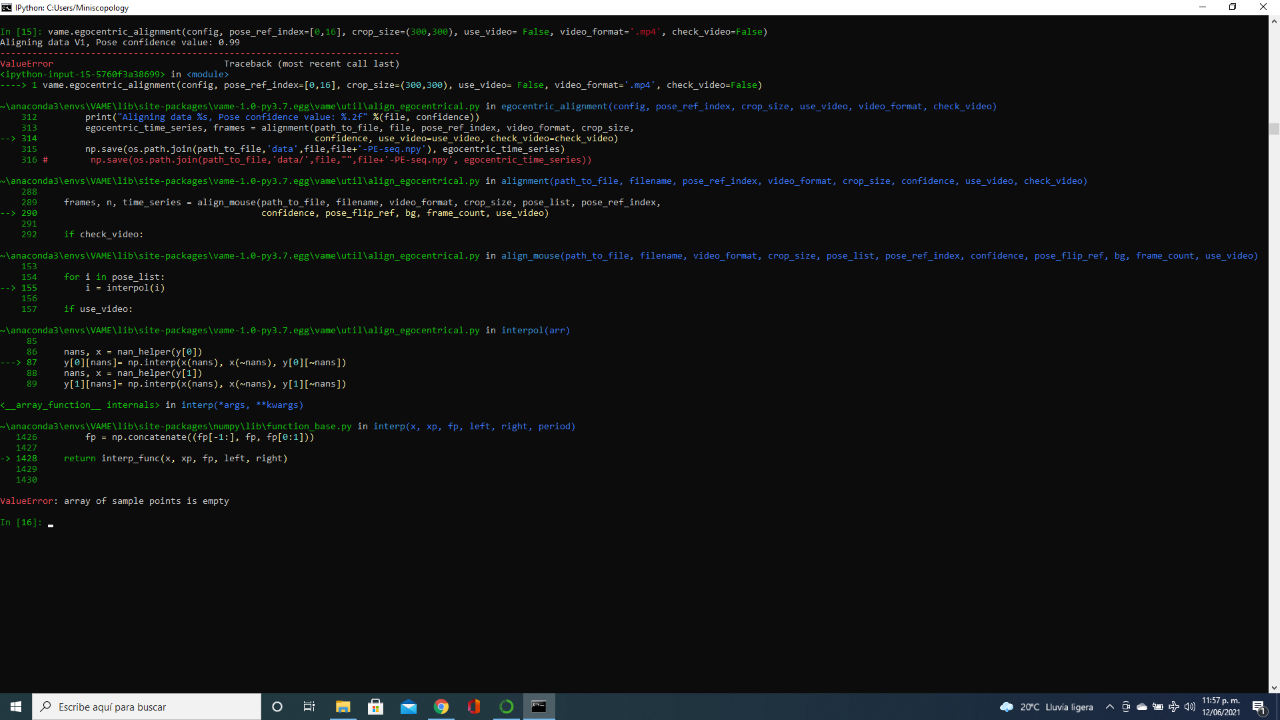

- November 2020: We uploaded an egocentric alignment script to allow more researchers to use VAME.

- October 2020: We updated our manuscript on bioRxiv.

- May 2020: Our preprint "Identifying Behavioral Structure from Deep Variational Embeddings of Animal Motion" is out! Read it on bioRxiv!
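The November 2020 item mentions egocentric alignment. The general idea is to express each frame's keypoints relative to the animal's own position and heading, so that downstream models see posture rather than where the animal happens to be in the arena. The sketch below shows this as a generic translate-and-rotate transform on 2D keypoints. It is not the code of VAME's alignment script, and the function names and body-part names (`tailbase`, `nose`, `ear`) are hypothetical.

```python
import math


def egocentric_align(frame, origin_part, heading_part):
    """Express one frame of 2D keypoints in an animal-centred coordinate system.

    frame maps body-part name -> (x, y) in image coordinates. After alignment,
    origin_part sits at (0, 0) and heading_part lies on the positive x-axis.
    """
    ox, oy = frame[origin_part]
    hx, hy = frame[heading_part]
    angle = math.atan2(hy - oy, hx - ox)  # current heading of the animal
    cos_a, sin_a = math.cos(-angle), math.sin(-angle)  # rotate by -angle to undo it
    aligned = {}
    for part, (x, y) in frame.items():
        dx, dy = x - ox, y - oy  # translate so origin_part is at (0, 0)
        aligned[part] = (dx * cos_a - dy * sin_a, dx * sin_a + dy * cos_a)
    return aligned


def align_sequence(frames, origin_part, heading_part):
    """Apply the alignment frame by frame to a whole recording."""
    return [egocentric_align(f, origin_part, heading_part) for f in frames]


if __name__ == "__main__":
    frame = {"tailbase": (2.0, 3.0), "nose": (2.0, 7.0), "ear": (1.0, 5.0)}
    for part, (x, y) in egocentric_align(frame, "tailbase", "nose").items():
        print(part, round(x, 6), round(y, 6))
```

Because the transform is a pure rotation plus translation, distances between keypoints are preserved; only the reference frame changes.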

VAME was developed by Kevin Luxem and Pavol Bauer.

The development of VAME is heavily inspired by DeepLabCut. As such, the VAME project management codebase has been adapted from the DeepLabCut codebase. The DeepLabCut 2.0 toolbox is © A. & M.W. Mathis Labs (deeplabcut.org), released under LGPL v3.0. The implementation of the VRAE model is partially adapted from the Timeseries clustering repository developed by Tejas Lodaya.

VAME preprint: Identifying Behavioral Structure from Deep Variational Embeddings of Animal Motion

Kingma & Welling: Auto-Encoding Variational Bayes

Pereira & Silveira: Learning Representations from Healthcare Time Series Data for Unsupervised Anomaly Detection

See the LICENSE file for the full statement.