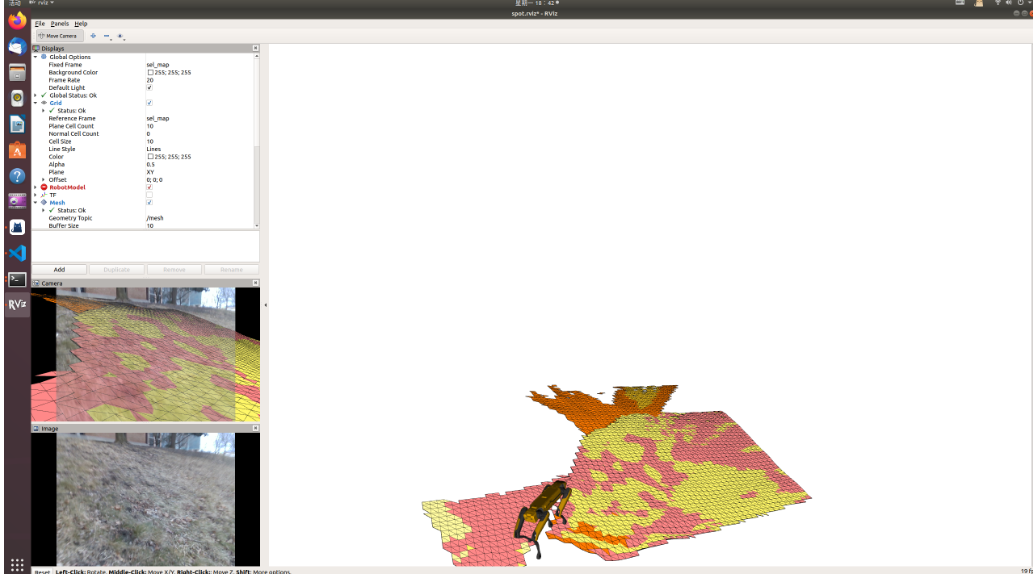

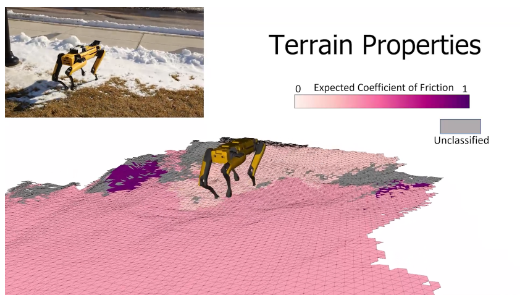

Hello, and thanks for your work. I was trying it out but ran into some problems. I also tried running the other launch file, but had issues there too. Without the config or terrain-properties arguments, it launches, but only the wireframe mesh is visible, with no semantic classes. When I explicitly pass the config and terrain-properties arguments, I get the following error:

N.B.: I ran `pip3 install pytorch-encoding`, and it installed successfully, but the error did not change at all.

```
arghya@arghya-Pulse-GL66-12UEK:~/sel_map_ws$ roslaunch sel_map spot_bag_sel.launch semseg_config:=Encoding_ResNet50_PContext_full.yaml terrain_properties:=pascal_context.yaml
... logging to /home/arghya/.ros/log/dd6e5c2a-e2ba-11ed-b832-d34792068a9c/roslaunch-arghya-Pulse-GL66-12UEK-1075380.log
Checking log directory for disk usage. This may take a while.
Press Ctrl-C to interrupt
Done checking log file disk usage. Usage is <1GB.

started roslaunch server http://127.0.0.1:38337/

SUMMARY
========

PARAMETERS
 * /robot_description: <?xml version="1....
 * /rosdistro: noetic
 * /rosversion: 1.16.0
 * /sel_map/cameras_registered/realsense/camera_info: /zed2/zed_node/de...
 * /sel_map/cameras_registered/realsense/depth_registered: /zed2/zed_node/de...
 * /sel_map/cameras_registered/realsense/image_rectified: /zed2/zed_node/le...
 * /sel_map/cameras_registered/realsense/pose:
 * /sel_map/cameras_registered/realsense/pose_with_covariance:
 * /sel_map/colorscale/ends/one: 0,0,0
 * /sel_map/colorscale/ends/zero: 255,255,255
 * /sel_map/colorscale/stops: [0.2, 0.8]
 * /sel_map/colorscale/type: linear_ends
 * /sel_map/colorscale/unknown: 120,120,120
 * /sel_map/colorscale/values: ['252,197,192', '...
 * /sel_map/enable_mat_display: True
 * /sel_map/num_cameras: 1
 * /sel_map/point_limit: 20
 * /sel_map/publish_rate: 20.0
 * /sel_map/queue_size: 2
 * /sel_map/robot_base: base_link
 * /sel_map/save_classes: True
 * /sel_map/save_confidence: False
 * /sel_map/save_interval: 0.0
 * /sel_map/save_mesh_location:
 * /sel_map/semseg/extra_args: []
 * /sel_map/semseg/model: Encnet_ResNet50s_...
 * /sel_map/semseg/num_labels: 59
 * /sel_map/semseg/onehot_projection: False
 * /sel_map/semseg/ongpu_projection: True
 * /sel_map/semseg/package: pytorch_encoding_...
 * /sel_map/sync_slop: 0.3
 * /sel_map/terrain_properties: /home/arghya/sel_...
 * /sel_map/update_policy: fifo
 * /sel_map/world_base: odom
 * /use_sim_time: True

NODES
  /
    rviz (rviz/rviz)
  /sel_map/
    sel_map (sel_map/main.py)
    static_tf_linker (sel_map_utils/StaticTFLinker.py)

ROS_MASTER_URI=http://127.0.0.1:11311

process[rviz-1]: started with pid [1075437]
process[sel_map/static_tf_linker-2]: started with pid [1075438]
process[sel_map/sel_map-3]: started with pid [1075439]
[ INFO] [1682360055.061867615]: rviz version 1.14.20
[ INFO] [1682360055.061904828]: compiled against Qt version 5.12.8
[ INFO] [1682360055.061924301]: compiled against OGRE version 1.9.0 (Ghadamon)
[ INFO] [1682360055.069993134]: Forcing OpenGl version 0.
[ INFO] [1682360055.178432992]: Stereo is NOT SUPPORTED
[ INFO] [1682360055.178490269]: OpenGL device: Mesa Intel(R) Graphics (ADL GT2)
[ INFO] [1682360055.178499632]: OpenGl version: 4.6 (GLSL 4.6) limited to GLSL 1.4 on Mesa system.
[INFO] [1682360055.222487, 0.000000]: Spinning all linked static TF frames until killed
[ INFO] [1682360055.431311840]: Mesh Display: Update
[ERROR] [1682360055.431363477]: Mesh display: no visual available, can't draw mesh! (maybe no data has been received yet?)
[ INFO] [1682360055.431451204]: Mesh Display: Update
[ERROR] [1682360055.431459554]: Mesh display: no visual available, can't draw mesh! (maybe no data has been received yet?)
[ INFO] [1682360055.433126891]: No initial data available, waiting for callback to trigger ...
[ INFO] [1682360055.433149737]: Mesh Display: Update
[ERROR] [1682360055.433164985]: Mesh display: no visual available, can't draw mesh! (maybe no data has been received yet?)
[ INFO] [1682360055.433181396]: Mesh Display: Update
[ERROR] [1682360055.433187710]: Mesh display: no visual available, can't draw mesh! (maybe no data has been received yet?)
[ INFO] [1682360055.433216312]: Mesh Display: Update
[ERROR] [1682360055.433222597]: Mesh display: no visual available, can't draw mesh! (maybe no data has been received yet?)
[ INFO] [1682360055.434230655]: Mesh Display: Update
[ERROR] [1682360055.434238770]: Mesh display: no visual available, can't draw mesh! (maybe no data has been received yet?)
Traceback (most recent call last):
  File "/home/arghya/sel_map_ws/devel/lib/sel_map/main.py", line 15, in <module>
    exec(compile(fh.read(), python_script, 'exec'), context)
  File "/home/arghya/sel_map_ws/src/sel_map/sel_map/scripts/main.py", line 171, in <module>
    sel_map_node(mesh_bounds, elem_size, threshold)
  File "/home/arghya/sel_map_ws/src/sel_map/sel_map/scripts/main.py", line 132, in sel_map_node
    segmentation_network = CameraSensor()
  File "/home/arghya/sel_map_ws/src/sel_map/sel_map_segmentation/sel_map_segmentation/src/sel_map_segmentation/cameraSensor.py", line 45, in __init__
    SemsegNetworkWrapper = importlib.import_module(wrapper_package).SemsegNetworkWrapper
  File "/usr/lib/python3.8/importlib/__init__.py", line 127, in import_module
    return _bootstrap._gcd_import(name[level:], package, level)
  File "<frozen importlib._bootstrap>", line 1014, in _gcd_import
  File "<frozen importlib._bootstrap>", line 991, in _find_and_load
  File "<frozen importlib._bootstrap>", line 973, in _find_and_load_unlocked
ModuleNotFoundError: No module named 'pytorch_encoding_wrapper'
[sel_map/sel_map-3] process has died [pid 1075439, exit code 1, cmd /home/arghya/sel_map_ws/devel/lib/sel_map/main.py 10 10 4 0.05 10 mesh:=/mesh mesh/costs:=/mesh/costs get_materials:=/get_materials __name:=sel_map __log:=/home/arghya/.ros/log/dd6e5c2a-e2ba-11ed-b832-d34792068a9c/sel_map-sel_map-3.log].
log file: /home/arghya/.ros/log/dd6e5c2a-e2ba-11ed-b832-d34792068a9c/sel_map-sel_map-3*.log
```
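The traceback shows `cameraSensor.py` calling `importlib.import_module(wrapper_package)`, and the lookup for `pytorch_encoding_wrapper` failing. A minimal sketch for reproducing that lookup outside of `roslaunch` is below; it assumes it is run with the same Python 3.8 interpreter and the same sourced workspace environment as the node. The second name, `encoding`, is only an assumption about the import name of the pip package `pytorch-encoding`, included to show whether the pip install and the missing wrapper are the same module.

```python
import importlib.util
import sys

# "pytorch_encoding_wrapper" comes from the traceback above.
# "encoding" is an ASSUMED import name for the pip package pytorch-encoding.
CANDIDATES = ["pytorch_encoding_wrapper", "encoding"]


def check_modules(names):
    """Map each top-level module name to its ModuleSpec, or None if it cannot be found."""
    return {name: importlib.util.find_spec(name) for name in names}


if __name__ == "__main__":
    # Show which interpreter is doing the lookup; it should match the one ROS uses.
    print("Interpreter:", sys.executable)
    for name, spec in check_modules(CANDIDATES).items():
        if spec is None:
            print(f"{name}: NOT FOUND")
        else:
            print(f"{name}: found at {spec.origin}")
```

If `pytorch_encoding_wrapper` is reported as not found while the workspace is sourced, the wrapper package itself is not on `sys.path`, independent of whether the pip package installed correctly.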

What should I do now? Thanks in advance.