Hi, I get this error with every combination of CUDA, VPI, dnn, cuDNN and OpenCV that I try. It also happened to me on a Jetson NX a while ago, always on Ubuntu 20.04.

colcon build && source install/setup.bash

[0.283s] WARNING:colcon.colcon_core.verb:Some selected packages are already built in one or more underlay workspaces:

'image_geometry' is in: /opt/ros/foxy

'image_transport' is in: /opt/ros/foxy

'rcpputils' is in: /opt/ros/foxy

'camera_calibration_parsers' is in: /opt/ros/foxy

'cv_bridge' is in: /opt/ros/foxy

'camera_info_manager' is in: /opt/ros/foxy

If a package in a merged underlay workspace is overridden and it installs headers, then all packages in the overlay must sort their include directories by workspace order. Failure to do so may result in build failures or undefined behavior at run time.

If the overridden package is used by another package in any underlay, then the overriding package in the overlay must be API and ABI compatible or undefined behavior at run time may occur.

If you understand the risks and want to override a package anyways, add the following to the command line:

--allow-overriding camera_calibration_parsers camera_info_manager cv_bridge image_geometry image_transport rcpputils

This may be promoted to an error in a future release of colcon-core.

Starting >>> rcpputils

Starting >>> image_transport

Starting >>> isaac_ros_test

Starting >>> isaac_ros_nvengine_interfaces

Starting >>> isaac_ros_common

Starting >>> nvblox_msgs

Starting >>> image_geometry

Starting >>> isaac_ros_apriltag_interfaces

Starting >>> isaac_ros_visual_slam_interfaces

Starting >>> vision_msgs

Starting >>> nvblox_isaac_sim

Finished <<< isaac_ros_test [1.48s]

Finished <<< nvblox_isaac_sim [1.46s]

Finished <<< isaac_ros_common [8.47s]

Finished <<< image_geometry [13.3s]

Finished <<< isaac_ros_nvengine_interfaces [13.3s]

Starting >>> isaac_ros_nvengine

Finished <<< nvblox_msgs [13.5s]

Starting >>> nvblox_ros

Starting >>> nvblox_nav2

Starting >>> nvblox_rviz_plugin

Finished <<< rcpputils [13.7s]

Starting >>> cv_bridge

Starting >>> camera_calibration_parsers

Finished <<< isaac_ros_apriltag_interfaces [15.0s]

Finished <<< isaac_ros_visual_slam_interfaces [17.5s]

Finished <<< vision_msgs [22.6s]

Finished <<< camera_calibration_parsers [9.09s]

Starting >>> camera_info_manager

Finished <<< image_transport [25.8s]

Finished <<< camera_info_manager [4.91s]

Starting >>> image_common

Finished <<< image_common [0.89s]

Finished <<< cv_bridge [16.0s]

Starting >>> isaac_ros_dnn_encoders

Starting >>> isaac_ros_image_proc

Starting >>> isaac_ros_stereo_image_proc

Starting >>> opencv_tests

Starting >>> vision_opencv

Finished <<< isaac_ros_nvengine [17.1s]

Starting >>> isaac_ros_tensor_rt

Starting >>> isaac_ros_dnn_inference_test

Finished <<< vision_opencv [1.37s]

Finished <<< opencv_tests [1.40s]

Finished <<< nvblox_nav2 [17.9s]

Finished <<< isaac_ros_tensor_rt [5.45s]

Finished <<< nvblox_rviz_plugin [22.8s]

Finished <<< isaac_ros_dnn_inference_test [9.20s]

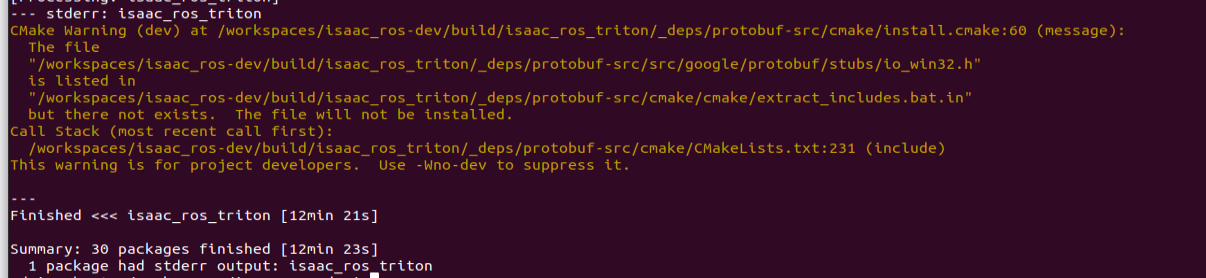

Starting >>> isaac_ros_triton

Finished <<< isaac_ros_triton [4.91s]

--- stderr: isaac_ros_dnn_encoders

/usr/bin/ld: libdnn_image_encoder_node.so: undefined reference to `cv::dnn::dnn4_v20211220::blobFromImage(cv::_InputArray const&, double, cv::Size_<int> const&, cv::Scalar_<double> const&, bool, bool, int)'

collect2: error: ld returned 1 exit status

make[2]: *** [CMakeFiles/dnn_image_encoder.dir/build.make:163: dnn_image_encoder] Error 1

make[1]: *** [CMakeFiles/Makefile2:80: CMakeFiles/dnn_image_encoder.dir/all] Error 2

make: *** [Makefile:141: all] Error 2

Failed <<< isaac_ros_dnn_encoders [15.7s, exited with code 2]

Aborted <<< isaac_ros_image_proc [22.9s]

Aborted <<< isaac_ros_stereo_image_proc [48.6s]

Aborted <<< nvblox_ros [1min 41s]

Summary: 23 packages finished [1min 55s]

1 package failed: isaac_ros_dnn_encoders

3 packages aborted: isaac_ros_image_proc isaac_ros_stereo_image_proc nvblox_ros

3 packages had stderr output: isaac_ros_dnn_encoders isaac_ros_image_proc isaac_ros_stereo_image_proc

8 packages not processed

igcs@igcs:~/isaac_ws$
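
In case it helps anyone reading the log above: the undefined symbol lives in a versioned OpenCV namespace (`dnn4_v20211220`), so one possible cause is that the package was compiled against one OpenCV's headers and linked against a different OpenCV's libraries. That is a guess, not a confirmed diagnosis. Below is a minimal Python sketch for checking it. The helper names `parse_undefined_dnn_symbol` and `header_api_version` are made up for illustration, and the sketch assumes each installed OpenCV defines `OPENCV_DNN_API_VERSION` in its `opencv2/dnn/version.hpp`.

```python
import re

# Versioned dnn inline namespace as it appears in the linker error,
# e.g. "cv::dnn::dnn4_v20211220::blobFromImage".
DNN_SYMBOL = re.compile(r"cv::dnn::(dnn\d+_v(\d{8}))::(\w+)")

# Assumed form of the define in opencv2/dnn/version.hpp.
API_DEFINE = re.compile(
    r"^\s*#\s*define\s+OPENCV_DNN_API_VERSION\s+(\d{8})", re.MULTILINE
)


def parse_undefined_dnn_symbol(line):
    """Return (namespace_tag, date_stamp, function) for an
    'undefined reference' line naming a cv::dnn symbol, else None."""
    if "undefined reference" not in line:
        return None
    match = DNN_SYMBOL.search(line)
    if match is None:
        return None
    return match.group(1), match.group(2), match.group(3)


def header_api_version(header_text):
    """Return the 8-digit dnn API date stamp found in the text of a
    version.hpp file, or None if the define is not present."""
    match = API_DEFINE.search(header_text)
    return match.group(1) if match else None


if __name__ == "__main__":
    # Linker line from the log, with the argument list abbreviated.
    error = (
        "/usr/bin/ld: libdnn_image_encoder_node.so: undefined reference to "
        "`cv::dnn::dnn4_v20211220::blobFromImage(...)'"
    )
    tag, stamp, func = parse_undefined_dnn_symbol(error)
    print(f"{func} was compiled against dnn API {stamp} ({tag})")
```

To use it, read each `opencv2/dnn/version.hpp` found on the machine and pass its text to `header_api_version`. If one copy reports `20211220` and another reports a different stamp, the build is probably picking up headers from the first and libraries from the second.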