rahul@Rahul:~/aruco_drone_ws$ catkin_make

Base path: /home/rahul/aruco_drone_ws

Source space: /home/rahul/aruco_drone_ws/src

Build space: /home/rahul/aruco_drone_ws/build

Devel space: /home/rahul/aruco_drone_ws/devel

Install space: /home/rahul/aruco_drone_ws/install

Running command: "make cmake_check_build_system" in "/home/rahul/aruco_drone_ws/build"

Running command: "make -j8 -l8" in "/home/rahul/aruco_drone_ws/build"

[ 0%] Built target geometry_msgs_generate_messages_py

[ 0%] Built target std_msgs_generate_messages_cpp

[ 0%] Built target std_msgs_generate_messages_py

[ 0%] Built target std_msgs_generate_messages_eus

[ 0%] Built target geometry_msgs_generate_messages_cpp

[ 0%] Built target std_msgs_generate_messages_lisp

[ 0%] Built target geometry_msgs_generate_messages_eus

[ 0%] Built target geometry_msgs_generate_messages_lisp

[ 2%] Built target aruco

[ 2%] Built target geometry_msgs_generate_messages_nodejs

[ 2%] Built target std_msgs_generate_messages_nodejs

[ 2%] Built target _aruco_msgs_generate_messages_check_deps_Marker

[ 2%] Built target _aruco_msgs_generate_messages_check_deps_MarkerArray

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_RC

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_HeadingCommand

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_ThrustCommand

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_ServoCommand

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_MotorCommand

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_AttitudeCommand

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_Compass

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_YawrateCommand

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_HeightCommand

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_Supply

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_VelocityZCommand

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_RawImu

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_VelocityXYCommand

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_RuddersCommand

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_RawRC

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_MotorStatus

[ 3%] Built target pal_vision_segmentation_gencfg

[ 3%] Building CXX object pal_vision_segmentation/CMakeFiles/pal_vision_segmentation.dir/src/image_processing.cpp.o

[ 3%] Built target _cvg_sim_msgs_generate_messages_check_deps_Altitude

[ 3%] Built target _cvg_sim_msgs_generate_messages_check_deps_MotorPWM

[ 3%] Built target _cvg_sim_msgs_generate_messages_check_deps_ControllerState

[ 3%] Built target _cvg_sim_msgs_generate_messages_check_deps_RawMagnetic

[ 3%] Performing update step for 'ardronelib'

[ 3%] Built target _cvg_sim_msgs_generate_messages_check_deps_Altimeter

[ 3%] Built target _cvg_sim_msgs_generate_messages_check_deps_PositionXYCommand

[ 3%] Performing configure step for 'ardronelib'

No configure

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_time

[ 3%] Performing build step for 'ardronelib'

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_adc_data_frame

make[3]: warning: jobserver unavailable: using -j1. Add '+' to parent make rule.

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_pressure_raw

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_altitude

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_watchdog

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_euler_angles

Libs already extracted

Building target static

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_references

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_demo

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_video_stream

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_RecordEnable

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_zimmu_3000

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_trims

Architecture x86_64 is already built

Creating universal static lib file from architectures x86_64

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_matrix33

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_magneto

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_CamSelect

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_wifi

Build done.

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_gyros_offsets

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_FlightAnim

Building ARDroneTool/Lib

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_hdvideo_stream

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_vector21

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_kalman_pressure

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_trackers_send

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_phys_measures

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_pwm

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_raw_measures

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_vector31

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_LedAnim

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_games

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_rc_references

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_vision_of

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_vision_raw

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_Navdata

[ 3%] Built target aruco_ros_gencfg

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_vision

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_vision_perf

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_wind_speed

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_vision_detect

[ 3%] Built target visualization_msgs_generate_messages_py

[ 3%] Built target dynamic_reconfigure_generate_messages_nodejs

[ 4%] Built target aruco_ros_utils

[ 4%] Built target dynamic_reconfigure_generate_messages_eus

[ 4%] Built target sensor_msgs_generate_messages_cpp

[ 4%] Built target roscpp_generate_messages_eus

[ 4%] Built target sensor_msgs_generate_messages_lisp

[ 4%] Built target dynamic_reconfigure_generate_messages_cpp

[ 4%] Built target sensor_msgs_generate_messages_py

[ 4%] Built target sensor_msgs_generate_messages_eus

[ 4%] Built target rosgraph_msgs_generate_messages_cpp

[ 4%] Built target sensor_msgs_generate_messages_nodejs

[ 4%] Built target dynamic_reconfigure_gencfg

/home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp: In function ‘void pal_vision_util::dctNormalization(const cv::Mat&, cv::Mat&, int, const pal_vision_util::ImageRoi&)’:

/home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp:474:53: error: no matching function for call to ‘cv::Mat::Mat(IplImage*, bool)’

normalizedImg = cv::Mat(output.getIplImg(), true);

^

In file included from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core.hpp:59:0,

from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/core.hpp:48,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/include/pal_vision_segmentation/image_processing.h:41,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp:37:

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:1029:14: note: candidate: cv::Mat::Mat(const cv::cuda::GpuMat&)

explicit Mat(const cuda::GpuMat& m);

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:1029:14: note: candidate expects 1 argument, 2 provided

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:1026:37: note: candidate: template<class _Tp> cv::Mat::Mat(const cv::MatCommaInitializer_<_Tp>&)

template<typename _Tp> explicit Mat(const MatCommaInitializer_<_Tp>& commaInitializer);

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:1026:37: note: template argument deduction/substitution failed:

/home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp:474:53: note: mismatched types ‘const cv::MatCommaInitializer_<_Tp>’ and ‘IplImage* {aka _IplImage*}’

normalizedImg = cv::Mat(output.getIplImg(), true);

^

In file included from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core.hpp:59:0,

from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/core.hpp:48,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/include/pal_vision_segmentation/image_processing.h:41,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp:37:

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:1022:37: note: candidate: template<class _Tp> cv::Mat::Mat(const cv::Point3_<_Tp>&, bool)

template<typename _Tp> explicit Mat(const Point3_<_Tp>& pt, bool copyData=true);

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:1022:37: note: template argument deduction/substitution failed:

/home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp:474:53: note: mismatched types ‘const cv::Point3_<_Tp>’ and ‘IplImage* {aka _IplImage*}’

normalizedImg = cv::Mat(output.getIplImg(), true);

^

In file included from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core.hpp:59:0,

from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/core.hpp:48,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/include/pal_vision_segmentation/image_processing.h:41,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp:37:

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:1018:37: note: candidate: template<class _Tp> cv::Mat::Mat(const cv::Point_<_Tp>&, bool)

template<typename _Tp> explicit Mat(const Point_<_Tp>& pt, bool copyData=true);

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:1018:37: note: template argument deduction/substitution failed:

/home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp:474:53: note: mismatched types ‘const cv::Point_<_Tp>’ and ‘IplImage* {aka _IplImage*}’

normalizedImg = cv::Mat(output.getIplImg(), true);

^

In file included from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core.hpp:59:0,

from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/core.hpp:48,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/include/pal_vision_segmentation/image_processing.h:41,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp:37:

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:1014:51: note: candidate: template<class _Tp, int m, int n> cv::Mat::Mat(const cv::Matx<_Tp, m, n>&, bool)

template<typename _Tp, int m, int n> explicit Mat(const Matx<_Tp, m, n>& mtx, bool copyData=true);

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:1014:51: note: template argument deduction/substitution failed:

/home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp:474:53: note: mismatched types ‘const cv::Matx<_Tp, m, n>’ and ‘IplImage* {aka _IplImage*}’

normalizedImg = cv::Mat(output.getIplImg(), true);

^

In file included from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core.hpp:59:0,

from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/core.hpp:48,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/include/pal_vision_segmentation/image_processing.h:41,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp:37:

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:1010:44: note: candidate: template<class _Tp, int n> cv::Mat::Mat(const cv::Vec<_Tp, m>&, bool)

template<typename _Tp, int n> explicit Mat(const Vec<_Tp, n>& vec, bool copyData=true);

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:1010:44: note: template argument deduction/substitution failed:

/home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp:474:53: note: mismatched types ‘const cv::Vec<_Tp, m>’ and ‘IplImage* {aka _IplImage*}’

normalizedImg = cv::Mat(output.getIplImg(), true);

^

In file included from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core.hpp:59:0,

from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/core.hpp:48,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/include/pal_vision_segmentation/image_processing.h:41,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp:37:

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:993:37: note: candidate: template<class _Tp> cv::Mat::Mat(const std::vector<_Tp>&, bool)

template<typename _Tp> explicit Mat(const std::vector<_Tp>& vec, bool copyData=false);

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:993:37: note: template argument deduction/substitution failed:

/home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp:474:53: note: mismatched types ‘const std::vector<_Tp>’ and ‘IplImage* {aka _IplImage*}’

normalizedImg = cv::Mat(output.getIplImg(), true);

^

In file included from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core.hpp:59:0,

from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/core.hpp:48,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/include/pal_vision_segmentation/image_processing.h:41,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp:37:

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:975:5: note: candidate: cv::Mat::Mat(const cv::Mat&, const std::vector<cv::Range>&)

Mat(const Mat& m, const std::vector<Range>& ranges);

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:975:5: note: no known conversion for argument 1 from ‘IplImage* {aka _IplImage*}’ to ‘const cv::Mat&’

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:965:5: note: candidate: cv::Mat::Mat(const cv::Mat&, const cv::Range*)

Mat(const Mat& m, const Range* ranges);

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:965:5: note: no known conversion for argument 1 from ‘IplImage* {aka _IplImage*}’ to ‘const cv::Mat&’

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:955:5: note: candidate: cv::Mat::Mat(const cv::Mat&, const Rect&)

Mat(const Mat& m, const Rect& roi);

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:955:5: note: no known conversion for argument 1 from ‘IplImage* {aka _IplImage*}’ to ‘const cv::Mat&’

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:945:5: note: candidate: cv::Mat::Mat(const cv::Mat&, const cv::Range&, const cv::Range&)

Mat(const Mat& m, const Range& rowRange, const Range& colRange=Range::all());

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:945:5: note: no known conversion for argument 1 from ‘IplImage* {aka _IplImage*}’ to ‘const cv::Mat&’

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:933:5: note: candidate: cv::Mat::Mat(const std::vector<int>&, int, void*, const size_t*)

Mat(const std::vector<int>& sizes, int type, void* data, const size_t* steps=0);

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:933:5: note: candidate expects 4 arguments, 2 provided

[ 4%] Built target dynamic_reconfigure_generate_messages_py

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:919:5: note: candidate: cv::Mat::Mat(int, const int*, int, void*, const size_t*)

Mat(int ndims, const int* sizes, int type, void* data, const size_t* steps=0);

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:919:5: note: candidate expects 5 arguments, 2 provided

In file included from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:3642:0,

from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core.hpp:59,

from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/core.hpp:48,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/include/pal_vision_segmentation/image_processing.h:41,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp:37:

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:528:1: note: candidate: cv::Mat::Mat(cv::Size, int, void*, size_t)

Mat::Mat(Size _sz, int _type, void* _data, size_t _step)

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:528:1: note: candidate expects 4 arguments, 2 provided

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:495:1: note: candidate: cv::Mat::Mat(int, int, int, void*, size_t)

Mat::Mat(int _rows, int _cols, int _type, void* _data, size_t _step)

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:495:1: note: candidate expects 5 arguments, 2 provided

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:476:1: note: candidate: cv::Mat::Mat(const cv::Mat&)

Mat::Mat(const Mat& m)

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:476:1: note: candidate expects 1 argument, 2 provided

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:467:1: note: candidate: cv::Mat::Mat(const std::vector<int>&, int, const Scalar&)

Mat::Mat(const std::vector<int>& _sz, int _type, const Scalar& _s)

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:467:1: note: candidate expects 3 arguments, 2 provided

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:450:1: note: candidate: cv::Mat::Mat(int, const int*, int, const Scalar&)

Mat::Mat(int _dims, const int* _sz, int _type, const Scalar& _s)

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:450:1: note: candidate expects 4 arguments, 2 provided

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:459:1: note: candidate: cv::Mat::Mat(const std::vector<int>&, int)

Mat::Mat(const std::vector<int>& _sz, int _type)

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:459:1: note: no known conversion for argument 1 from ‘IplImage* {aka _IplImage*}’ to ‘const std::vector<int>&’

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:442:1: note: candidate: cv::Mat::Mat(int, const int*, int)

Mat::Mat(int _dims, const int* _sz, int _type)

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:442:1: note: candidate expects 3 arguments, 2 provided

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:433:1: note: candidate: cv::Mat::Mat(cv::Size, int, const Scalar&)

Mat::Mat(Size _sz, int _type, const Scalar& _s)

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:433:1: note: candidate expects 3 arguments, 2 provided

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:416:1: note: candidate: cv::Mat::Mat(int, int, int, const Scalar&)

Mat::Mat(int _rows, int _cols, int _type, const Scalar& _s)

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:416:1: note: candidate expects 4 arguments, 2 provided

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:425:1: note: candidate: cv::Mat::Mat(cv::Size, int)

Mat::Mat(Size _sz, int _type)

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:425:1: note: no known conversion for argument 1 from ‘IplImage* {aka _IplImage*}’ to ‘cv::Size {aka cv::Size_<int>}’

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:408:1: note: candidate: cv::Mat::Mat(int, int, int)

Mat::Mat(int _rows, int _cols, int _type)

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:408:1: note: candidate expects 3 arguments, 2 provided

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:402:1: note: candidate: cv::Mat::Mat()

Mat::Mat()

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:402:1: note: candidate expects 0 arguments, 2 provided

[ 4%] Built target roscpp_generate_messages_py

[ 4%] Built target roscpp_generate_messages_cpp

[ 4%] Built target rosgraph_msgs_generate_messages_eus

[ 4%] Built target dynamic_reconfigure_generate_messages_lisp

[ 4%] Built target roscpp_generate_messages_lisp

[ 4%] Built target rosgraph_msgs_generate_messages_nodejs

[ 4%] Built target roscpp_generate_messages_nodejs

[ 4%] Built target rosgraph_msgs_generate_messages_lisp

[ 4%] Built target actionlib_msgs_generate_messages_py

[ 4%] Built target rosgraph_msgs_generate_messages_py

[ 4%] Built target actionlib_generate_messages_cpp

[ 4%] Built target actionlib_generate_messages_py

[ 4%] Built target tf2_msgs_generate_messages_cpp

[ 4%] Built target actionlib_generate_messages_eus

[ 4%] Built target actionlib_msgs_generate_messages_nodejs

[ 4%] Built target tf2_msgs_generate_messages_eus

[ 4%] Built target tf2_msgs_generate_messages_py

pal_vision_segmentation/CMakeFiles/pal_vision_segmentation.dir/build.make:62: recipe for target 'pal_vision_segmentation/CMakeFiles/pal_vision_segmentation.dir/src/image_processing.cpp.o' failed

make[2]: *** [pal_vision_segmentation/CMakeFiles/pal_vision_segmentation.dir/src/image_processing.cpp.o] Error 1

CMakeFiles/Makefile2:2866: recipe for target 'pal_vision_segmentation/CMakeFiles/pal_vision_segmentation.dir/all' failed

make[1]: *** [pal_vision_segmentation/CMakeFiles/pal_vision_segmentation.dir/all] Error 2

make[1]: *** Waiting for unfinished jobs....

[ 4%] Built target tf_generate_messages_cpp

[ 4%] Built target tf_generate_messages_eus

[ 4%] Built target tf_generate_messages_lisp

[ 4%] Built target tf2_msgs_generate_messages_nodejs

[ 4%] Built target actionlib_msgs_generate_messages_cpp

Building ARDroneTool/Lib

[ 5%] Performing install step for 'ardronelib'

make[3]: warning: jobserver unavailable: using -j1. Add '+' to parent make rule.

[ 5%] Completed 'ardronelib'

[ 6%] Built target ardronelib

Makefile:138: recipe for target 'all' failed

make: *** [all] Error 2

Invoking "make -j8 -l8" failed

rahul@Rahul:~/aruco_drone_ws$ rosdep install --from-paths /home/rahul/aruco_drone_ws/src --ignore-src

#All required rosdeps installed successfully

rahul@Rahul:~/aruco_drone_ws$ catkin_make

Base path: /home/rahul/aruco_drone_ws

Source space: /home/rahul/aruco_drone_ws/src

Build space: /home/rahul/aruco_drone_ws/build

Devel space: /home/rahul/aruco_drone_ws/devel

Install space: /home/rahul/aruco_drone_ws/install

Running command: "make cmake_check_build_system" in "/home/rahul/aruco_drone_ws/build"

Running command: "make -j8 -l8" in "/home/rahul/aruco_drone_ws/build"

[ 0%] Built target geometry_msgs_generate_messages_py

[ 0%] Built target geometry_msgs_generate_messages_cpp

[ 0%] Built target std_msgs_generate_messages_cpp

[ 0%] Built target std_msgs_generate_messages_eus

[ 0%] Built target std_msgs_generate_messages_py

[ 2%] Built target aruco

[ 2%] Built target geometry_msgs_generate_messages_eus

[ 2%] Built target geometry_msgs_generate_messages_nodejs

[ 2%] Built target geometry_msgs_generate_messages_lisp

[ 2%] Built target std_msgs_generate_messages_lisp

[ 2%] Built target std_msgs_generate_messages_nodejs

[ 2%] Built target _aruco_msgs_generate_messages_check_deps_MarkerArray

[ 2%] Built target _aruco_msgs_generate_messages_check_deps_Marker

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_ThrustCommand

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_RC

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_HeadingCommand

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_MotorCommand

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_ServoCommand

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_AttitudeCommand

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_YawrateCommand

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_Compass

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_HeightCommand

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_RawImu

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_VelocityZCommand

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_RawRC

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_VelocityXYCommand

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_Supply

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_MotorStatus

[ 2%] Built target _cvg_sim_msgs_generate_messages_check_deps_RuddersCommand

[ 3%] Built target pal_vision_segmentation_gencfg

[ 3%] Built target _cvg_sim_msgs_generate_messages_check_deps_Altitude

[ 3%] Building CXX object pal_vision_segmentation/CMakeFiles/pal_vision_segmentation.dir/src/image_processing.cpp.o

[ 3%] Built target _cvg_sim_msgs_generate_messages_check_deps_RawMagnetic

[ 3%] Built target _cvg_sim_msgs_generate_messages_check_deps_MotorPWM

[ 3%] Built target _cvg_sim_msgs_generate_messages_check_deps_Altimeter

[ 3%] Built target _cvg_sim_msgs_generate_messages_check_deps_ControllerState

[ 3%] Built target _cvg_sim_msgs_generate_messages_check_deps_PositionXYCommand

[ 3%] Performing update step for 'ardronelib'

[ 3%] Performing configure step for 'ardronelib'

No configure

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_time

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_watchdog

[ 3%] Performing build step for 'ardronelib'

make[3]: warning: jobserver unavailable: using -j1. Add '+' to parent make rule.

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_altitude

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_adc_data_frame

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_pressure_raw

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_euler_angles

Libs already extracted

Building target static

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_references

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_demo

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_zimmu_3000

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_video_stream

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_trims

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_RecordEnable

Architecture x86_64 is already built

Creating universal static lib file from architectures x86_64

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_matrix33

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_magneto

Build done.

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_CamSelect

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_wifi

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_FlightAnim

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_gyros_offsets

Building ARDroneTool/Lib

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_hdvideo_stream

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_kalman_pressure

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_vector21

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_trackers_send

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_pwm

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_phys_measures

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_raw_measures

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_vector31

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_LedAnim

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_games

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_rc_references

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_vision_of

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_vision_raw

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_vision

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_Navdata

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_vision_perf

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_wind_speed

[ 3%] Built target aruco_ros_gencfg

[ 3%] Built target _ardrone_autonomy_generate_messages_check_deps_navdata_vision_detect

[ 3%] Built target visualization_msgs_generate_messages_py

[ 3%] Built target dynamic_reconfigure_generate_messages_nodejs

[ 3%] Built target dynamic_reconfigure_generate_messages_eus

[ 4%] Built target aruco_ros_utils

[ 4%] Built target sensor_msgs_generate_messages_cpp

[ 4%] Built target sensor_msgs_generate_messages_lisp

[ 4%] Built target roscpp_generate_messages_eus

[ 4%] Built target dynamic_reconfigure_generate_messages_cpp

[ 4%] Built target sensor_msgs_generate_messages_py

[ 4%] Built target rosgraph_msgs_generate_messages_cpp

[ 4%] Built target sensor_msgs_generate_messages_eus

[ 4%] Built target dynamic_reconfigure_gencfg

[ 4%] Built target sensor_msgs_generate_messages_nodejs

[ 4%] Built target dynamic_reconfigure_generate_messages_py

[ 4%] Built target roscpp_generate_messages_py

[ 4%] Built target roscpp_generate_messages_cpp

[ 4%] Built target rosgraph_msgs_generate_messages_eus

[ 4%] Built target dynamic_reconfigure_generate_messages_lisp

/home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp: In function ‘void pal_vision_util::dctNormalization(const cv::Mat&, cv::Mat&, int, const pal_vision_util::ImageRoi&)’:

/home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp:474:53: error: no matching function for call to ‘cv::Mat::Mat(IplImage*, bool)’

normalizedImg = cv::Mat(output.getIplImg(), true);

^

In file included from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core.hpp:59:0,

from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/core.hpp:48,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/include/pal_vision_segmentation/image_processing.h:41,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp:37:

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:1029:14: note: candidate: cv::Mat::Mat(const cv::cuda::GpuMat&)

explicit Mat(const cuda::GpuMat& m);

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:1029:14: note: candidate expects 1 argument, 2 provided

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:1026:37: note: candidate: template<class _Tp> cv::Mat::Mat(const cv::MatCommaInitializer_<_Tp>&)

template<typename _Tp> explicit Mat(const MatCommaInitializer_<_Tp>& commaInitializer);

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:1026:37: note: template argument deduction/substitution failed:

/home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp:474:53: note: mismatched types ‘const cv::MatCommaInitializer_<_Tp>’ and ‘IplImage* {aka _IplImage*}’

normalizedImg = cv::Mat(output.getIplImg(), true);

^

In file included from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core.hpp:59:0,

from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/core.hpp:48,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/include/pal_vision_segmentation/image_processing.h:41,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp:37:

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:1022:37: note: candidate: template<class _Tp> cv::Mat::Mat(const cv::Point3_<_Tp>&, bool)

template explicit Mat(const Point3<Tp>& pt, bool copyData=true);

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:1022:37: note: template argument deduction/substitution failed:

/home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp:474:53: note: mismatched types ‘const cv::Point3_<_Tp>’ and ‘IplImage* {aka _IplImage*}’

normalizedImg = cv::Mat(output.getIplImg(), true);

^

In file included from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core.hpp:59:0,

from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/core.hpp:48,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/include/pal_vision_segmentation/image_processing.h:41,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp:37:

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:1018:37: note: candidate: template<class _Tp> cv::Mat::Mat(const cv::Point_<_Tp>&, bool)

template<typename _Tp> explicit Mat(const Point_<_Tp>& pt, bool copyData=true);

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:1018:37: note: template argument deduction/substitution failed:

/home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp:474:53: note: mismatched types ‘const cv::Point_<_Tp>’ and ‘IplImage* {aka _IplImage*}’

normalizedImg = cv::Mat(output.getIplImg(), true);

^

In file included from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core.hpp:59:0,

from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/core.hpp:48,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/include/pal_vision_segmentation/image_processing.h:41,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp:37:

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:1014:51: note: candidate: template<class _Tp, int m, int n> cv::Mat::Mat(const cv::Matx<_Tp, m, n>&, bool)

template<typename _Tp, int m, int n> explicit Mat(const Matx<_Tp, m, n>& mtx, bool copyData=true);

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:1014:51: note: template argument deduction/substitution failed:

/home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp:474:53: note: mismatched types ‘const cv::Matx<_Tp, m, n>’ and ‘IplImage* {aka _IplImage*}’

normalizedImg = cv::Mat(output.getIplImg(), true);

^

In file included from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core.hpp:59:0,

from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/core.hpp:48,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/include/pal_vision_segmentation/image_processing.h:41,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp:37:

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:1010:44: note: candidate: template<class _Tp, int n> cv::Mat::Mat(const cv::Vec<_Tp, m>&, bool)

template<typename _Tp, int n> explicit Mat(const Vec<_Tp, n>& vec, bool copyData=true);

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:1010:44: note: template argument deduction/substitution failed:

/home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp:474:53: note: mismatched types ‘const cv::Vec<_Tp, m>’ and ‘IplImage* {aka _IplImage*}’

normalizedImg = cv::Mat(output.getIplImg(), true);

^

In file included from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core.hpp:59:0,

from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/core.hpp:48,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/include/pal_vision_segmentation/image_processing.h:41,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp:37:

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:993:37: note: candidate: template<class _Tp> cv::Mat::Mat(const std::vector<_Tp>&, bool)

template<typename _Tp> explicit Mat(const std::vector<_Tp>& vec, bool copyData=false);

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:993:37: note: template argument deduction/substitution failed:

/home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp:474:53: note: mismatched types ‘const std::vector<_Tp>’ and ‘IplImage* {aka _IplImage*}’

normalizedImg = cv::Mat(output.getIplImg(), true);

^

In file included from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core.hpp:59:0,

from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/core.hpp:48,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/include/pal_vision_segmentation/image_processing.h:41,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp:37:

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:975:5: note: candidate: cv::Mat::Mat(const cv::Mat&, const std::vector<cv::Range>&)

Mat(const Mat& m, const std::vector<Range>& ranges);

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:975:5: note: no known conversion for argument 1 from ‘IplImage* {aka _IplImage*}’ to ‘const cv::Mat&’

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:965:5: note: candidate: cv::Mat::Mat(const cv::Mat&, const cv::Range*)

Mat(const Mat& m, const Range* ranges);

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:965:5: note: no known conversion for argument 1 from ‘IplImage* {aka _IplImage*}’ to ‘const cv::Mat&’

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:955:5: note: candidate: cv::Mat::Mat(const cv::Mat&, const Rect&)

Mat(const Mat& m, const Rect& roi);

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:955:5: note: no known conversion for argument 1 from ‘IplImage* {aka _IplImage*}’ to ‘const cv::Mat&’

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:945:5: note: candidate: cv::Mat::Mat(const cv::Mat&, const cv::Range&, const cv::Range&)

Mat(const Mat& m, const Range& rowRange, const Range& colRange=Range::all());

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:945:5: note: no known conversion for argument 1 from ‘IplImage* {aka _IplImage*}’ to ‘const cv::Mat&’

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:933:5: note: candidate: cv::Mat::Mat(const std::vector<int>&, int, void*, const size_t*)

Mat(const std::vector<int>& sizes, int type, void* data, const size_t* steps=0);

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:933:5: note: candidate expects 4 arguments, 2 provided

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:919:5: note: candidate: cv::Mat::Mat(int, const int*, int, void*, const size_t*)

Mat(int ndims, const int* sizes, int type, void* data, const size_t* steps=0);

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:919:5: note: candidate expects 5 arguments, 2 provided

In file included from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.hpp:3642:0,

from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core.hpp:59,

from /opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/core.hpp:48,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/include/pal_vision_segmentation/image_processing.h:41,

from /home/rahul/aruco_drone_ws/src/pal_vision_segmentation/src/image_processing.cpp:37:

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:528:1: note: candidate: cv::Mat::Mat(cv::Size, int, void*, size_t)

Mat::Mat(Size _sz, int _type, void* _data, size_t _step)

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:528:1: note: candidate expects 4 arguments, 2 provided

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:495:1: note: candidate: cv::Mat::Mat(int, int, int, void*, size_t)

Mat::Mat(int _rows, int _cols, int _type, void* _data, size_t _step)

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:495:1: note: candidate expects 5 arguments, 2 provided

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:476:1: note: candidate: cv::Mat::Mat(const cv::Mat&)

Mat::Mat(const Mat& m)

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:476:1: note: candidate expects 1 argument, 2 provided

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:467:1: note: candidate: cv::Mat::Mat(const std::vector<int>&, int, const Scalar&)

Mat::Mat(const std::vector<int>& _sz, int _type, const Scalar& _s)

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:467:1: note: candidate expects 3 arguments, 2 provided

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:450:1: note: candidate: cv::Mat::Mat(int, const int*, int, const Scalar&)

Mat::Mat(int _dims, const int* _sz, int _type, const Scalar& _s)

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:450:1: note: candidate expects 4 arguments, 2 provided

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:459:1: note: candidate: cv::Mat::Mat(const std::vector<int>&, int)

Mat::Mat(const std::vector<int>& _sz, int _type)

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:459:1: note: no known conversion for argument 1 from ‘IplImage* {aka _IplImage*}’ to ‘const std::vector<int>&’

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:442:1: note: candidate: cv::Mat::Mat(int, const int*, int)

Mat::Mat(int _dims, const int* _sz, int _type)

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:442:1: note: candidate expects 3 arguments, 2 provided

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:433:1: note: candidate: cv::Mat::Mat(cv::Size, int, const Scalar&)

Mat::Mat(Size _sz, int _type, const Scalar& _s)

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:433:1: note: candidate expects 3 arguments, 2 provided

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:416:1: note: candidate: cv::Mat::Mat(int, int, int, const Scalar&)

Mat::Mat(int _rows, int _cols, int _type, const Scalar& _s)

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:416:1: note: candidate expects 4 arguments, 2 provided

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:425:1: note: candidate: cv::Mat::Mat(cv::Size, int)

Mat::Mat(Size _sz, int _type)

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:425:1: note: no known conversion for argument 1 from ‘IplImage* {aka _IplImage*}’ to ‘cv::Size {aka cv::Size_<int>}’

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:408:1: note: candidate: cv::Mat::Mat(int, int, int)

Mat::Mat(int _rows, int _cols, int _type)

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:408:1: note: candidate expects 3 arguments, 2 provided

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:402:1: note: candidate: cv::Mat::Mat()

Mat::Mat()

^

/opt/ros/kinetic/include/opencv-3.3.1-dev/opencv2/core/mat.inl.hpp:402:1: note: candidate expects 0 arguments, 2 provided

[ 4%] Built target roscpp_generate_messages_lisp

[ 4%] Built target roscpp_generate_messages_nodejs

[ 4%] Built target rosgraph_msgs_generate_messages_nodejs

[ 4%] Built target rosgraph_msgs_generate_messages_py

[ 4%] Built target rosgraph_msgs_generate_messages_lisp

[ 4%] Built target actionlib_msgs_generate_messages_py

[ 4%] Built target actionlib_generate_messages_cpp

[ 4%] Built target tf2_msgs_generate_messages_cpp

[ 4%] Built target actionlib_generate_messages_py

[ 4%] Built target tf2_msgs_generate_messages_eus

[ 4%] Built target actionlib_generate_messages_eus

[ 4%] Built target actionlib_msgs_generate_messages_nodejs

[ 4%] Built target tf2_msgs_generate_messages_py

[ 4%] Built target tf_generate_messages_eus

[ 4%] Built target tf_generate_messages_cpp

[ 4%] Built target tf_generate_messages_lisp

[ 4%] Built target tf2_msgs_generate_messages_nodejs

[ 4%] Built target actionlib_msgs_generate_messages_cpp

pal_vision_segmentation/CMakeFiles/pal_vision_segmentation.dir/build.make:62: recipe for target 'pal_vision_segmentation/CMakeFiles/pal_vision_segmentation.dir/src/image_processing.cpp.o' failed

make[2]: *** [pal_vision_segmentation/CMakeFiles/pal_vision_segmentation.dir/src/image_processing.cpp.o] Error 1

CMakeFiles/Makefile2:2866: recipe for target 'pal_vision_segmentation/CMakeFiles/pal_vision_segmentation.dir/all' failed

make[1]: *** [pal_vision_segmentation/CMakeFiles/pal_vision_segmentation.dir/all] Error 2

make[1]: *** Waiting for unfinished jobs....

[ 4%] Built target actionlib_generate_messages_nodejs

[ 4%] Built target actionlib_generate_messages_lisp

[ 4%] Built target tf_generate_messages_py

[ 4%] Built target actionlib_msgs_generate_messages_eus

[ 4%] Built target tf_generate_messages_nodejs

[ 4%] Built target actionlib_msgs_generate_messages_lisp

Building ARDroneTool/Lib

[ 5%] Performing install step for 'ardronelib'

make[3]: warning: jobserver unavailable: using -j1. Add '+' to parent make rule.

[ 5%] Completed 'ardronelib'

[ 6%] Built target ardronelib

Makefile:138: recipe for target 'all' failed

make: *** [all] Error 2

Invoking "make -j8 -l8" failed

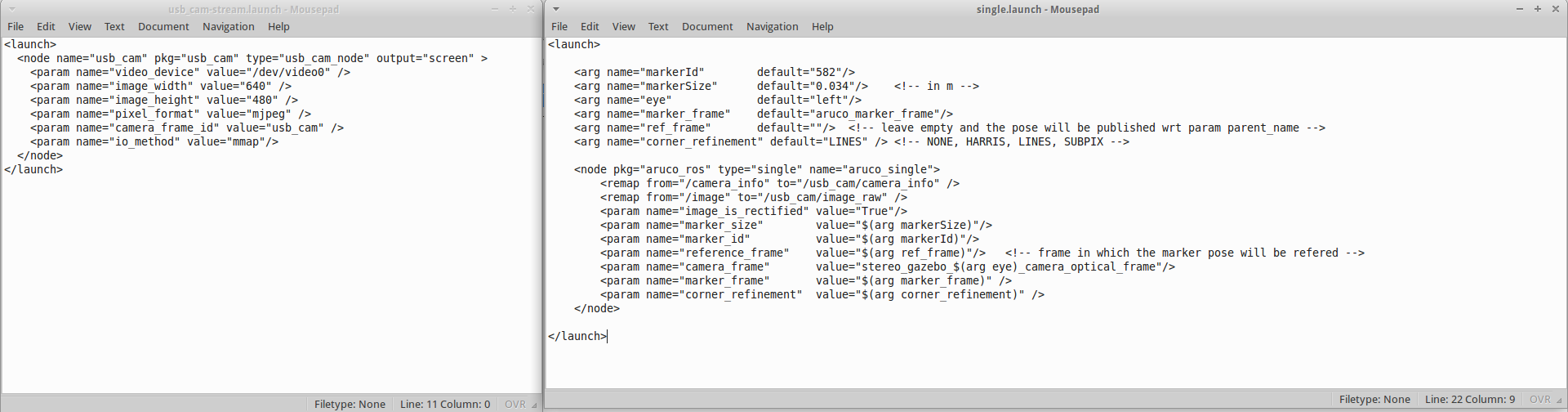

I have looked at the available solutions for this issue but still cannot figure out the problem. I have also run the rosdep command to check the dependencies, and it reports that all of them are installed correctly. I am using the Kinetic version of ROS. Please help. Thanks.